Vishwanath Saragadam

Implicit Neural Representation of Waveform Measurements in Power Systems Waveform Data Analysis

May 14, 2025

Abstract:There is currently a paradigm shift in several power system monitoring applications, such as incipient fault detection and monitoring inverter-based resources, to transition from traditional phasor analytics to more informative waveform analytics. This paper contributes to this transition by developing a novel approach to modeling voltage and current waveform measurements using implicit neural representations (INRs). INRs are continuous function approximators that have recently been used in vision and signal processing. The proposed INR models are specifically designed to meet the requirements of waveform analytics in power systems, such as by using sinusoidal activation functions that capture the periodic nature of voltage and current waveforms. We also propose extended models that can efficiently represent correlated waveforms, such as three-phase waveforms and synchro-waveforms. Real-world case studies demonstrate the effectiveness of the proposed INR models in terms of accuracy (<1-2% MSE) and model size (4-6x compression). We also investigate the application of INR models in oscillation monitoring, for single mode oscillations and dual mode modulated oscillations.
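To make the idea of a sine-activated INR concrete, here is a minimal sketch of the forward pass only. It is not the paper's model: the function name `sine_inr`, the one-hidden-layer shape, and the hand-set (untrained) parameters are all assumptions chosen for illustration. It shows why sinusoidal units suit periodic waveforms: two hidden units are enough to represent a 60 Hz fundamental plus a third harmonic, and the result can be queried at any time instant, not only at sample points.

```python
import math

def sine_inr(t, hidden, out_weights, out_bias=0.0):
    """Evaluate a one-hidden-layer INR with sine activations at time t (seconds).

    hidden: list of (w, b) pairs; each hidden unit computes sin(w * t + b).
    out_weights: one linear output weight per hidden unit.
    """
    features = [math.sin(w * t + b) for (w, b) in hidden]
    return out_bias + sum(a * f for a, f in zip(out_weights, features))

# Hand-set (not trained) parameters: 60 Hz fundamental plus a small third harmonic.
F0 = 60.0
hidden = [(2 * math.pi * F0, 0.0), (2 * math.pi * 3 * F0, 0.5)]
out_weights = [1.0, 0.1]

def reference_waveform(t):
    """Analytical waveform that the hand-set parameters are meant to reproduce."""
    return (math.sin(2 * math.pi * F0 * t)
            + 0.1 * math.sin(2 * math.pi * 3 * F0 * t + 0.5))

# The model is a continuous function of t, so it can be queried between samples.
t_query = 0.00123
value = sine_inr(t_query, hidden, out_weights)
```

In this toy case six numbers (two weights, two biases, two output weights) stand in for an arbitrarily long sampled record, which is the intuition behind the model-size savings the abstract reports; a real model would learn its parameters from measurements.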

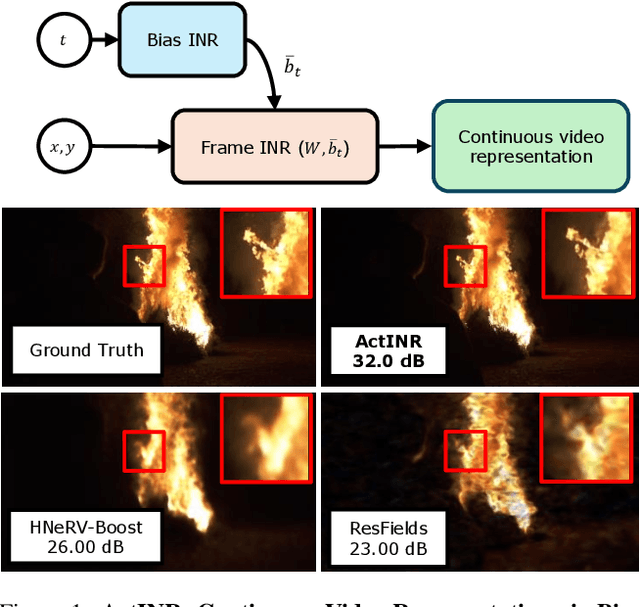

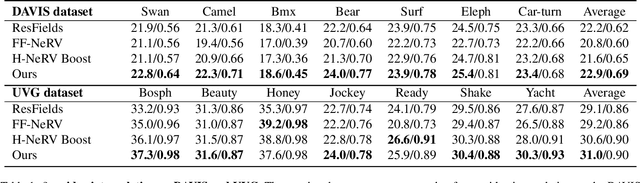

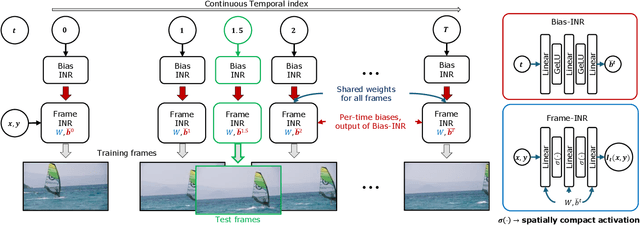

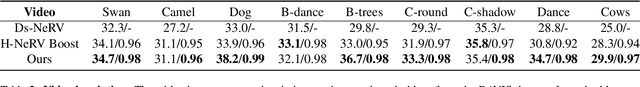

Bias for Action: Video Implicit Neural Representations with Bias Modulation

Jan 16, 2025

Abstract:We propose a new continuous video modeling framework based on implicit neural representations (INRs) called ActINR. At the core of our approach is the observation that INRs can be considered as a learnable dictionary, with the shapes of the basis functions governed by the weights of the INR, and their locations governed by the biases. Given compact non-linear activation functions, we hypothesize that an INR's biases are suitable to capture motion across images, and facilitate compact representations for video sequences. Using these observations, we design ActINR to share INR weights across frames of a video sequence, while using unique biases for each frame. We further model the biases as the output of a separate INR conditioned on time index to promote smoothness. By training the video INR and this bias INR together, we demonstrate unique capabilities, including $10\times$ video slow motion, $4\times$ spatial super resolution along with $2\times$ slow motion, denoising, and video inpainting. ActINR performs remarkably well across numerous video processing tasks (often achieving more than 6dB improvement), setting a new standard for continuous modeling of videos.
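The dictionary view described above can be illustrated with a small sketch. This is not the ActINR implementation: the Gaussian activation, the single hidden unit, and all parameter values are assumptions picked to keep the example readable. With a localized activation, a hidden unit computes a bump centered where `w * x + b = 0`, so keeping the weight shared and changing only the bias per frame moves the bump without changing its shape.

```python
import math

def gaussian(u):
    """Localized activation; an assumed choice for illustration."""
    return math.exp(-u * u)

def frame_signal(x, weights, frame_biases, out_weights):
    """One-hidden-layer INR for a single frame: weights shared, biases per frame."""
    return sum(a * gaussian(w * x + b)
               for w, b, a in zip(weights, frame_biases, out_weights))

# Shared across all frames (hypothetical values).
weights = [4.0]
out_weights = [1.0]

# Per-frame biases: the bump is centered where w * x + b = 0, i.e. x = -b / w.
biases_per_frame = {0: [-1.0], 1: [-2.0]}

xs = [i / 100 for i in range(101)]  # sample grid on [0, 1]

def peak_location(frame):
    """Grid location of the maximum of the given frame's signal."""
    values = [frame_signal(x, weights, biases_per_frame[frame], out_weights)
              for x in xs]
    return xs[values.index(max(values))]

peak0 = peak_location(0)  # bump centered at x = 0.25
peak1 = peak_location(1)  # same bump, moved to x = 0.5
```

The abstract additionally describes generating the per-frame biases from a second INR conditioned on the time index; in this sketch that would replace the `biases_per_frame` lookup with a smooth function of the frame index.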

Implicit Neural Representations and the Algebra of Complex Wavelets

Oct 01, 2023

Abstract:Implicit neural representations (INRs) have arisen as useful methods for representing signals on Euclidean domains. By parameterizing an image as a multilayer perceptron (MLP) on Euclidean space, INRs effectively represent signals in a way that couples spatial and spectral features of the signal that is not obvious in the usual discrete representation, paving the way for continuous signal processing and machine learning approaches that were not previously possible. Although INRs using sinusoidal activation functions have been studied in terms of Fourier theory, recent works have shown the advantage of using wavelets instead of sinusoids as activation functions, due to their ability to simultaneously localize in both frequency and space. In this work, we approach such INRs and demonstrate how they resolve high-frequency features of signals from coarse approximations done in the first layer of the MLP. This leads to multiple prescriptions for the design of INR architectures, including the use of complex wavelets, decoupling of low and band-pass approximations, and initialization schemes based on the singularities of the desired signal.

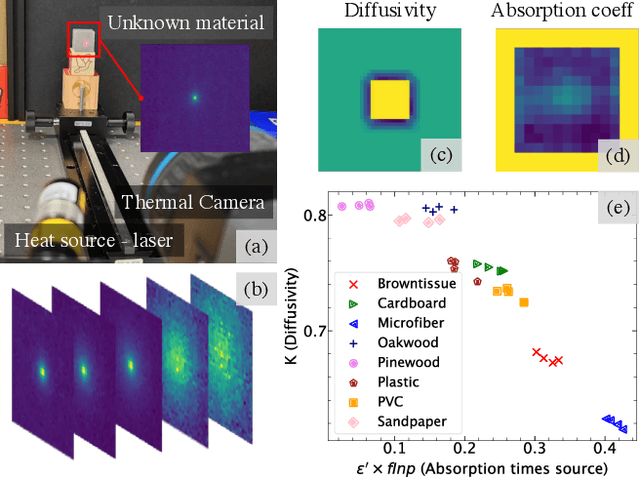

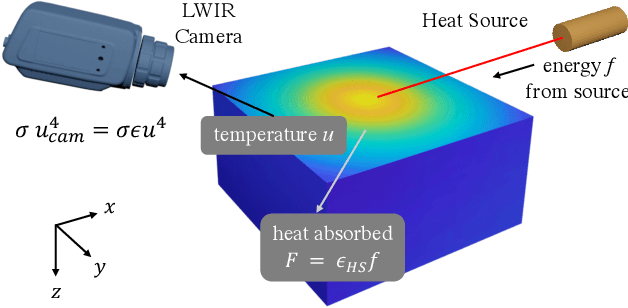

Thermal Spread Functions (TSF): Physics-guided Material Classification

Apr 03, 2023

Abstract:Robust and non-destructive material classification is a challenging but crucial first step in numerous vision applications. We propose a physics-guided material classification framework that relies on thermal properties of the object. Our key observation is that the rate of heating and cooling of an object depends on the unique intrinsic properties of the material, namely the emissivity and diffusivity. We leverage this observation by gently heating the objects in the scene with a low-power laser for a fixed duration and then turning it off, while a thermal camera captures measurements during the heating and cooling process. We then take this spatial and temporal "thermal spread function" (TSF) to solve an inverse heat equation using the finite-differences approach, resulting in a spatially varying estimate of diffusivity and emissivity. These tuples are then used to train a classifier that produces a fine-grained material label at each spatial pixel. Our approach is extremely simple, requiring only a small light source (low-power laser) and a thermal camera, and produces robust classification results with 86% accuracy over 16 classes.
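As a rough illustration of the finite-difference idea, the sketch below simulates one explicit time step of the 1D heat equation $u_t = \alpha u_{xx}$ and then recovers $\alpha$ from the two frames by least squares. It is a simplified stand-in, not the paper's method: it is one-dimensional, assumes a single constant diffusivity, ignores emissivity entirely, and all function names and values are hypothetical.

```python
def laplacian_1d(u, dx):
    """Second-order central difference of u at the interior points."""
    return [(u[i - 1] - 2 * u[i] + u[i + 1]) / dx ** 2
            for i in range(1, len(u) - 1)]

def heat_step(u, alpha, dt, dx):
    """One explicit Euler step of u_t = alpha * u_xx with fixed boundary values."""
    lap = laplacian_1d(u, dx)
    interior = [u[i + 1] + alpha * dt * lap[i] for i in range(len(lap))]
    return [u[0]] + interior + [u[-1]]

def estimate_diffusivity(u_prev, u_next, dt, dx):
    """Least-squares fit of alpha in (u_next - u_prev) / dt = alpha * laplacian(u_prev)."""
    lap = laplacian_1d(u_prev, dx)
    dudt = [(u_next[i + 1] - u_prev[i + 1]) / dt for i in range(len(lap))]
    numerator = sum(d * l for d, l in zip(dudt, lap))
    denominator = sum(l * l for l in lap)
    return numerator / denominator

# Synthetic "thermal frames": a single hot spot that diffuses for one step.
u0 = [0.0] * 21
u0[10] = 1.0
u1 = heat_step(u0, alpha=1.5, dt=0.1, dx=1.0)  # alpha * dt / dx**2 = 0.15, stable

alpha_hat = estimate_diffusivity(u0, u1, dt=0.1, dx=1.0)
```

Because the estimate uses the same discretization as the simulation, it recovers the simulated diffusivity up to floating-point error; with real thermal-camera frames, noise and the unmodeled emissivity term would make the fit only approximate.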

WIRE: Wavelet Implicit Neural Representations

Jan 05, 2023

Abstract:Implicit neural representations (INRs) have recently advanced numerous vision-related areas. INR performance depends strongly on the choice of the nonlinear activation function employed in its multilayer perceptron (MLP) network. A wide range of nonlinearities have been explored, but, unfortunately, current INRs designed to have high accuracy also suffer from poor robustness (to signal noise, parameter variation, etc.). Inspired by harmonic analysis, we develop a new, highly accurate and robust INR that does not exhibit this tradeoff. Wavelet Implicit neural REpresentation (WIRE) uses a continuous complex Gabor wavelet activation function that is well-known to be optimally concentrated in space-frequency and to have excellent biases for representing images. A wide range of experiments (image denoising, image inpainting, super-resolution, computed tomography reconstruction, image overfitting, and novel view synthesis with neural radiance fields) demonstrate that WIRE defines the new state of the art in INR accuracy, training time, and robustness.
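For readers unfamiliar with the activation, the sketch below evaluates a complex Gabor wavelet of the form $\sigma(x) = e^{j\omega_0 x}\, e^{-|s_0 x|^2}$, a complex sinusoid under a Gaussian envelope. The abstract does not spell out the formula, so this form, the function name, and the default values of `omega0` and `s0` are assumptions for illustration.

```python
import cmath
import math

def gabor_activation(x, omega0=10.0, s0=10.0):
    """Complex Gabor wavelet: a complex sinusoid under a Gaussian envelope.

    omega0 sets the oscillation frequency and s0 the envelope width;
    both defaults are illustrative values, not recommended settings.
    """
    return cmath.exp(1j * omega0 * x) * math.exp(-abs(s0 * x) ** 2)

center = gabor_activation(0.0)   # envelope peak, value 1 + 0j
near = gabor_activation(0.05)    # oscillating, slightly attenuated
far = gabor_activation(0.5)      # envelope has decayed to almost zero
```

The magnitude depends only on the Gaussian envelope, so the response is localized around zero pre-activation, while the phase carries the frequency content; this is the joint space-frequency concentration the abstract refers to.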

Foveated Thermal Computational Imaging in the Wild Using All-Silicon Meta-Optics

Dec 13, 2022

Abstract:Foveated imaging provides a better tradeoff between situational awareness (field of view) and resolution and is critical in long-wavelength infrared regimes because of the size, weight, power, and cost of thermal sensors. We demonstrate computational foveated imaging by exploiting the ability of a meta-optical frontend to discriminate between different polarization states and a computational backend to reconstruct the captured image/video. The frontend is a three-element optic: the first element, which we call the "foveal" element, is a metalens that focuses s-polarized light at a distance of $f_1$ without affecting the p-polarized light; the second element, which we call the "perifoveal" element, is another metalens that focuses p-polarized light at a distance of $f_2$ without affecting the s-polarized light. The third element is a freely rotating polarizer that dynamically changes the mixing ratios between the two polarization states. Both the foveal element (focal length = 150mm; diameter = 75mm) and the perifoveal element (focal length = 25mm; diameter = 25mm) were fabricated as polarization-sensitive, all-silicon metasurfaces, resulting in a large-aperture, 1:6 foveal expansion, thermal imaging capability. A computational backend then utilizes a deep image prior to separate the resultant multiplexed image or video into a foveated image consisting of a high-resolution center and a lower-resolution large field of view context. We build a first-of-its-kind prototype system and demonstrate 12 frames per second real-time, thermal, foveated image and video capture in the wild.

TITAN: Bringing The Deep Image Prior to Implicit Representations

Nov 01, 2022

Abstract:We study the interpolation capabilities of implicit neural representations (INRs) of images. In principle, INRs promise a number of advantages, such as continuous derivatives and arbitrary sampling, being freed from the restrictions of a raster grid. However, empirically, INRs have been observed to poorly interpolate between the pixels of the fit image; in other words, they do not inherently possess a suitable prior for natural images. In this paper, we propose to address and improve INRs' interpolation capabilities by explicitly integrating image prior information into the INR architecture via deep decoder, a specific implementation of the deep image prior (DIP). Our method, which we call TITAN, leverages a residual connection from the input which enables integrating the principles of the grid-based DIP into the grid-free INR. Through super-resolution and computed tomography experiments, we demonstrate that our method significantly improves upon classic INRs, thanks to the induced natural image bias. We also find that by constraining the weights to be sparse, image quality and sharpness are enhanced, increasing the Lipschitz constant.

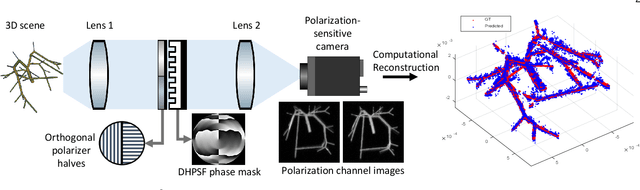

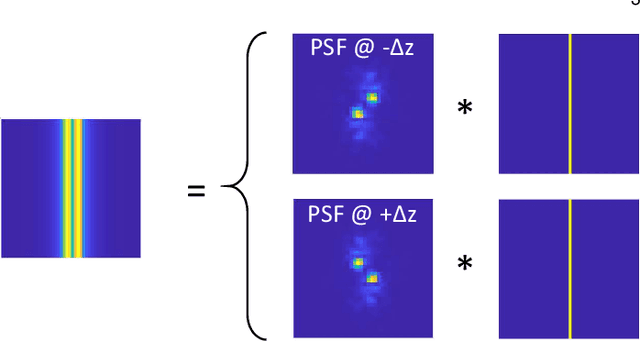

PS$^2$F: Polarized Spiral Point Spread Function for Single-Shot 3D Sensing

Jul 03, 2022

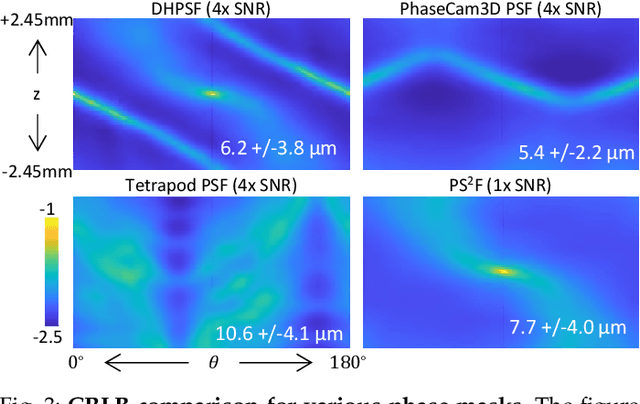

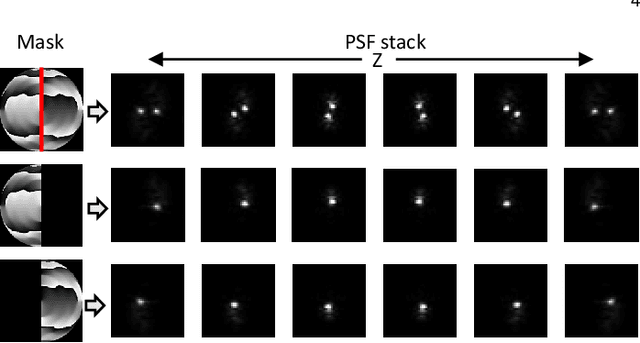

Abstract:We propose a compact snapshot monocular depth estimation technique that relies on an engineered point spread function (PSF). Traditional approaches used in microscopic super-resolution imaging, such as the Double-Helix PSF (DHPSF), are ill-suited for scenes that are more complex than a sparse set of point light sources. We show, using the Cramér-Rao lower bound (CRLB), that separating the two lobes of the DHPSF and thereby capturing two separate images leads to a dramatic increase in depth accuracy. A unique property of the phase mask used for generating the DHPSF is that a separation of the phase mask into two halves leads to a spatial separation of the two lobes. We leverage this property to build a compact polarization-based optical setup, where we place two orthogonal linear polarizers on each half of the DHPSF phase mask and then capture the resulting image with a polarization sensitive camera. Results from simulations and a lab prototype demonstrate that our technique achieves up to $50\%$ lower depth error compared to state-of-the-art designs including the DHPSF and the Tetrapod PSF, with little to no loss in spatial resolution.

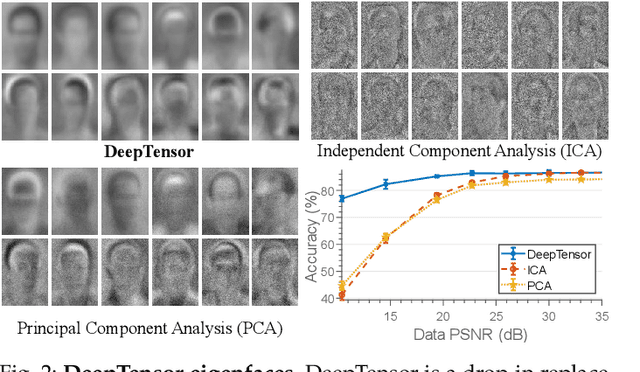

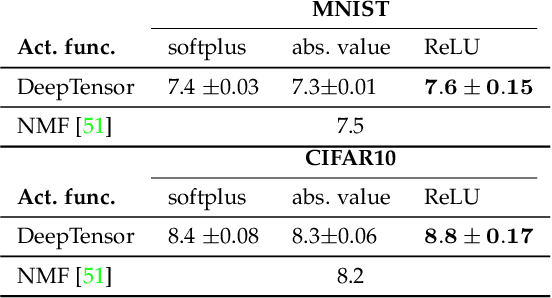

DeepTensor: Low-Rank Tensor Decomposition with Deep Network Priors

Apr 07, 2022

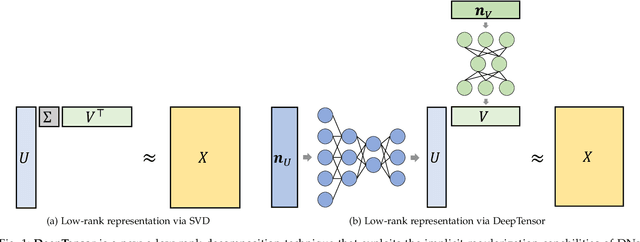

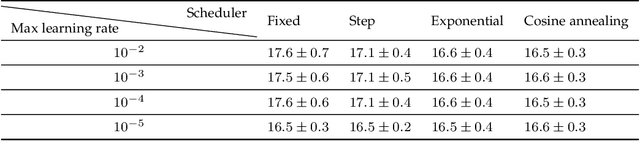

Abstract:DeepTensor is a computationally efficient framework for low-rank decomposition of matrices and tensors using deep generative networks. We decompose a tensor as the product of low-rank tensor factors (e.g., a matrix as the outer product of two vectors), where each low-rank tensor is generated by a deep network (DN) that is trained in a self-supervised manner to minimize the mean-squared approximation error. Our key observation is that the implicit regularization inherent in DNs enables them to capture nonlinear signal structures (e.g., manifolds) that are out of the reach of classical linear methods like the singular value decomposition (SVD) and principal component analysis (PCA). Furthermore, in contrast to the SVD and PCA, whose performance deteriorates when the tensor's entries deviate from additive white Gaussian noise, we demonstrate that the performance of DeepTensor is robust to a wide range of distributions. We validate that DeepTensor is a robust and computationally efficient drop-in replacement for the SVD, PCA, nonnegative matrix factorization (NMF), and similar decompositions by exploring a range of real-world applications, including hyperspectral image denoising, 3D MRI tomography, and image classification. In particular, DeepTensor offers a 6dB signal-to-noise ratio improvement over standard denoising methods for signals corrupted by Poisson noise and learns to decompose 3D tensors 60 times faster than a single DN equipped with 3D convolutions.

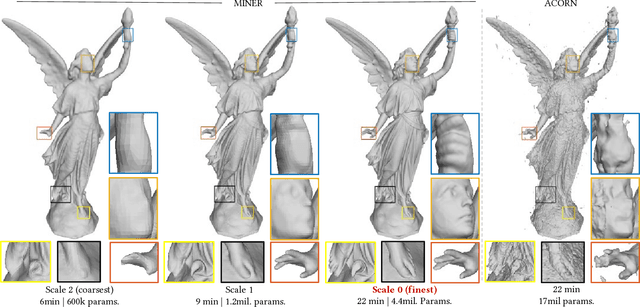

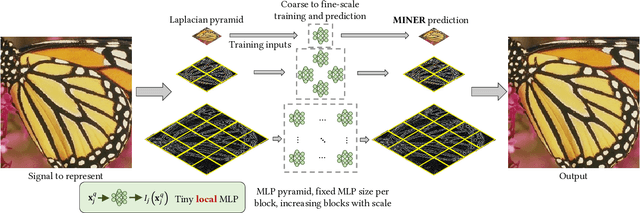

MINER: Multiscale Implicit Neural Representations

Feb 07, 2022

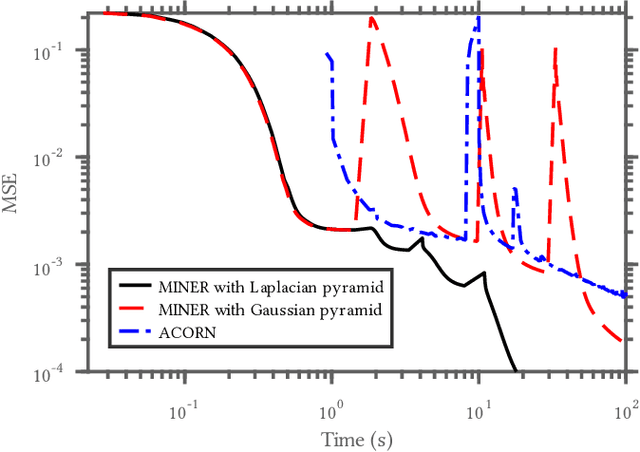

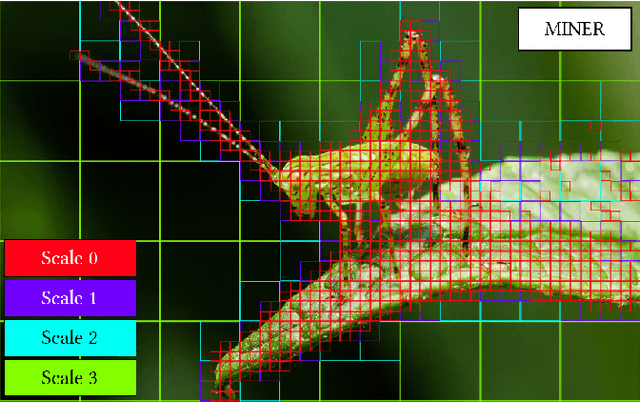

Abstract:We introduce a new neural signal representation designed for the efficient high-resolution representation of large-scale signals. The key innovation in our multiscale implicit neural representation (MINER) is an internal representation via a Laplacian pyramid, which provides a sparse multiscale representation of the signal that captures orthogonal parts of the signal across scales. We leverage the advantages of the Laplacian pyramid by representing small disjoint patches of the pyramid at each scale with a tiny MLP. This enables the capacity of the network to adaptively increase from coarse to fine scales, and only represent parts of the signal with strong signal energy. The parameters of each MLP are optimized from coarse to fine scale, which results in faster approximations at coarser scales and, ultimately, an extremely fast training process. We apply MINER to a range of large-scale signal representation tasks, including gigapixel images and very large point clouds, and demonstrate that it requires fewer than 25% of the parameters, 33% of the memory footprint, and 10% of the computation time of competing techniques such as ACORN to reach the same representation error.
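The Laplacian pyramid at the heart of this description can be sketched in a few lines for a 1D signal. This is an illustration under simplifying assumptions, not MINER itself: it uses pairwise averaging and sample repetition in place of Gaussian filtering, contains no MLPs, and all function names are hypothetical. It shows the two properties the abstract relies on: the residuals are sparse wherever the signal is smooth, and the coarse level plus residuals reconstruct the signal exactly.

```python
def downsample(x):
    """Halve the resolution by averaging adjacent pairs (box filter)."""
    return [(x[2 * i] + x[2 * i + 1]) / 2 for i in range(len(x) // 2)]

def upsample(x):
    """Double the resolution by repeating each sample."""
    return [v for v in x for _ in range(2)]

def build_pyramid(x, levels):
    """Return the coarsest signal and the residuals, ordered fine to coarse."""
    residuals = []
    current = x
    for _ in range(levels):
        coarse = downsample(current)
        residuals.append([a - b for a, b in zip(current, upsample(coarse))])
        current = coarse
    return current, residuals

def reconstruct(coarse, residuals):
    """Invert build_pyramid by adding residuals back from coarse to fine."""
    current = coarse
    for res in reversed(residuals):
        current = [a + b for a, b in zip(upsample(current), res)]
    return current

# Piecewise-constant signal with a single jump.
signal = [1.0] * 7 + [5.0] * 9
coarse, residuals = build_pyramid(signal, levels=2)
nonzero_per_level = [sum(1 for r in res if r != 0.0) for res in residuals]
restored = reconstruct(coarse, residuals)
```

Only the samples around the jump carry nonzero residual at each scale. In a patch-based scheme of the kind the abstract describes, patches whose residual energy is negligible would need no fine-scale model at all, which is where the parameter savings come from.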
