Arka Majumdar

Learned split-spectrum metalens for obstruction-free broadband imaging in the visible

Jan 27, 2026

Abstract: Obstructions such as raindrops, fences, or dust degrade captured images, especially when mechanical cleaning is infeasible. Conventional solutions rely on a bulky compound optics array or on computational inpainting, which compromise compactness or fidelity, respectively. Metalenses composed of subwavelength meta-atoms promise compact imaging, but achieving broadband and obstruction-free imaging simultaneously remains a challenge: a metalens that images distant scenes across a broad spectrum cannot properly defocus near-depth occlusions. Here, we introduce a learned split-spectrum metalens that enables broadband obstruction-free imaging. Our approach divides the spectrum of each RGB channel into pass and stop bands using multi-band spectral filtering, and learns a metalens that focuses light from far objects through the pass bands, while focused near-depth light falls in the stop bands and is filtered out. A neural network further enhances this optical signal. Our learned split-spectrum metalens achieves broadband obstruction-free imaging with a relative PSNR gain of 32.29%, and improves object detection and semantic segmentation accuracy with absolute gains of +13.54% mAP, +48.45% IoU, and +20.35% mIoU over a conventional hyperbolic design. This promises robust obstruction-free sensing and vision for space-constrained systems such as mobile robots, drones, and endoscopes.
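For reference, the conventional hyperbolic metalens used as the baseline above imposes the textbook phase profile φ(r) = −(2π/λ)(√(r² + f²) − f), which focuses a single design wavelength λ to a point at distance f. The minimal Python sketch below evaluates that profile, wrapped to [0, 2π) as a metasurface would implement it. It is not the learned split-spectrum design, and the focal length and wavelength in the example are arbitrary illustrative values.

```python
import math


def hyperbolic_phase(r, focal_length, wavelength):
    """Wrapped phase (radians, in [0, 2*pi)) of an ideal hyperbolic lens at radius r.

    All lengths must share one unit (metres here). The profile is exact only
    at the design wavelength; at other wavelengths the focus shifts.
    """
    # Extra optical path from radius r to the focal point, converted to phase.
    phi = -2.0 * math.pi / wavelength * (math.sqrt(r * r + focal_length ** 2) - focal_length)
    # A metasurface only needs the phase modulo 2*pi.
    return phi % (2.0 * math.pi)


# Illustrative values only: f = 1 mm, design wavelength = 550 nm.
profile = [hyperbolic_phase(r * 1e-6, 1e-3, 550e-9) for r in range(0, 50, 5)]
```

Because the profile is pinned to one λ, a lens of this kind focuses far objects well only near its design wavelength, which is the limitation a broadband design has to work around.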

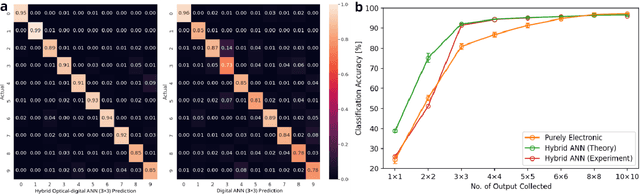

Neural Tangent Knowledge Distillation for Optical Convolutional Networks

Aug 11, 2025

Abstract: Hybrid optical neural networks (ONNs), typically consisting of an optical frontend and a digital backend, offer an energy-efficient alternative to fully digital deep networks for real-time, power-constrained systems. However, their adoption is limited by two main challenges: the accuracy gap relative to large-scale networks during training, and discrepancies between simulated and fabricated systems that further degrade accuracy. While previous work has proposed end-to-end optimizations for specific datasets (e.g., MNIST) and optical systems, these approaches typically do not generalize across tasks and hardware designs. To address these limitations, we propose a task-agnostic and hardware-agnostic pipeline that supports image classification and segmentation across diverse optical systems. To assist optical system design before training, we estimate the achievable model accuracy from user-specified constraints such as physical size and the dataset. For training, we introduce Neural Tangent Knowledge Distillation (NTKD), which aligns optical models with electronic teacher networks, thereby narrowing the accuracy gap. After fabrication, NTKD also guides fine-tuning of the digital backend to compensate for implementation errors. Experiments on multiple datasets (e.g., MNIST, CIFAR, Carvana Masking) and hardware configurations show that our pipeline consistently improves ONN performance and enables practical deployment in both pre-fabrication simulations and physical implementations.
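The abstract does not spell out how NTKD is computed, so the sketch below shows only the conventional soft-target knowledge-distillation loss, as a point of reference for what aligning an optical student with an electronic teacher means in its simplest form. This is a swapped-in, generic technique, not NTKD; the temperature of 4.0 is an assumed default.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """Soft-target KD loss: T^2 * KL(teacher || student) on softened outputs.

    Generic knowledge distillation, NOT the NTKD method named in the abstract.
    Assumes moderate logits so that no softened probability underflows to 0.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl


# Example: an optical "student" whose logits disagree with a digital "teacher".
loss = distillation_loss([0.5, 1.0, 0.2], [2.0, 0.1, -1.0])
```

In a hybrid ONN this term would typically be added to the ordinary task loss while training the optical frontend and digital backend.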

Computational metaoptics for imaging

Nov 14, 2024

Abstract: Metasurfaces, ultrathin structures composed of subwavelength optical elements, have revolutionized light manipulation by enabling precise control over the amplitude, phase, polarization, and spectral properties of electromagnetic waves. Concurrently, computational imaging leverages algorithms to reconstruct images from optically processed signals, overcoming limitations of traditional imaging systems. This review explores the synergistic integration of metaoptics and computational imaging, termed "computational metaoptics," which combines the physical wavefront-shaping ability of metasurfaces with advanced computational algorithms to enhance imaging performance beyond conventional limits. We discuss how computational metaoptics addresses the inherent limitations of single-layer metasurfaces in achieving multifunctionality without compromising efficiency. By treating metasurfaces as physical preconditioners and co-designing them with reconstruction algorithms through end-to-end (inverse) design, it is possible to jointly optimize the optical hardware and computational software. This holistic approach allows for the automatic discovery of optimal metasurface designs and reconstruction methods that significantly improve imaging capabilities. Advanced applications enabled by computational metaoptics are highlighted, including phase imaging and quantum state measurement, which benefit from the metasurfaces' ability to manipulate complex light fields and the computational algorithms' capacity to reconstruct high-dimensional information. We also examine performance evaluation challenges, emphasizing the need for new metrics that account for the combined optical and computational nature of these systems. Finally, we identify new frontiers that point toward a future in which computational metaoptics may play a central role in advancing imaging science and technology.

Computed tomography using meta-optics

Nov 13, 2024

Abstract: Computer vision tasks require processing large amounts of data to perform image classification, segmentation, and feature extraction. Optical preprocessors can potentially reduce the number of floating-point operations required by computer vision tasks, enabling low-power and low-latency operation. However, existing optical preprocessors are mostly learned, so they depend strongly on the training data and lack universal applicability. In this paper, we present a metaoptic imager that implements the Radon transform, obviating the need to train the optics. We demonstrate high-quality image reconstruction at a large compression ratio of 0.6% using the Simultaneous Algebraic Reconstruction Technique (SART). We also demonstrate 90% image classification accuracy on an experimentally measured Radon dataset using a neural network trained on digitally transformed images.
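The reconstruction step above uses SART. As a minimal sketch of the textbook SART update, the example below treats the Radon transform as a matrix A whose rows are rays (line integrals) through a pixel grid, and iterates x ← x + λ·C·Aᵀ·R·(p − Ax), where R and C normalize by ray length and pixel weight. The toy 2×2 image with six rays (two rows, two columns, two diagonals) stands in for a sampled Radon transform and is purely illustrative; the metaoptic imager's actual projection geometry is not modeled.

```python
def forward_project(A, x):
    """Line integrals p = A x; each row of A is one ray through the pixel grid."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def sart(A, p, n_iter=100, relax=1.0):
    """Simultaneous Algebraic Reconstruction Technique with relaxation `relax`."""
    n_rays, n_pix = len(A), len(A[0])
    row_sums = [sum(row) for row in A]  # ray lengths
    col_sums = [sum(A[i][j] for i in range(n_rays)) for j in range(n_pix)]  # pixel weights
    x = [0.0] * n_pix
    for _ in range(n_iter):
        # Residual of every ray against the current estimate, normalized by ray length.
        proj = forward_project(A, x)
        r = [(p[i] - proj[i]) / row_sums[i] if row_sums[i] else 0.0 for i in range(n_rays)]
        # Back-project all residuals at once, normalized by each pixel's total weight.
        for j in range(n_pix):
            if col_sums[j]:
                back = sum(A[i][j] * r[i] for i in range(n_rays))
                x[j] += relax * back / col_sums[j]
    return x


# Toy 2x2 image, pixels in row-major order [a, b, c, d].
A_toy = [
    [1, 1, 0, 0],  # row 0
    [0, 0, 1, 1],  # row 1
    [1, 0, 1, 0],  # column 0
    [0, 1, 0, 1],  # column 1
    [1, 0, 0, 1],  # main diagonal
    [0, 1, 1, 0],  # anti-diagonal
]
x_true = [1.0, 2.0, 3.0, 4.0]
sinogram = forward_project(A_toy, x_true)
x_rec = sart(A_toy, sinogram)
```

With consistent, noise-free data and a full-rank ray set like this one, the iterates converge to the true image; with fewer rays (high compression) SART instead settles on a regularized estimate.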

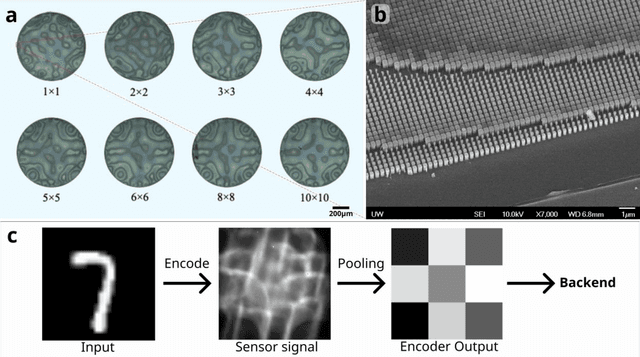

Transferable polychromatic optical encoder for neural networks

Nov 05, 2024

Abstract: Artificial neural networks (ANNs) have fundamentally transformed the field of computer vision, providing unprecedented performance. However, ANNs for image processing demand substantial computational resources, often hindering real-time operation. In this paper, we demonstrate an optical encoder that performs convolution simultaneously in three color channels during image capture, effectively implementing several initial convolutional layers of an ANN. This optical encoding yields a ~24,000-fold reduction in computational operations, with a state-of-the-art classification accuracy (~73.2%) for a free-space optical system. In addition, our analog optical encoder, trained on CIFAR-10 data, can be transferred to the ImageNet subset High-10 without any modifications and still achieves moderate accuracy. Our results provide evidence of the potential of hybrid optical/digital computer vision systems in which the optical frontend pre-processes an ambient scene to reduce the energy and latency of the whole computer vision system.
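As a rough digital picture of what such an encoder computes during capture, the sketch below applies one kernel to each of the R, G, and B channels using "valid" 2D cross-correlation, the operation CNN layers call convolution. It is a simplified, assumed model: cross-channel mixing and multiple output kernels are omitted, and kernels are required to be non-negative because an incoherent optical system convolves intensity with a non-negative point spread function.

```python
def conv2d_valid(channel, kernel):
    """'Valid' 2D cross-correlation of one channel (list of rows) with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(channel), len(channel[0])
    out = []
    for y in range(h - kh + 1):
        row = []
        for x in range(w - kw + 1):
            s = 0.0
            for dy in range(kh):
                for dx in range(kw):
                    s += channel[y + dy][x + dx] * kernel[dy][dx]
            row.append(s)
        out.append(row)
    return out


def optical_encoder(rgb, kernels):
    """Apply one non-negative kernel per color channel (simplified, hypothetical model)."""
    if any(v < 0 for k in kernels for row in k for v in row):
        raise ValueError("incoherent optics can only realize non-negative PSFs")
    return [conv2d_valid(ch, k) for ch, k in zip(rgb, kernels)]


# Example: a 2x2 box kernel on each channel of a 3x3 RGB image.
img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
box = [[1, 1], [1, 1]]
encoded = optical_encoder([img, img, img], [box, box, box])
```

In hardware these multiply-accumulates happen in the optics at capture time, which is where the reduction in digital operations comes from; signed kernels would need extra measures not shown here.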

End-to-end metasurface design for temperature imaging via broadband Planck-radiation regression

Sep 13, 2024

Abstract: We present a theoretical framework for temperature imaging from long-wavelength infrared thermal radiation (e.g., 8–12 $\mu$m) through the end-to-end design of a metasurface-optics frontend and a computational-reconstruction backend. We introduce a new nonlinear reconstruction algorithm, "Planck regression," that reconstructs the temperature map from a grayscale sensor image, even in the presence of severe chromatic aberration, by exploiting blackbody and optical physics particular to thermal imaging. We combine this algorithm with an end-to-end approach that optimizes a manufacturable, single-layer metasurface to yield the most accurate reconstruction. Our designs demonstrate high-quality, noise-robust reconstructions of arbitrary temperature maps (including completely random images) in simulations of an ultra-compact thermal-imaging device. We also show that Planck regression generalizes to arbitrary images much better than a straightforward neural-network reconstruction, which requires a large training set of domain-specific images.
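The physics that Planck regression exploits can be illustrated in its simplest form: a grayscale pixel integrates blackbody radiance over the band, and because that integral grows monotonically with temperature, it can be inverted. The sketch below does this for a single pixel by bisection over Planck's law. It is a deliberately simplified stand-in, not the paper's algorithm: it assumes emissivity 1, a flat sensor response over 8 to 12 μm, and no optics or chromatic aberration at all.

```python
import math

# Exact SI values of the physical constants.
H = 6.62607015e-34   # Planck constant, J*s
C = 299792458.0      # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_radiance(wavelength_m, temperature_k):
    """Blackbody spectral radiance B(lambda, T) in W / (m^2 * sr * m)."""
    prefactor = 2.0 * H * C ** 2 / wavelength_m ** 5
    return prefactor / math.expm1(H * C / (wavelength_m * KB * temperature_k))


def band_signal(temperature_k, lo_m=8e-6, hi_m=12e-6, n=400):
    """Midpoint-rule integral of B over [lo, hi]: an idealized grayscale pixel
    (flat spectral response, emissivity 1, no optics) viewing a blackbody."""
    step = (hi_m - lo_m) / n
    return step * sum(planck_radiance(lo_m + (i + 0.5) * step, temperature_k)
                      for i in range(n))


def invert_temperature(signal, t_lo=200.0, t_hi=1000.0, iters=60):
    """Recover T from a band-integrated signal by bisection; valid because
    band_signal increases monotonically with temperature."""
    for _ in range(iters):
        mid = 0.5 * (t_lo + t_hi)
        if band_signal(mid) < signal:
            t_lo = mid
        else:
            t_hi = mid
    return 0.5 * (t_lo + t_hi)


measured = band_signal(300.0)
recovered = invert_temperature(measured)  # should come back very close to 300 K
```

The full method must additionally undo the spatial and spectral blur introduced by the metasurface, which is what makes it a regression over the whole image rather than a per-pixel lookup.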

Spatially Varying Nanophotonic Neural Networks

Aug 07, 2023

Abstract: The explosive growth in the computation and energy cost of artificial intelligence has spurred strong interest in new computing modalities as potential alternatives to conventional electronic processors. Photonic processors, which execute operations using photons instead of electrons, promise to enable optical neural networks with ultra-low latency and power consumption. However, existing optical neural networks, limited by their underlying network designs, have achieved image recognition accuracy much lower than that of state-of-the-art electronic neural networks. In this work, we close this gap by introducing a large-kernel spatially varying convolutional neural network learned via low-dimensional reparameterization techniques. We experimentally instantiate the network with a flat meta-optical system that encompasses an array of nanophotonic structures designed to induce angle-dependent responses. Combined with an extremely lightweight electronic backend with approximately 2K parameters, our nanophotonic neural network reaches 73.80% blind-test classification accuracy on the CIFAR-10 dataset. This marks the first time an optical neural network outperforms the first modern digital neural network, AlexNet (72.64%) with 57M parameters, bringing optical neural networks into the modern deep learning era.
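The abstract does not detail the reparameterization. As a hypothetical illustration of why a low-dimensional parameterization helps, the sketch below builds a spatially varying 1D convolution in which every output position mixes a small shared set of M basis kernels with its own coefficients, so a length-N signal with kernel size K needs M·K + N·M parameters instead of N·K for a fully independent kernel per position. The 1D setting, the basis-mixing scheme, and all names are assumptions for illustration, not the authors' method.

```python
def spatially_varying_conv1d(signal, basis_kernels, weights):
    """out[i] = sum_m weights[i][m] * (signal correlated with basis_kernels[m])[i].

    signal: length-N list; basis_kernels: M kernels of equal odd length K;
    weights: N x M per-position mixing coefficients (hypothetical scheme).
    Zero padding at the borders keeps the output length equal to N.
    """
    n = len(signal)
    half = len(basis_kernels[0]) // 2
    out = []
    for i in range(n):
        acc = 0.0
        for m, kern in enumerate(basis_kernels):
            # Response of basis kernel m centered at position i.
            resp = 0.0
            for t, k in enumerate(kern):
                j = i + t - half
                if 0 <= j < n:
                    resp += signal[j] * k
            acc += weights[i][m] * resp
        out.append(acc)
    return out


# Two basis kernels: identity and a one-sample shift; the mix varies by position.
basis = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
mix = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = spatially_varying_conv1d([1.0, 2.0, 3.0], basis, mix)
```

With constant weights and a single basis kernel, this reduces to an ordinary (spatially invariant) convolution.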

Thin On-Sensor Nanophotonic Array Cameras

Aug 05, 2023

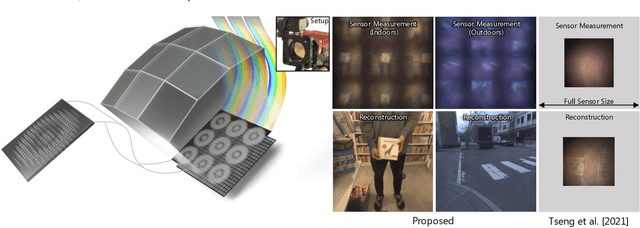

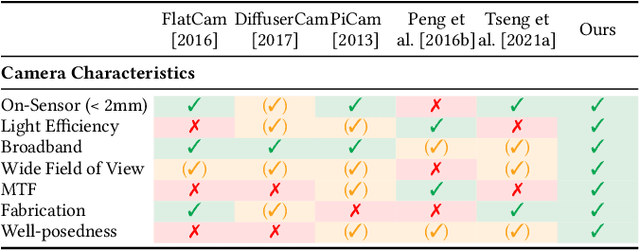

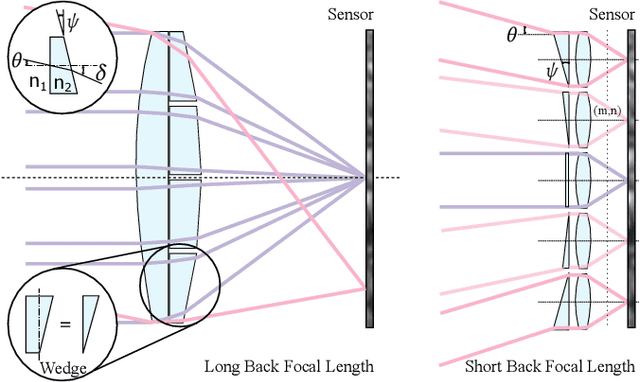

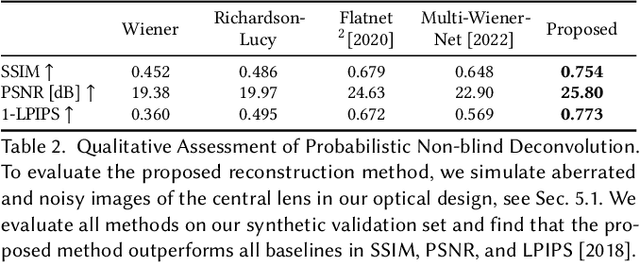

Abstract: Today's commodity camera systems rely on compound optics to map light originating from the scene to positions on the sensor, where it is recorded as an image. To record images without optical aberrations, i.e., deviations from Gauss' linear model of optics, typical lens systems introduce increasingly complex stacks of optical elements, which are responsible for the height of existing commodity cameras. In this work, we investigate flat nanophotonic computational cameras as an alternative that employs an array of skewed lenslets and a learned reconstruction approach. The optical array is embedded on a metasurface that, at 700 nm height, is flat and sits on the sensor cover glass at a 2.5 mm focal distance from the sensor. To tackle the highly chromatic response of a metasurface and design the array over the entire sensor, we propose a differentiable optimization method that continuously samples over the visible spectrum and factorizes the optical modulation for different incident fields into individual lenses. We reconstruct a megapixel image from our flat imager with a learned probabilistic reconstruction method that employs a generative diffusion model to sample an implicit prior. To tackle scene-dependent aberrations in broadband, we propose a method for acquiring paired captured training data in varying illumination conditions. We assess the proposed flat camera design in simulation and with an experimental prototype, validating that the method is capable of recovering images from diverse scenes in broadband with a single nanophotonic layer.
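To see why the chromatic response of a metasurface matters at this scale, a common first-order model for a diffractive lens, which phase-wrapped metalenses approximately follow, is f(λ) ≈ f₀·λ₀/λ. The sketch below evaluates it using the 2.5 mm focal distance from the abstract and an assumed design wavelength of 550 nm. Real metasurface dispersion also depends on the meta-atoms, so treat the numbers as indicative only.

```python
def diffractive_focal_length(wavelength_nm, design_focal_mm=2.5, design_wavelength_nm=550.0):
    """First-order focal length (mm) of a diffractive/metasurface lens at wavelength_nm.

    Model: f(lambda) ~= f0 * lambda0 / lambda. The 550 nm design wavelength is
    an assumption for illustration; 2.5 mm matches the abstract's focal distance.
    """
    return design_focal_mm * design_wavelength_nm / wavelength_nm


# Sweep the visible band in 50 nm steps.
sweep = {wl: diffractive_focal_length(wl) for wl in range(400, 701, 50)}
```

Across 400 to 700 nm this model moves the focus from about 3.44 mm to about 1.96 mm, a spread of roughly 1.5 mm around the 2.5 mm design value, which illustrates why broadband sampling during optimization is needed.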

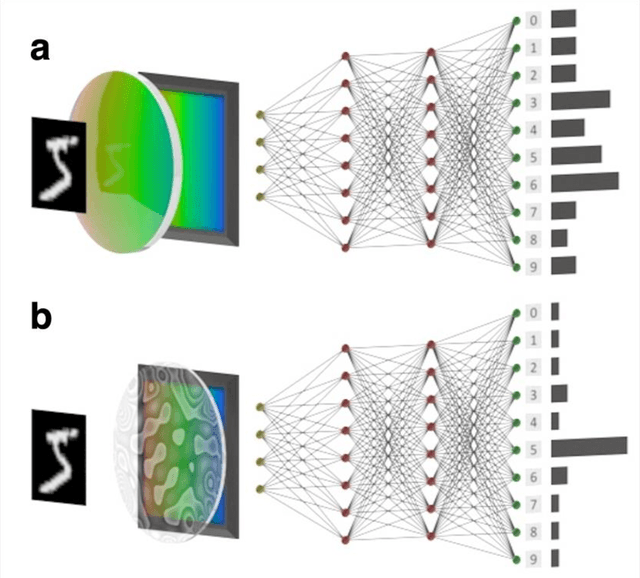

Photonic Advantage of Optical Encoders

May 02, 2023

Abstract: Light's ability to perform massive linear operations in parallel has recently inspired numerous demonstrations of optics-assisted artificial neural networks (ANNs). However, a clear system-level advantage of optics over purely digital ANNs has not yet been established. While linear operations can indeed be performed very efficiently in optics, the lack of nonlinearity and signal regeneration requires high-power, low-latency signal transduction between optics and electronics. Additionally, substantial power is needed for the lasers and photodetectors, which is often neglected in energy consumption calculations. Here, instead of mapping traditional digital operations to optics, we co-optimized a hybrid optical-digital ANN that operates on incoherent light and is thus amenable to operation under ambient light. Keeping the latency and power constant between a purely digital ANN and a hybrid optical-digital ANN, we identified a low-power/low-latency regime in which an optical encoder provides higher classification accuracy than a purely digital ANN. However, in that regime, the overall classification accuracy is lower than what is achievable with higher power and latency. Our results indicate that optics can be advantageous over digital ANNs in applications where the overall performance of the ANN can be relaxed to prioritize lower power and latency.

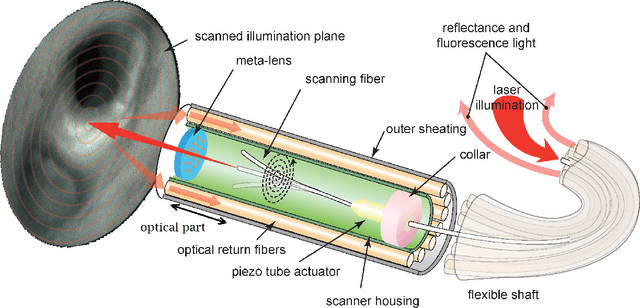

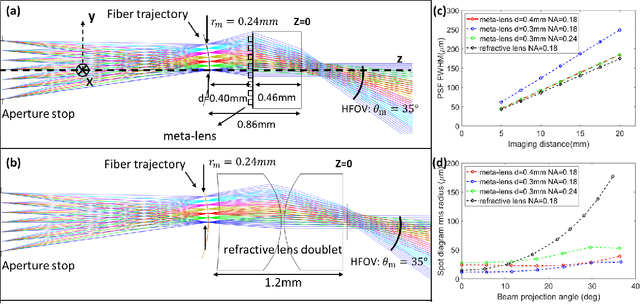

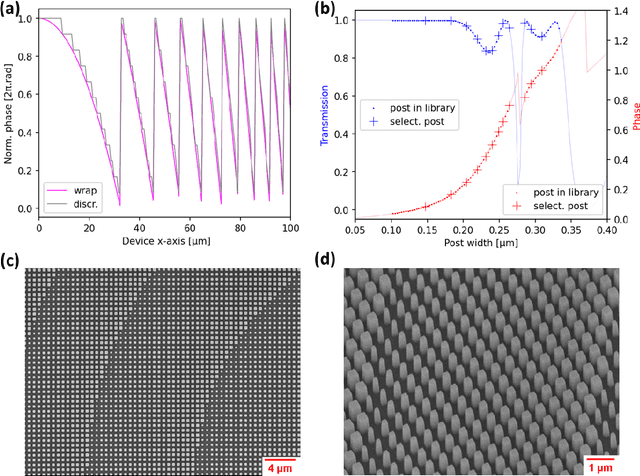

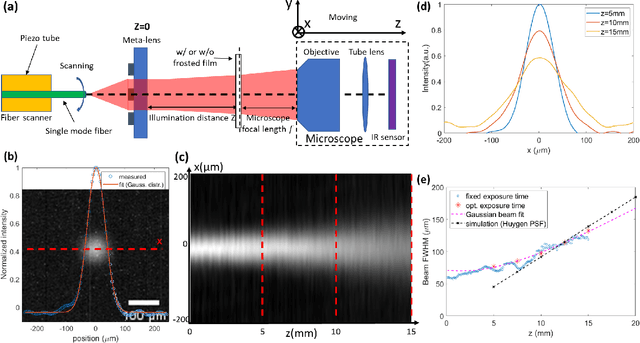

Large FOV short-wave infrared meta-lens for scanning fiber endoscopy

Dec 21, 2022

Abstract: The scanning fiber endoscope (SFE), an ultra-small optical imaging device with a large field of view (FOV) that provides a clear forward view into the interior of blood vessels, has great potential in cardiovascular disease diagnosis and surgical assistance, one of the key applications of short-wave infrared (SWIR) biomedical imaging. The state-of-the-art SFE system uses a miniaturized refractive spherical lens doublet for beam projection. A meta-lens is a promising alternative that can be made much thinner and has fewer off-axis aberrations than its refractive counterpart. We report an SFE system with a meta-lens working at 1310 nm that achieves a resolution ($\sim 140\,\mu$m at the center of the field and an imaging distance of 15 mm), FOV ($\sim 70^\circ$), and depth of focus (DOF $\sim 15$ mm) comparable to those of a state-of-the-art refractive-lens SFE. The use of the meta-lens reduces the length of the optical track from 1.2 mm to 0.86 mm. The resolution of our meta-lens-based SFE degrades by less than a factor of 2 at the edge of the FOV, while that of the refractive-lens counterpart degrades by a factor of ~3. These results show the promise of integrating a meta-lens into an endoscope for device miniaturization and improved optical performance.
