Emma Alexander

Homeostatic Adaptation of Optimal Population Codes under Metabolic Stress

Jul 10, 2025

Abstract:Information processing in neural populations is inherently constrained by metabolic resource limits and noise properties, with dynamics that are not accurately described by existing mathematical models. Recent data, for example, shows that neurons in mouse visual cortex go into a "low power mode" in which they maintain firing rate homeostasis while expending less energy. This adaptation leads to increased neuronal noise and tuning curve flattening in response to metabolic stress. We have developed a theoretical population coding framework that captures this behavior using two novel, surprisingly simple constraints: an approximation of firing rate homeostasis and an energy limit tied to noise levels via biophysical simulation. A key feature of our contribution is an energy budget model directly connecting adenosine triphosphate (ATP) use in cells to a fully explainable mathematical framework that generalizes existing optimal population codes. Specifically, our simulation provides an energy-dependent dispersed Poisson noise model, based on the assumption that the cell will follow an optimal decay path to produce the least-noisy spike rate that is possible at a given cellular energy budget. Each state along this optimal path is associated with properties (resting potential and leak conductance) which can be measured in electrophysiology experiments and have been shown to change under prolonged caloric deprivation. We analytically derive the optimal coding strategy for neurons under varying energy budgets and coding goals, and show how our method uniquely captures how populations of tuning curves adapt while maintaining homeostasis, as has been observed empirically.

Focal Split: Untethered Snapshot Depth from Differential Defocus

Apr 15, 2025

Abstract:We introduce Focal Split, a handheld, snapshot depth camera with fully onboard power and computing based on depth-from-differential-defocus (DfDD). Focal Split is passive, avoiding power consumption of light sources. Its achromatic optical system simultaneously forms two differentially defocused images of the scene, which can be independently captured using two photosensors in a snapshot. The data processing is based on the DfDD theory, which efficiently computes a depth and a confidence value for each pixel with only 500 floating point operations (FLOPs) per pixel from the camera measurements. We demonstrate a Focal Split prototype, which comprises a handheld custom camera system connected to a Raspberry Pi 5 for real-time data processing. The system consumes 4.9 W and is powered on a 5 V, 10,000 mAh battery. The prototype can measure objects with distances from 0.4 m to 1.2 m, outputting 480$\times$360 sparse depth maps at 2.1 frames per second (FPS) using unoptimized Python scripts. Focal Split is DIY friendly. A comprehensive guide to building your own Focal Split depth camera, code, and additional data can be found at https://focal-split.qiguo.org.
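The power and compute figures quoted in the Focal Split abstract can be combined into a rough budget. The sketch below is a back-of-the-envelope calculation, not something taken from the paper: it assumes the battery delivers its full rated capacity at 5 V with no conversion losses, and that all 480×360 pixels are processed at 500 FLOPs each on every frame. The function names are hypothetical.

```python
# Rough energy and compute budget from the numbers in the abstract.
# Assumptions (not from the paper): ideal battery with no conversion
# losses, and every pixel of every frame costs 500 FLOPs.

POWER_W = 4.9            # system power draw
BATTERY_V = 5.0          # nominal battery voltage
BATTERY_MAH = 10_000     # rated battery capacity
WIDTH, HEIGHT = 480, 360 # depth map resolution
FLOPS_PER_PIXEL = 500    # depth + confidence cost per pixel
FPS = 2.1                # reported frame rate


def battery_energy_wh(voltage_v: float, capacity_mah: float) -> float:
    """Stored energy in watt-hours: V * Ah."""
    return voltage_v * capacity_mah / 1000.0


def ideal_runtime_hours(energy_wh: float, power_w: float) -> float:
    """Upper bound on runtime, ignoring all losses."""
    return energy_wh / power_w


def flops_per_second(width: int, height: int,
                     flops_per_pixel: float, fps: float) -> float:
    """Arithmetic throughput needed for the depth computation alone."""
    return width * height * flops_per_pixel * fps


energy_wh = battery_energy_wh(BATTERY_V, BATTERY_MAH)
runtime_h = ideal_runtime_hours(energy_wh, POWER_W)
rate = flops_per_second(WIDTH, HEIGHT, FLOPS_PER_PIXEL, FPS)

print(f"battery energy: {energy_wh:.1f} Wh")
print(f"ideal runtime:  {runtime_h:.1f} h")
print(f"depth compute:  {rate / 1e6:.1f} MFLOP/s")
```

Under these assumptions the battery holds 50 Wh, which bounds the runtime at roughly 10 hours, and the depth computation itself needs on the order of 180 MFLOP/s. Real runtime would be lower once regulator losses and battery derating are included.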

Spectrum from Defocus: Fast Spectral Imaging with Chromatic Focal Stack

Mar 26, 2025

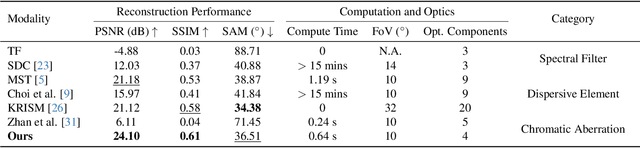

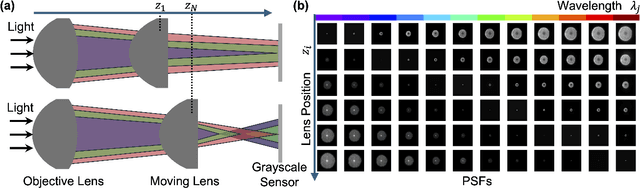

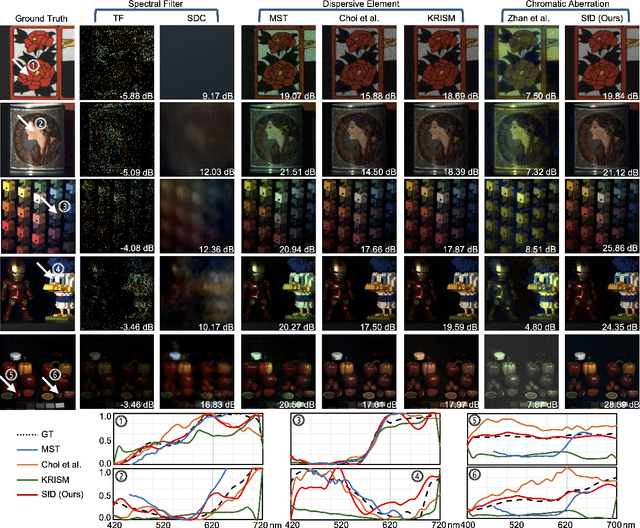

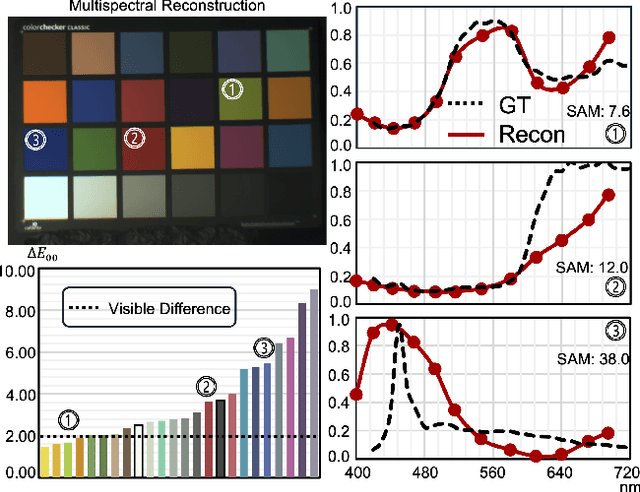

Abstract:Hyperspectral cameras face harsh trade-offs between spatial, spectral, and temporal resolution in an inherently low-photon regime. Computational imaging systems break through these trade-offs with compressive sensing, but require complex optics and/or extensive compute. We present Spectrum from Defocus (SfD), a chromatic focal sweep method that recovers state-of-the-art hyperspectral images with a small system of off-the-shelf optics and < 1 second of compute. Our camera uses two lenses and a grayscale sensor to preserve nearly all incident light in a chromatically-aberrated focal stack. Our physics-based iterative algorithm efficiently demixes, deconvolves, and denoises the blurry grayscale focal stack into a sharp spectral image. The combination of photon efficiency, optical simplicity, and physical modeling makes SfD a promising solution for fast, compact, interpretable hyperspectral imaging.

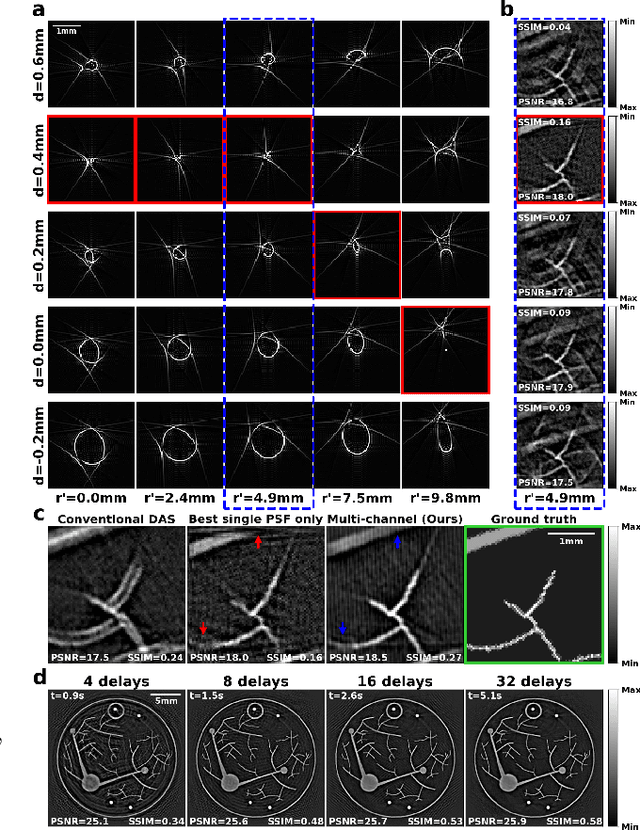

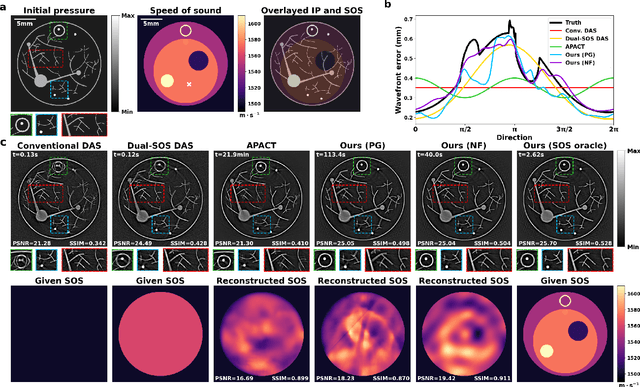

Neural Fields for Adaptive Photoacoustic Computed Tomography

Sep 17, 2024

Abstract:Photoacoustic computed tomography (PACT) is a non-invasive imaging modality with wide medical applications. Conventional PACT image reconstruction algorithms suffer from wavefront distortion caused by the heterogeneous speed of sound (SOS) in tissue, which leads to image degradation. Accounting for these effects improves image quality, but measuring the SOS distribution is experimentally expensive. An alternative approach is to perform joint reconstruction of the initial pressure image and SOS using only the PA signals. Existing joint reconstruction methods come with limitations: high computational cost, inability to directly recover SOS, and reliance on inaccurate simplifying assumptions. Implicit neural representation, or neural fields, is an emerging technique in computer vision to learn an efficient and continuous representation of physical fields with a coordinate-based neural network. In this work, we introduce NF-APACT, an efficient self-supervised framework utilizing neural fields to estimate the SOS in service of an accurate and robust multi-channel deconvolution. Our method removes SOS aberrations an order of magnitude faster and more accurately than existing methods. We demonstrate the success of our method on a novel numerical phantom as well as an experimentally collected phantom and in vivo data. Our code and numerical phantom are available at https://github.com/Lukeli0425/NF-APACT.

Depth from Coupled Optical Differentiation

Sep 16, 2024

Abstract:We propose depth from coupled optical differentiation, a low-computation passive-lighting 3D sensing mechanism. It is based on our discovery that per-pixel object distance can be rigorously determined by a coupled pair of optical derivatives of a defocused image using a simple, closed-form relationship. Unlike previous depth-from-defocus (DfD) methods that leverage spatial derivatives of the image to estimate scene depths, the proposed mechanism's use of only optical derivatives makes it significantly more robust to noise. Furthermore, unlike many previous DfD algorithms with requirements on aperture code, this relationship is proved to be universal to a broad range of aperture codes. We build the first 3D sensor based on depth from coupled optical differentiation. Its optical assembly includes a deformable lens and a motorized iris, which enables dynamic adjustments to the optical power and aperture radius. The sensor captures two pairs of images: one pair with a differential change of optical power and the other with a differential change of aperture scale. From the four images, a depth and confidence map can be generated with only 36 floating point operations per output pixel (FLOPOP), more than ten times lower than the previous lowest passive-lighting depth sensing solution to our knowledge. Additionally, the depth map generated by the proposed sensor demonstrates more than twice the working range of previous DfD methods while using significantly lower computation.

HyperColorization: Propagating spatially sparse noisy spectral clues for reconstructing hyperspectral images

Mar 18, 2024

Abstract:Hyperspectral cameras face challenging spatial-spectral resolution trade-offs and are more affected by shot noise than RGB photos taken over the same total exposure time. Here, we present a colorization algorithm to reconstruct hyperspectral images from a grayscale guide image and spatially sparse spectral clues. We demonstrate that our algorithm generalizes to varying spectral dimensions for hyperspectral images, and show that colorizing in a low-rank space reduces compute time and the impact of shot noise. To enhance robustness, we incorporate guided sampling, edge-aware filtering, and dimensionality estimation techniques. Our method surpasses previous algorithms in various performance metrics, including SSIM, PSNR, GFC, and EMD, which we analyze as metrics for characterizing hyperspectral image quality. Collectively, these findings provide a promising avenue for overcoming the time-space-wavelength resolution trade-off by reconstructing a dense hyperspectral image from samples obtained by whisk or push broom scanners, as well as hybrid spatial-spectral computational imaging systems.

* 16 Pages, 13 Figures, 3 Tables, for more information: https://mehmetkeremaydin.github.io/hypercolorization/

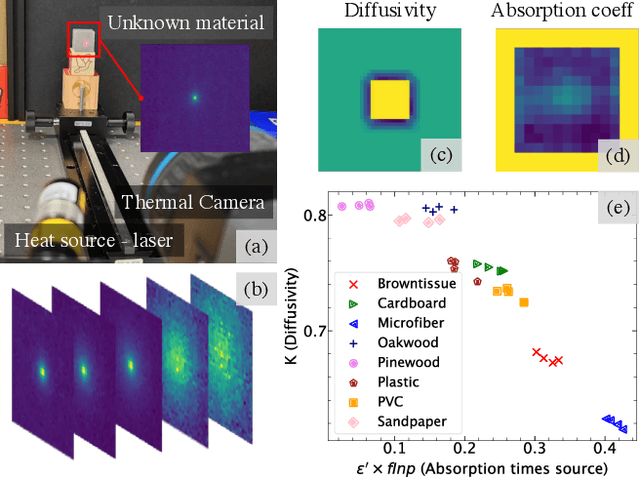

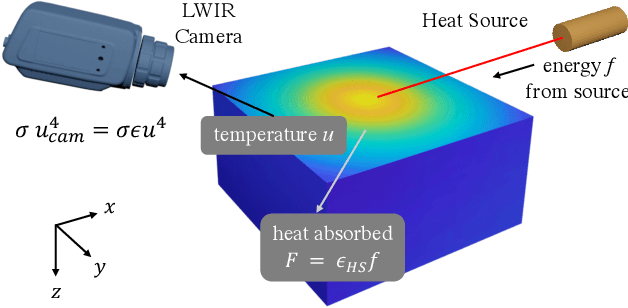

Thermal Spread Functions (TSF): Physics-guided Material Classification

Apr 03, 2023

Abstract:Robust and non-destructive material classification is a challenging but crucial first step in numerous vision applications. We propose a physics-guided material classification framework that relies on thermal properties of the object. Our key observation is that the rate of heating and cooling of an object depends on the unique intrinsic properties of the material, namely the emissivity and diffusivity. We leverage this observation by gently heating the objects in the scene with a low-power laser for a fixed duration and then turning it off, while a thermal camera captures measurements during the heating and cooling process. We then take this spatial and temporal "thermal spread function" (TSF) to solve an inverse heat equation using a finite-difference approach, resulting in a spatially varying estimate of diffusivity and emissivity. These tuples are then used to train a classifier that produces a fine-grained material label at each spatial pixel. Our approach is extremely simple, requiring only a small light source (a low-power laser) and a thermal camera, and produces robust classification results with 86% accuracy over 16 classes.
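To illustrate the idea of inverting a heat equation with finite differences, here is a deliberately simplified sketch. It is not the authors' method: it assumes a 1D rod, a single constant diffusivity, no emissivity term, fixed zero-temperature boundaries, and noise-free synthetic data covering only the cooling phase after a point-like heat pulse. All function names and parameters are hypothetical. The estimator fits $\partial T/\partial t = \alpha\,\partial^2 T/\partial x^2$ by least squares over all interior samples.

```python
# Simplified 1D illustration: recover a constant diffusivity alpha from
# a temperature sequence by least-squares fitting the heat equation
# dT/dt = alpha * d2T/dx2 with finite differences.
# Assumptions: 1D, constant alpha, no emissivity, noise-free data.

def simulate_heat_1d(alpha, n_x=41, n_t=200, dx=1.0, dt=0.1):
    """Explicit (FTCS) forward simulation of a cooling hot spot.

    Stable when alpha * dt / dx**2 <= 0.5. Endpoints are held at 0.
    Returns a list of n_t + 1 temperature profiles.
    """
    temp = [0.0] * n_x
    temp[n_x // 2] = 1.0  # initial heat pulse at the center
    frames = [temp[:]]
    for _ in range(n_t):
        new = temp[:]
        for i in range(1, n_x - 1):
            lap = (temp[i - 1] - 2.0 * temp[i] + temp[i + 1]) / dx**2
            new[i] = temp[i] + dt * alpha * lap
        temp = new
        frames.append(temp[:])
    return frames


def estimate_diffusivity(frames, dx, dt):
    """Least-squares alpha: argmin sum (dT/dt - alpha * laplacian)^2."""
    num = 0.0
    den = 0.0
    for cur, nxt in zip(frames[:-1], frames[1:]):
        for i in range(1, len(cur) - 1):
            lap = (cur[i - 1] - 2.0 * cur[i] + cur[i + 1]) / dx**2
            d_temp_dt = (nxt[i] - cur[i]) / dt  # forward difference in time
            num += d_temp_dt * lap
            den += lap * lap
    return num / den


true_alpha = 0.8
frames = simulate_heat_1d(true_alpha)
alpha_hat = estimate_diffusivity(frames, dx=1.0, dt=0.1)
print(f"true alpha = {true_alpha}, estimated alpha = {alpha_hat:.6f}")
```

Because the synthetic data and the estimator share the same discretization, the estimate matches the true value almost exactly here. With real thermal-camera measurements, noise amplification in the second spatial derivative would likely require smoothing or regularization, and a per-pixel estimate would restrict the sums to a local neighborhood.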

Galaxy Image Deconvolution for Weak Gravitational Lensing with Physics-informed Deep Learning

Nov 03, 2022

Abstract:Removing optical and atmospheric blur from galaxy images significantly improves galaxy shape measurements for weak gravitational lensing and galaxy evolution studies. This ill-posed linear inverse problem is usually solved with deconvolution algorithms enhanced by regularisation priors or deep learning. We introduce a so-called "physics-based deep learning" approach to the Point Spread Function (PSF) deconvolution problem in galaxy surveys. We apply algorithm unrolling and the Plug-and-Play technique to the Alternating Direction Method of Multipliers (ADMM) with a Poisson noise model and use a neural network to learn appropriate priors from simulated galaxy images. We characterise the time-performance trade-off of several methods for galaxies of differing brightness levels, showing an improvement of 26% (SNR=20)/48% (SNR=100) compared to standard methods and 14% (SNR=20) compared to modern methods.
