Josiah Hester

MASCOT: Towards Multi-Agent Socio-Collaborative Companion Systems

Jan 20, 2026

Abstract: Multi-agent systems (MAS) have recently emerged as promising socio-collaborative companions for emotional and cognitive support. However, these systems frequently suffer from persona collapse--where agents revert to generic, homogenized assistant behaviors--and social sycophancy, which produces redundant, non-constructive dialogue. We propose MASCOT, a generalizable framework for multi-perspective socio-collaborative companions. MASCOT introduces a novel bi-level optimization strategy to harmonize individual and collective behaviors: 1) Persona-Aware Behavioral Alignment, an RLAIF-driven pipeline that finetunes individual agents for strict persona fidelity to prevent identity loss; and 2) Collaborative Dialogue Optimization, a meta-policy guided by group-level rewards to ensure diverse and productive discourse. Extensive evaluations across psychological support and workplace domains demonstrate that MASCOT significantly outperforms state-of-the-art baselines, achieving improvements of up to +14.1 in Persona Consistency and +10.6 in Social Contribution. Our framework provides a practical roadmap for engineering the next generation of socially intelligent multi-agent systems.

CompanionCast: A Multi-Agent Conversational AI Framework with Spatial Audio for Social Co-Viewing Experiences

Dec 11, 2025

Abstract: Social presence is central to the enjoyment of watching content together, yet modern media consumption is increasingly solitary. We investigate whether multi-agent conversational AI systems can recreate the dynamics of shared viewing experiences across diverse content types. We present CompanionCast, a general framework for orchestrating multiple role-specialized AI agents that respond to video content using multimodal inputs, speech synthesis, and spatial audio. Distinctly, CompanionCast integrates an LLM-as-a-Judge module that iteratively scores and refines conversations across five dimensions (relevance, authenticity, engagement, diversity, personality consistency). We validate this framework through sports viewing, a domain with rich dynamics and strong social traditions, where a pilot study with soccer fans suggests that multi-agent interaction improves perceived social presence compared to solo viewing. We contribute: (1) a generalizable framework for orchestrating multi-agent conversations around multimodal video content, (2) a novel evaluator-agent pipeline for conversation quality control, and (3) exploratory evidence of increased social presence in AI-mediated co-viewing. We discuss challenges and future directions for applying this approach to diverse viewing contexts including entertainment, education, and collaborative watching experiences.

Focal Split: Untethered Snapshot Depth from Differential Defocus

Apr 15, 2025

Abstract: We introduce Focal Split, a handheld, snapshot depth camera with fully onboard power and computing based on depth-from-differential-defocus (DfDD). Focal Split is passive, avoiding power consumption of light sources. Its achromatic optical system simultaneously forms two differentially defocused images of the scene, which can be independently captured using two photosensors in a snapshot. The data processing is based on the DfDD theory, which efficiently computes a depth and a confidence value for each pixel with only 500 floating point operations (FLOPs) per pixel from the camera measurements. We demonstrate a Focal Split prototype, which comprises a handheld custom camera system connected to a Raspberry Pi 5 for real-time data processing. The system consumes 4.9 W and is powered on a 5 V, 10,000 mAh battery. The prototype can measure objects with distances from 0.4 m to 1.2 m, outputting 480$\times$360 sparse depth maps at 2.1 frames per second (FPS) using unoptimized Python scripts. Focal Split is DIY friendly. A comprehensive guide to building your own Focal Split depth camera, code, and additional data can be found at https://focal-split.qiguo.org.
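The figures quoted in the Focal Split abstract can be combined into a rough compute and runtime budget. The sketch below is only back-of-the-envelope arithmetic, not anything taken from the paper: it assumes the 500 FLOPs figure applies to every pixel of a 480 by 360 frame, that the 10,000 mAh capacity is rated at the stated 5 V, and that there are no conversion losses, so the runtime is an idealized upper bound. All function and constant names are hypothetical.

```python
# Figures quoted in the abstract.
WIDTH, HEIGHT = 480, 360      # depth map resolution (pixels)
FLOPS_PER_PIXEL = 500         # FLOPs per pixel for depth + confidence
FPS = 2.1                     # frames per second
POWER_W = 4.9                 # system power draw (watts)
BATTERY_V = 5.0               # battery voltage (volts)
BATTERY_MAH = 10_000          # battery capacity (milliamp-hours)


def flops_per_frame() -> int:
    """FLOPs per frame, assuming every pixel costs FLOPS_PER_PIXEL."""
    return WIDTH * HEIGHT * FLOPS_PER_PIXEL


def flops_per_second() -> float:
    """Sustained FLOP rate at the reported frame rate."""
    return flops_per_frame() * FPS


def ideal_runtime_hours() -> float:
    """Idealized runtime: capacity assumed rated at BATTERY_V, no losses."""
    energy_wh = BATTERY_V * BATTERY_MAH / 1000.0
    return energy_wh / POWER_W


if __name__ == "__main__":
    print(f"FLOPs per frame:  {flops_per_frame():,}")
    print(f"FLOPs per second: {flops_per_second():,.0f}")
    print(f"Ideal runtime:    {ideal_runtime_hours():.1f} h")
```

Under these assumptions the depth computation amounts to roughly 86 MFLOPs per frame, and the battery would last on the order of ten hours; real runtime would be lower once regulator losses and the battery's actual rated voltage are taken into account.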

hls4ml: An Open-Source Codesign Workflow to Empower Scientific Low-Power Machine Learning Devices

Mar 23, 2021

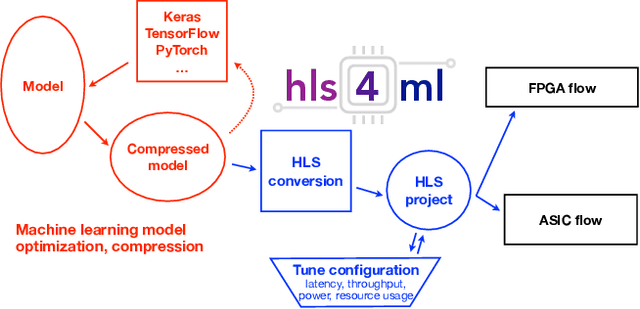

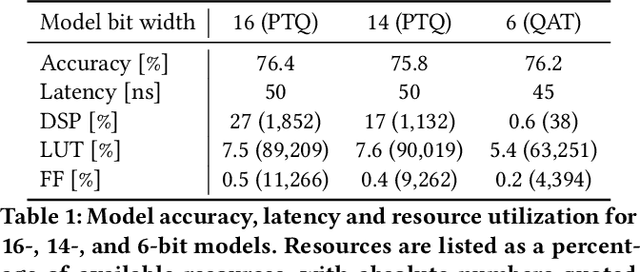

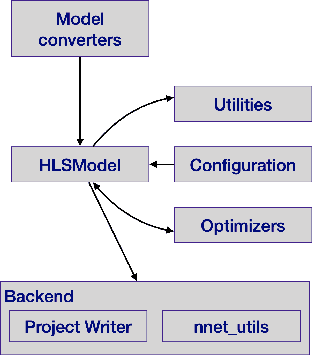

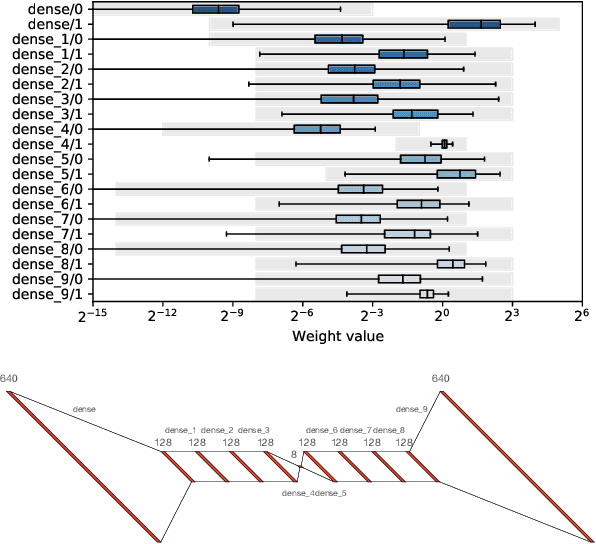

Abstract: Accessible machine learning algorithms, software, and diagnostic tools for energy-efficient devices and systems are extremely valuable across a broad range of application domains. In scientific domains, real-time near-sensor processing can drastically improve experimental design and accelerate scientific discoveries. To support domain scientists, we have developed hls4ml, an open-source software-hardware codesign workflow to interpret and translate machine learning algorithms for implementation with both FPGA and ASIC technologies. We expand on previous hls4ml work by extending capabilities and techniques towards low-power implementations and increased usability: new Python APIs, quantization-aware pruning, end-to-end FPGA workflows, long pipeline kernels for low power, and new device backends, including an ASIC workflow. Taken together, these and continued efforts in hls4ml will arm a new generation of domain scientists with accessible, efficient, and powerful tools for machine-learning-accelerated discovery.

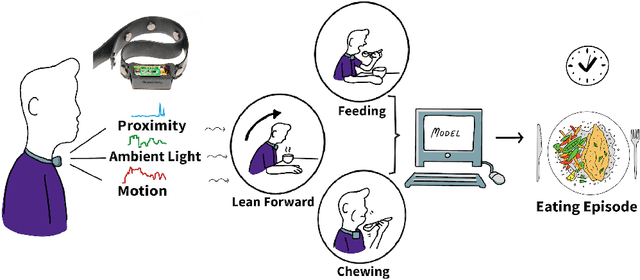

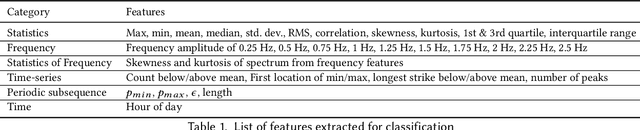

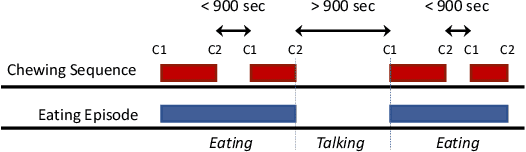

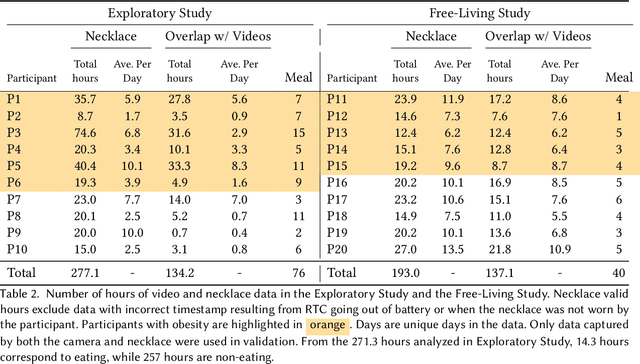

NeckSense: A Multi-Sensor Necklace for Detecting Eating Activities in Free-Living Conditions

Nov 20, 2019

Abstract: We present the design, implementation, and evaluation of a multi-sensor low-power necklace, 'NeckSense', for automatically and unobtrusively capturing fine-grained information about an individual's eating activity and eating episodes across an entire waking day in a naturalistic setting. NeckSense fuses and classifies the proximity of the necklace to the chin, the ambient light, the Lean Forward Angle, and the energy signals to determine chewing sequences, a building block of the eating activity. It then clusters the identified chewing sequences to determine eating episodes. We tested NeckSense with 11 obese and 9 non-obese participants across two studies, where we collected more than 470 hours of data in a naturalistic setting. Our results demonstrate that NeckSense enables reliable eating detection for an entire waking day, even in free-living environments. Overall, our system achieves an F1-score of 81.6% in detecting eating episodes in an exploratory study. Moreover, our system achieves an F1-score of 77.1% for episodes even in an all-day free-living setting. With more than 15.8 hours of battery life, NeckSense will allow researchers and dietitians to better understand natural chewing and eating behaviors, and also enable real-time interventions.
