Farah Fahim

End-to-end workflow for machine learning-based qubit readout with QICK and hls4ml

Jan 24, 2025

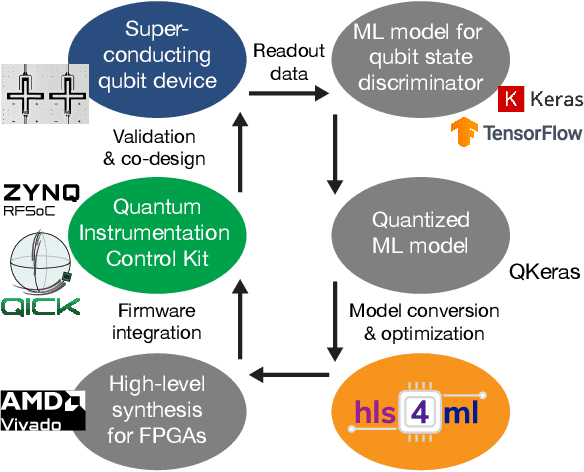

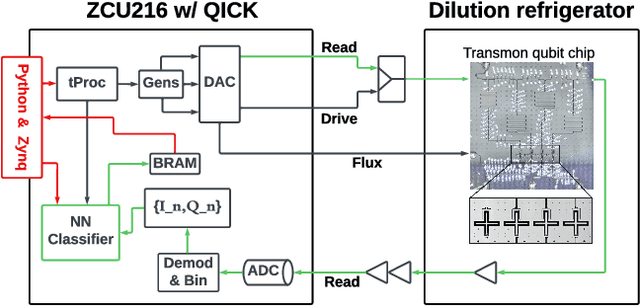

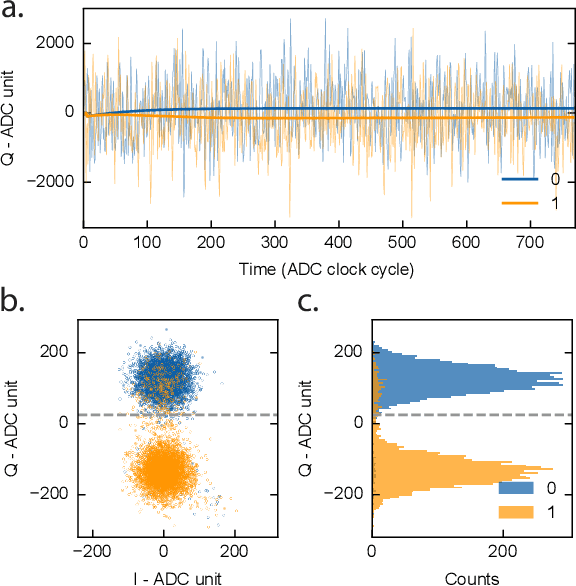

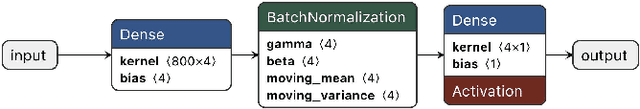

Abstract: We present an end-to-end workflow for superconducting qubit readout that embeds co-designed Neural Networks (NNs) into the Quantum Instrumentation Control Kit (QICK). Capitalizing on the custom firmware and software of the QICK platform, which is built on Xilinx RFSoC FPGAs, we aim to leverage machine learning (ML) to address critical challenges in qubit readout accuracy and scalability. The workflow utilizes the hls4ml package and employs quantization-aware training to translate ML models into hardware-efficient FPGA implementations via user-friendly Python APIs. We experimentally demonstrate the design, optimization, and integration of an ML algorithm for single transmon qubit readout, achieving 96% single-shot fidelity with a latency of 32 ns and less than 16% FPGA look-up table resource utilization. Our results offer the community an accessible workflow to advance ML-driven readout and adaptive control in quantum information processing applications.
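
As a rough illustration of the fixed-point arithmetic that quantization-aware training targets, the sketch below rounds values onto a signed fixed-point grid. The function name `quantize_fixed`, the 8-bit width, and the round-and-saturate behavior are assumptions chosen for illustration; they are not taken from the paper or from the hls4ml API.

```python
def quantize_fixed(x: float, total_bits: int, int_bits: int) -> float:
    """Round x onto a signed fixed-point grid, saturating at the range limits.

    total_bits counts every bit including the sign; int_bits counts the
    integer bits including the sign, leaving total_bits - int_bits
    fractional bits. (Hypothetical helper, for illustration only.)
    """
    frac_bits = total_bits - int_bits
    scale = 1 << frac_bits                  # number of steps per unit
    lowest = -(1 << (total_bits - 1))       # most negative integer code
    highest = (1 << (total_bits - 1)) - 1   # most positive integer code
    code = round(x * scale)                 # nearest representable code
    code = max(lowest, min(highest, code))  # saturate instead of wrapping
    return code / scale


# Example: 8 bits total, 3 integer bits, so the step size is 1/32.
weights = [0.3, -1.27, 10.0]
quantized = [quantize_fixed(w, total_bits=8, int_bits=3) for w in weights]
print(quantized)
```

Values outside the representable range, such as 10.0 here, clip to the largest code rather than wrapping around. Training with this kind of rounding in the loop lets a network adapt to the reduced precision before it is deployed.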

On-Sensor Data Filtering using Neuromorphic Computing for High Energy Physics Experiments

Jul 20, 2023

Abstract: This work describes the investigation of neuromorphic computing-based spiking neural network (SNN) models used to filter data from sensor electronics in high energy physics experiments conducted at the High Luminosity Large Hadron Collider. We present our approach for developing a compact neuromorphic model that filters out the sensor data based on the particle's transverse momentum with the goal of reducing the amount of data being sent to the downstream electronics. The incoming charge waveforms are converted to streams of binary-valued events, which are then processed by the SNN. We present our insights on the various system design choices - from data encoding to optimal hyperparameters of the training algorithm - for an accurate and compact SNN optimized for hardware deployment. Our results show that an SNN trained with an evolutionary algorithm and an optimized set of hyperparameters obtains a signal efficiency of about 91% with nearly half as many parameters as a deep neural network.
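
One simple way to turn a sampled waveform into a stream of binary-valued events is to emit a 1 whenever the signal rises through a threshold. The sketch below shows that idea only; the abstract does not say which encoding the authors used, and the function name `encode_events`, the threshold, and the sample values are all hypothetical.

```python
def encode_events(waveform, threshold):
    """Return a 0/1 list with a 1 at each upward threshold crossing.

    Hypothetical encoding for illustration; not the scheme from the paper.
    """
    events = []
    previous_above = False
    for sample in waveform:
        above = sample >= threshold
        # Fire only on the transition from below to at-or-above threshold.
        events.append(1 if above and not previous_above else 0)
        previous_above = above
    return events


# Made-up charge waveform with two pulses.
waveform = [0.0, 0.2, 0.6, 0.9, 0.4, 0.1, 0.7, 0.3]
spikes = encode_events(waveform, threshold=0.5)
print(spikes)  # [0, 0, 1, 0, 0, 0, 1, 0]
```

Using several thresholds, one per input neuron, would preserve more amplitude information at the cost of more input channels.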

Memory-Immersed Collaborative Digitization for Area-Efficient Compute-in-Memory Deep Learning

Jul 07, 2023

Abstract: This work discusses memory-immersed collaborative digitization among compute-in-memory (CiM) arrays to minimize the area overheads of a conventional analog-to-digital converter (ADC) for deep learning inference. With the proposed scheme, significantly more CiM arrays can be accommodated within limited footprint designs to improve parallelism and minimize external memory accesses. Under the digitization scheme, CiM arrays exploit their parasitic bit lines to form a within-memory capacitive digital-to-analog converter (DAC) that facilitates area-efficient successive approximation (SA) digitization. CiM arrays collaborate where a proximal array digitizes the analog-domain product-sums when an array computes the scalar product of input and weights. We discuss various networking configurations among CiM arrays where Flash, SA, and their hybrid digitization steps can be efficiently implemented using the proposed memory-immersed scheme. The results are demonstrated using a 65 nm CMOS test chip. Compared to a 40 nm-node 5-bit SAR ADC, our 65 nm design requires $\sim$25$\times$ less area and $\sim$1.4$\times$ less energy by leveraging in-memory computing structures. Compared to a 40 nm-node 5-bit Flash ADC, our design requires $\sim$51$\times$ less area and $\sim$13$\times$ less energy.
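
For readers unfamiliar with the two digitization styles named above, the following behavioral sketch contrasts them in software. It models ideal converters only and says nothing about the in-memory circuit; the function names, the unit reference voltage, and the comparison convention are assumptions made for illustration.

```python
def sar_digitize(v_in, v_ref=1.0, bits=5):
    """Successive approximation: one DAC comparison per bit, MSB first."""
    code = 0
    for bit in range(bits - 1, -1, -1):
        trial = code | (1 << bit)             # tentatively set this bit
        v_dac = v_ref * trial / (1 << bits)   # ideal DAC output for the trial
        if v_in >= v_dac:
            code = trial                      # keep the bit if input is higher
    return code


def flash_digitize(v_in, v_ref=1.0, bits=5):
    """Flash: compare against all 2**bits - 1 thresholds at once."""
    levels = 1 << bits
    return sum(1 for k in range(1, levels) if v_in >= v_ref * k / levels)


# Both ideal converters give the same code; they differ in cost.
for v in (0.0, 0.5, 0.99):
    print(v, sar_digitize(v), flash_digitize(v))
```

A 5-bit SA conversion needs 5 sequential comparisons against a DAC, while a 5-bit Flash conversion needs 31 parallel comparators, which is the usual area-versus-latency trade-off between the two.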

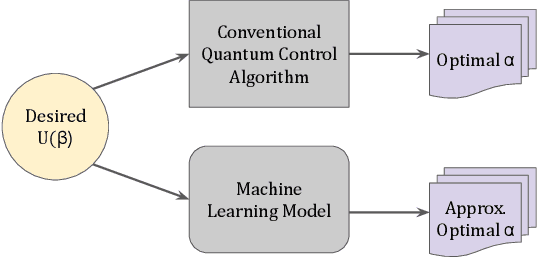

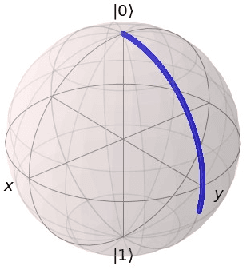

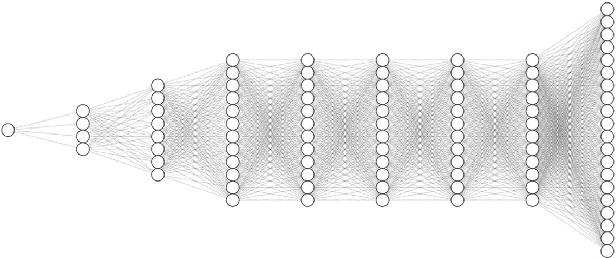

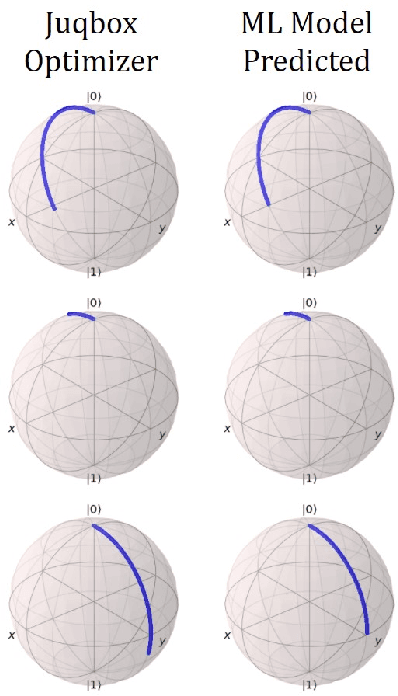

Neural network accelerator for quantum control

Aug 04, 2022

Abstract: Efficient quantum control is necessary for practical quantum computing implementations with current technologies. Conventional algorithms for determining optimal control parameters are computationally expensive, largely excluding them from use outside of simulation. Existing hardware solutions structured as lookup tables are imprecise and costly. By designing a machine learning model to approximate the results of traditional tools, a more efficient method can be produced. Such a model can then be synthesized into a hardware accelerator for use in quantum systems. In this study, we demonstrate a machine learning algorithm for predicting optimal pulse parameters. This algorithm is lightweight enough to fit on a low-resource FPGA and perform inference with a latency of 175 ns and pipeline interval of 5 ns with $>$0.99 gate fidelity. In the long term, such an accelerator could be used near quantum computing hardware where traditional computers cannot operate, enabling quantum control at a reasonable cost at low latencies without incurring large data bandwidths outside of the cryogenic environment.
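
The latency and pipeline interval quoted above imply a throughput and a number of overlapping inferences, which the short calculation below works out. It assumes that "pipeline interval" means a new input is accepted every 5 ns (the usual initiation-interval reading); the function names are hypothetical.

```python
def pipeline_stats(latency_ns, interval_ns):
    """Return (inferences per second, inferences in flight) for a pipeline."""
    throughput_per_s = 1e9 / interval_ns   # one result per interval
    in_flight = latency_ns / interval_ns   # inputs being processed at once
    return throughput_per_s, in_flight


def total_time_ns(n_inputs, latency_ns, interval_ns):
    """Time until the last of n_inputs back-to-back results is available."""
    return latency_ns + (n_inputs - 1) * interval_ns


throughput, in_flight = pipeline_stats(latency_ns=175, interval_ns=5)
print(throughput)                  # 200000000.0 inferences per second
print(in_flight)                   # 35.0 inferences overlapping
print(total_time_ns(100, 175, 5))  # 670 ns for 100 consecutive inputs
```

Under that reading, a single prediction still takes 175 ns, but a stream of requests is served at 200 million predictions per second.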

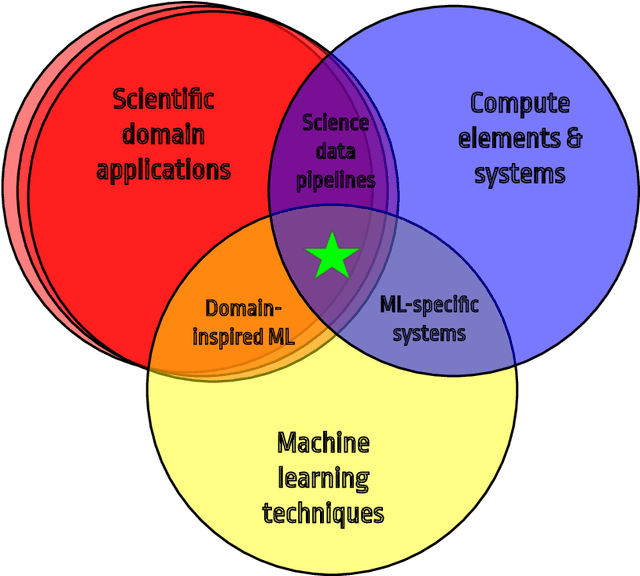

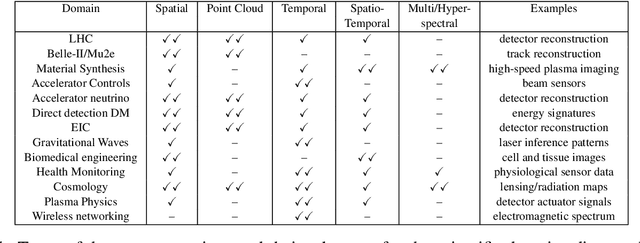

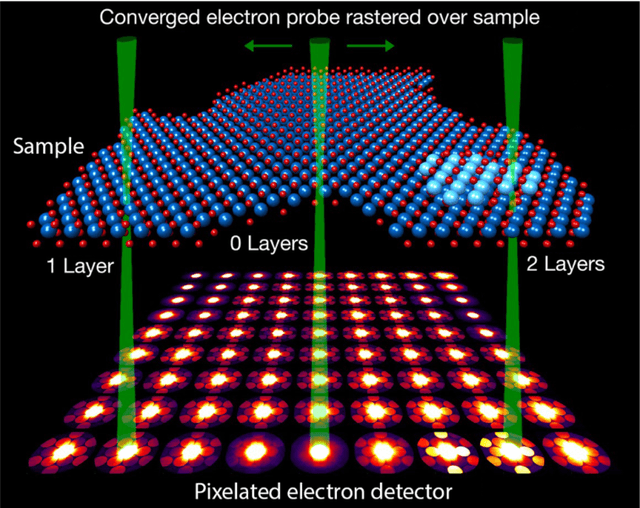

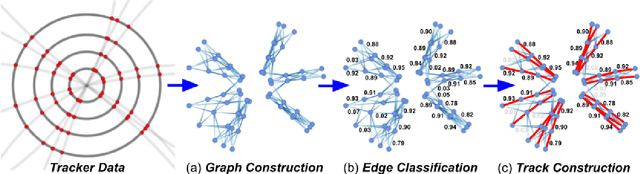

Applications and Techniques for Fast Machine Learning in Science

Oct 25, 2021

Abstract: In this community review report, we discuss applications and techniques for fast machine learning (ML) in science -- the concept of integrating powerful ML methods into the real-time experimental data processing loop to accelerate scientific discovery. The material for the report builds on two workshops held by the Fast ML for Science community and covers three main areas: applications for fast ML across a number of scientific domains; techniques for training and implementing performant and resource-efficient ML algorithms; and computing architectures, platforms, and technologies for deploying these algorithms. We also present overlapping challenges across the multiple scientific domains where common solutions can be found. This community report is intended to give plenty of examples and inspiration for scientific discovery through integrated and accelerated ML solutions. This is followed by a high-level overview and organization of technical advances, including an abundance of pointers to source material, which can enable these breakthroughs.

A reconfigurable neural network ASIC for detector front-end data compression at the HL-LHC

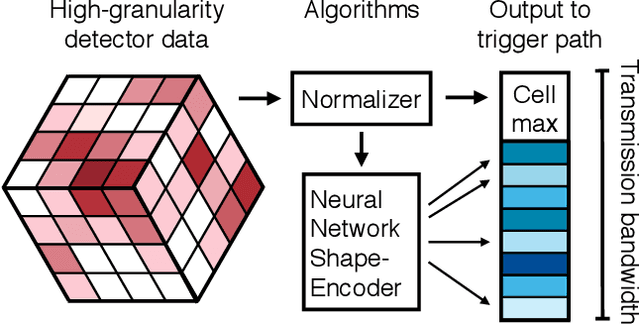

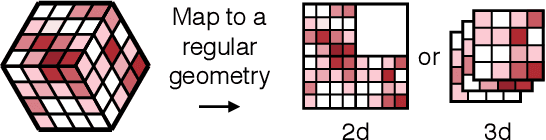

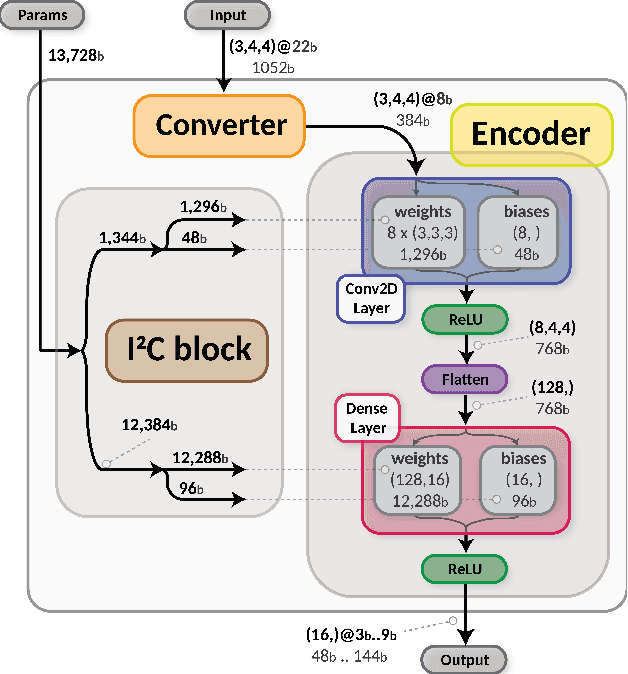

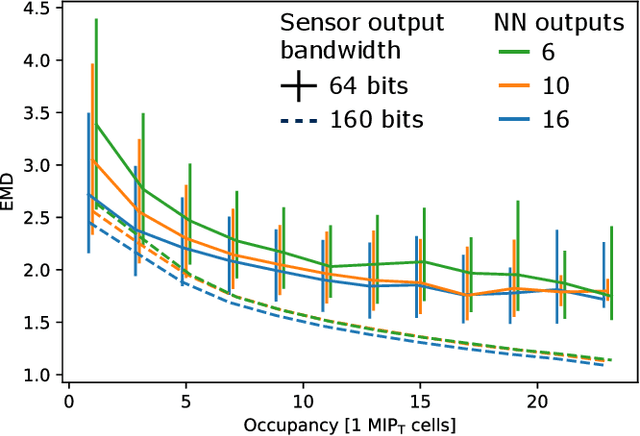

May 04, 2021

Abstract: Despite advances in the programmable logic capabilities of modern trigger systems, a significant bottleneck remains in the amount of data to be transported from the detector to off-detector logic where trigger decisions are made. We demonstrate that a neural network autoencoder model can be implemented in a radiation tolerant ASIC to perform lossy data compression alleviating the data transmission problem while preserving critical information of the detector energy profile. For our application, we consider the high-granularity calorimeter from the CMS experiment at the CERN Large Hadron Collider. The advantage of the machine learning approach is in the flexibility and configurability of the algorithm. By changing the neural network weights, a unique data compression algorithm can be deployed for each sensor in different detector regions, and changing detector or collider conditions. To meet area, performance, and power constraints, we perform quantization-aware training to create an optimized neural network hardware implementation. The design is achieved through the use of high-level synthesis tools and the hls4ml framework, and was processed through synthesis and physical layout flows based on a LP CMOS 65 nm technology node. The flow anticipates 200 Mrad of ionizing radiation to select gates, and reports a total area of 3.6 mm$^2$ and consumes 95 mW of power. The simulated energy consumption per inference is 2.4 nJ. This is the first radiation tolerant on-detector ASIC implementation of a neural network that has been designed for particle physics applications.
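
The power and energy figures above can be related by a quick consistency check. The sketch assumes one inference per 25 ns LHC bunch crossing (a 40 MHz rate); that rate is not stated in the abstract, and the function name is hypothetical.

```python
def energy_per_inference_nj(power_mw, period_ns):
    """Energy per inference in nJ, given average power and inference period.

    Unit check: 1 mW * 1 ns = 1e-3 W * 1e-9 s = 1e-12 J = 1e-3 nJ.
    """
    return power_mw * period_ns * 1e-3


# Assumption: one inference every 25 ns (40 MHz), not given in the abstract.
energy_nj = energy_per_inference_nj(power_mw=95, period_ns=25)
print(round(energy_nj, 3))  # about 2.375 nJ
```

Under that assumption the result is close to the quoted 2.4 nJ per inference, so the two numbers appear mutually consistent.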

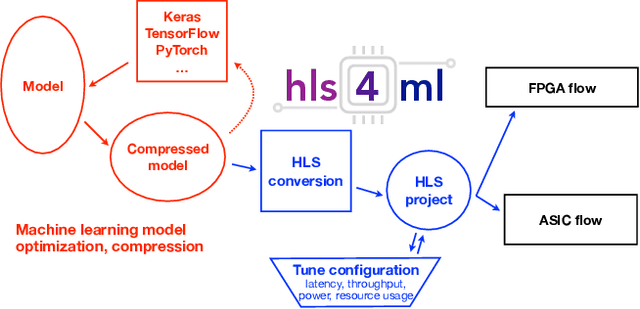

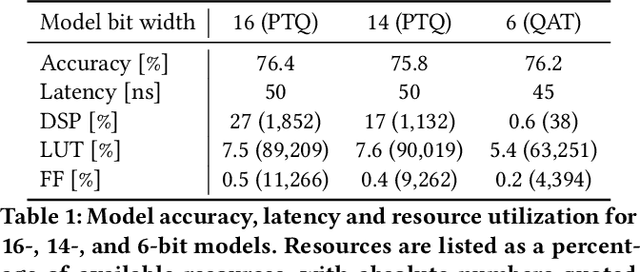

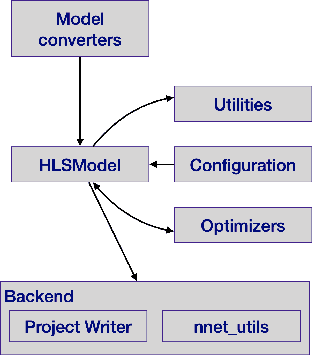

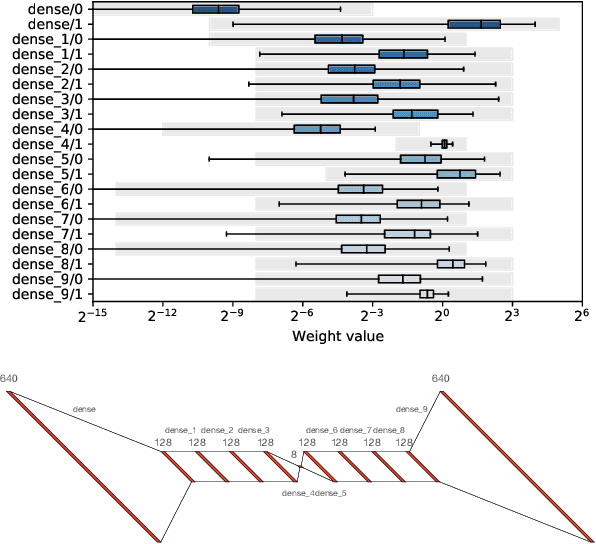

hls4ml: An Open-Source Codesign Workflow to Empower Scientific Low-Power Machine Learning Devices

Mar 23, 2021

Abstract: Accessible machine learning algorithms, software, and diagnostic tools for energy-efficient devices and systems are extremely valuable across a broad range of application domains. In scientific domains, real-time near-sensor processing can drastically improve experimental design and accelerate scientific discoveries. To support domain scientists, we have developed hls4ml, an open-source software-hardware codesign workflow to interpret and translate machine learning algorithms for implementation with both FPGA and ASIC technologies. We expand on previous hls4ml work by extending capabilities and techniques towards low-power implementations and increased usability: new Python APIs, quantization-aware pruning, end-to-end FPGA workflows, long pipeline kernels for low power, and new device backends including an ASIC workflow. Taken together, these and continued efforts in hls4ml will arm a new generation of domain scientists with accessible, efficient, and powerful tools for machine-learning-accelerated discovery.
