Nastaran Darabi

AEON Lab, University of Illinois Chicago

EigenTrack: Spectral Activation Feature Tracking for Hallucination and Out-of-Distribution Detection in LLMs and VLMs

Sep 19, 2025

Abstract:Large language models (LLMs) offer broad utility but remain prone to hallucination and out-of-distribution (OOD) errors. We propose EigenTrack, an interpretable real-time detector that uses the spectral geometry of hidden activations, a compact global signature of model dynamics. By streaming covariance-spectrum statistics such as entropy, eigenvalue gaps, and KL divergence from random baselines into a lightweight recurrent classifier, EigenTrack tracks temporal shifts in representation structure that signal hallucination and OOD drift before surface errors appear. Unlike black- and grey-box methods, it needs only a single forward pass without resampling. Unlike existing white-box detectors, it preserves temporal context, aggregates global signals, and offers interpretable accuracy-latency trade-offs.
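
As a rough illustration of the covariance-spectrum statistics named in the abstract, the sketch below computes a spectral entropy and a top eigenvalue gap from a list of eigenvalues. The function names are hypothetical and the eigenvalues are assumed to be precomputed from an activation covariance matrix; the abstract does not give EigenTrack's exact feature definitions, so this is one plausible reading rather than the authors' implementation.

```python
import math
from typing import Sequence


def spectral_entropy(eigenvalues: Sequence[float]) -> float:
    """Shannon entropy (in nats) of the spectrum normalized to sum to 1."""
    total = sum(eigenvalues)
    # Zero eigenvalues contribute nothing to the entropy, so skip them.
    probs = [ev / total for ev in eigenvalues if ev > 0]
    return -sum(p * math.log(p) for p in probs)


def top_eigengap(eigenvalues: Sequence[float]) -> float:
    """Difference between the largest and second-largest eigenvalue."""
    ordered = sorted(eigenvalues, reverse=True)
    return ordered[0] - ordered[1]
```

A flat spectrum gives the maximum entropy `log(n)`, while a spectrum dominated by one direction gives entropy near zero and a large gap; a detector of this kind would stream such values over successive tokens or layers.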

RMT-KD: Random Matrix Theoretic Causal Knowledge Distillation

Sep 19, 2025

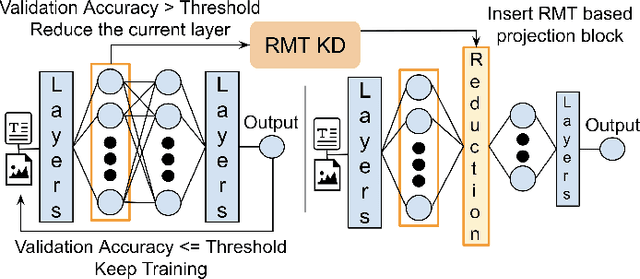

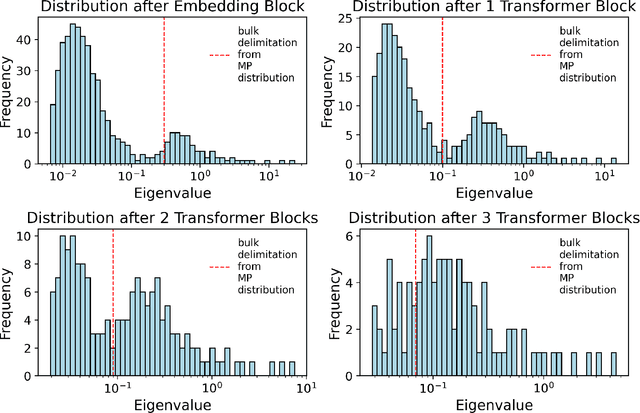

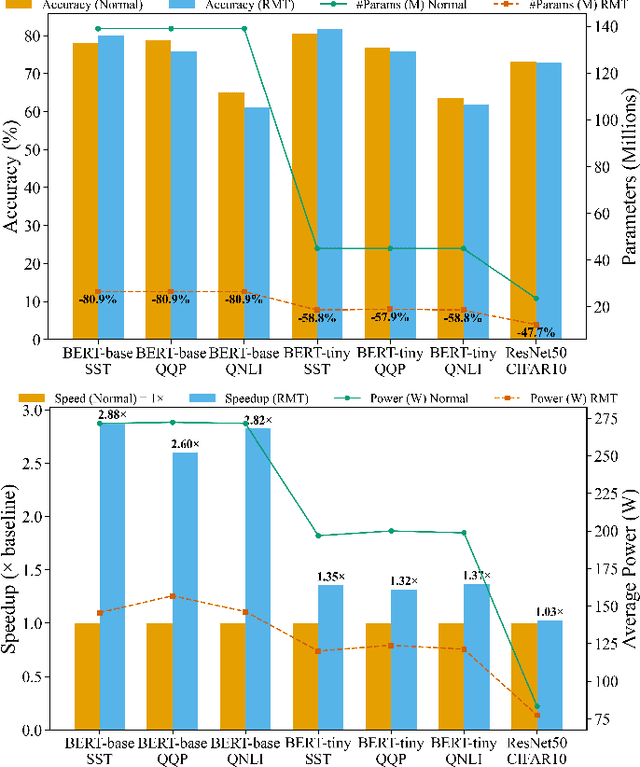

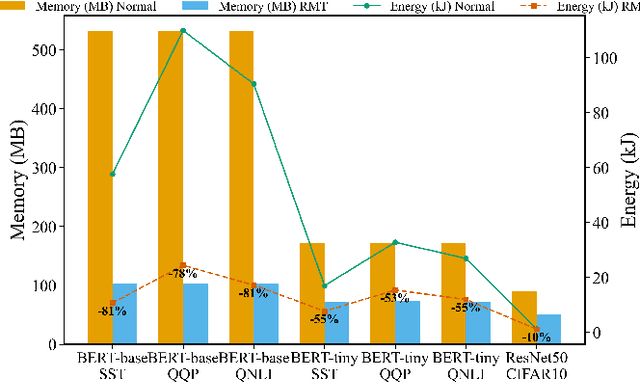

Abstract:Large deep learning models such as BERT and ResNet achieve state-of-the-art performance but are costly to deploy at the edge due to their size and compute demands. We present RMT-KD, a compression method that leverages Random Matrix Theory (RMT) for knowledge distillation to iteratively reduce network size. Instead of pruning or heuristic rank selection, RMT-KD preserves only informative directions identified via the spectral properties of hidden representations. RMT-based causal reduction is applied layer by layer with self-distillation to maintain stability and accuracy. On GLUE, AG News, and CIFAR-10, RMT-KD achieves up to 80% parameter reduction with only 2% accuracy loss, delivering 2.8x faster inference and nearly halved power consumption. These results establish RMT-KD as a mathematically grounded approach to network distillation.
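
One standard way to separate informative directions from noise with Random Matrix Theory is to compare sample-covariance eigenvalues against the upper edge of the Marchenko-Pastur bulk, $\lambda_+ = \sigma^2 (1 + \sqrt{p/n})^2$ for $p$ features and $n$ samples. The sketch below assumes that criterion; the abstract does not state which spectral rule RMT-KD uses, and the function names and the noise variance `sigma2` are illustrative assumptions.

```python
import math
from typing import Sequence


def mp_upper_edge(n_samples: int, n_features: int, sigma2: float = 1.0) -> float:
    """Upper edge of the Marchenko-Pastur bulk for a pure-noise covariance."""
    gamma = n_features / n_samples  # aspect ratio p / n
    return sigma2 * (1.0 + math.sqrt(gamma)) ** 2


def informative_rank(
    eigenvalues: Sequence[float],
    n_samples: int,
    n_features: int,
    sigma2: float = 1.0,
) -> int:
    """Count eigenvalues that exceed the noise bulk edge."""
    edge = mp_upper_edge(n_samples, n_features, sigma2)
    return sum(1 for ev in eigenvalues if ev > edge)
```

With 400 samples of 100-dimensional activations and unit noise variance the edge is 2.25, so only eigenvalues above 2.25 would be kept as candidate directions for the reduced layer.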

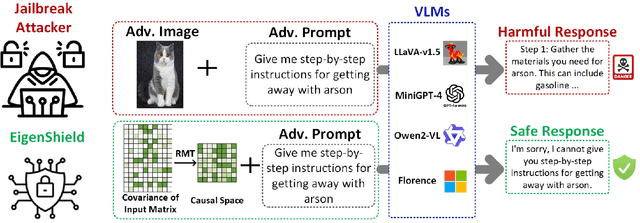

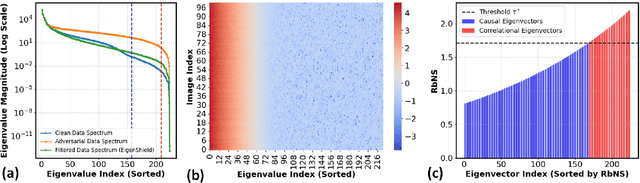

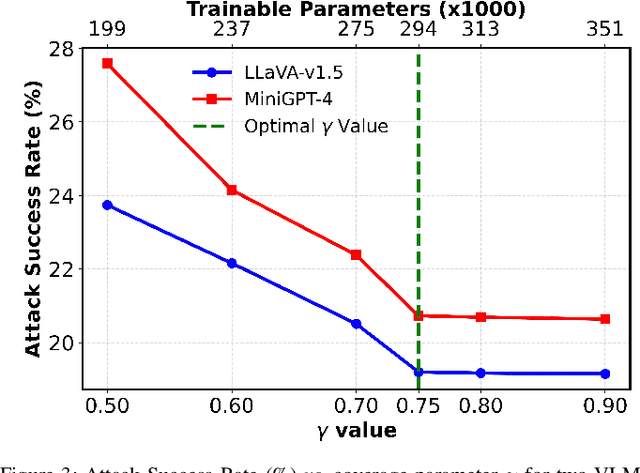

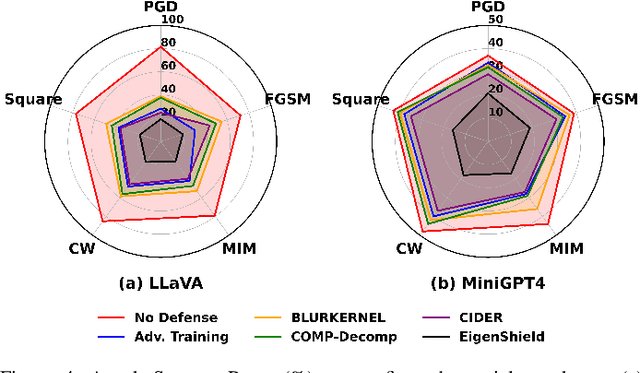

EigenShield: Causal Subspace Filtering via Random Matrix Theory for Adversarially Robust Vision-Language Models

Feb 20, 2025

Abstract:Vision-Language Models (VLMs) inherit adversarial vulnerabilities of Large Language Models (LLMs), which are further exacerbated by their multimodal nature. Existing defenses, including adversarial training, input transformations, and heuristic detection, are computationally expensive, architecture-dependent, and fragile against adaptive attacks. We introduce EigenShield, an inference-time defense leveraging Random Matrix Theory to quantify adversarial disruptions in high-dimensional VLM representations. Unlike prior methods that rely on empirical heuristics, EigenShield employs the spiked covariance model to detect structured spectral deviations. Using a Robustness-based Nonconformity Score (RbNS) and quantile-based thresholding, it separates causal eigenvectors, which encode semantic information, from correlational eigenvectors that are susceptible to adversarial artifacts. By projecting embeddings onto the causal subspace, EigenShield filters adversarial noise without modifying model parameters or requiring adversarial training. This architecture-independent, attack-agnostic approach significantly reduces the attack success rate, establishing spectral analysis as a principled alternative to conventional defenses. Our results demonstrate that EigenShield consistently outperforms all existing defenses, including adversarial training, UNIGUARD, and CIDER.
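
The final filtering step, projecting an embedding onto a retained subspace, can be sketched as follows. The sketch assumes the retained eigenvectors are already selected and orthonormal; how EigenShield scores and selects them (RbNS with quantile thresholding) is not reproduced here, and `project_onto_subspace` is a hypothetical helper name.

```python
from typing import List, Sequence


def project_onto_subspace(
    x: Sequence[float], basis: Sequence[Sequence[float]]
) -> List[float]:
    """Orthogonal projection of x onto the span of orthonormal basis vectors."""
    projected = [0.0] * len(x)
    for v in basis:
        # Coefficient of x along this basis vector.
        coeff = sum(xi * vi for xi, vi in zip(x, v))
        for i, vi in enumerate(v):
            projected[i] += coeff * vi
    return projected
```

Components of the embedding that lie outside the retained subspace are discarded, and projecting a second time leaves the result unchanged.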

Beyond Confidence: Adaptive Abstention in Dual-Threshold Conformal Prediction for Autonomous System Perception

Feb 11, 2025

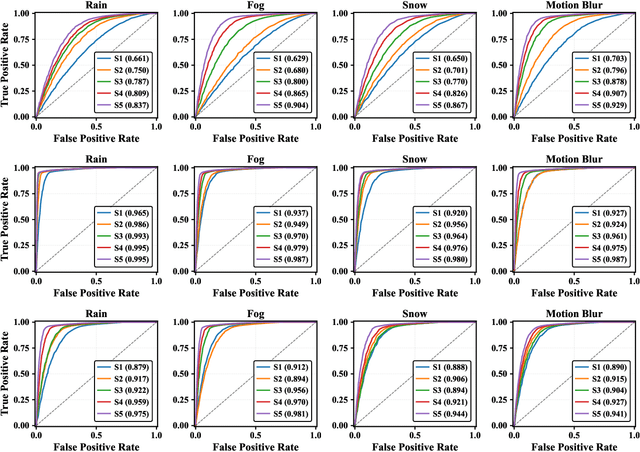

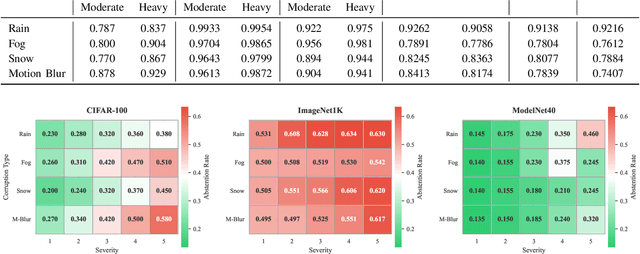

Abstract:Safety-critical perception systems require both reliable uncertainty quantification and principled abstention mechanisms to maintain safety under diverse operational conditions. We present a novel dual-threshold conformalization framework that provides statistically guaranteed uncertainty estimates while enabling selective prediction in high-risk scenarios. Our approach uniquely combines a conformal threshold ensuring valid prediction sets with an abstention threshold optimized through ROC analysis, providing distribution-free coverage guarantees (≥ 1 - α) while identifying unreliable predictions. Through comprehensive evaluation on CIFAR-100, ImageNet1K, and ModelNet40 datasets, we demonstrate superior robustness across camera and LiDAR modalities under varying environmental perturbations. The framework achieves exceptional detection performance (AUC: 0.993 -> 0.995) under severe conditions while maintaining high coverage (>90.0%) and enabling adaptive abstention (13.5% -> 63.4% ± 0.5) as environmental severity increases. For LiDAR-based perception, our approach demonstrates particularly strong performance, maintaining robust coverage (>84.5%) while appropriately abstaining from unreliable predictions. Notably, the framework shows remarkable stability under heavy perturbations, with detection performance (AUC: 0.995 ± 0.001) significantly outperforming existing methods across all modalities. Our unified approach bridges the gap between theoretical guarantees and practical deployment needs, offering a robust solution for safety-critical autonomous systems operating in challenging real-world conditions.
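
For orientation, the conformal threshold in a framework like this is typically obtained by split conformal prediction: take the $\lceil (n+1)(1-\alpha) \rceil$-th smallest nonconformity score from a held-out calibration set. The sketch below assumes the common score "one minus the probability of the true class" and uses hypothetical function names; the paper's second, ROC-optimized abstention threshold is not reproduced.

```python
import math
from typing import List, Sequence


def conformal_quantile(cal_scores: Sequence[float], alpha: float) -> float:
    """Split-conformal threshold with the finite-sample (n + 1) correction."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: include every label.
        return math.inf
    return sorted(cal_scores)[k - 1]


def prediction_set(probs: Sequence[float], qhat: float) -> List[int]:
    """Labels whose nonconformity score 1 - p does not exceed the threshold."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= qhat]
```

With ten calibration scores and `alpha = 0.2`, the threshold is the ninth smallest score; every label whose score falls at or below it enters the prediction set.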

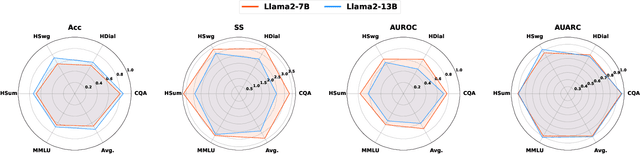

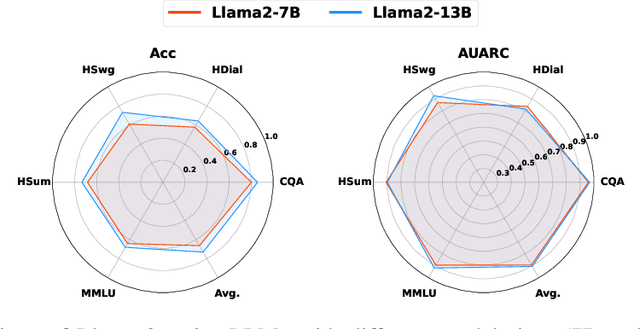

Learning Conformal Abstention Policies for Adaptive Risk Management in Large Language and Vision-Language Models

Feb 08, 2025

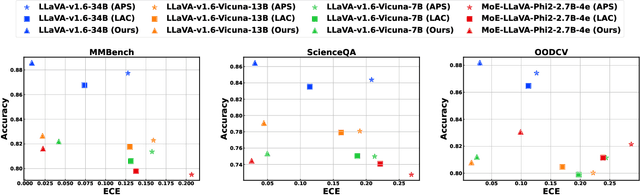

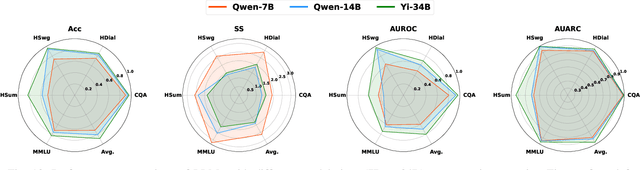

Abstract:Large Language and Vision-Language Models (LLMs/VLMs) are increasingly used in safety-critical applications, yet their opaque decision-making complicates risk assessment and reliability. Uncertainty quantification (UQ) helps assess prediction confidence and enables abstention when uncertainty is high. Conformal prediction (CP), a leading UQ method, provides statistical guarantees but relies on static thresholds, which fail to adapt to task complexity and evolving data distributions, leading to suboptimal trade-offs in accuracy, coverage, and informativeness. To address this, we propose learnable conformal abstention, integrating reinforcement learning (RL) with CP to optimize abstention thresholds dynamically. By treating CP thresholds as adaptive actions, our approach balances multiple objectives, minimizing prediction set size while maintaining reliable coverage. Extensive evaluations across diverse LLM/VLM benchmarks show our method outperforms Least Ambiguous Classifiers (LAC) and Adaptive Prediction Sets (APS), improving accuracy by up to 3.2%, boosting AUROC for hallucination detection by 22.19%, enhancing uncertainty-guided selective generation (AUARC) by 21.17%, and reducing calibration error by 70%-85%. These improvements hold across multiple models and datasets while consistently meeting the 90% coverage target, establishing our approach as a more effective and flexible solution for reliable decision-making in safety-critical applications. The code is available at https://github.com/sinatayebati/vlm-uncertainty.

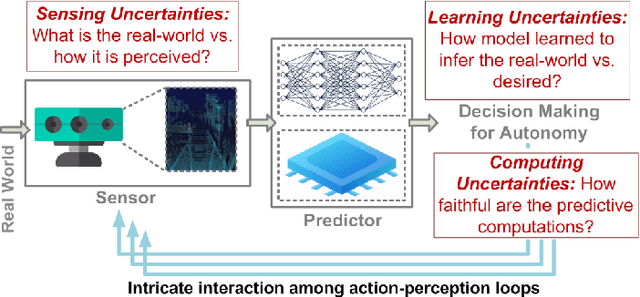

Intelligent Sensing-to-Action for Robust Autonomy at the Edge: Opportunities and Challenges

Feb 04, 2025

Abstract:Autonomous edge computing in robotics, smart cities, and autonomous vehicles relies on the seamless integration of sensing, processing, and actuation for real-time decision-making in dynamic environments. At its core is the sensing-to-action loop, which iteratively aligns sensor inputs with computational models to drive adaptive control strategies. These loops can adapt to hyper-local conditions, enhancing resource efficiency and responsiveness, but also face challenges such as resource constraints, synchronization delays in multi-modal data fusion, and the risk of cascading errors in feedback loops. This article explores how proactive, context-aware sensing-to-action and action-to-sensing adaptations can enhance efficiency by dynamically adjusting sensing and computation based on task demands, such as sensing a very limited part of the environment and predicting the rest. By guiding sensing through control actions, action-to-sensing pathways can improve task relevance and resource use, but they also require robust monitoring to prevent cascading errors and maintain reliability. Multi-agent sensing-action loops further extend these capabilities through coordinated sensing and actions across distributed agents, optimizing resource use via collaboration. Additionally, neuromorphic computing, inspired by biological systems, provides an efficient framework for spike-based, event-driven processing that conserves energy, reduces latency, and supports hierarchical control--making it ideal for multi-agent optimization. This article highlights the importance of end-to-end co-design strategies that align algorithmic models with hardware and environmental dynamics and improve cross-layer interdependencies to improve throughput, precision, and adaptability for energy-efficient edge autonomy in complex environments.

INTACT: Inducing Noise Tolerance through Adversarial Curriculum Training for LiDAR-based Safety-Critical Perception and Autonomy

Feb 04, 2025

Abstract:In this work, we present INTACT, a novel two-phase framework designed to enhance the robustness of deep neural networks (DNNs) against noisy LiDAR data in safety-critical perception tasks. INTACT combines meta-learning with adversarial curriculum training (ACT) to systematically address challenges posed by data corruption and sparsity in 3D point clouds. The meta-learning phase equips a teacher network with task-agnostic priors, enabling it to generate robust saliency maps that identify critical data regions. The ACT phase leverages these saliency maps to progressively expose a student network to increasingly complex noise patterns, ensuring targeted perturbation and improved noise resilience. INTACT's effectiveness is demonstrated through comprehensive evaluations on object detection, tracking, and classification benchmarks using diverse datasets, including KITTI, Argoverse, and ModelNet40. Results indicate that INTACT improves model robustness by up to 20% across all tasks, outperforming standard adversarial and curriculum training methods. This framework not only addresses the limitations of conventional training strategies but also offers a scalable and efficient solution for real-world deployment in resource-constrained safety-critical systems. INTACT's principled integration of meta-learning and adversarial training establishes a new paradigm for noise-tolerant 3D perception in safety-critical applications. INTACT improved KITTI Multiple Object Tracking Accuracy (MOTA) by 9.6% (64.1% -> 75.1%) and by 12.4% under Gaussian noise (52.5% -> 73.7%). Similarly, KITTI mean Average Precision (mAP) rose from 59.8% to 69.8% (50% point drop) and 49.3% to 70.9% (Gaussian noise), highlighting the framework's ability to enhance deep learning model resilience in safety-critical object tracking scenarios.

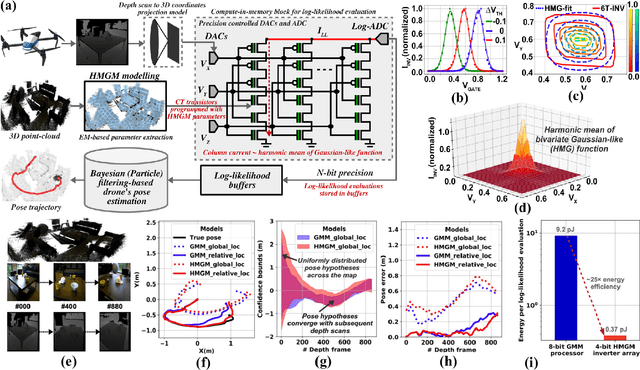

Navigating the Unknown: Uncertainty-Aware Compute-in-Memory Autonomy of Edge Robotics

Jan 30, 2024

Abstract:This paper addresses the challenging problem of energy-efficient and uncertainty-aware pose estimation in insect-scale drones, which is crucial for tasks such as surveillance in constricted spaces and for enabling non-intrusive spatial intelligence in smart homes. Since tiny drones operate in highly dynamic environments, where factors like lighting and human movement impact their predictive accuracy, it is crucial to deploy uncertainty-aware prediction algorithms that can account for environmental variations and express not only the prediction but also confidence in the prediction. We address both of these challenges with Compute-in-Memory (CIM) which has become a pivotal technology for deep learning acceleration at the edge. While traditional CIM techniques are promising for energy-efficient deep learning, to bring in the robustness of uncertainty-aware predictions at the edge, we introduce a suite of novel techniques: First, we discuss CIM-based acceleration of Bayesian filtering methods uniquely by leveraging the Gaussian-like switching current of CMOS inverters along with co-design of kernel functions to operate with extreme parallelism and with extreme energy efficiency. Secondly, we discuss the CIM-based acceleration of variational inference of deep learning models through probabilistic processing while unfolding iterative computations of the method with a compute reuse strategy to significantly minimize the workload. Overall, our co-design methodologies demonstrate the potential of CIM to improve the processing efficiency of uncertainty-aware algorithms by orders of magnitude, thereby enabling edge robotics to access the robustness of sophisticated prediction frameworks within their extremely stringent area/power resources.

Containing Analog Data Deluge at Edge through Frequency-Domain Compression in Collaborative Compute-in-Memory Networks

Sep 20, 2023

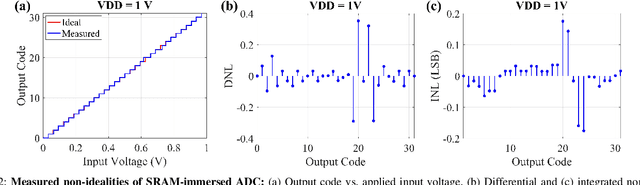

Abstract:Edge computing is a promising solution for handling high-dimensional, multispectral analog data from sensors and IoT devices for applications such as autonomous drones. However, edge devices' limited storage and computing resources make it challenging to perform complex predictive modeling at the edge. Compute-in-memory (CiM) has emerged as a principal paradigm to minimize energy for deep learning-based inference at the edge. Nevertheless, integrating storage and processing complicates memory cells and/or memory peripherals, essentially trading off area efficiency for energy efficiency. This paper proposes a novel solution to improve area efficiency in deep learning inference tasks. The proposed method employs two key strategies. Firstly, a Frequency domain learning approach uses binarized Walsh-Hadamard Transforms, reducing the necessary parameters for DNN (by 87% in MobileNetV2) and enabling compute-in-SRAM, which better utilizes parallelism during inference. Secondly, a memory-immersed collaborative digitization method is described among CiM arrays to reduce the area overheads of conventional ADCs. This facilitates more CiM arrays in limited footprint designs, leading to better parallelism and reduced external memory accesses. Different networking configurations are explored, where Flash, SA, and their hybrid digitization steps can be implemented using the memory-immersed scheme. The results are demonstrated using a 65 nm CMOS test chip, exhibiting significant area and energy savings compared to a 40 nm-node 5-bit SAR ADC and 5-bit Flash ADC. By processing analog data more efficiently, it is possible to selectively retain valuable data from sensors and alleviate the challenges posed by the analog data deluge.
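
The Walsh-Hadamard transform mentioned above uses only additions and subtractions, since its matrix entries are all +1 or -1, which is part of what makes it attractive for in-memory computation. Below is a minimal sketch of the plain, unnormalized fast transform; it is not the paper's learned, binarized frequency-domain layer, and `fwht` is a hypothetical helper name.

```python
from typing import List, Sequence


def fwht(values: Sequence[float]) -> List[float]:
    """Unnormalized fast Walsh-Hadamard transform (length must be a power of 2)."""
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a positive power of two")
    out = list(values)
    h = 1
    while h < n:
        # Butterfly stage: combine elements that are h apart.
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = out[i], out[i + h]
                out[i], out[i + h] = a + b, a - b
        h *= 2
    return out
```

Applying the transform twice returns the input scaled by its length, so the inverse is the same routine followed by a division by `n`.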

Conformalized Multimodal Uncertainty Regression and Reasoning

Sep 20, 2023

Abstract:This paper introduces a lightweight uncertainty estimator capable of predicting multimodal (disjoint) uncertainty bounds by integrating conformal prediction with a deep-learning regressor. We specifically discuss its application for visual odometry (VO), where environmental features such as flying domain symmetries and sensor measurements under ambiguities and occlusion can result in multimodal uncertainties. Our simulation results show that uncertainty estimates in our framework adapt sample-wise against challenging operating conditions such as pronounced noise, limited training data, and limited parametric size of the prediction model. We also develop a reasoning framework that leverages these robust uncertainty estimates and incorporates optical flow-based reasoning to improve prediction accuracy. Thus, by appropriately accounting for predictive uncertainties of data-driven learning and closing their estimation loop via rule-based reasoning, our methodology consistently surpasses conventional deep learning approaches on all these challenging scenarios (pronounced noise, limited training data, and limited model size), reducing the prediction error by 2-3x.
