Alexandra Boltasseva

End-to-end workflow for machine learning-based qubit readout with QICK and hls4ml

Jan 24, 2025

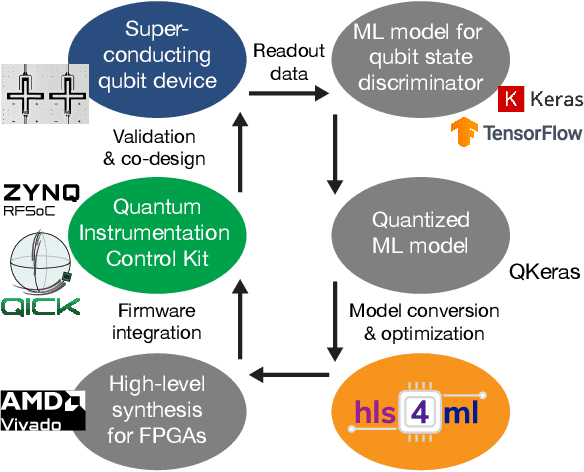

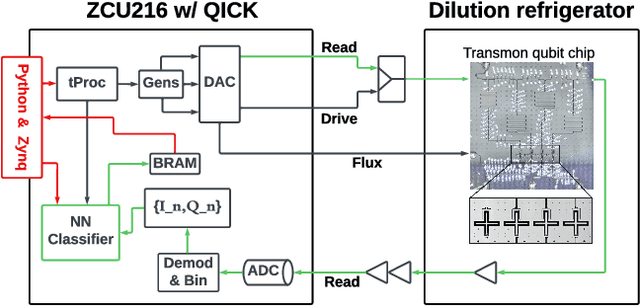

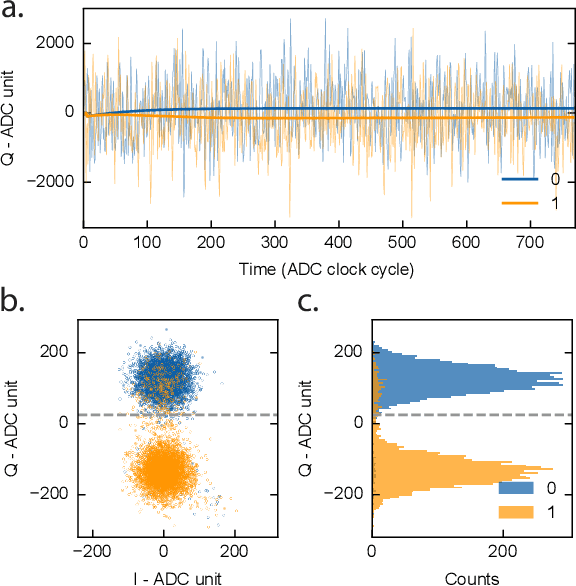

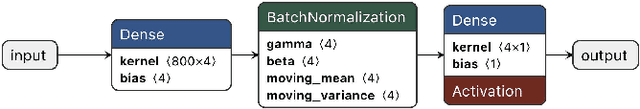

Abstract: We present an end-to-end workflow for superconducting qubit readout that embeds co-designed neural networks (NNs) into the Quantum Instrumentation Control Kit (QICK). Capitalizing on the custom firmware and software of the QICK platform, which is built on Xilinx RFSoC FPGAs, we aim to leverage machine learning (ML) to address critical challenges in qubit readout accuracy and scalability. The workflow utilizes the hls4ml package and employs quantization-aware training to translate ML models into hardware-efficient FPGA implementations via user-friendly Python APIs. We experimentally demonstrate the design, optimization, and integration of an ML algorithm for single transmon qubit readout, achieving 96% single-shot fidelity with a latency of 32 ns and less than 16% FPGA look-up table resource utilization. Our results offer the community an accessible workflow to advance ML-driven readout and adaptive control in quantum information processing applications.
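Quantization-aware training, mentioned in the abstract above, relies on rounding weights and activations to a fixed-point grid during the forward pass. The following is a minimal sketch of that rounding step only, not the paper's implementation. The function name `quantize_fixed_point` and the 8-bit/3-bit widths are hypothetical, and the round-to-nearest with saturation behavior is an assumption chosen for illustration.

```python
def quantize_fixed_point(value, total_bits=8, integer_bits=3):
    """Round `value` to a signed fixed-point grid (illustrative sketch).

    total_bits and integer_bits are hypothetical widths; integer_bits
    includes the sign bit. Rounding is to nearest, overflow saturates.
    """
    if integer_bits < 1 or total_bits < integer_bits:
        raise ValueError("need 1 <= integer_bits <= total_bits")
    frac_bits = total_bits - integer_bits
    step = 2.0 ** -frac_bits                  # smallest representable increment
    lowest = -(2.0 ** (integer_bits - 1))     # most negative representable value
    highest = 2.0 ** (integer_bits - 1) - step
    quantized = round(value / step) * step    # snap to the nearest grid point
    return min(max(quantized, lowest), highest)


if __name__ == "__main__":
    for x in (0.3, 3.99, 100.0, -100.0):
        print(x, "->", quantize_fixed_point(x))
```

Fewer fractional bits reduce FPGA resource use at the cost of a coarser grid, which is the trade-off quantization-aware training lets the network adapt to.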

PearSAN: A Machine Learning Method for Inverse Design using Pearson Correlated Surrogate Annealing

Dec 26, 2024

Abstract: PearSAN is a machine learning-assisted optimization algorithm applicable to inverse design problems with large design spaces, where traditional optimizers struggle. The algorithm leverages the latent space of a generative model for rapid sampling and employs a Pearson correlated surrogate model to predict the figure of merit of the true design metric. As a showcase example, PearSAN is applied to thermophotovoltaic (TPV) metasurface design by matching the working bands between a thermal radiator and a photovoltaic cell. PearSAN can work with any pretrained generative model with a discretized latent space, making it easy to integrate with VQ-VAEs and binary autoencoders. Its novel Pearson correlational loss can be used as both a latent regularization method, similar to batch and layer normalization, and as a surrogate training loss. We compare both to previous energy matching losses, which are shown to enforce poor regularization and performance, even with upgraded affine parameters. PearSAN achieves a state-of-the-art maximum design efficiency of 97%, and is at least an order of magnitude faster than previous methods, with an improved maximum figure-of-merit gain.
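The abstract does not give the exact form of the Pearson correlational loss. As a hedged illustration of the general idea, a correlation-based loss can be written as one minus the Pearson coefficient between surrogate predictions and true figures of merit. The names `pearson_correlation` and `pearson_loss` below are hypothetical, and this generic form is an assumption rather than PearSAN's definition.

```python
import math


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    covariance = sum(a * b for a, b in zip(dx, dy))
    norm = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if norm == 0.0:
        raise ValueError("correlation is undefined for constant input")
    return covariance / norm


def pearson_loss(predicted, target):
    """Assumed generic form: 0 when perfectly correlated, 2 when anti-correlated."""
    return 1.0 - pearson_correlation(predicted, target)


if __name__ == "__main__":
    surrogate = [0.1, 0.4, 0.35, 0.8]   # made-up surrogate outputs
    true_fom = [0.2, 0.5, 0.45, 0.9]    # made-up figures of merit
    print(pearson_loss(surrogate, true_fom))
```

Because the coefficient is invariant to shifting and positively rescaling either input, a loss of this kind rewards the surrogate for ranking designs correctly rather than for matching absolute values.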

Non-native Quantum Generative Optimization with Adversarial Autoencoders

Jul 18, 2024

Abstract: Large-scale optimization problems are prevalent in several fields, including engineering, finance, and logistics. However, most optimization problems cannot be efficiently encoded onto a physical system because the existing quantum samplers have too few qubits. Another typical limiting factor is that the optimization constraints are not compatible with the native cost Hamiltonian. This work presents a new approach to address these challenges. We introduce the adversarial quantum autoencoder model (AQAM) that can be used to map large-scale optimization problems onto existing quantum samplers while simultaneously optimizing the problem through latent quantum-enhanced Boltzmann sampling. We demonstrate the AQAM on a neutral atom sampler, and showcase the model by optimizing 64 px by 64 px unit cells that represent a broad-angle filter metasurface applicable to improving the coherence of neutral atom devices. Using 12-atom simulations, we demonstrate that the AQAM achieves a lower Rényi divergence and a larger spectral gap when compared to classical Markov Chain Monte Carlo samplers. Our work paves the way to more efficient mapping of conventional optimization problems into existing quantum samplers.

Machine learning assisted quantum super-resolution microscopy

Jul 07, 2021

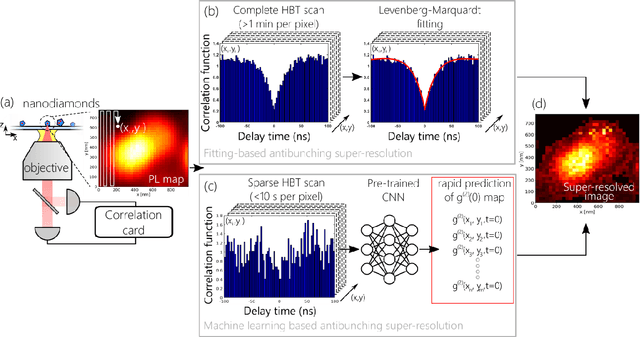

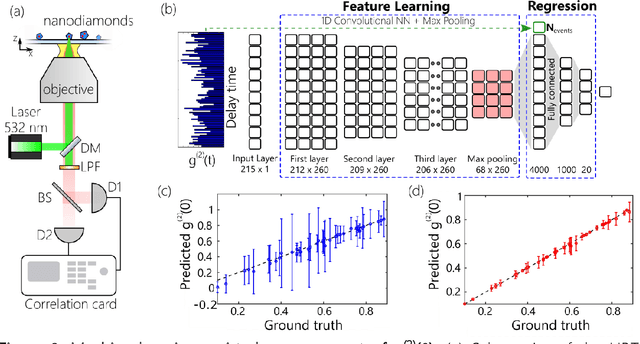

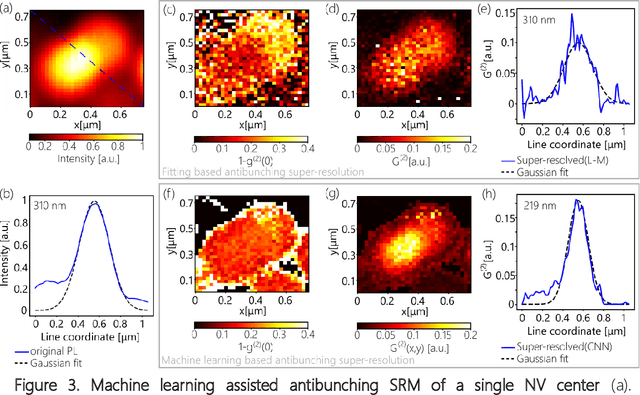

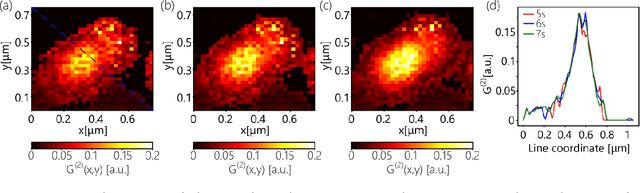

Abstract: One of the main characteristics of optical imaging systems is the spatial resolution, which is restricted by the diffraction limit to approximately half the wavelength of the incident light. Along with the recently developed classical super-resolution techniques, which aim at breaking the diffraction limit in classical systems, there is a class of quantum super-resolution techniques that leverage the non-classical nature of the optical signals radiated by quantum emitters, known as antibunching super-resolution microscopy. This approach can provide a factor of $\sqrt{n}$ improvement in the spatial resolution by measuring the $n$-th order autocorrelation function. The main bottleneck of antibunching super-resolution microscopy is the time-consuming acquisition of multi-photon event histograms. We present a machine learning-assisted approach for the realization of rapid antibunching super-resolution imaging and demonstrate a 12-fold speed-up compared to conventional, fitting-based autocorrelation measurements. The developed framework paves the way to the practical realization of scalable quantum super-resolution imaging devices that can be compatible with various types of quantum emitters.
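The scaling stated in the abstract can be worked through numerically. This sketch takes the diffraction limit as half the wavelength and divides it by $\sqrt{n}$ for an $n$-th order autocorrelation measurement. The function name `antibunching_resolution` and the 600 nm wavelength are hypothetical, chosen only for illustration.

```python
import math


def antibunching_resolution(wavelength_nm, order):
    """Idealized resolution for an n-th order autocorrelation measurement.

    Assumes a diffraction limit of wavelength / 2, improved by sqrt(order),
    as stated in the abstract; real systems will deviate from this ideal.
    """
    if order < 1:
        raise ValueError("autocorrelation order must be >= 1")
    diffraction_limit_nm = wavelength_nm / 2.0
    return diffraction_limit_nm / math.sqrt(order)


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        print(n, antibunching_resolution(600.0, n))
```

For the assumed 600 nm emission, the second-order measurement gives roughly 212 nm and the fourth-order measurement gives 150 nm, compared with 300 nm at the diffraction limit.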
