Nabil Alshurafa

THOR: Thermal-guided Hand-Object Reasoning via Adaptive Vision Sampling

Jul 08, 2025

Abstract: Wearable cameras are increasingly used as an observational and interventional tool for human behaviors by providing detailed visual data of hand-related activities. This data can be leveraged to facilitate memory recall for logging of behavior or timely interventions aimed at improving health. However, continuous processing of RGB images from these cameras consumes significant power, which shortens battery life, generates a large volume of unnecessary video data for post-processing, raises privacy concerns, and requires substantial computational resources for real-time analysis. We introduce THOR, a real-time adaptive spatio-temporal RGB frame sampling method that leverages thermal sensing to capture hand-object patches and classify them in real time. We use low-resolution thermal camera data to identify moments when a person switches from one hand-related activity to another, and adjust the RGB frame sampling rate by increasing it during activity transitions and reducing it during periods of sustained activity. Additionally, we use the thermal cues from the hand to localize the region of interest (i.e., the hand-object interaction) in each RGB frame, allowing the system to crop and process only the necessary part of the image for activity recognition. We develop a wearable device to validate our method through an in-the-wild study with 14 participants and over 30 activities, and further evaluate it on Ego4D (923 participants across 9 countries, totaling 3,670 hours of video). Our results show that using only 3% of the original RGB video data, our method captures all the activity segments and achieves a hand-related activity recognition F1-score (95%) comparable to that obtained with the entire RGB video (94%). Our work provides a more practical path for the longitudinal use of wearable cameras to monitor hand-related activities and health-risk behaviors in real time.
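The sampling idea in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the change metric (mean absolute per-pixel difference), the function names, the thresholds, and the frame rates are all hypothetical placeholders chosen for illustration. The sketch raises the RGB sampling rate when consecutive thermal frames differ strongly (a possible activity transition), lowers it otherwise, and derives a hand bounding box from warm pixels.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]  # low-resolution thermal frame, degrees Celsius


def frame_change(prev: Frame, curr: Frame) -> float:
    """Mean absolute per-pixel temperature difference between two frames."""
    total = 0.0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += abs(c - p)
            count += 1
    return total / count if count else 0.0


def choose_rgb_rate(prev: Frame, curr: Frame,
                    threshold: float = 0.5,   # assumed value, not from the paper
                    high_fps: float = 5.0,    # assumed value, not from the paper
                    low_fps: float = 0.2) -> float:  # assumed value
    """Return a high RGB rate on large thermal change, a low rate otherwise."""
    return high_fps if frame_change(prev, curr) > threshold else low_fps


def hand_roi(frame: Frame,
             min_temp: float = 30.0) -> Optional[Tuple[int, int, int, int]]:
    """Inclusive bounding box (top, left, bottom, right) of warm pixels.

    Pixels at or above min_temp (an assumed skin-temperature cutoff) are
    treated as hand pixels. Returns None when no pixel qualifies.
    """
    rows = [r for r, row in enumerate(frame) if any(v >= min_temp for v in row)]
    if not rows:
        return None
    cols = [c for row in frame for c, v in enumerate(row) if v >= min_temp]
    return (min(rows), min(cols), max(rows), max(cols))
```

In a real system the box returned by `hand_roi` would still need to be mapped from thermal to RGB pixel coordinates, which depends on the calibration between the two cameras and is left out here.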

Deep Learning in Human Activity Recognition with Wearable Sensors: A Review on Advances

Nov 11, 2021

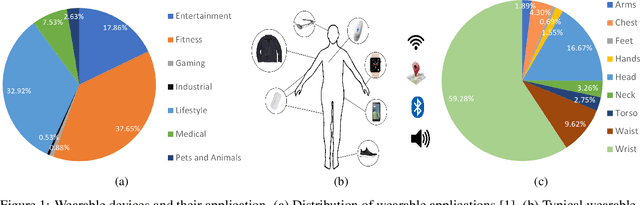

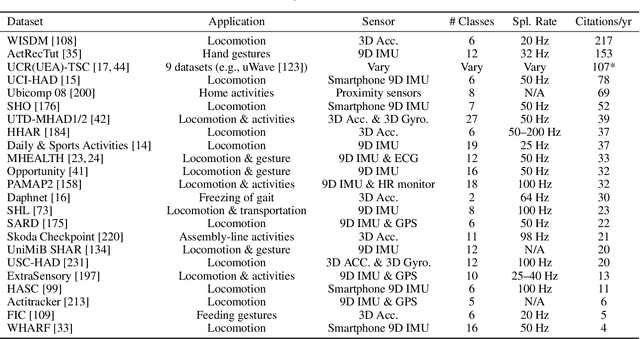

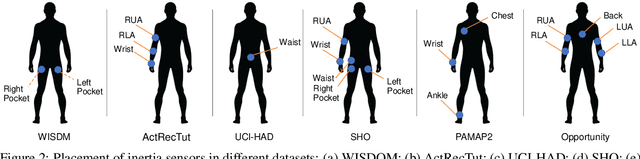

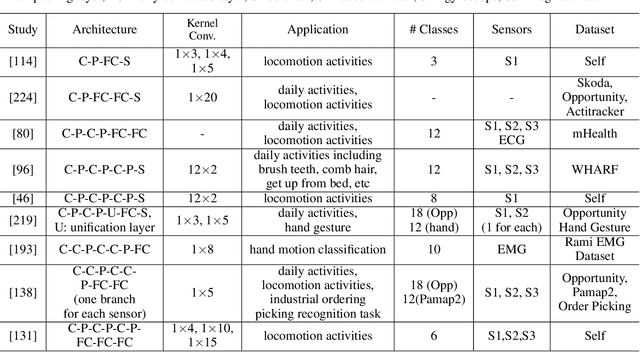

Abstract: Mobile and wearable devices have enabled numerous applications, including activity tracking, wellness monitoring, and human-computer interaction, that measure and improve our daily lives. Many of these applications are made possible by leveraging the rich collection of low-power sensors found in many mobile and wearable devices to perform human activity recognition (HAR). Recently, deep learning has greatly pushed the boundaries of HAR on mobile and wearable devices. This paper systematically categorizes and summarizes existing work that introduces deep learning methods for wearables-based HAR and provides a comprehensive analysis of the current advancements, developing trends, and major challenges. We also present cutting-edge frontiers and future directions for deep learning-based HAR.

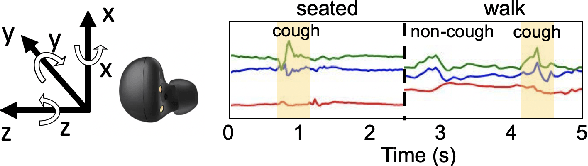

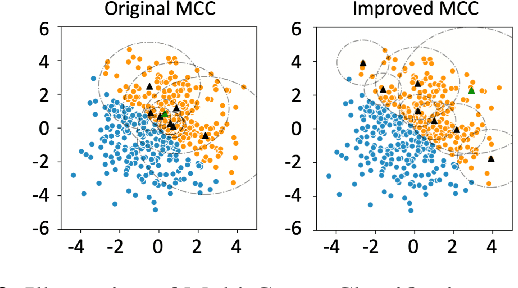

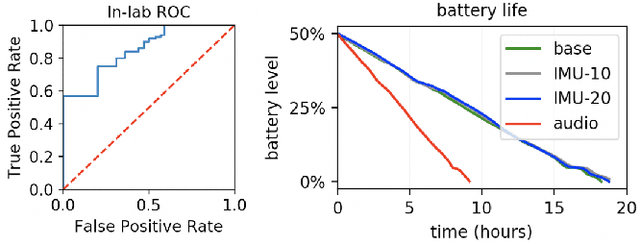

CoughTrigger: Earbuds IMU Based Cough Detection Activator Using An Energy-efficient Sensitivity-prioritized Time Series Classifier

Nov 07, 2021

Abstract: Persistent coughs are a major symptom of respiratory-related diseases. Increasing research attention has been paid to detecting coughs using wearables, especially during the COVID-19 pandemic. Among all types of sensors utilized, the microphone is the most widely used to detect coughs. However, the intense power consumption needed to process audio signals hinders continuous audio-based cough detection on battery-limited commercial wearable products, such as earbuds. We present CoughTrigger, which utilizes a lower-power sensor, an inertial measurement unit (IMU), in earbuds as a cough detection activator to trigger a higher-power sensor for audio processing and classification. It is able to run continuously as a standby service with minimal battery consumption and trigger the audio-based cough detection when a candidate cough is detected from the IMU. In addition, using the IMU improves the specificity of cough detection. Experiments were conducted on 45 subjects, and our IMU-based model achieved an AUC of 0.77 under leave-one-subject-out evaluation. We also validated its effectiveness on free-living data and through on-device implementation.
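The activator pattern described here (a cheap always-on stage that gates an expensive stage) can be sketched in Python as follows. This is an illustration under stated assumptions, not CoughTrigger itself: the paper uses a sensitivity-prioritized time series classifier on the IMU signal, whereas this sketch substitutes a simple variance threshold, and `audio_classifier`, `energy_threshold`, and the window format are hypothetical.

```python
from typing import Callable, List, Sequence


def window_energy(samples: Sequence[float]) -> float:
    """Variance of the window: mean squared deviation from the window mean."""
    if not samples:
        return 0.0
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)


def run_two_stage(imu_windows: Sequence[Sequence[float]],
                  audio_classifier: Callable[[int], bool],
                  energy_threshold: float = 0.5) -> List[bool]:
    """Run the costly audio stage only on windows flagged by the IMU stage.

    audio_classifier is a hypothetical callback that takes the window index
    and returns True when the audio for that window contains a cough.
    energy_threshold is an assumed value; a low threshold favors sensitivity,
    so few true coughs are dropped before the audio stage.
    """
    results: List[bool] = []
    for index, window in enumerate(imu_windows):
        if window_energy(window) >= energy_threshold:
            results.append(audio_classifier(index))  # high-power path
        else:
            results.append(False)  # audio stage stays off
    return results
```

Because the first stage only decides whether to wake the second, a missed cough at the IMU stage cannot be recovered later, which is why the activator is tuned for sensitivity rather than precision.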

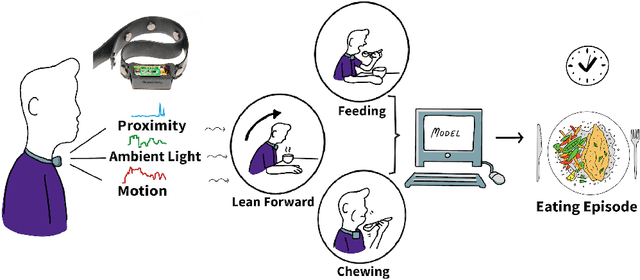

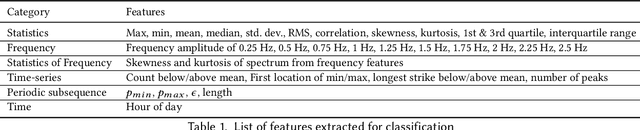

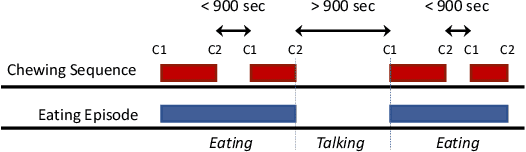

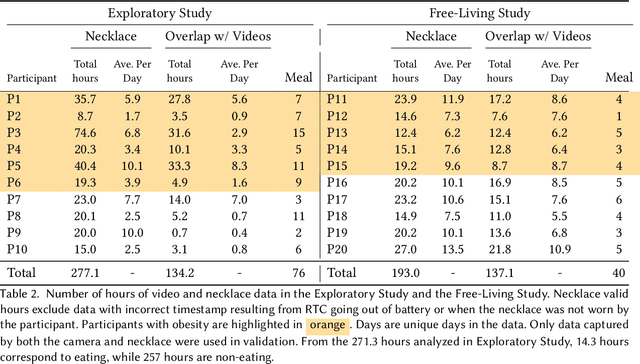

NeckSense: A Multi-Sensor Necklace for Detecting Eating Activities in Free-Living Conditions

Nov 20, 2019

Abstract: We present the design, implementation, and evaluation of a multi-sensor low-power necklace, 'NeckSense', for automatically and unobtrusively capturing fine-grained information about an individual's eating activity and eating episodes across an entire waking day in a naturalistic setting. NeckSense fuses and classifies the proximity of the necklace to the chin, the ambient light, the Lean Forward Angle, and the energy signals to determine chewing sequences, a building block of the eating activity. It then clusters the identified chewing sequences to determine eating episodes. We tested NeckSense with 11 obese and 9 non-obese participants across two studies, where we collected more than 470 hours of data in a naturalistic setting. Our results demonstrate that NeckSense enables reliable eating detection for an entire waking day, even in free-living environments. Overall, our system achieves an F1-score of 81.6% in detecting eating episodes in an exploratory study. Moreover, our system achieves an F1-score of 77.1% for episodes even in an all-day free-living setting. With more than 15.8 hours of battery life, NeckSense will allow researchers and dietitians to better understand natural chewing and eating behaviors, and also enable real-time interventions.
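The step from chewing sequences to eating episodes can be pictured with a short Python sketch. The abstract does not say which clustering method NeckSense uses, so this example assumes a simple temporal-gap rule: chewing sequences separated by less than a chosen gap belong to the same episode. The function name and the `max_gap_s` value are hypothetical.

```python
from typing import Iterable, List, Tuple

Interval = Tuple[float, float]  # (start_seconds, end_seconds)


def group_into_episodes(sequences: Iterable[Interval],
                        max_gap_s: float = 900.0) -> List[Interval]:
    """Merge chewing sequences into episodes using a maximum time gap.

    max_gap_s is an assumed value (15 minutes), not taken from the paper.
    """
    episodes: List[Interval] = []
    for start, end in sorted(sequences):
        if episodes and start - episodes[-1][1] <= max_gap_s:
            # Close enough to the previous episode: extend it.
            last_start, last_end = episodes[-1]
            episodes[-1] = (last_start, max(last_end, end))
        else:
            # Gap too large (or first sequence): start a new episode.
            episodes.append((start, end))
    return episodes
```

For example, chewing sequences at `(0, 60)`, `(120, 200)`, and `(5000, 5100)` seconds yield two episodes, `(0, 200)` and `(5000, 5100)`, under the default gap.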
