Yongyi Zhao

Angle Sensitive Pixels for Lensless Imaging on Spherical Sensors

Jun 28, 2023

Abstract: We propose OrbCam, a lensless architecture for imaging with spherical sensors. Prior work on lensless imaging has focused largely on planar sensors; for such designs, it is important to use a modulation element, e.g. an amplitude or phase mask, to construct an invertible imaging system. In contrast, we show that the diversity of pixel orientations on a curved surface is sufficient to improve the conditioning of the mapping between the scene and the sensor. Hence, when imaging on a spherical sensor, all pixels can have the same angular response function, so that the lensless imager consists of pixels that are identical to each other and differ only in their orientations. We provide the computational tools for designing the angular response of the pixels in a spherical sensor so that it leads to well-conditioned and noise-robust measurements. We validate our design both in simulation and with a lab prototype. The implication of our design is that lensless imaging can be enabled easily for curved and flexible surfaces, thereby opening up a new set of application domains.
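The conditioning argument in this abstract can be illustrated with a small 2D toy model. The sketch below is not the OrbCam design: the far-field assumption, the lobe shape in `angular_response`, the parameter `kappa`, and the circular pixel layout are all hypothetical choices made for illustration. It compares a flat sensor with identical bare pixels against a curved sensor whose identical pixels differ only in orientation.

```python
import numpy as np

def angular_response(cos_angle, kappa=20.0):
    # Hypothetical smooth lobe that peaks when light arrives along the pixel normal.
    return np.exp(kappa * (cos_angle - 1.0))

def measurement_matrix(pixel_angles, scene_angles, kappa=20.0):
    # Row i holds the response of pixel i to far-field light from each scene direction.
    diff = pixel_angles[:, None] - scene_angles[None, :]
    return angular_response(np.cos(diff), kappa)

n = 32
scene = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)

# Planar sensor without a mask: every pixel normal points the same way,
# so in the far field every pixel records the same weighted sum of the scene.
planar = measurement_matrix(np.zeros(n), scene)

# Curved sensor (a circle in this 2D toy): normals are spread over all directions.
curved = measurement_matrix(scene.copy(), scene)

rank_planar = np.linalg.matrix_rank(planar)
rank_curved = np.linalg.matrix_rank(curved)
cond_curved = np.linalg.cond(curved)
print(rank_planar, rank_curved, cond_curved)
```

In this toy setup the planar matrix has identical rows and therefore cannot be inverted, while the curved matrix gains rank purely from orientation diversity. How well conditioned it is depends on the chosen lobe, which is the kind of design question the abstract says the computational tools address.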

PANDORA: Polarization-Aided Neural Decomposition Of Radiance

Mar 25, 2022

Abstract: Reconstructing an object's geometry and appearance from multiple images, also known as inverse rendering, is a fundamental problem in computer graphics and vision. Inverse rendering is inherently ill-posed because the captured image is an intricate function of unknown lighting conditions, material properties and scene geometry. Recent progress in representing scene properties as coordinate-based neural networks has facilitated neural inverse rendering, resulting in impressive geometry reconstruction and novel-view synthesis. Our key insight is that polarization is a useful cue for neural inverse rendering, as polarization strongly depends on surface normals and is distinct for diffuse and specular reflectance. With the advent of commodity, on-chip polarization sensors, capturing polarization has become practical. Thus, we propose PANDORA, a polarimetric inverse rendering approach based on implicit neural representations. From multi-view polarization images of an object, PANDORA jointly extracts the object's 3D geometry, separates the outgoing radiance into diffuse and specular components, and estimates the illumination incident on the object. We show that PANDORA outperforms state-of-the-art radiance decomposition techniques. PANDORA outputs clean surface reconstructions free from texture artefacts, models strong specularities accurately and estimates illumination under practical unstructured scenarios.
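As background on the polarization cue, the following is a minimal sketch of how linear polarization quantities are commonly computed from the four polarizer orientations (0°, 45°, 90°, 135°) typical of on-chip polarization sensors. It uses the standard linear Stokes relations and is not PANDORA's pipeline; the function names are hypothetical and ideal polarizers are assumed.

```python
import numpy as np

def linear_stokes(i0, i45, i90, i135):
    # Linear Stokes components from intensities behind ideal polarizers.
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1 = i0 - i90
    s2 = i45 - i135
    return s0, s1, s2

def dolp_aolp(s0, s1, s2):
    # Degree and angle of linear polarization.
    dolp = np.sqrt(s1**2 + s2**2) / s0
    aolp = 0.5 * np.arctan2(s2, s1)
    return dolp, aolp

def polarizer_intensity(s0, dolp, aolp, theta):
    # Intensity of partially linearly polarized light behind an ideal polarizer at angle theta.
    return 0.5 * s0 * (1.0 + dolp * np.cos(2.0 * (theta - aolp)))

# Synthetic pixel: 60% linearly polarized at 30 degrees.
true_s0, true_dolp, true_aolp = 2.0, 0.6, np.deg2rad(30.0)
angles = np.deg2rad([0.0, 45.0, 90.0, 135.0])
i0, i45, i90, i135 = (polarizer_intensity(true_s0, true_dolp, true_aolp, t) for t in angles)

s0, s1, s2 = linear_stokes(i0, i45, i90, i135)
dolp, aolp = dolp_aolp(s0, s1, s2)
print(s0, dolp, np.rad2deg(aolp))
```

The degree and angle of linear polarization recovered this way are the per-pixel quantities that vary with surface normal and reflectance type, which is why they can serve as an extra constraint for inverse rendering.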

High Resolution, Deep Imaging Using Confocal Time-of-flight Diffuse Optical Tomography

Jan 27, 2021

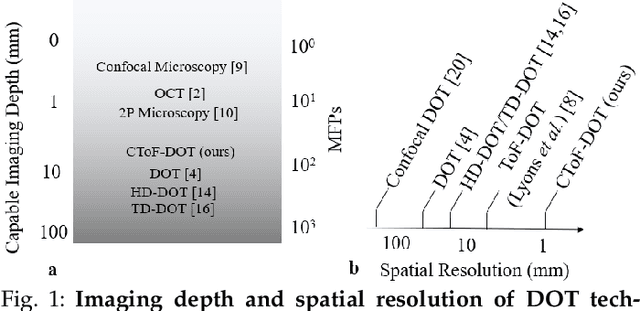

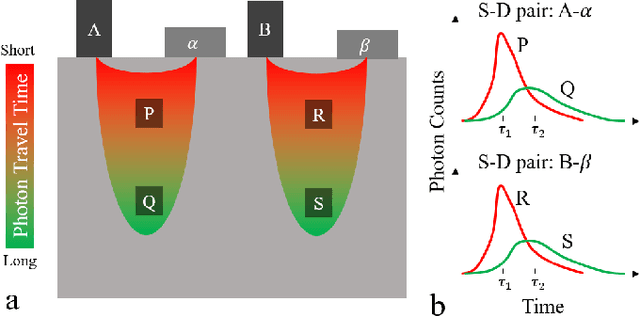

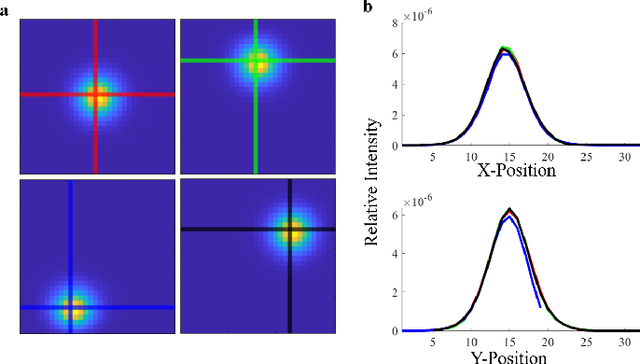

Abstract: Light scattering by tissue severely limits both how deep beneath the surface one can image and the spatial resolution one can obtain. Diffuse optical tomography (DOT) has emerged as one of the most powerful techniques for imaging deep within tissue, well beyond the conventional $\sim$10-15 mean scattering lengths tolerated by ballistic imaging techniques such as confocal and two-photon microscopy. Unfortunately, existing DOT systems are quite limited and achieve only centimeter-scale resolution. Furthermore, they suffer from slow acquisition times and extremely slow reconstruction speeds, making real-time imaging infeasible. We show that time-of-flight diffuse optical tomography (ToF-DOT) and its confocal variant (CToF-DOT), by exploiting photon travel-time information, allow us to achieve millimeter spatial resolution in the highly scattering diffusion regime ($>50$ mean free paths). In addition, we demonstrate that two further innovations, focusing on confocal measurements and multiplexing the illumination sources, allow us to significantly reduce the scan time needed to acquire measurements. Finally, we rely on a novel convolutional approximation that allows us to develop a fast reconstruction algorithm, achieving a $100\times$ speedup in reconstruction time compared to traditional DOT reconstruction techniques. Together, we believe that these technical advances serve as the first step towards real-time, millimeter-resolution, deep-tissue imaging using diffuse optical tomography.
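To see why a convolutional forward model can speed up reconstruction, consider the generic 1D sketch below. It is not the CToF-DOT algorithm: the Gaussian `kernel`, the circular boundary, and the Tikhonov weight `lam` are hypothetical stand-ins. The point is only that a shift-invariant forward operator is diagonalized by the FFT, so both the forward model and a regularized inverse reduce to elementwise operations in the frequency domain.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 64
idx = np.arange(n)

# Hypothetical shift-invariant blur kernel (circular Gaussian), normalized to sum to 1.
kernel = np.exp(-0.5 * (np.minimum(idx, n - idx) / 2.0) ** 2)
kernel /= kernel.sum()

# Dense circulant forward matrix: A[i, j] = kernel[(i - j) mod n].
A = kernel[(idx[:, None] - idx[None, :]) % n]

x_true = rng.random(n)

# Forward model two ways: dense matrix product and FFT-based circular convolution.
y_dense = A @ x_true
K = np.fft.fft(kernel)
y_fft = np.real(np.fft.ifft(K * np.fft.fft(x_true)))

# Tikhonov-regularized inverse two ways: dense linear solve and per-frequency division.
lam = 1e-3
x_dense = np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y_dense)
x_fft = np.real(np.fft.ifft(np.conj(K) * np.fft.fft(y_dense) / (np.abs(K) ** 2 + lam)))

print(np.max(np.abs(y_dense - y_fft)), np.max(np.abs(x_dense - x_fft)))
```

Both pairs agree to numerical precision, but the dense solve scales cubically with the number of unknowns while the FFT route scales as n log n, which is the general mechanism by which a convolutional approximation can yield large reconstruction speedups.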
