Andrew A. Chael

Dynamic Black-hole Emission Tomography with Physics-informed Neural Fields

Feb 08, 2026

Abstract:With the success of static black-hole imaging, the next frontier is the dynamic and 3D imaging of black holes. Recovering the dynamic 3D gas near a black hole would reveal previously-unseen parts of the universe and inform new physics models. However, only sparse radio measurements from a single viewpoint are possible, making the dynamic 3D reconstruction problem significantly ill-posed. Previously, BH-NeRF addressed the ill-posed problem by assuming Keplerian dynamics of the gas, but this assumption breaks down near the black hole, where the strong gravitational pull of the black hole and increased electromagnetic activity complicate fluid dynamics. To overcome the restrictive assumptions of BH-NeRF, we propose PI-DEF, a physics-informed approach that uses differentiable neural rendering to fit a 4D (time + 3D) emissivity field given EHT measurements. Our approach jointly reconstructs the 3D velocity field with the 4D emissivity field and enforces the velocity as a soft constraint on the dynamics of the emissivity. In experiments on simulated data, we find significantly improved reconstruction accuracy over both BH-NeRF and a physics-agnostic approach. We demonstrate how our method may be used to estimate other physics parameters of the black hole, such as its spin.

Orbital Polarimetric Tomography of a Flare Near the Sagittarius A* Supermassive Black Hole

Oct 11, 2023

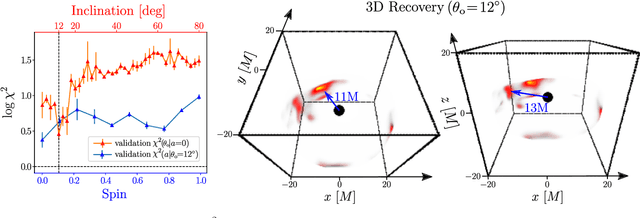

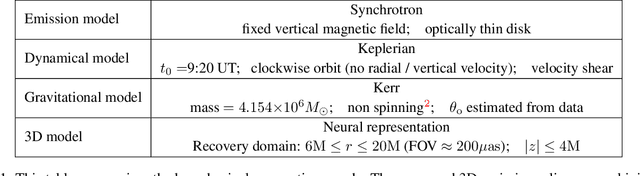

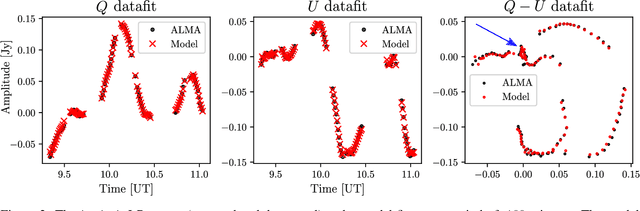

Abstract:The interaction between the supermassive black hole at the center of the Milky Way, Sagittarius A$^*$, and its accretion disk occasionally produces high-energy flares seen in X-ray, infrared, and radio. One mechanism for observed flares is the formation of compact bright regions that appear within the accretion disk and close to the event horizon. Understanding these flares can provide a window into black hole accretion processes. Although sophisticated simulations predict the formation of these flares, their structure has yet to be recovered by observations. Here we show the first three-dimensional (3D) reconstruction of an emission flare in orbit recovered from ALMA light curves observed on April 11, 2017. Our recovery results show compact bright regions at a distance of roughly 6 times the event horizon. Moreover, our recovery suggests a clockwise rotation in a low-inclination orbital plane, a result consistent with prior studies by EHT and GRAVITY collaborations. To recover this emission structure, we solve a highly ill-posed tomography problem by integrating a neural 3D representation (an emergent artificial intelligence approach for 3D reconstruction) with a gravitational model for black holes. Although the recovered 3D structure is subject, and sometimes sensitive, to the model assumptions, under physically motivated choices we find that our results are stable and our approach is successful on simulated data. We anticipate that in the future, this approach could be used to analyze a richer collection of time-series data that could shed light on the mechanisms governing black hole and plasma dynamics.

Gravitationally Lensed Black Hole Emission Tomography

Apr 07, 2022

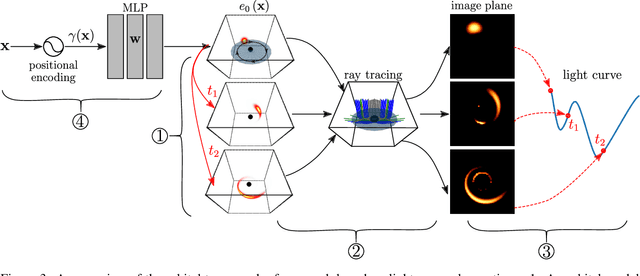

Abstract:Measurements from the Event Horizon Telescope enabled the visualization of light emission around a black hole for the first time. So far, these measurements have been used to recover a 2D image under the assumption that the emission field is static over the period of acquisition. In this work, we propose BH-NeRF, a novel tomography approach that leverages gravitational lensing to recover the continuous 3D emission field near a black hole. Compared to other 3D reconstruction or tomography settings, this task poses two significant challenges: first, rays near black holes follow curved paths dictated by general relativity, and second, we only observe measurements from a single viewpoint. Our method captures the unknown emission field using a continuous volumetric function parameterized by a coordinate-based neural network, and uses knowledge of Keplerian orbital dynamics to establish correspondence between 3D points over time. Together, these enable BH-NeRF to recover accurate 3D emission fields, even in challenging situations with sparse measurements and uncertain orbital dynamics. This work takes the first steps in showing how future measurements from the Event Horizon Telescope could be used to recover evolving 3D emission around the supermassive black hole in our Galactic center.
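The abstract above says that Keplerian orbital dynamics establish correspondence between 3D points over time. A minimal sketch of that idea follows. It is not the BH-NeRF implementation. It assumes a Newtonian Keplerian profile in units where G = M = 1, so that the angular velocity is Ω(r) = r^(-3/2), and it assumes rotation about the z-axis with r taken as the cylindrical radius. The function names `keplerian_angular_velocity` and `advect_points` are hypothetical.

```python
import numpy as np

def keplerian_angular_velocity(r):
    """Newtonian Keplerian angular velocity with G = M = 1 (an assumption)."""
    return r ** -1.5

def advect_points(points, t):
    """Rotate points about the z-axis by the angle Omega(r) * t.

    points: array of shape (n, 3) with cylindrical radius r > 0.
    t: elapsed time; a negative value traces the points backwards.
    """
    points = np.asarray(points, dtype=float)
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.hypot(x, y)                       # cylindrical radius, preserved by the motion
    phi = keplerian_angular_velocity(r) * t  # inner orbits sweep a larger angle
    c, s = np.cos(phi), np.sin(phi)
    return np.stack([c * x - s * y, s * x + c * y, z], axis=1)
```

Under these assumptions, the emission at time t and position x can be looked up in an initial field by tracing the point backwards, as in `e0(advect_points(x, -t))`, where `e0` stands for any function that returns the emission at time zero.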

Reconstructing Video from Interferometric Measurements of Time-Varying Sources

Feb 01, 2018

Abstract:Very long baseline interferometry (VLBI) makes it possible to recover images of astronomical sources with extremely high angular resolution. Most recently, the Event Horizon Telescope (EHT) has extended VLBI to short millimeter wavelengths with a goal of achieving angular resolution sufficient for imaging the event horizons of nearby supermassive black holes. VLBI provides measurements related to the underlying source image through a sparse set of spatial frequencies. An image can then be recovered from these measurements by making assumptions about the underlying image. One of the most important assumptions made by conventional imaging methods is that over the course of a night's observation the image is static. However, for quickly evolving sources, such as the galactic center's supermassive black hole (Sgr A*) targeted by the EHT, this assumption is violated and these conventional imaging approaches fail. In this work we propose a new way to model VLBI measurements that allows us to recover both the appearance and dynamics of an evolving source by reconstructing a video rather than a static image. By modeling VLBI measurements using a Gaussian Markov Model, we are able to propagate information across observations in time to reconstruct a video, while simultaneously learning about the dynamics of the source's emission region. We demonstrate our proposed Expectation-Maximization (EM) algorithm, StarWarps, on realistic synthetic observations of black holes, and show how it substantially improves results compared to conventional imaging algorithms. Additionally, we demonstrate StarWarps on real VLBI data of the M87 Jet from the VLBA.
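The statement that VLBI measures the source image through a sparse set of spatial frequencies can be illustrated with a direct Fourier sum. The sketch below is not StarWarps or any EHT pipeline. It assumes an idealized, noise-free interferometer, a square image, and the sign convention V(u, v) = Σ I(x, y) exp(-2πi(ux + vy)), with x and y in radians and u and v in wavelengths. The function name `sample_visibilities` and its parameters are hypothetical.

```python
import numpy as np

def sample_visibilities(image, fov, uv):
    """Evaluate the image's Fourier transform at a sparse set of (u, v) points.

    image: real array of shape (n, n) holding the flux in each pixel.
    fov: field of view in radians (assumed square).
    uv: array of shape (m, 2) of spatial frequencies in wavelengths.
    Returns m complex visibilities.
    """
    image = np.asarray(image, dtype=float)
    uv = np.asarray(uv, dtype=float)
    n = image.shape[0]
    # Pixel-center coordinates, with the origin at the center of the image.
    coords = (np.arange(n) - (n - 1) / 2) * (fov / n)
    x, y = np.meshgrid(coords, coords)
    # Broadcast each (u, v) pair against the full pixel grid: shape (m, n, n).
    phase = -2j * np.pi * (uv[:, 0, None, None] * x + uv[:, 1, None, None] * y)
    return (image * np.exp(phase)).sum(axis=(1, 2))
```

Because only a handful of (u, v) points are sampled, many different images reproduce the same visibilities, which is why the abstract says that recovery depends on assumptions about the underlying image.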
