Siew-Kei Lam

Large Language Models for Computer-Aided Design: A Survey

May 13, 2025

Abstract:Large Language Models (LLMs) have seen rapid advancements in recent years, with models like ChatGPT and DeepSeek showcasing remarkable capabilities across diverse domains. While substantial research has been conducted on LLMs in various fields, a comprehensive review focusing on their integration with Computer-Aided Design (CAD) remains notably absent. CAD is the industry standard for 3D modeling and plays a vital role in the design and development of products across different industries. As the complexity of modern designs increases, the potential for LLMs to enhance and streamline CAD workflows presents an exciting frontier. This article presents the first systematic survey exploring the intersection of LLMs and CAD. We begin by outlining the industrial significance of CAD, highlighting the need for AI-driven innovation. Next, we provide a detailed overview of the foundation of LLMs. We also examine both closed-source LLMs and publicly available models. The core of this review focuses on the various applications of LLMs in CAD, providing a taxonomy of six key areas where these models are making considerable impact. Finally, we propose several promising future directions for further advancements, which offer vast opportunities for innovation and are poised to shape the future of CAD technology. GitHub: https://github.com/lichengzhanguom/LLMs-CAD-Survey-Taxonomy

Taylor Series-Inspired Local Structure Fitting Network for Few-shot Point Cloud Semantic Segmentation

Apr 03, 2025

Abstract:Few-shot point cloud semantic segmentation aims to accurately segment "unseen" new categories in point cloud scenes using limited labeled data. However, pretraining-based methods not only introduce excessive time overhead but also overlook the local structure representation among irregular point clouds. To address these issues, we propose a pretraining-free local structure fitting network for few-shot point cloud semantic segmentation, named TaylorSeg. Specifically, inspired by Taylor series, we treat the local structure representation of irregular point clouds as a polynomial fitting problem and propose a novel local structure fitting convolution, called TaylorConv. This convolution learns the low-order basic information and high-order refined information of point clouds from explicit encoding of local geometric structures. Then, using TaylorConv as the basic component, we construct two variants of TaylorSeg: a non-parametric TaylorSeg-NN and a parametric TaylorSeg-PN. The former can achieve performance comparable to existing parametric models without pretraining. For the latter, we equip it with an Adaptive Push-Pull (APP) module to mitigate the feature distribution differences between the query set and the support set. Extensive experiments validate the effectiveness of the proposed method. Notably, under the 2-way 1-shot setting, TaylorSeg-PN achieves improvements of +2.28% and +4.37% mIoU on the S3DIS and ScanNet datasets respectively, compared to the previous state-of-the-art methods. Our code is available at https://github.com/changshuowang/TaylorSeg.
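For readers unfamiliar with the mIoU figures quoted in the TaylorSeg abstract, the metric can be sketched as follows. This is a minimal illustration of the standard mean intersection-over-union definition over per-point labels, not the authors' evaluation code; the function name `mean_iou` and the choice to skip classes absent from both prediction and ground truth are assumptions made here.

```python
def mean_iou(pred, truth, num_classes):
    """Mean intersection-over-union over per-point class labels.

    pred and truth are equal-length sequences of integer class ids.
    Classes that appear in neither sequence are skipped (an assumption
    of this sketch; evaluation protocols differ on this point).
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    ious = []
    for c in range(num_classes):
        # Points where both prediction and ground truth are class c.
        inter = sum(1 for p, t in zip(pred, truth) if p == c and t == c)
        # Points where either prediction or ground truth is class c.
        union = sum(1 for p, t in zip(pred, truth) if p == c or t == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0
```

For example, with predictions `[0, 0, 1, 1]` and ground truth `[0, 1, 1, 1]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.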

GPSFormer: A Global Perception and Local Structure Fitting-based Transformer for Point Cloud Understanding

Jul 18, 2024

Abstract:Despite the significant advancements in pre-training methods for point cloud understanding, directly capturing intricate shape information from irregular point clouds without reliance on external data remains a formidable challenge. To address this problem, we propose GPSFormer, an innovative Global Perception and Local Structure Fitting-based Transformer, which learns detailed shape information from point clouds with remarkable precision. The core of GPSFormer is the Global Perception Module (GPM) and the Local Structure Fitting Convolution (LSFConv). Specifically, GPM utilizes Adaptive Deformable Graph Convolution (ADGConv) to identify short-range dependencies among similar features in the feature space and employs Multi-Head Attention (MHA) to learn long-range dependencies across all positions within the feature space, ultimately enabling flexible learning of contextual representations. Inspired by Taylor series, we design LSFConv, which learns both low-order fundamental and high-order refinement information from explicitly encoded local geometric structures. Integrating the GPM and LSFConv as fundamental components, we construct GPSFormer, a cutting-edge Transformer that effectively captures global and local structures of point clouds. Extensive experiments validate GPSFormer's effectiveness in three point cloud tasks: shape classification, part segmentation, and few-shot learning. The code of GPSFormer is available at https://github.com/changshuowang/GPSFormer.

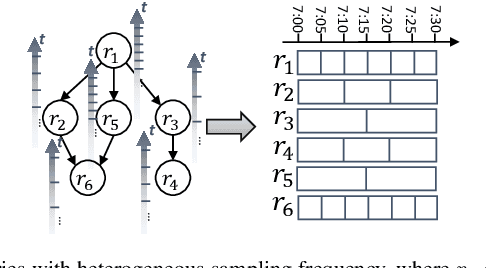

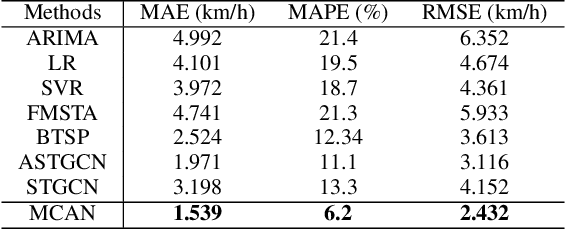

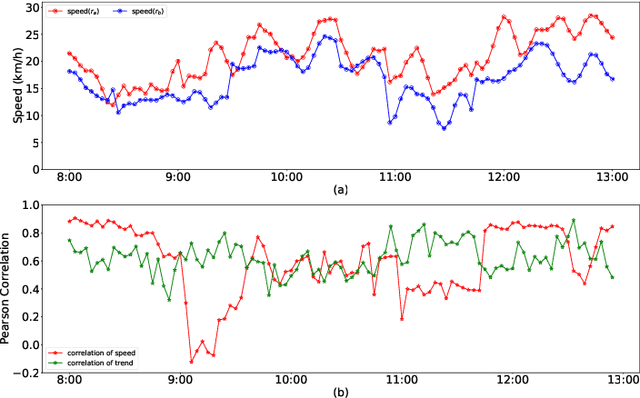

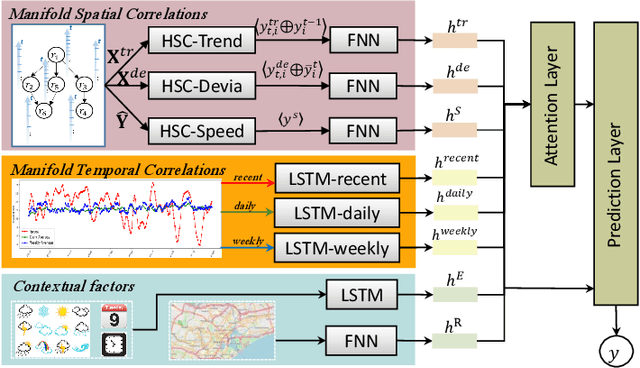

Multi-fold Correlation Attention Network for Predicting Traffic Speeds with Heterogeneous Frequency

Apr 19, 2021

Abstract:Substantial efforts have been devoted to the investigation of spatiotemporal correlations for improving traffic speed prediction accuracy. However, existing works typically model the correlations based solely on the observed traffic state (e.g. traffic speed) without due consideration that different correlation measurements of the traffic data could exhibit a diverse set of patterns under different traffic situations. In addition, the existing works assume that all road segments can employ the same sampling frequency of traffic states, which is impractical. In this paper, we propose new measurements to model the spatial correlations among traffic data and show that the resulting correlation patterns vary significantly under various traffic situations. We propose a Heterogeneous Spatial Correlation (HSC) model to capture the spatial correlation based on a specific measurement, where the traffic data of varying road segments can be heterogeneous (i.e. obtained with different sampling frequencies). We propose a Multi-fold Correlation Attention Network (MCAN), which relies on the HSC model to explore multi-fold spatial correlations and leverages LSTM networks to capture multi-fold temporal correlations, providing discriminating features for accurate traffic prediction. The learned multi-fold spatiotemporal correlations together with contextual factors are fused with an attention mechanism to make the final predictions. Experiments on real-world datasets demonstrate that the proposed MCAN model outperforms the state-of-the-art baselines.

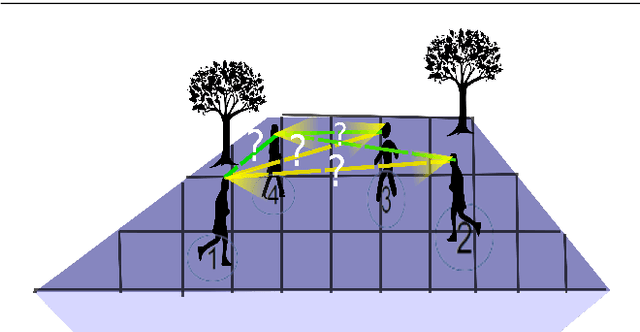

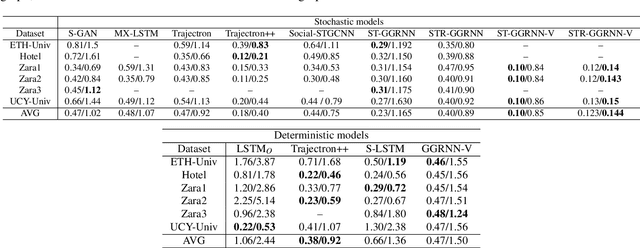

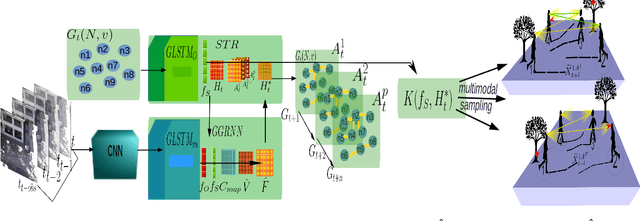

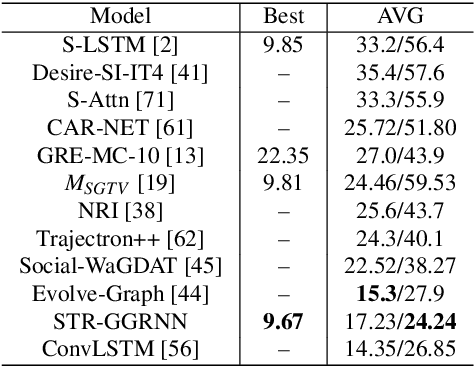

Self-Growing Spatial Graph Network for Context-Aware Pedestrian Trajectory Prediction

Dec 21, 2020

Abstract:Pedestrian trajectory prediction is an active research area, with recent works undertaken to embed accurate models of pedestrians' social interactions and their contextual compliance into dynamic spatial graphs. However, existing works rely on spatial assumptions about the scene and dynamics, which makes it significantly challenging to adapt the graph structure in unknown environments for an online system. In addition, there is no established approach for assessing the impact of relational modeling on prediction performance. To fill this gap, we propose Social Trajectory Recommender-Gated Graph Recurrent Neighborhood Network (STR-GGRNN), which uses data-driven adaptive online neighborhood recommendation based on the contextual scene features and pedestrian visual cues. The neighborhood recommendation is achieved by online Nonnegative Matrix Factorization (NMF) to construct the graph adjacency matrices for predicting the pedestrians' trajectories. Experiments based on widely-used datasets show that our method outperforms the state-of-the-art. Our best performing model achieves 12 cm ADE and ~15 cm FDE on the ETH-UCY dataset. The proposed method takes only 0.49 seconds when sampling a total of 20K future trajectories per frame.
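The ADE and FDE figures in the abstract above are the two standard trajectory-prediction metrics: average displacement error (mean Euclidean distance over all predicted time steps) and final displacement error (Euclidean distance at the last step). A minimal sketch for a single trajectory follows; it is illustrative only and not taken from the paper, and the function name `displacement_errors` and the list-of-(x, y)-tuples input format are assumptions.

```python
import math


def displacement_errors(pred, truth):
    """Return (ADE, FDE) for one predicted trajectory.

    pred and truth are equal-length, non-empty lists of (x, y) positions,
    one per future time step, in the same units (e.g. metres).
    """
    if len(pred) != len(truth) or not pred:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Euclidean distance between prediction and ground truth at each step.
    dists = [math.dist(p, t) for p, t in zip(pred, truth)]
    ade = sum(dists) / len(dists)  # average over all time steps
    fde = dists[-1]                # error at the final time step only
    return ade, fde
```

For instance, a prediction of `[(0, 0), (1, 0), (2, 0)]` against ground truth `[(0, 0), (1, 1), (2, 2)]` has per-step errors 0, 1 and 2, giving an ADE of 1.0 and an FDE of 2.0.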

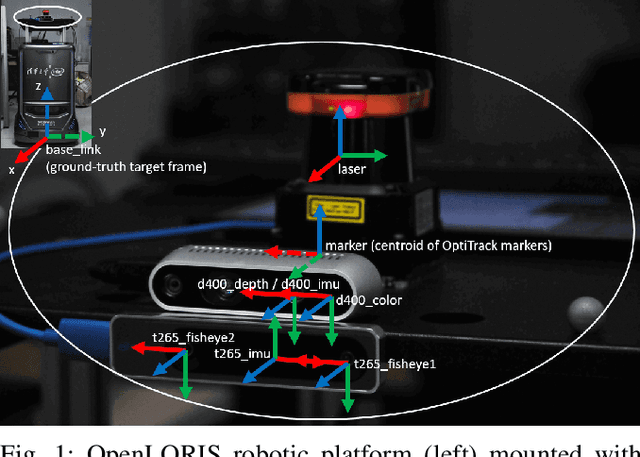

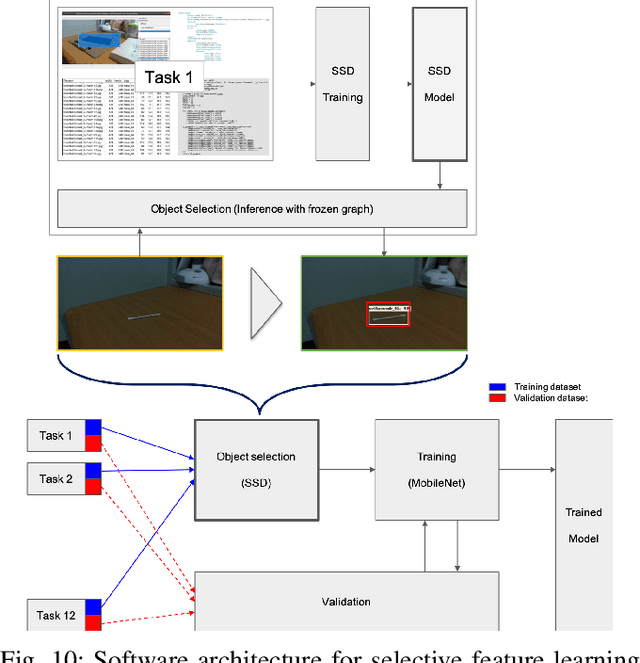

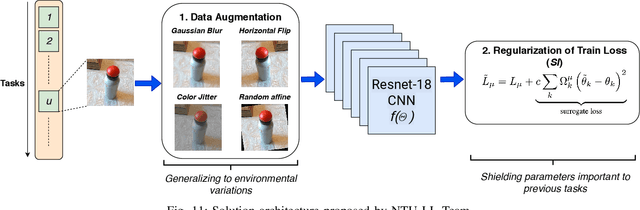

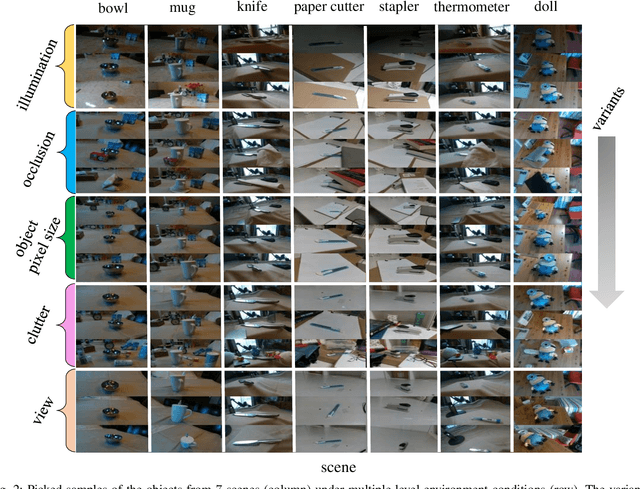

IROS 2019 Lifelong Robotic Vision Challenge -- Lifelong Object Recognition Report

Apr 26, 2020

Abstract:This report summarizes the IROS 2019 Lifelong Robotic Vision Competition (Lifelong Object Recognition Challenge) with methods and results from the top 8 finalists (out of over 150 teams). The competition dataset (L)ifel(O)ng (R)obotic V(IS)ion (OpenLORIS) - Object Recognition (OpenLORIS-object) is designed for driving lifelong/continual learning research and application in the robotic vision domain, with everyday objects in home, office, campus, and mall scenarios. The dataset explicitly quantifies the variants of illumination, object occlusion, object size, camera-object distance/angles, and clutter information. Rules are designed to quantify the learning capability of the robotic vision system when faced with the objects appearing in the dynamic environments in the contest. Individual reports, dataset information, rules, and released source code can be found at the project homepage: https://lifelong-robotic-vision.github.io/competition/.

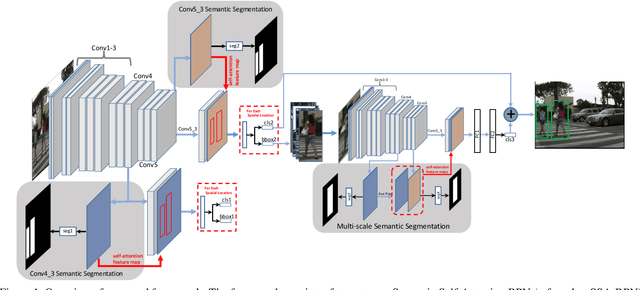

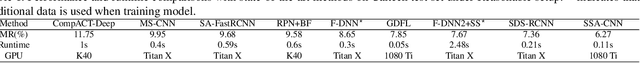

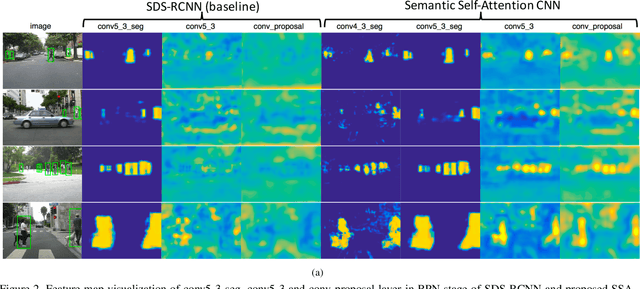

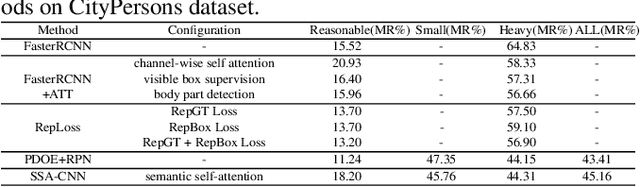

SSA-CNN: Semantic Self-Attention CNN for Pedestrian Detection

Mar 04, 2019

Abstract:Pedestrian detection plays an important role in many applications such as autonomous driving. We propose a method that explores semantic segmentation results as self-attention cues to significantly improve the pedestrian detection performance. Specifically, a multi-task network is designed to jointly learn semantic segmentation and pedestrian detection from image datasets with weak box-wise annotations. The semantic segmentation feature maps are concatenated with the corresponding convolutional feature maps to provide more discriminative features for pedestrian detection and pedestrian classification. By jointly learning segmentation and detection, our proposed pedestrian self-attention mechanism can effectively identify pedestrian regions and suppress backgrounds. In addition, we propose to incorporate semantic attention information from multi-scale layers into a deep convolutional neural network to boost pedestrian detection. Experimental results show that the proposed method achieves the best detection performance, with a miss rate (MR) of 6.27% on the Caltech dataset, and obtains competitive performance on the CityPersons dataset while maintaining high computational efficiency.
