SSA-CNN: Semantic Self-Attention CNN for Pedestrian Detection


Mar 04, 2019

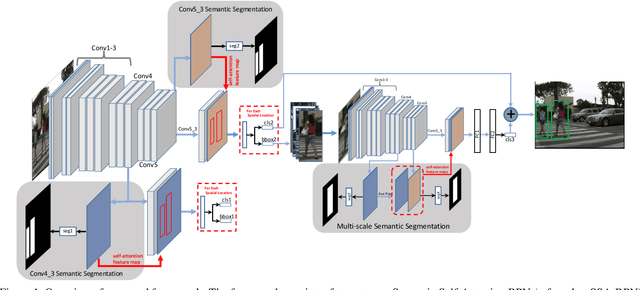

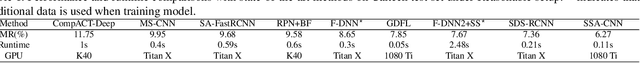

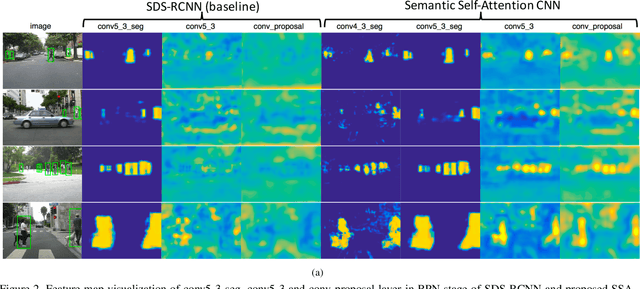

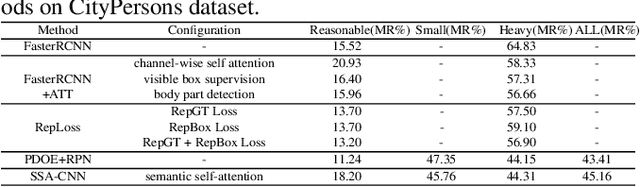

Pedestrian detection plays an important role in many applications such as autonomous driving. We propose a method that uses semantic segmentation results as self-attention cues to significantly improve pedestrian detection performance. Specifically, a multi-task network is designed to jointly learn semantic segmentation and pedestrian detection from image datasets with weak box-wise annotations. The semantic segmentation feature maps are concatenated with the corresponding convolutional feature maps to provide more discriminative features for pedestrian detection and pedestrian classification. By jointly learning segmentation and detection, our proposed pedestrian self-attention mechanism can effectively identify pedestrian regions and suppress backgrounds. In addition, we propose to incorporate semantic attention information from multi-scale layers into a deep convolutional neural network to boost pedestrian detection. Experimental results show that the proposed method achieves the best detection performance, with a miss rate (MR) of 6.27% on the Caltech dataset, and obtains competitive performance on the CityPersons dataset while maintaining high computational efficiency.
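The concatenation step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the nested-list representation of feature maps, and the toy values are all assumptions made for illustration. It only shows what channel-wise concatenation of a segmentation map with convolutional feature maps means when both share the same spatial size.

```python
from typing import List, Tuple

Channel = List[List[float]]   # one H x W feature map
FeatureMaps = List[Channel]   # C channels, each H x W


def spatial_shape(channel: Channel) -> Tuple[int, int]:
    """Return (height, width) of one channel, rejecting ragged rows."""
    height = len(channel)
    width = len(channel[0]) if height else 0
    if any(len(row) != width for row in channel):
        raise ValueError("rows of a channel must have equal length")
    return (height, width)


def concat_semantic_attention(conv_features: FeatureMaps,
                              seg_maps: FeatureMaps) -> FeatureMaps:
    """Stack segmentation maps after the convolutional channels.

    Hypothetical helper: the output has C_conv + C_seg channels and the
    same height and width as the inputs.
    """
    if not conv_features or not seg_maps:
        raise ValueError("both inputs need at least one channel")
    expected = spatial_shape(conv_features[0])
    for channel in conv_features + seg_maps:
        if spatial_shape(channel) != expected:
            raise ValueError("all channels must share the same height and width")
    # Copy rows so the fused tensor does not alias the inputs.
    return [[row[:] for row in channel] for channel in conv_features + seg_maps]


# Toy input (assumed values): 2 convolutional channels and
# 1 pedestrian-probability map, all of size 2 x 3.
conv = [
    [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]],
    [[1.0, 1.1, 1.2], [1.3, 1.4, 1.5]],
]
seg = [
    [[0.0, 0.9, 0.9], [0.0, 0.8, 0.1]],
]

fused = concat_semantic_attention(conv, seg)
print(len(fused), spatial_shape(fused[0]))
```

In a deep learning framework the same operation would typically be a single tensor concatenation along the channel axis; the explicit shape check here only makes visible the requirement that the segmentation branch and the convolutional branch produce maps of matching resolution.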
