Xiang Fang

Prototype-Driven Structure Synergy Network for Remote Sensing Images Segmentation

Aug 06, 2025

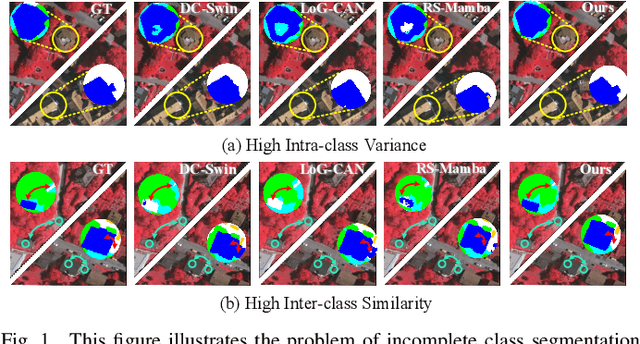

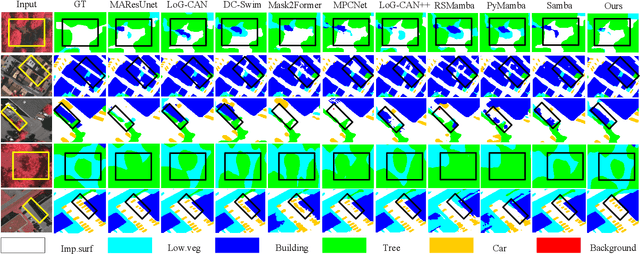

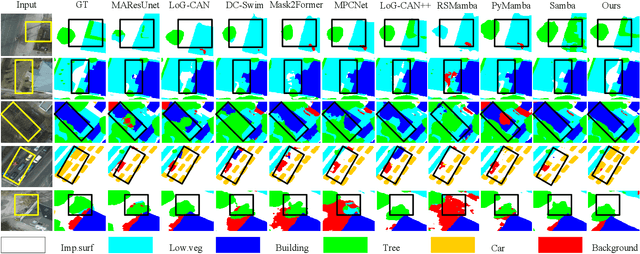

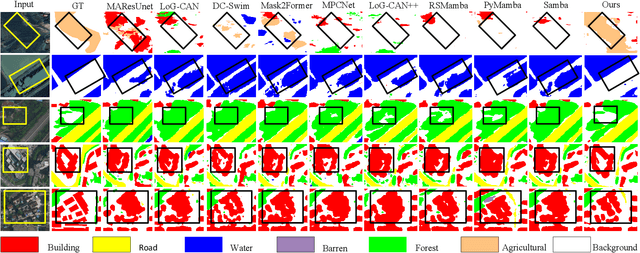

Abstract: In the semantic segmentation of remote sensing images, acquiring complete ground objects is critical for achieving precise analysis. However, this task is severely hindered by two major challenges: high intra-class variance and high inter-class similarity. Traditional methods often yield incomplete segmentation results due to their inability to effectively unify class representations and distinguish between similar features. Even emerging class-guided approaches are limited by coarse class prototype representations and a neglect of target structural information. Therefore, this paper proposes a Prototype-Driven Structure Synergy Network (PDSSNet). The design of this network is based on a core concept: a complete ground object is jointly defined by its invariant class semantics and its variant spatial structure. To implement this, we have designed three key modules. First, the Adaptive Prototype Extraction Module (APEM) ensures semantic accuracy from the source by encoding the ground truth to extract unbiased class prototypes. Subsequently, the designed Semantic-Structure Coordination Module (SSCM) follows a hierarchical semantics-first, structure-second principle. This involves first establishing a global semantic cognition, then leveraging structural information to constrain and refine the semantic representation, thereby ensuring the integrity of class information. Finally, the Channel Similarity Adjustment Module (CSAM) employs a dynamic step-size adjustment mechanism to focus on discriminative features between classes. Extensive experiments demonstrate that PDSSNet outperforms state-of-the-art methods. The source code is available at https://github.com/wangjunyi-1/PDSSNet.

EOOD: Entropy-based Out-of-distribution Detection

Apr 04, 2025

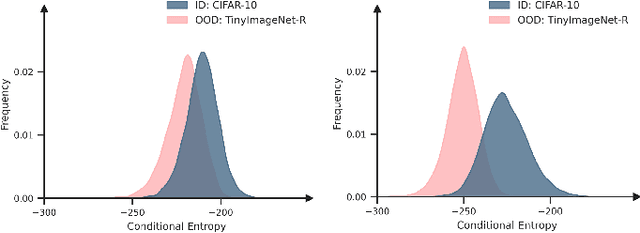

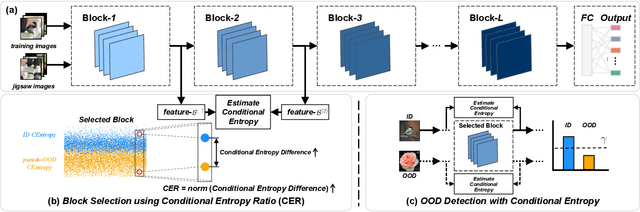

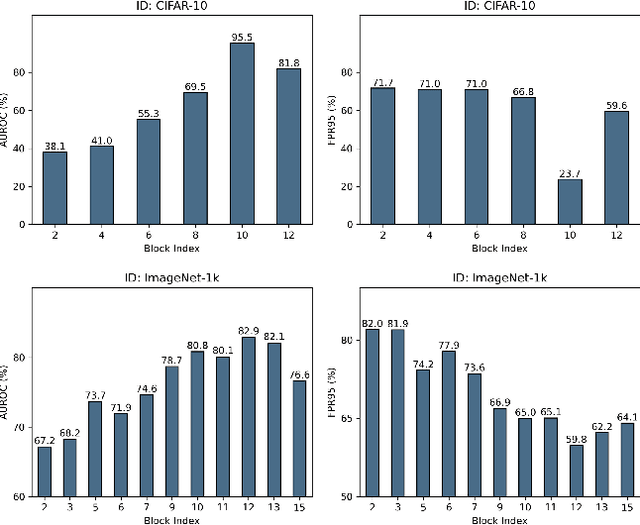

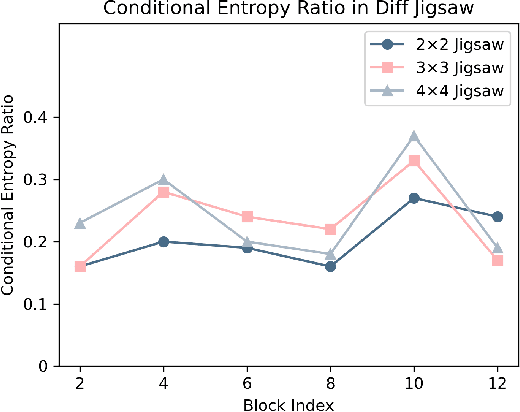

Abstract: Deep neural networks (DNNs) often exhibit overconfidence when encountering out-of-distribution (OOD) samples, posing significant challenges for deployment. Since DNNs are trained on in-distribution (ID) datasets, the information flow of ID samples through DNNs inevitably differs from that of OOD samples. In this paper, we propose an Entropy-based Out-Of-distribution Detection (EOOD) framework. EOOD first identifies the specific block where the information flow differences between ID and OOD samples are most pronounced, using both ID and pseudo-OOD samples. It then calculates the conditional entropy on the selected block as the OOD confidence score. Comprehensive experiments conducted across various ID and OOD settings demonstrate the effectiveness of EOOD in OOD detection and its superiority over state-of-the-art methods.
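
The abstract does not say how the conditional entropy is estimated on a network block, so the following is only a minimal sketch of the textbook quantity, H(Y|X) = -Σ p(x, y) log p(y|x), computed from a discrete joint probability table. The function name `conditional_entropy` and the table layout are assumptions made for illustration and are not taken from the EOOD paper.

```python
import math
from typing import Sequence


def conditional_entropy(joint: Sequence[Sequence[float]]) -> float:
    """Return H(Y|X) in nats for a table where joint[x][y] = p(x, y).

    Hypothetical helper: illustrates the definition only, not EOOD's estimator.
    """
    total = 0.0
    for row in joint:
        p_x = sum(row)  # marginal probability p(x)
        for p_xy in row:
            if p_xy > 0.0:  # the term 0 * log(0) is taken to be 0
                # p(y|x) = p(x, y) / p(x)
                total -= p_xy * math.log(p_xy / p_x)
    return total


# Y is independent of X and uniform over two values: H(Y|X) = log 2.
independent = [[0.25, 0.25], [0.25, 0.25]]
# Y is fully determined by X: H(Y|X) = 0.
deterministic = [[0.5, 0.0], [0.0, 0.5]]

h_independent = conditional_entropy(independent)
h_deterministic = conditional_entropy(deterministic)
```

In this toy setting a higher value means X tells us less about Y. How such a quantity is mapped onto block activations and turned into an OOD confidence score is left to the paper.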

Taylor Series-Inspired Local Structure Fitting Network for Few-shot Point Cloud Semantic Segmentation

Apr 03, 2025

Abstract: Few-shot point cloud semantic segmentation aims to accurately segment "unseen" new categories in point cloud scenes using limited labeled data. However, pretraining-based methods not only introduce excessive time overhead but also overlook the local structure representation among irregular point clouds. To address these issues, we propose a pretraining-free local structure fitting network for few-shot point cloud semantic segmentation, named TaylorSeg. Specifically, inspired by Taylor series, we treat the local structure representation of irregular point clouds as a polynomial fitting problem and propose a novel local structure fitting convolution, called TaylorConv. This convolution learns the low-order basic information and high-order refined information of point clouds from explicit encoding of local geometric structures. Then, using TaylorConv as the basic component, we construct two variants of TaylorSeg: a non-parametric TaylorSeg-NN and a parametric TaylorSeg-PN. The former can achieve performance comparable to existing parametric models without pretraining. For the latter, we equip it with an Adaptive Push-Pull (APP) module to mitigate the feature distribution differences between the query set and the support set. Extensive experiments validate the effectiveness of the proposed method. Notably, under the 2-way 1-shot setting, TaylorSeg-PN achieves improvements of +2.28% and +4.37% mIoU on the S3DIS and ScanNet datasets respectively, compared to the previous state-of-the-art methods. Our code is available at https://github.com/changshuowang/TaylorSeg.

Adaptive Hierarchical Graph Cut for Multi-granularity Out-of-distribution Detection

Dec 20, 2024

Abstract: This paper focuses on a significant yet challenging task: out-of-distribution detection (OOD detection), which aims to distinguish and reject test samples with semantic shifts, so as to prevent models trained on in-distribution (ID) data from producing unreliable predictions. Although previous works have achieved decent success, they are ineffective for challenging real-world applications since these methods simply regard all unlabeled data as OOD data and ignore the case that different datasets have different label granularity. For example, "cat" on CIFAR-10 and "tabby cat" on Tiny-ImageNet share the same semantics but have different labels due to different label granularity. To this end, in this paper, we propose a novel Adaptive Hierarchical Graph Cut network (AHGC) to deeply explore the semantic relationship between different images. Specifically, we construct a hierarchical KNN graph to evaluate the similarities between different images based on the cosine similarity. Based on the linkage and density information of the graph, we cut the graph into multiple subgraphs to integrate these semantics-similar samples. If the labeled percentage in a subgraph is larger than a threshold, we will assign the label with the highest percentage to unlabeled images. To further improve the model generalization, we augment each image into two augmentation versions, and maximize the similarity between the two versions. Finally, we leverage the similarity score for OOD detection. Extensive experiments on two challenging benchmarks (CIFAR-10 and CIFAR-100) illustrate that in representative cases, AHGC outperforms state-of-the-art OOD detection methods by 81.24% on CIFAR-100 and by 40.47% on CIFAR-10 in terms of "FPR95", which shows the effectiveness of our AHGC.
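
The subgraph labeling rule described in the abstract can be sketched as follows. This is an illustrative reading only: it assumes the graph has already been cut into subgraphs, represents unlabeled images as `None`, and uses a hypothetical function name `propagate_labels` and a hypothetical default threshold of 0.5. None of these details come from the AHGC paper.

```python
from collections import Counter
from typing import Hashable, List, Optional, Sequence


def propagate_labels(
    subgraph_labels: Sequence[Optional[Hashable]],
    threshold: float = 0.5,  # assumed value, not taken from the paper
) -> List[Optional[Hashable]]:
    """Fill in unlabeled nodes (None) of one subgraph with its most common label.

    Labels are propagated only when the labeled fraction exceeds `threshold`;
    otherwise the subgraph is returned unchanged.
    """
    labels = list(subgraph_labels)
    labeled = [label for label in labels if label is not None]
    if not labels or len(labeled) / len(labels) <= threshold:
        return labels
    # The label with the highest share among the labeled nodes.
    majority = Counter(labeled).most_common(1)[0][0]
    return [majority if label is None else label for label in labels]


# 3 of 4 nodes are labeled (0.75 > 0.5), so the unlabeled node becomes "cat".
dense = propagate_labels(["cat", "cat", None, "dog"])
# Only 1 of 3 nodes is labeled (0.33 <= 0.5), so nothing is propagated.
sparse = propagate_labels(["cat", None, None])
```

Nodes that stay unlabeled after this step would then be scored by the similarity-based OOD criterion mentioned in the abstract, which this sketch does not attempt to reproduce.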

Multi-Pair Temporal Sentence Grounding via Multi-Thread Knowledge Transfer Network

Dec 20, 2024

Abstract: Given some video-query pairs with untrimmed videos and sentence queries, temporal sentence grounding (TSG) aims to locate query-relevant segments in these videos. Although previous respectable TSG methods have achieved remarkable success, they train each video-query pair separately and ignore the relationship between different pairs. We observe that similar video/query content not only helps the TSG model better understand and generalize the cross-modal representation but also assists the model in locating some complex video-query pairs. Previous methods follow a single-thread framework that cannot co-train different pairs and usually spend much time re-obtaining redundant knowledge, limiting their real-world applications. To this end, in this paper, we pose a brand-new setting: Multi-Pair TSG, which aims to co-train these pairs. In particular, we propose a novel video-query co-training approach, Multi-Thread Knowledge Transfer Network, to locate a variety of video-query pairs effectively and efficiently. Firstly, we mine the spatial and temporal semantics across different queries to cooperate with each other. To learn intra- and inter-modal representations simultaneously, we design a cross-modal contrast module to explore the semantic consistency by a self-supervised strategy. To fully align visual and textual representations between different pairs, we design a prototype alignment strategy to 1) match object prototypes and phrase prototypes for spatial alignment, and 2) align activity prototypes and sentence prototypes for temporal alignment. Finally, we develop an adaptive negative selection module to adaptively generate a threshold for cross-modal matching. Extensive experiments show the effectiveness and efficiency of our proposed method.

Your Data Is Not Perfect: Towards Cross-Domain Out-of-Distribution Detection in Class-Imbalanced Data

Dec 09, 2024

Abstract: Previous OOD detection systems only focus on the semantic gap between ID and OOD samples. Besides the semantic gap, we are faced with two additional gaps: the domain gap between source and target domains, and the class-imbalance gap between different classes. In fact, similar objects from different domains should belong to the same class. In this paper, we introduce a realistic yet challenging setting: class-imbalanced cross-domain OOD detection (CCOD), which contains a well-labeled (but usually small) source set for training and conducts OOD detection on an unlabeled (but usually larger) target set for testing. We do not assume that the target domain contains only OOD classes or that it is class-balanced: the distribution among classes of the target dataset need not be the same as the source dataset. To tackle this challenging setting with an OOD detection system, we propose a novel uncertainty-aware adaptive semantic alignment (UASA) network based on a prototype-based alignment strategy. Specifically, we first build label-driven prototypes in the source domain and utilize these prototypes for target classification to close the domain gap. Rather than utilizing fixed thresholds for OOD detection, we generate adaptive sample-wise thresholds to handle the semantic gap. Finally, we conduct uncertainty-aware clustering to group semantically similar target samples to relieve the class-imbalance gap. Extensive experiments on three challenging benchmarks demonstrate that our proposed UASA outperforms state-of-the-art methods by a large margin.
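
The first step in the abstract, building label-driven prototypes on the source set and using them to classify target samples, can be illustrated with a generic nearest-prototype classifier. This is a sketch under assumptions: prototypes are taken to be per-class mean feature vectors, matching uses cosine similarity, and the names `build_prototypes`, `cosine`, and `classify` are hypothetical. The adaptive sample-wise thresholds and the uncertainty-aware clustering from UASA are not modeled here.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Vector = Sequence[float]


def build_prototypes(
    features: Sequence[Vector], labels: Sequence[Hashable]
) -> Dict[Hashable, List[float]]:
    """Average the labeled source features of each class into one prototype."""
    sums: Dict[Hashable, List[float]] = {}
    counts: Dict[Hashable, int] = defaultdict(int)
    for feature, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feature)
        sums[label] = [s + v for s, v in zip(sums[label], feature)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(
    feature: Vector, prototypes: Dict[Hashable, List[float]]
) -> Tuple[Hashable, float]:
    """Return the most similar class and its cosine similarity score."""
    scored = ((label, cosine(feature, proto)) for label, proto in prototypes.items())
    return max(scored, key=lambda pair: pair[1])


source_features = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]
source_labels = ["a", "a", "b"]
prototypes = build_prototypes(source_features, source_labels)
predicted_label, similarity = classify([0.9, 0.1], prototypes)
```

A detector built on this would compare `similarity` against some threshold to flag OOD samples. The abstract replaces a fixed threshold with an adaptive per-sample one, whose construction is not described there.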

Uncertainty-Guided Appearance-Motion Association Network for Out-of-Distribution Action Detection

Sep 16, 2024

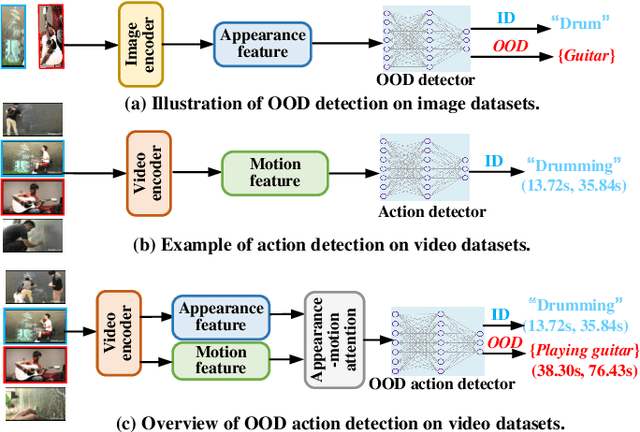

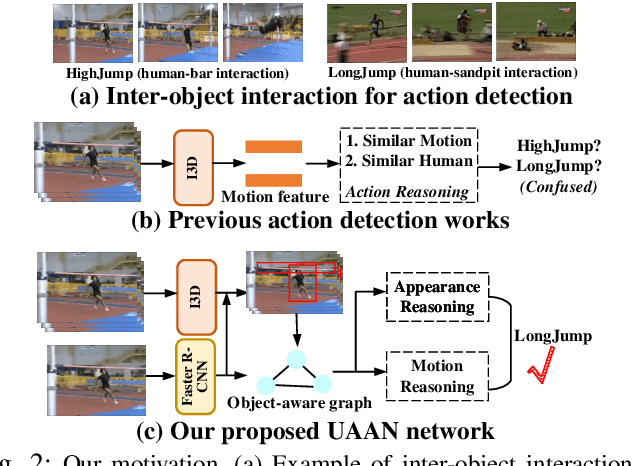

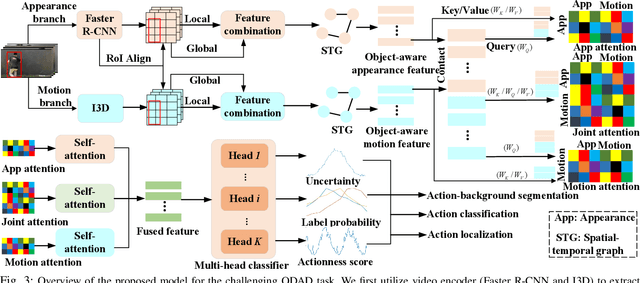

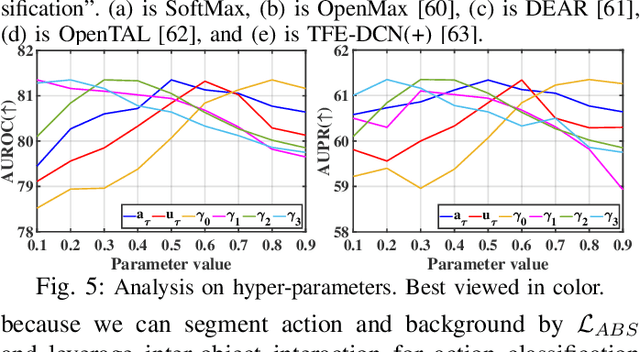

Abstract: Out-of-distribution (OOD) detection aims to detect and reject test samples with semantic shifts, to prevent models trained on in-distribution (ID) datasets from producing unreliable predictions. Existing works only extract the appearance features on image datasets, and cannot handle dynamic multimedia scenarios with much motion information. Therefore, we target a more realistic and challenging OOD detection task: OOD action detection (ODAD). Given an untrimmed video, ODAD first classifies the ID actions and recognizes the OOD actions, and then localizes ID and OOD actions. To this end, in this paper, we propose a novel Uncertainty-Guided Appearance-Motion Association Network (UAAN), which explores both appearance features and motion contexts to reason spatial-temporal inter-object interaction for ODAD. Firstly, we design separate appearance and motion branches to extract corresponding appearance-oriented and motion-aspect object representations. In each branch, we construct a spatial-temporal graph to reason appearance-guided and motion-driven inter-object interaction. Then, we design an appearance-motion attention module to fuse the appearance and motion features for final action detection. Experimental results on two challenging datasets show that UAAN beats state-of-the-art methods by a significant margin, illustrating its effectiveness.

ChinaTelecom System Description to VoxCeleb Speaker Recognition Challenge 2023

Aug 16, 2023

Abstract: This technical report describes the ChinaTelecom system for Track 1 (closed) of the VoxCeleb2023 Speaker Recognition Challenge (VoxSRC 2023). Our system consists of several ResNet variants trained only on VoxCeleb2, which were later fused for better performance. Score calibration was also applied to each variant and to the fused system. The final submission achieved a minDCF of 0.1066 and an EER of 1.980%.

You Can Ground Earlier than See: An Effective and Efficient Pipeline for Temporal Sentence Grounding in Compressed Videos

Mar 16, 2023

Abstract: Given an untrimmed video, temporal sentence grounding (TSG) aims to locate a target moment semantically according to a sentence query. Although previous respectable works have achieved decent success, they only focus on high-level visual features extracted from the consecutive decoded frames and fail to handle the compressed videos for query modelling, suffering from insufficient representation capability and significant computational complexity during training and testing. In this paper, we pose a new setting, compressed-domain TSG, which directly utilizes compressed videos rather than fully-decompressed frames as the visual input. To handle the raw video bit-stream input, we propose a novel Three-branch Compressed-domain Spatial-temporal Fusion (TCSF) framework, which extracts and aggregates three kinds of low-level visual features (I-frame, motion vector and residual features) for effective and efficient grounding. Particularly, instead of encoding the whole decoded frames like previous works, we capture the appearance representation by only learning the I-frame feature to reduce delay or latency. Besides, we explore the motion information not only by learning the motion vector feature, but also by exploring the relations of neighboring frames via the residual feature. In this way, a three-branch spatial-temporal attention layer with an adaptive motion-appearance fusion module is further designed to extract and aggregate both appearance and motion information for the final grounding. Experiments on three challenging datasets show that our TCSF achieves better performance than other state-of-the-art methods with lower complexity.

Hypotheses Tree Building for One-Shot Temporal Sentence Localization

Jan 15, 2023

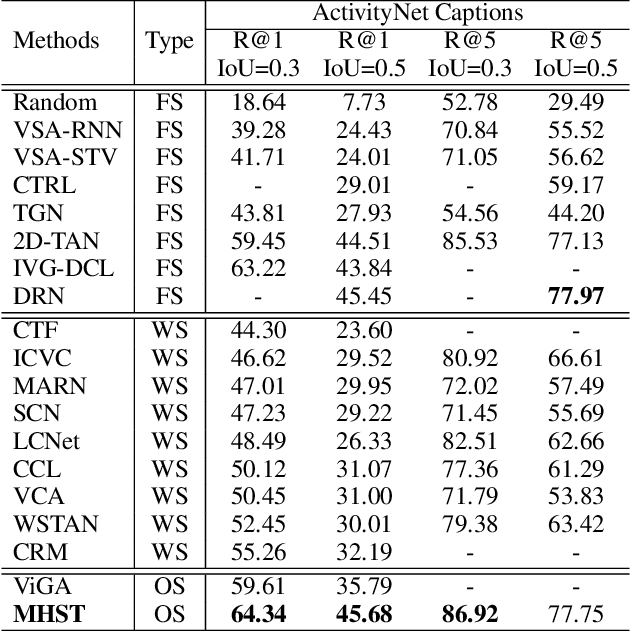

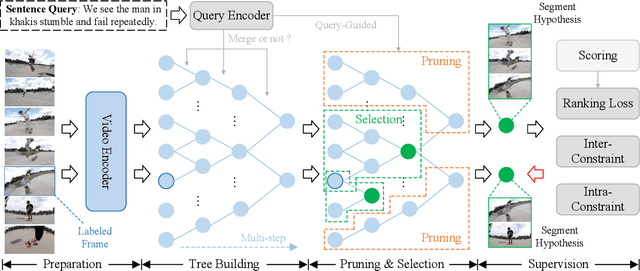

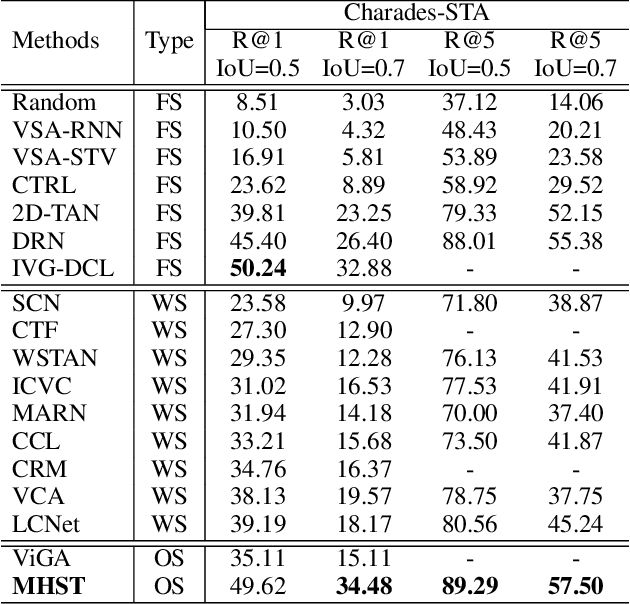

Abstract: Given an untrimmed video, temporal sentence localization (TSL) aims to localize a specific segment according to a given sentence query. Though respectable works have made decent achievements in this task, they rely heavily on dense video frame annotations, which require a tremendous amount of human effort to collect. In this paper, we target another more practical and challenging setting: one-shot temporal sentence localization (one-shot TSL), which learns to retrieve the query information among the entire video with only one annotated frame. Particularly, we propose an effective and novel tree-structure baseline for one-shot TSL, called Multiple Hypotheses Segment Tree (MHST), to capture the query-aware discriminative frame-wise information under the insufficient annotations. Each video frame is taken as a leaf node, and adjacent frames sharing the same visual-linguistic semantics are merged into the upper non-leaf node for tree building. Finally, each root node is an individual segment hypothesis containing the consecutive frames of its leaf nodes. During the tree construction, we also introduce a pruning strategy to eliminate the interference of query-irrelevant nodes. With our designed self-supervised loss functions, our MHST is able to generate high-quality segment hypotheses for ranking and selection with the query. Experiments on two challenging datasets demonstrate that MHST achieves competitive performance compared to existing methods.
