Naveed Akhtar

MixFlow: Mixture-Conditioned Flow Matching for Out-of-Distribution Generalization

Jan 16, 2026

Abstract: Achieving robust generalization under distribution shift remains a central challenge in conditional generative modeling, as existing conditional flow-based methods often struggle to extrapolate beyond the training conditions. We introduce MixFlow, a conditional flow-matching framework for descriptor-controlled generation that directly targets this limitation by jointly learning a descriptor-conditioned base distribution and a descriptor-conditioned flow field via shortest-path flow matching. By modeling the base distribution as a learnable, descriptor-dependent mixture, MixFlow enables smooth interpolation and extrapolation to unseen conditions, leading to substantially improved out-of-distribution generalization. We provide analytical insights into the behavior of the proposed framework and empirically demonstrate its effectiveness across multiple domains, including prediction of responses to unseen perturbations in single-cell transcriptomic data and high-content microscopy-based drug screening tasks. Across these diverse settings, MixFlow consistently outperforms standard conditional flow-matching baselines. Overall, MixFlow offers a simple yet powerful approach for achieving robust, generalizable, and controllable generative modeling across heterogeneous domains.
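As a rough illustration of the ingredients named in the abstract above, the following sketch shows a straight-line (shortest-path) flow-matching target with a Gaussian-mixture base sample. It is not the authors' implementation: the function names, the isotropic mixture parameterization, and the `predict_velocity` callable are all assumptions made for illustration. In MixFlow the mixture parameters would be produced by a learned function of the descriptor, which is only stood in for here by plain arguments.

```python
import random


def sample_mixture_base(means, weights, std, rng):
    """Draw one point from an isotropic Gaussian mixture.

    Hypothetical stand-in for a descriptor-dependent base distribution:
    `means` and `weights` would come from a learned function of the descriptor.
    """
    k = rng.choices(range(len(means)), weights=weights, k=1)[0]
    return [rng.gauss(m, std) for m in means[k]]


def straight_path_pair(x0, x1, t):
    """Return x_t on the straight line from x0 to x1 and the constant target velocity."""
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    velocity = [b - a for a, b in zip(x0, x1)]
    return x_t, velocity


def flow_matching_loss(predict_velocity, x0, x1, t, descriptor):
    """Mean squared error between a predicted velocity and the straight-path target."""
    x_t, target = straight_path_pair(x0, x1, t)
    pred = predict_velocity(x_t, t, descriptor)
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)
```

In a training loop, `x0` would be drawn with `sample_mixture_base`, `x1` would be a data sample for the same descriptor, and `t` would be drawn uniformly from [0, 1].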

DRBD-Mamba for Robust and Efficient Brain Tumor Segmentation with Analytical Insights

Oct 16, 2025

Abstract: Accurate brain tumor segmentation is important for clinical diagnosis and treatment. It is challenging due to the heterogeneity of tumor subregions. Mamba-based State Space Models have demonstrated promising performance. However, they incur significant computational overhead due to sequential feature computation across multiple spatial axes. Moreover, their robustness across diverse BraTS data partitions remains largely unexplored, leaving a critical gap in reliable evaluation. To address these limitations, we propose dual-resolution bi-directional Mamba (DRBD-Mamba), an efficient 3D segmentation model that captures multi-scale long-range dependencies with minimal computational overhead. We leverage a space-filling curve to preserve spatial locality during 3D-to-1D feature mapping, thereby reducing reliance on computationally expensive multi-axial feature scans. To enrich feature representation, we propose a gated fusion module that adaptively integrates forward and reverse contexts, along with a quantization block that discretizes features to improve robustness. In addition, we propose five systematic folds on BraTS2023 for rigorous evaluation of segmentation techniques under diverse conditions and present a detailed analysis of common failure scenarios. On the 20% test set used by recent methods, our model achieves Dice improvements of 0.10% for whole tumor, 1.75% for tumor core, and 0.93% for enhancing tumor. Evaluations on the proposed systematic five folds demonstrate that our model maintains competitive whole tumor accuracy while achieving clear average Dice gains of 0.86% for tumor core and 1.45% for enhancing tumor over the existing state of the art. Furthermore, our model attains a 15-fold improvement in efficiency while maintaining high segmentation accuracy, highlighting its robustness and computational advantage over existing approaches.
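The Dice figures quoted above refer to the standard Dice overlap score between a predicted and a reference mask. A minimal sketch of that metric on flattened binary masks follows; the function name and the convention of returning 1.0 when both masks are empty are assumptions, and the evaluation code used in the paper may differ.

```python
def dice_score(pred, target):
    """Dice coefficient 2|P ∩ T| / (|P| + |T|) for two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * intersection / total
```

For BraTS-style evaluation, this would be computed separately for each subregion mask (whole tumor, tumor core, enhancing tumor).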

DoorDet: Semi-Automated Multi-Class Door Detection Dataset via Object Detection and Large Language Models

Aug 11, 2025

Abstract: Accurate detection and classification of diverse door types in floor plan drawings is critical for multiple applications, such as building compliance checking and indoor scene understanding. Despite their importance, publicly available datasets specifically designed for fine-grained multi-class door detection remain scarce. In this work, we present a semi-automated pipeline that leverages a state-of-the-art object detector and a large language model (LLM) to construct a multi-class door detection dataset with minimal manual effort. Doors are first detected as a unified category using a deep object detection model. Next, an LLM classifies each detected instance based on its visual and contextual features. Finally, a human-in-the-loop stage ensures high-quality labels and bounding boxes. Our method significantly reduces annotation cost while producing a dataset suitable for benchmarking neural models in floor plan analysis. This work demonstrates the potential of combining deep learning and multimodal reasoning for efficient dataset construction in complex real-world domains.

On the Reliability of Vision-Language Models Under Adversarial Frequency-Domain Perturbations

Jul 30, 2025

Abstract: Vision-Language Models (VLMs) are increasingly used as perceptual modules for visual content reasoning, including through captioning and DeepFake detection. In this work, we expose a critical vulnerability of VLMs to subtle, structured perturbations in the frequency domain. Specifically, we highlight how these feature transformations undermine authenticity/DeepFake detection and automated image captioning tasks. We design targeted image transformations that operate in the frequency domain to systematically alter VLM outputs on frequency-perturbed real and synthetic images. We demonstrate that the perturbation injection method generalizes across five state-of-the-art VLMs, including Qwen2/2.5 and BLIP models of different parameter counts. Experimenting across ten real and generated image datasets reveals that VLM judgments are sensitive to frequency-based cues and may not wholly align with semantic content. Crucially, we show that visually imperceptible spatial frequency transformations expose the fragility of VLMs deployed for automated image captioning and authenticity detection tasks. Our findings under realistic, black-box constraints challenge the reliability of VLMs, underscoring the need for robust multimodal perception systems.

Deeper Diffusion Models Amplify Bias

May 23, 2025

Abstract: Despite the impressive performance of generative Diffusion Models (DMs), their internal workings are still not well understood, which is potentially problematic. This paper focuses on exploring the important notion of bias-variance tradeoff in diffusion models. Providing a systematic foundation for this exploration, it establishes that at one extreme the diffusion models may amplify the inherent bias in the training data and, at the other, they may compromise the presumed privacy of the training samples. Our exploration aligns with the memorization-generalization understanding of the generative models, but it also expands further along this spectrum beyond "generalization", revealing the risk of bias amplification in deeper models. Building on the insights, we also introduce a training-free method to improve output quality in text-to-image and image-to-image generation. By progressively encouraging temporary high variance in the generation process with partial bypassing of the mid-block's contribution in the denoising process of DMs, our method consistently improves generative image quality with zero training cost. Our claims are validated both theoretically and empirically.

Large Language Models for Computer-Aided Design: A Survey

May 13, 2025

Abstract: Large Language Models (LLMs) have seen rapid advancements in recent years, with models like ChatGPT and DeepSeek showcasing remarkable capabilities across diverse domains. While substantial research has been conducted on LLMs in various fields, a comprehensive review focusing on their integration with Computer-Aided Design (CAD) remains notably absent. CAD is the industry standard for 3D modeling and plays a vital role in the design and development of products across different industries. As the complexity of modern designs increases, the potential for LLMs to enhance and streamline CAD workflows presents an exciting frontier. This article presents the first systematic survey exploring the intersection of LLMs and CAD. We begin by outlining the industrial significance of CAD, highlighting the need for AI-driven innovation. Next, we provide a detailed overview of the foundation of LLMs. We also examine both closed-source LLMs and publicly available models. The core of this review focuses on the various applications of LLMs in CAD, providing a taxonomy of six key areas where these models are making considerable impact. Finally, we propose several promising future directions for further advancements, which offer vast opportunities for innovation and are poised to shape the future of CAD technology. GitHub: https://github.com/lichengzhanguom/LLMs-CAD-Survey-Taxonomy

On Transfer-based Universal Attacks in Pure Black-box Setting

Apr 11, 2025

Abstract: Despite their impressive performance, deep visual models are susceptible to transferable black-box adversarial attacks. In principle, these attacks craft perturbations in a target model-agnostic manner. However, surprisingly, we find that existing methods in this domain inadvertently rely on various priors that violate the black-box assumption, such as the availability of the dataset used to train the target model and knowledge of the number of classes in the target model. Consequently, the literature fails to articulate the true potency of transferable black-box attacks. We provide an empirical study of these biases and propose a framework that aids in a prior-free transparent study of this paradigm. Using our framework, we analyze the role of prior knowledge of the target model data and number of classes in attack performance. We also provide several interesting insights based on our analysis, and demonstrate that priors cause overestimation in transferability scores. Finally, we extend our framework to query-based attacks. This extension inspires a novel image-blending technique to prepare data for effective surrogate model training.

Leveraging Text-to-Image Generation for Handling Spurious Correlation

Mar 21, 2025

Abstract: Deep neural networks trained with Empirical Risk Minimization (ERM) perform well when both training and test data come from the same domain, but they often fail to generalize to out-of-distribution samples. In image classification, these models may rely on spurious correlations that often exist between labels and irrelevant features of images, making predictions unreliable when those features do not exist. We propose a technique to generate training samples with text-to-image (T2I) diffusion models for addressing the spurious correlation problem. First, we compute the best-describing token for the visual features pertaining to the causal components of samples by a textual inversion mechanism. Then, leveraging a language segmentation method and a diffusion model, we generate new samples by combining the causal component with the elements from other classes. We also meticulously prune the generated samples based on the prediction probabilities and attribution scores of the ERM model to ensure their correct composition for our objective. Finally, we retrain the ERM model on our augmented dataset. This process reduces the model's reliance on spurious correlations by learning from carefully crafted samples in which this correlation does not exist. Our experiments show that across different benchmarks, our technique achieves better worst-group accuracy than the existing state-of-the-art methods.
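Worst-group accuracy, the metric named at the end of the abstract above, is commonly computed as the minimum per-group accuracy, where a group typically pairs a class label with a spurious attribute. The sketch below assumes that definition; the function name and the use of plain lists are illustrative choices, not taken from the paper.

```python
from collections import defaultdict


def worst_group_accuracy(preds, labels, groups):
    """Return (minimum per-group accuracy, dict of per-group accuracies)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, label, group in zip(preds, labels, groups):
        total[group] += 1
        correct[group] += int(pred == label)
    if not total:
        raise ValueError("no samples provided")
    per_group = {g: correct[g] / total[g] for g in total}
    return min(per_group.values()), per_group
```

A model that exploits a spurious correlation tends to score well on average but poorly on the minority groups where the correlation is absent, which is what the minimum exposes.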

GO-N3RDet: Geometry Optimized NeRF-enhanced 3D Object Detector

Mar 19, 2025

Abstract: We propose GO-N3RDet, a scene-geometry optimized multi-view 3D object detector enhanced by neural radiance fields. The key to accurate 3D object detection lies in effective voxel representation. However, due to occlusion and lack of 3D information, constructing 3D features from multi-view 2D images is challenging. To address this, we introduce a unique 3D positional information embedded voxel optimization mechanism to fuse multi-view features. To prioritize neural field reconstruction in object regions, we also devise a double importance sampling scheme for the NeRF branch of our detector. We additionally propose an opacity optimization module for precise voxel opacity prediction by enforcing multi-view consistency constraints. Moreover, to further improve voxel density consistency across multiple perspectives, we incorporate ray distance as a weighting factor to minimize cumulative ray errors. Our unique modules synergetically form an end-to-end neural model that establishes a new state of the art in NeRF-based multi-view 3D detection, verified with extensive experiments on ScanNet and ARKitScenes. Code will be available at https://github.com/ZechuanLi/GO-N3RDet.

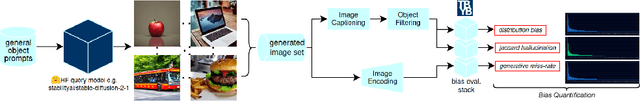

Exploring Bias in over 100 Text-to-Image Generative Models

Mar 11, 2025

Abstract: We investigate bias trends in text-to-image generative models over time, focusing on the increasing availability of models through open platforms like Hugging Face. While these platforms democratize AI, they also facilitate the spread of inherently biased models, often shaped by task-specific fine-tuning. Ensuring ethical and transparent AI deployment requires robust evaluation frameworks and quantifiable bias metrics. To this end, we assess bias across three key dimensions: (i) distribution bias, (ii) generative hallucination, and (iii) generative miss-rate. Analyzing over 100 models, we reveal how bias patterns evolve over time and across generative tasks. Our findings indicate that artistic and style-transferred models exhibit significant bias, whereas foundation models, benefiting from broader training distributions, are becoming progressively less biased. By identifying these systemic trends, we contribute a large-scale evaluation corpus to inform bias research and mitigation strategies, fostering more responsible AI development.

Keywords: Bias, Ethical AI, Text-to-Image, Generative Models, Open-Source Models
