Nazanin Rahnavard

On Transfer-based Universal Attacks in Pure Black-box Setting

Apr 11, 2025

Abstract:Despite their impressive performance, deep visual models are susceptible to transferable black-box adversarial attacks. Principally, these attacks craft perturbations in a target model-agnostic manner. However, surprisingly, we find that existing methods in this domain inadvertently take help from various priors that violate the black-box assumption such as the availability of the dataset used to train the target model, and the knowledge of the number of classes in the target model. Consequently, the literature fails to articulate the true potency of transferable black-box attacks. We provide an empirical study of these biases and propose a framework that aids in a prior-free transparent study of this paradigm. Using our framework, we analyze the role of prior knowledge of the target model data and number of classes in attack performance. We also provide several interesting insights based on our analysis, and demonstrate that priors cause overestimation in transferability scores. Finally, we extend our framework to query-based attacks. This extension inspires a novel image-blending technique to prepare data for effective surrogate model training.

EVLM: Self-Reflective Multimodal Reasoning for Cross-Dimensional Visual Editing

Dec 13, 2024

Abstract:Editing complex visual content based on ambiguous instructions remains a challenging problem in vision-language modeling. While existing models can contextualize content, they often struggle to grasp the underlying intent within a reference image or scene, leading to misaligned edits. We introduce the Editing Vision-Language Model (EVLM), a system designed to interpret such instructions in conjunction with reference visuals, producing precise and context-aware editing prompts. Leveraging Chain-of-Thought (CoT) reasoning and KL-Divergence Target Optimization (KTO) alignment technique, EVLM captures subjective editing preferences without requiring binary labels. Fine-tuned on a dataset of 30,000 CoT examples, with rationale paths rated by human evaluators, EVLM demonstrates substantial improvements in alignment with human intentions. Experiments across image, video, 3D, and 4D editing tasks show that EVLM generates coherent, high-quality instructions, supporting a scalable framework for complex vision-language applications.

Fisher Information guided Purification against Backdoor Attacks

Sep 01, 2024

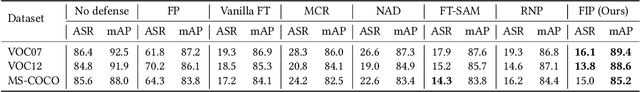

Abstract:Studies on backdoor attacks in recent years suggest that an adversary can compromise the integrity of a deep neural network (DNN) by manipulating a small set of training samples. Our analysis shows that such manipulation can make the backdoor model converge to a bad local minimum, i.e., a sharper minimum as compared to a benign model. Intuitively, the backdoor can be purified by re-optimizing the model to smoother minima. However, a naïve adoption of any optimization targeting smoother minima can lead to sub-optimal purification techniques hampering the clean test accuracy. Hence, to effectively obtain such re-optimization, inspired by our novel perspective establishing the connection between backdoor removal and loss smoothness, we propose Fisher Information guided Purification (FIP), a novel backdoor purification framework. The proposed FIP consists of a couple of novel regularizers that aid the model in suppressing the backdoor effects and retaining the acquired knowledge of the clean data distribution throughout the backdoor removal procedure by exploiting the knowledge of the Fisher Information Matrix (FIM). In addition, we introduce an efficient variant of FIP, dubbed Fast FIP, which reduces the number of tunable parameters significantly and obtains an impressive runtime gain of almost $5\times$. Extensive experiments show that the proposed method achieves state-of-the-art (SOTA) performance on a wide range of backdoor defense benchmarks: 5 different tasks -- Image Recognition, Object Detection, Video Action Recognition, 3D Point Cloud, Language Generation; 11 different datasets including ImageNet, PASCAL VOC, UCF101; diverse model architectures spanning both CNN and vision transformer; 14 different backdoor attacks, e.g., Dynamic, WaNet, LIRA, ISSBA, etc.

Augmented Neural Fine-Tuning for Efficient Backdoor Purification

Jul 14, 2024

Abstract:Recent studies have revealed the vulnerability of deep neural networks (DNNs) to various backdoor attacks, where the behavior of DNNs can be compromised by utilizing certain types of triggers or poisoning mechanisms. State-of-the-art (SOTA) defenses employ overly sophisticated mechanisms that require either a computationally expensive adversarial search module for reverse-engineering the trigger distribution or an over-sensitive hyper-parameter selection module. Moreover, they offer sub-par performance in challenging scenarios, e.g., limited validation data and strong attacks. In this paper, we propose Neural mask Fine-Tuning (NFT) with the aim of optimally re-organizing the neuron activities so that the effect of the backdoor is removed. Utilizing a simple data augmentation like MixUp, NFT relaxes the trigger synthesis process and eliminates the requirement of the adversarial search module. Our study further reveals that direct weight fine-tuning under limited validation data results in poor post-purification clean test accuracy, primarily due to overfitting. To overcome this, we propose to fine-tune neural masks instead of model weights. In addition, a mask regularizer has been devised to further mitigate the model drift during the purification process. The distinct characteristics of NFT render it highly efficient in both runtime and sample usage, as it can remove the backdoor even when a single sample is available from each class. We validate the effectiveness of NFT through extensive experiments covering the tasks of image classification, object detection, video action recognition, 3D point cloud, and natural language processing. We evaluate our method against 14 different attacks (LIRA, WaNet, etc.) on 11 benchmark data sets such as ImageNet, UCF101, Pascal VOC, ModelNet, OpenSubtitles2012, etc.
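
For readers unfamiliar with the MixUp augmentation mentioned in this abstract, the following is a minimal sketch of standard MixUp only, not of NFT itself. The function name `mixup`, the list-based inputs, and the default `alpha` are illustrative assumptions; how NFT actually applies MixUp is not specified in the abstract.

```python
import random


def mixup(x_a, x_b, y_a, y_b, alpha=1.0, rng=random):
    """Blend two samples and their one-hot labels (standard MixUp).

    The mixing weight lam is drawn from Beta(alpha, alpha); alpha=1.0 is an
    assumed default here, not a value taken from the paper.
    """
    lam = rng.betavariate(alpha, alpha)
    # Convex combination of the inputs, element by element.
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    # The same convex combination is applied to the labels.
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x_mix, y_mix, lam
```

Because both inputs and labels are mixed with the same weight, the mixed label remains a valid probability vector whenever the two original labels are.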

SAVE: Spectral-Shift-Aware Adaptation of Image Diffusion Models for Text-guided Video Editing

May 30, 2023

Abstract:Text-to-Image (T2I) diffusion models have achieved remarkable success in synthesizing high-quality images conditioned on text prompts. Recent methods have tried to replicate the success by either training text-to-video (T2V) models on a very large number of text-video pairs or adapting T2I models on text-video pairs independently. Although the latter is computationally less expensive, it still takes a significant amount of time for per-video adaptation. To address this issue, we propose SAVE, a novel spectral-shift-aware adaptation framework, in which we fine-tune the spectral shift of the parameter space instead of the parameters themselves. Specifically, we take the spectral decomposition of the pre-trained T2I weights and only control the change in the corresponding singular values, i.e. spectral shift, while freezing the corresponding singular vectors. To avoid drastic drift from the original T2I weights, we introduce a spectral shift regularizer that confines the spectral shift to be more restricted for large singular values and more relaxed for small singular values. Since we are only dealing with spectral shifts, the proposed method reduces the adaptation time significantly (approx. 10 times) and has fewer resource constraints for training. Such attributes position SAVE to be more suitable for real-world applications, e.g. editing undesirable content during video streaming. We validate the effectiveness of SAVE with an extensive experimental evaluation under different settings, e.g. style transfer, object replacement, privacy preservation, etc.
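
The spectral-shift idea in this abstract can be illustrated with a small NumPy sketch: decompose a weight matrix once, keep the singular vectors fixed, and rebuild the weight from shifted singular values. The function names `adapted_weight` and `shift_penalty` are hypothetical, and the penalty shown (shift squared, weighted by the singular value) is only one assumed way to restrict large singular values more than small ones; the abstract does not give the actual regularizer.

```python
import numpy as np


def adapted_weight(U, s, Vt, delta):
    """Rebuild a weight matrix from frozen singular vectors and shifted singular values."""
    # Only delta would be trainable; U, s and Vt come from the pre-trained weight.
    return U @ np.diag(s + delta) @ Vt


def shift_penalty(s, delta):
    """Assumed regularizer: penalize shifts in proportion to the singular value."""
    # Larger singular values get a larger weight, so their shifts are restricted more.
    return float(np.sum(s * delta ** 2))


# Toy pre-trained weight (random, for illustration only).
rng = np.random.default_rng(0)
W = rng.standard_normal((4, 3))
U, s, Vt = np.linalg.svd(W, full_matrices=False)

delta = np.full_like(s, 0.1)          # one shift parameter per singular value
W_new = adapted_weight(U, s, Vt, delta)
penalty = shift_penalty(s, delta)
```

In this toy case the adapted layer has three trainable numbers (one per singular value) instead of the twelve entries of `W`, which is the source of the parameter saving the abstract describes.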

CSI-Based Data-driven Localization Frameworking using Small-scale Training Datasets in Single-site MIMO Systems

Apr 22, 2023

Abstract:This work presents a data-driven user localization framework for single-site massive Multiple-Input-Multiple-Output (MIMO) systems. The framework is trained on a geo-tagged Channel State Information (CSI) dataset. Unlike the state-of-the-art Convolutional Neural Network (CNN) models, which require large training datasets to perform well, our method is specifically designed to operate with small-scale training datasets. This makes our approach more practical for real-world scenarios, where collecting a large amount of data can be challenging. Our proposed FC-AE-GPR framework combines two components: a Fully-Connected Auto-Encoder (FC-AE) and a Gaussian Process Regression (GPR) model. Our results show that the GPR model outperforms the CNN model when presented with small training datasets. However, the training complexity of GPR models can become an issue when the input sample size is large. To address this, we propose using the FC-AE to reduce the sample size by encoding the CSI before training the GPR model. Although the FC-AE model may require a larger training dataset initially, we demonstrate that the FC-AE is scenario independent. This means that it can be utilized in new and unseen scenarios without prior retraining. Therefore, adapting the FC-AE-GPR model to a new scenario requires only retraining the GPR model with a small training dataset.

C-SFDA: A Curriculum Learning Aided Self-Training Framework for Efficient Source Free Domain Adaptation

Mar 30, 2023

Abstract:Unsupervised domain adaptation (UDA) approaches focus on adapting models trained on a labeled source domain to an unlabeled target domain. UDA methods have a strong assumption that the source data is accessible during adaptation, which may not be feasible in many real-world scenarios due to privacy concerns and resource constraints of devices. In this regard, source-free domain adaptation (SFDA) excels as access to source data is no longer required during adaptation. Recent state-of-the-art (SOTA) methods on SFDA mostly focus on pseudo-label refinement based self-training, which generally suffers from two issues: i) the inevitable occurrence of noisy pseudo-labels that could lead to early training time memorization, ii) the refinement process requires maintaining a memory bank, which creates a significant burden in resource-constrained scenarios. To address these concerns, we propose C-SFDA, a curriculum learning aided self-training framework for SFDA that adapts efficiently and reliably to changes across domains based on selective pseudo-labeling. Specifically, we employ a curriculum learning scheme to promote learning from a restricted amount of pseudo labels selected based on their reliabilities. This simple yet effective step successfully prevents label noise propagation during different stages of adaptation and eliminates the need for costly memory-bank based label refinement. Our extensive experimental evaluations on both image recognition and semantic segmentation tasks confirm the effectiveness of our method. C-SFDA is readily applicable to online test-time domain adaptation and also outperforms previous SOTA methods in this task.
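
Selective pseudo-labeling as described in this abstract can be sketched roughly as follows. This is an illustration under stated assumptions, not the C-SFDA procedure: reliability is taken here to be the maximum softmax probability, and `select_reliable` and `keep_fraction` are hypothetical names. A curriculum would, under this reading, increase `keep_fraction` across adaptation stages.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_reliable(logits_batch, keep_fraction):
    """Keep the most confident fraction of samples as pseudo-labeled data.

    Returns (sample_index, pseudo_label, confidence) tuples, most confident
    first. Confidence = max softmax probability (an assumed reliability score).
    """
    scored = []
    for i, logits in enumerate(logits_batch):
        probs = softmax(logits)
        conf = max(probs)
        scored.append((i, probs.index(conf), conf))
    scored.sort(key=lambda t: t[2], reverse=True)
    k = max(1, int(len(scored) * keep_fraction))  # always keep at least one sample
    return scored[:k]
```

Samples that are not selected are simply left out of the current self-training step, so no memory bank of refined labels is needed in this sketch.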

CNLL: A Semi-supervised Approach For Continual Noisy Label Learning

Apr 21, 2022

Abstract:The task of continual learning requires careful design of algorithms that can tackle catastrophic forgetting. However, the noisy label, which is inevitable in a real-world scenario, seems to exacerbate the situation. While very few studies have addressed the issue of continual learning under noisy labels, long training time and complicated training schemes limit their applications in most cases. In contrast, we propose a simple purification technique to effectively cleanse the online data stream that is both cost-effective and more accurate. After purification, we perform fine-tuning in a semi-supervised fashion that ensures the participation of all available samples. Training in this fashion helps us learn a better representation that results in state-of-the-art (SOTA) performance. Through extensive experimentation on 3 benchmark datasets, MNIST, CIFAR10 and CIFAR100, we show the effectiveness of our proposed approach. We achieve a 24.8% performance gain for CIFAR10 with 20% noise over previous SOTA methods. Our code is publicly available.

RODD: A Self-Supervised Approach for Robust Out-of-Distribution Detection

Apr 13, 2022

Abstract:Recent studies have addressed the concern of detecting and rejecting the out-of-distribution (OOD) samples as a major challenge in the safe deployment of deep learning (DL) models. It is desired that the DL model should only be confident about the in-distribution (ID) data which reinforces the driving principle of the OOD detection. In this paper, we propose a simple yet effective generalized OOD detection method independent of out-of-distribution datasets. Our approach relies on self-supervised feature learning of the training samples, where the embeddings lie on a compact low-dimensional space. Motivated by the recent studies that show self-supervised adversarial contrastive learning helps robustify the model, we empirically show that a pre-trained model with self-supervised contrastive learning yields a better model for uni-dimensional feature learning in the latent space. The method proposed in this work, referred to as RODD, outperforms SOTA detection performance on an extensive suite of benchmark datasets on OOD detection tasks. On the CIFAR-100 benchmarks, RODD achieves a 26.97% lower false-positive rate (FPR@95) compared to SOTA methods.
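
The FPR@95 metric cited in this abstract is commonly computed as the false-positive rate on OOD samples at the threshold where 95% of ID samples are accepted. The sketch below follows that common definition and assumes that a higher score means "more in-distribution"; the function name `fpr_at_tpr` is illustrative, and the exact evaluation protocol of the paper may differ.

```python
import math


def fpr_at_tpr(id_scores, ood_scores, tpr=0.95):
    """False-positive rate on OOD data at the threshold that accepts `tpr` of ID data.

    Assumes higher score = more in-distribution.
    """
    ranked = sorted(id_scores, reverse=True)
    k = math.ceil(tpr * len(ranked))   # number of ID samples that must be accepted
    threshold = ranked[k - 1]          # lowest score among the accepted ID samples
    # An OOD sample at or above the threshold is wrongly accepted as ID.
    false_pos = sum(1 for s in ood_scores if s >= threshold)
    return false_pos / len(ood_scores)
```

A lower value is better: it means fewer OOD samples slip through while the detector still accepts 95% of in-distribution inputs.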

RF Signal Transformation and Classification using Deep Neural Networks

Apr 06, 2022

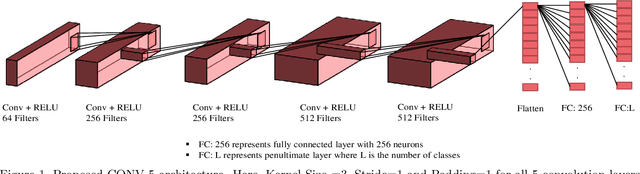

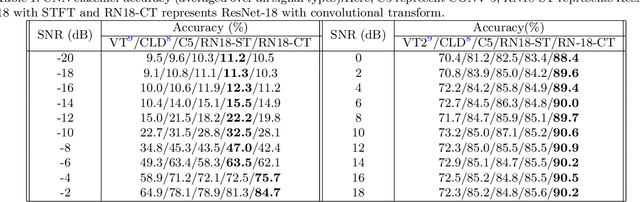

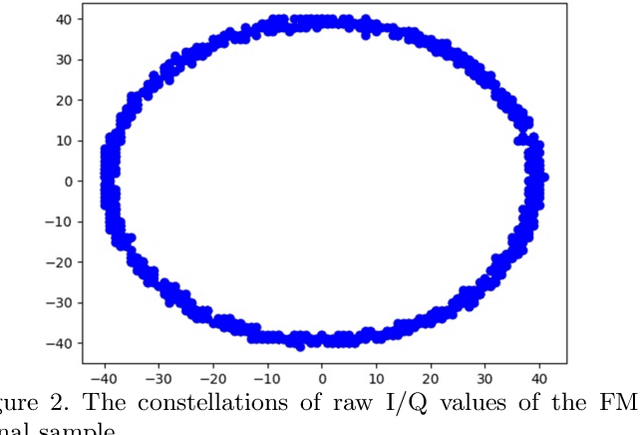

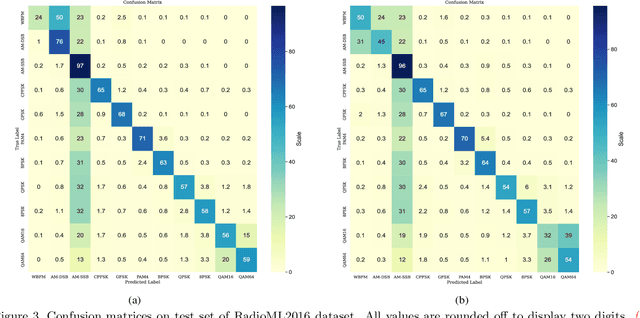

Abstract:Deep neural networks (DNNs) designed for computer vision and natural language processing tasks cannot be directly applied to the radio frequency (RF) datasets. To address this challenge, we propose to convert the raw RF data to data types that are suitable for off-the-shelf DNNs by introducing a convolutional transform technique. In addition, we propose a simple 5-layer convolutional neural network architecture (CONV-5) that can operate with raw RF I/Q data without any transformation. Further, we put forward an RF dataset, referred to as RF1024, to facilitate future RF research. RF1024 consists of 8 different RF modulation classes with each class having 1000/200 training/test samples. Each sample of the RF1024 dataset contains 1024 complex I/Q values. Lastly, the experiments are performed on the RadioML2016 and RF1024 datasets to demonstrate the improved classification performance.
