Siddharth Gururani

Cosmos-Reason1: From Physical Common Sense To Embodied Reasoning

Mar 18, 2025

Abstract: Physical AI systems need to perceive, understand, and perform complex actions in the physical world. In this paper, we present the Cosmos-Reason1 models that can understand the physical world and generate appropriate embodied decisions (e.g., next step action) in natural language through long chain-of-thought reasoning processes. We begin by defining key capabilities for Physical AI reasoning, with a focus on physical common sense and embodied reasoning. To represent physical common sense, we use a hierarchical ontology that captures fundamental knowledge about space, time, and physics. For embodied reasoning, we rely on a two-dimensional ontology that generalizes across different physical embodiments. Building on these capabilities, we develop two multimodal large language models, Cosmos-Reason1-8B and Cosmos-Reason1-56B. We curate data and train our models in four stages: vision pre-training, general supervised fine-tuning (SFT), Physical AI SFT, and Physical AI reinforcement learning (RL) as the post-training. To evaluate our models, we build comprehensive benchmarks for physical common sense and embodied reasoning according to our ontologies. Evaluation results show that Physical AI SFT and reinforcement learning bring significant improvements. To facilitate the development of Physical AI, we will make our code and pre-trained models available under the NVIDIA Open Model License at https://github.com/nvidia-cosmos/cosmos-reason1.

Cosmos World Foundation Model Platform for Physical AI

Jan 07, 2025

Abstract: Physical AI needs to be trained digitally first. It needs a digital twin of itself, the policy model, and a digital twin of the world, the world model. In this paper, we present the Cosmos World Foundation Model Platform to help developers build customized world models for their Physical AI setups. We position a world foundation model as a general-purpose world model that can be fine-tuned into customized world models for downstream applications. Our platform covers a video curation pipeline, pre-trained world foundation models, examples of post-training of pre-trained world foundation models, and video tokenizers. To help Physical AI builders solve the most critical problems of our society, we make our platform open-source and our models open-weight with permissive licenses available via https://github.com/NVIDIA/Cosmos.

Edify Image: High-Quality Image Generation with Pixel Space Laplacian Diffusion Models

Nov 11, 2024

Abstract: We introduce Edify Image, a family of diffusion models capable of generating photorealistic image content with pixel-perfect accuracy. Edify Image utilizes cascaded pixel-space diffusion models trained using a novel Laplacian diffusion process, in which image signals at different frequency bands are attenuated at varying rates. Edify Image supports a wide range of applications, including text-to-image synthesis, 4K upsampling, ControlNets, 360 HDR panorama generation, and finetuning for image customization.

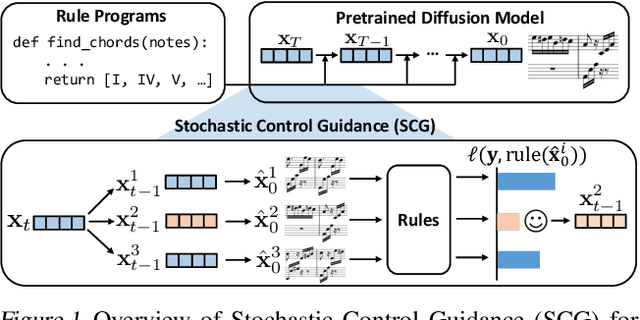

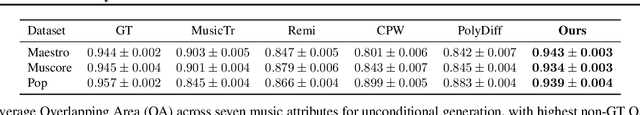

Symbolic Music Generation with Non-Differentiable Rule Guided Diffusion

Feb 23, 2024

Abstract: We study the problem of symbolic music generation (e.g., generating piano rolls), with a technical focus on non-differentiable rule guidance. Musical rules are often expressed in symbolic form on note characteristics, such as note density or chord progression. Many of these rules are non-differentiable, which poses a challenge when using them for guided diffusion. We propose Stochastic Control Guidance (SCG), a novel guidance method that requires only forward evaluation of rule functions and works with pre-trained diffusion models in a plug-and-play way, thus achieving training-free guidance for non-differentiable rules for the first time. Additionally, we introduce a latent diffusion architecture for symbolic music generation with high time resolution, which can be composed with SCG in a plug-and-play fashion. Compared to standard strong baselines in symbolic music generation, this framework demonstrates marked advancements in music quality and rule-based controllability, outperforming current state-of-the-art generators in a variety of settings. For detailed demonstrations, code and model checkpoints, please visit our project website: https://scg-rule-guided-music.github.io/.

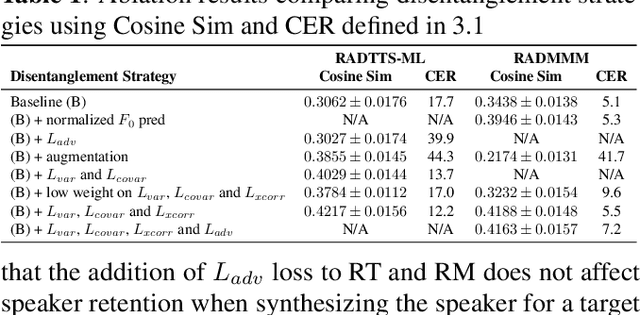

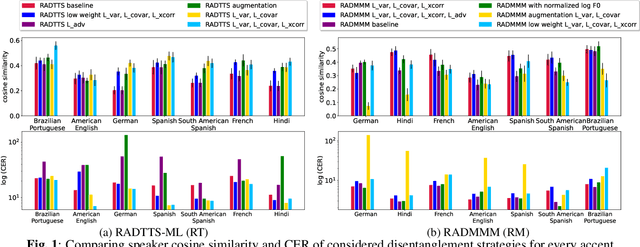

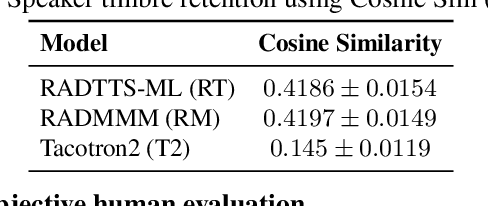

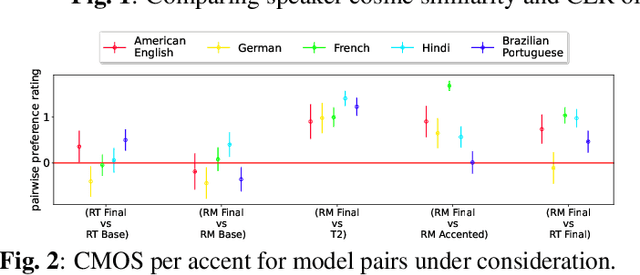

Multilingual Multiaccented Multispeaker TTS with RADTTS

Jan 24, 2023

Abstract: We work to create a multilingual speech synthesis system which can generate speech with the proper accent while retaining the characteristics of an individual voice. This is challenging because it is expensive to obtain bilingual training data in multiple languages, and the lack of such data leads to strong correlations that entangle speaker, language, and accent, resulting in poor transfer capabilities. To overcome this, we present a multilingual, multiaccented, multispeaker speech synthesis model based on RADTTS with explicit control over accent, language, speaker and fine-grained $F_0$ and energy features. Our proposed model does not rely on bilingual training data. We demonstrate an ability to control synthesized accent for any speaker in an open-source dataset comprising 7 accents. Human subjective evaluation demonstrates that our model can better retain a speaker's voice and accent quality than controlled baselines while synthesizing fluent speech in all target languages and accents in our dataset.

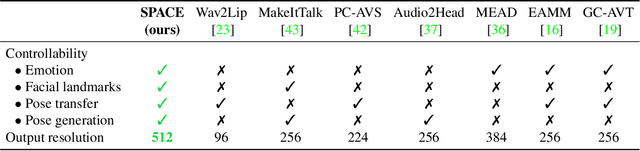

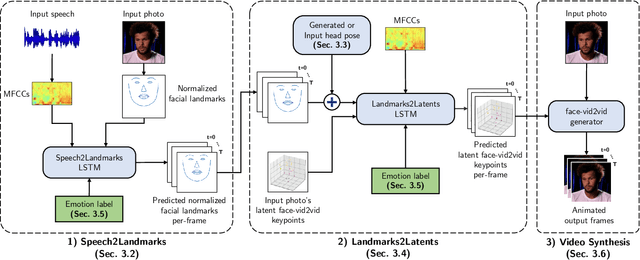

SPACE: Speech-driven Portrait Animation with Controllable Expression

Dec 07, 2022

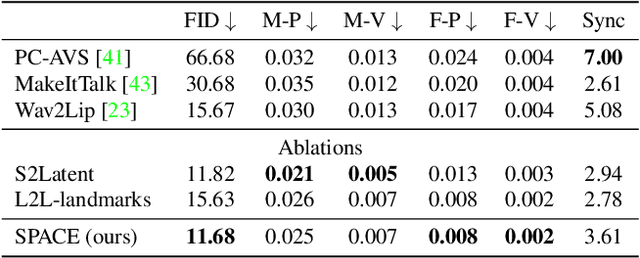

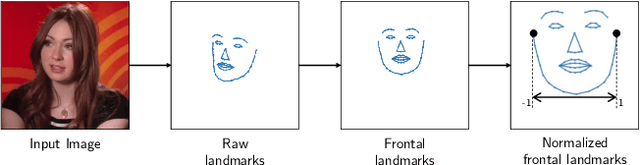

Abstract: Animating portraits using speech has received growing attention in recent years, with various creative and practical use cases. An ideal generated video should have good lip sync with the audio, natural facial expressions and head motions, and high frame quality. In this work, we present SPACE, which uses speech and a single image to generate high-resolution, expressive videos with realistic head pose, without requiring a driving video. It uses a multi-stage approach, combining the controllability of facial landmarks with the high-quality synthesis power of a pretrained face generator. SPACE also allows for the control of emotions and their intensities. Our method outperforms prior methods in objective metrics for image quality and facial motions and is strongly preferred by users in pair-wise comparisons. The project website is available at https://deepimagination.cc/SPACE/

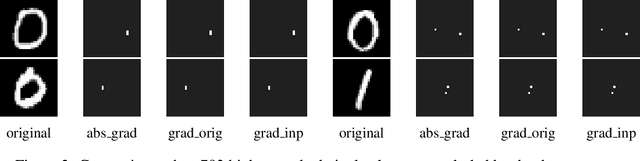

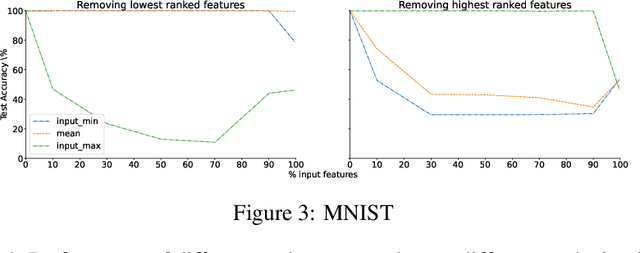

Anomalous behaviour in loss-gradient based interpretability methods

Jul 15, 2022

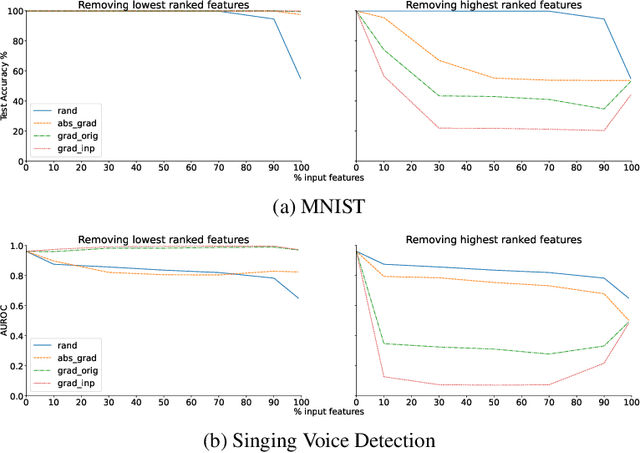

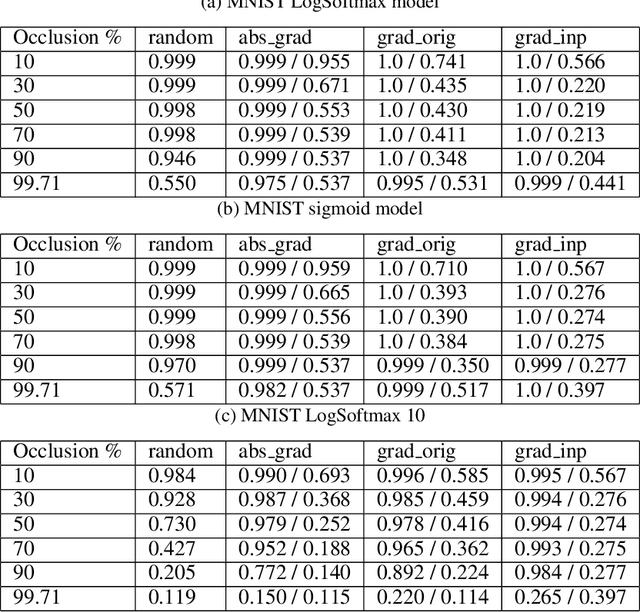

Abstract: Loss-gradients are used to interpret the decision-making process of deep learning models. In this work, we evaluate loss-gradient based attribution methods by occluding parts of the input and comparing the performance of the occluded input to the original input. We observe that the occluded input has better performance than the original across the test dataset under certain conditions. Similar behaviour is observed in sound and image recognition tasks. We explore different loss-gradient attribution methods, occlusion levels and replacement values to explain the phenomenon of performance improvement under occlusion.

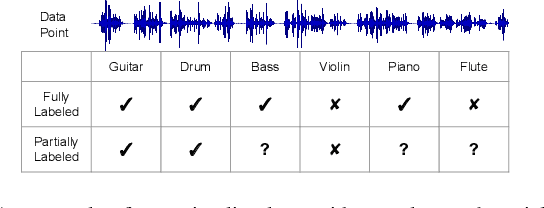

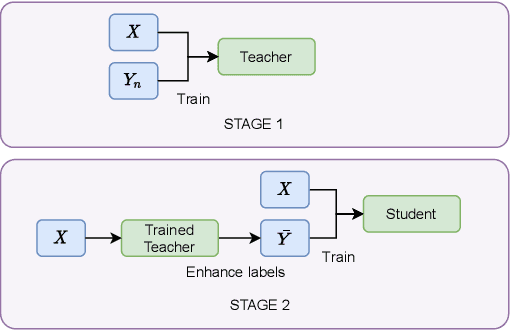

Semi-Supervised Audio Classification with Partially Labeled Data

Nov 24, 2021

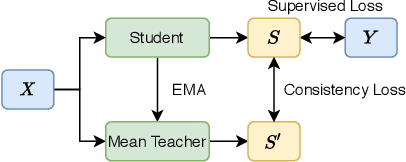

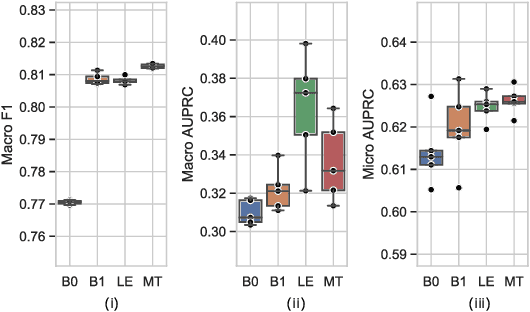

Abstract: Audio classification has seen great progress with the increasing availability of large-scale datasets. These large datasets, however, are often only partially labeled, as collecting full annotations is a tedious and expensive process. This paper presents two semi-supervised methods capable of learning with missing labels and evaluates them on two publicly available, partially labeled datasets. The first method relies on label enhancement by a two-stage teacher-student learning process, while the second method utilizes the mean teacher semi-supervised learning algorithm. Our results demonstrate the impact of improperly handling missing labels and compare the benefits of using different strategies leveraging data with few labels. Methods capable of learning with partially labeled data have the potential to improve models for audio classification by utilizing even larger amounts of data without the need for complete annotations.

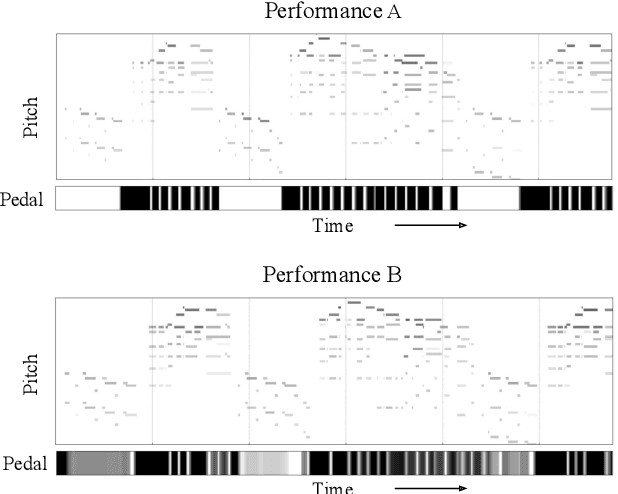

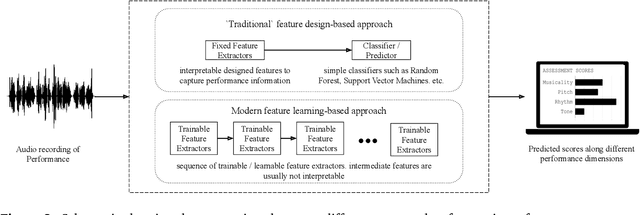

An Interdisciplinary Review of Music Performance Analysis

Apr 19, 2021

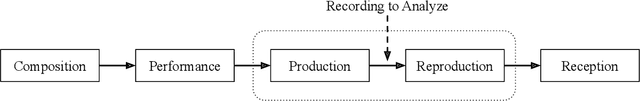

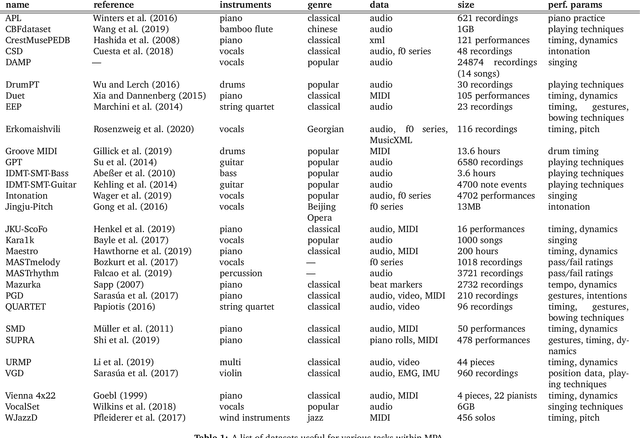

Abstract: A musical performance renders an acoustic realization of a musical score or other representation of a composition. Different performances of the same composition may vary in terms of performance parameters such as timing or dynamics, and these variations may have a major impact on how a listener perceives the music. The analysis of music performance has traditionally been a peripheral topic for the MIR research community, where often a single audio recording is used as representative of a musical work. This paper surveys the field of Music Performance Analysis (MPA) from several perspectives including the measurement of performance parameters, the relation of those parameters to the actions and intentions of a performer or perceptual effects on a listener, and finally the assessment of musical performance. This paper also discusses MPA as it relates to MIR, pointing out opportunities for collaboration and future research in both areas.

* arXiv admin note: substantial text overlap with arXiv:1907.00178

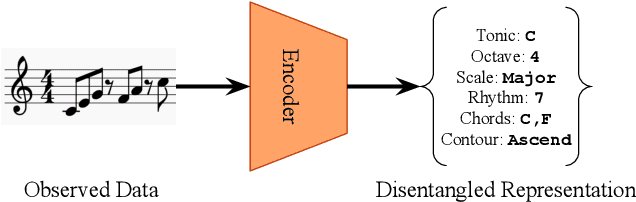

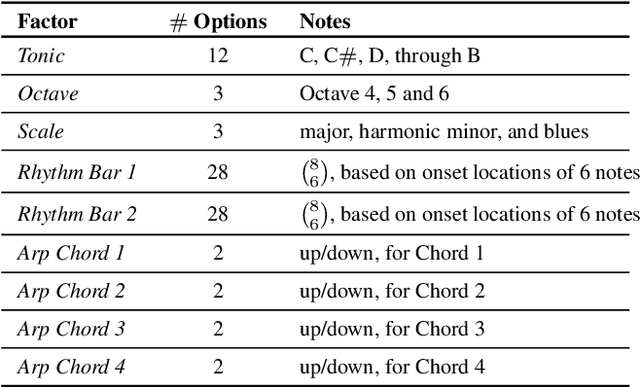

dMelodies: A Music Dataset for Disentanglement Learning

Jul 29, 2020

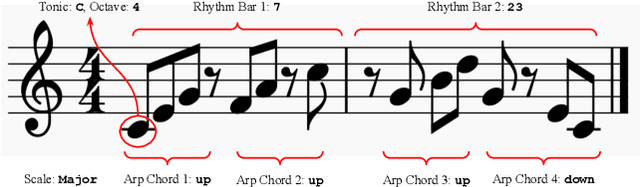

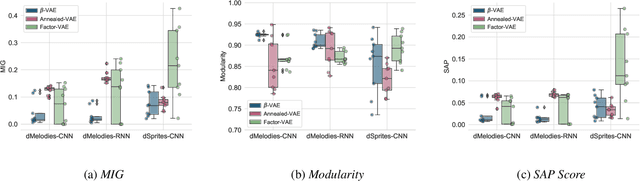

Abstract: Representation learning focused on disentangling the underlying factors of variation in given data has become an important area of research in machine learning. However, most of the studies in this area have relied on datasets from the computer vision domain and thus have not been readily extended to music. In this paper, we present a new symbolic music dataset that will help researchers working on disentanglement problems demonstrate the efficacy of their algorithms on diverse domains. This will also provide a means for evaluating algorithms specifically designed for music. To this end, we create a dataset comprising 2-bar monophonic melodies where each melody is the result of a unique combination of nine latent factors that span ordinal, categorical, and binary types. The dataset is large enough (approx. 1.3 million data points) to train and test deep networks for disentanglement learning. In addition, we present benchmarking experiments using popular unsupervised disentanglement algorithms on this dataset and compare the results with those obtained on an image-based dataset.
