Robert Wang

emg2pose: A Large and Diverse Benchmark for Surface Electromyographic Hand Pose Estimation

Dec 02, 2024

Abstract: Hands are the primary means through which humans interact with the world. Reliable and always-available hand pose inference could yield new and intuitive control schemes for human-computer interactions, particularly in virtual and augmented reality. Computer vision is effective but requires one or multiple cameras and can struggle with occlusions, limited field of view, and poor lighting. Wearable wrist-based surface electromyography (sEMG) presents a promising alternative as an always-available modality sensing muscle activities that drive hand motion. However, sEMG signals are strongly dependent on user anatomy and sensor placement, and existing sEMG models have required hundreds of users and device placements to effectively generalize. To facilitate progress on sEMG pose inference, we introduce the emg2pose benchmark, the largest publicly available dataset of high-quality hand pose labels and wrist sEMG recordings. emg2pose contains 2 kHz, 16-channel sEMG and pose labels from a 26-camera motion capture rig for 193 users, 370 hours, and 29 stages with diverse gestures, a scale comparable to vision-based hand pose datasets. We provide competitive baselines and challenging tasks evaluating real-world generalization scenarios: held-out users, sensor placements, and stages. emg2pose provides the machine learning community a platform for exploring complex generalization problems, holding potential to significantly enhance the development of sEMG-based human-computer interactions.

HOT3D: Hand and Object Tracking in 3D from Egocentric Multi-View Videos

Nov 28, 2024

Abstract: We introduce HOT3D, a publicly available dataset for egocentric hand and object tracking in 3D. The dataset offers over 833 minutes (more than 3.7M images) of multi-view RGB/monochrome image streams showing 19 subjects interacting with 33 diverse rigid objects, multi-modal signals such as eye gaze or scene point clouds, as well as comprehensive ground-truth annotations including 3D poses of objects, hands, and cameras, and 3D models of hands and objects. In addition to simple pick-up/observe/put-down actions, HOT3D contains scenarios resembling typical actions in a kitchen, office, and living room environment. The dataset is recorded by two head-mounted devices from Meta: Project Aria, a research prototype of light-weight AR/AI glasses, and Quest 3, a production VR headset sold in millions of units. Ground-truth poses were obtained by a professional motion-capture system using small optical markers attached to hands and objects. Hand annotations are provided in the UmeTrack and MANO formats and objects are represented by 3D meshes with PBR materials obtained by an in-house scanner. In our experiments, we demonstrate the effectiveness of multi-view egocentric data for three popular tasks: 3D hand tracking, 6DoF object pose estimation, and 3D lifting of unknown in-hand objects. The evaluated multi-view methods, whose benchmarking is uniquely enabled by HOT3D, significantly outperform their single-view counterparts.

Experimental Design Using Interlacing Polynomials

Oct 15, 2024

Abstract: We present a unified deterministic approach for experimental design problems using the method of interlacing polynomials. Our framework recovers the best-known approximation guarantees for the well-studied D/A/E-design problems with simple analysis. Furthermore, we obtain an improved non-trivial approximation guarantee for E-design in the challenging small budget regime. Additionally, our approach provides an optimal approximation guarantee for a generalized ratio objective that generalizes both D-design and A-design.
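
For readers unfamiliar with the D/A/E-design objectives named in this abstract, the block below states them in their usual textbook form. The abstract itself does not give definitions, so the notation ($v_i$, $k$, $S$, $M_S$) is an assumption for illustration, and the paper may use a different normalization or allow repeated selections.

```latex
% Assumed setup: vectors v_1, ..., v_n in R^d and a budget k.
% Choose a subset S of at most k vectors and form the information matrix
\[
  M_S \;=\; \sum_{i \in S} v_i v_i^{\top}, \qquad |S| \le k .
\]
% The three classical objectives are then
\[
  \text{D-design:}\ \max_{S}\ \det(M_S)^{1/d}, \qquad
  \text{A-design:}\ \min_{S}\ \operatorname{tr}\!\left(M_S^{-1}\right), \qquad
  \text{E-design:}\ \max_{S}\ \lambda_{\min}(M_S).
\]
```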

TouchInsight: Uncertainty-aware Rapid Touch and Text Input for Mixed Reality from Egocentric Vision

Oct 08, 2024

Abstract: While passive surfaces offer numerous benefits for interaction in mixed reality, reliably detecting touch input solely from head-mounted cameras has been a long-standing challenge. Camera specifics, hand self-occlusion, and rapid movements of both head and fingers introduce considerable uncertainty about the exact location of touch events. Existing methods have thus not been capable of achieving the performance needed for robust interaction. In this paper, we present a real-time pipeline that detects touch input from all ten fingers on any physical surface, purely based on egocentric hand tracking. Our method TouchInsight comprises a neural network to predict the moment of a touch event, the finger making contact, and the touch location. TouchInsight represents locations through a bivariate Gaussian distribution to account for uncertainties due to sensing inaccuracies, which we resolve through contextual priors to accurately infer intended user input. We first evaluated our method offline and found that it locates input events with a mean error of 6.3 mm, and accurately detects touch events (F1=0.99) and identifies the finger used (F1=0.96). In an online evaluation, we then demonstrate the effectiveness of our approach for a core application of dexterous touch input: two-handed text entry. In our study, participants typed 37.0 words per minute with an uncorrected error rate of 2.9% on average.
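
The idea of resolving an uncertain touch location with a contextual prior can be sketched as a small Bayesian update. This is a minimal illustration, not the TouchInsight pipeline: the function names, the key layout, and the form of the prior are all hypothetical, and the abstract does not specify how the paper's priors are built.

```python
import math


def gaussian_pdf_2d(point, mean, cov):
    """Density of a bivariate Gaussian at `point` (closed-form 2x2 inverse)."""
    (x, y), (mx, my) = point, mean
    (sxx, sxy), (_, syy) = cov
    det = sxx * syy - sxy * sxy
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    dx, dy = x - mx, y - my
    # Squared Mahalanobis distance, using inverse = [[syy, -sxy], [-sxy, sxx]] / det
    m = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
    return math.exp(-0.5 * m) / (2.0 * math.pi * math.sqrt(det))


def key_posterior(touch_mean, touch_cov, key_centers, prior):
    """Combine a Gaussian touch estimate with a prior over candidate keys.

    `key_centers` maps key -> (x, y) and `prior` maps key -> probability;
    both are hypothetical inputs chosen for this sketch.
    """
    scores = {
        key: prior[key] * gaussian_pdf_2d(center, touch_mean, touch_cov)
        for key, center in key_centers.items()
    }
    total = sum(scores.values())
    return {key: score / total for key, score in scores.items()}


# Example: an ambiguous touch halfway between two keys (units: mm, assumed).
keys = {"w": (0.0, 0.0), "e": (19.0, 0.0)}
cov = ((36.0, 0.0), (0.0, 36.0))
posterior = key_posterior((9.5, 0.0), cov, keys, {"w": 0.2, "e": 0.8})
```

When the touch is equidistant from both keys, the likelihoods cancel and the prior alone decides; when the prior is uniform, the nearer key wins.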

Introducing HOT3D: An Egocentric Dataset for 3D Hand and Object Tracking

Jun 13, 2024

Abstract: We introduce HOT3D, a publicly available dataset for egocentric hand and object tracking in 3D. The dataset offers over 833 minutes (more than 3.7M images) of multi-view RGB/monochrome image streams showing 19 subjects interacting with 33 diverse rigid objects, multi-modal signals such as eye gaze or scene point clouds, as well as comprehensive ground-truth annotations including 3D poses of objects, hands, and cameras, and 3D models of hands and objects. In addition to simple pick-up/observe/put-down actions, HOT3D contains scenarios resembling typical actions in a kitchen, office, and living room environment. The dataset is recorded by two head-mounted devices from Meta: Project Aria, a research prototype of light-weight AR/AI glasses, and Quest 3, a production VR headset sold in millions of units. Ground-truth poses were obtained by a professional motion-capture system using small optical markers attached to hands and objects. Hand annotations are provided in the UmeTrack and MANO formats and objects are represented by 3D meshes with PBR materials obtained by an in-house scanner. We aim to accelerate research on egocentric hand-object interaction by making the HOT3D dataset publicly available and by co-organizing public challenges on the dataset at ECCV 2024. The dataset can be downloaded from the project website: https://facebookresearch.github.io/hot3d/.

Analysis of Corrected Graph Convolutions

May 22, 2024

Abstract: Machine learning for node classification on graphs is a prominent area driven by applications such as recommendation systems. State-of-the-art models often use multiple graph convolutions on the data, as empirical evidence suggests they can enhance performance. However, it has been shown empirically and theoretically that too many graph convolutions can degrade performance significantly, a phenomenon known as oversmoothing. In this paper, we provide a rigorous theoretical analysis, based on the contextual stochastic block model (CSBM), of the performance of vanilla graph convolution from which we remove the principal eigenvector to avoid oversmoothing. We perform a spectral analysis for $k$ rounds of corrected graph convolutions, and we provide results for partial and exact classification. For partial classification, we show that each round of convolution can reduce the misclassification error exponentially up to a saturation level, after which performance does not worsen. For exact classification, we show that the separability threshold can be improved exponentially up to $O({\log{n}}/{\log\log{n}})$ corrected convolutions.
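
Removing the principal eigenvector from a graph convolution can be sketched in a few lines. The version below assumes the symmetric normalization $D^{-1/2} A D^{-1/2}$, whose top eigenvector on a connected graph is proportional to $\sqrt{\deg}$; the paper may use a different normalization, and the function name and interface are hypothetical.

```python
import math


def corrected_graph_convolution(adj, x, rounds=1):
    """Apply `rounds` of normalized graph convolution to the feature vector `x`,
    projecting out the principal eigenvector at each round.

    `adj` is a symmetric adjacency matrix given as a list of lists.
    Illustrative sketch only; the normalization is an assumption.
    """
    n = len(adj)
    deg = [sum(row) for row in adj]
    if any(d == 0 for d in deg):
        raise ValueError("every node needs at least one edge")
    total = sum(deg)
    # Unit-norm top eigenvector of D^{-1/2} A D^{-1/2} (eigenvalue 1).
    v = [math.sqrt(d / total) for d in deg]
    y = list(x)
    for _ in range(rounds):
        # Plain convolution step: z = D^{-1/2} A D^{-1/2} y
        z = [
            sum(adj[i][j] * y[j] / math.sqrt(deg[i] * deg[j]) for j in range(n))
            for i in range(n)
        ]
        # Correction: subtract the component of y along the principal eigenvector.
        proj = sum(vi * yi for vi, yi in zip(v, y))
        y = [zi - proj * vi for zi, vi in zip(z, v)]
    return y


# Example: a 3-node path graph with one scalar feature per node.
path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
smoothed = corrected_graph_convolution(path, [1.0, 0.0, -1.0], rounds=2)
```

Because the dominant direction is subtracted at every round, repeated application no longer collapses all features onto that single vector, which is the oversmoothing effect the abstract describes.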

Fast Algorithms for Directed Graph Partitioning Using Flows and Reweighted Eigenvalues

Jun 15, 2023

Abstract: We consider a new semidefinite programming relaxation for directed edge expansion, which is obtained by adding triangle inequalities to the reweighted eigenvalue formulation. Applying the matrix multiplicative weight update method to this relaxation, we derive almost linear-time algorithms to achieve an $O(\sqrt{\log{n}})$-approximation and a Cheeger-type guarantee for directed edge expansion, as well as an improved cut-matching game for directed graphs. This provides a primal-dual flow-based framework to obtain the best known algorithms for directed graph partitioning. The same approach also works for vertex expansion and for hypergraphs, providing a simple and unified approach to achieve the best known results for different expansion problems and different algorithmic techniques.

Experimental Design for Any $p$-Norm

May 03, 2023

Abstract: We consider a general $p$-norm objective for experimental design problems that captures some well-studied objectives (D/A/E-design) as special cases. We prove that a randomized local search approach provides a unified algorithm to solve this problem for all $p$. This provides the first approximation algorithm for the general $p$-norm objective, and a nice interpolation of the best known bounds of the special cases.

Cheeger Inequalities for Directed Graphs and Hypergraphs Using Reweighted Eigenvalues

Nov 17, 2022

Abstract: We derive Cheeger inequalities for directed graphs and hypergraphs using the reweighted eigenvalue approach that was recently developed for vertex expansion in undirected graphs [OZ22,KLT22,JPV22]. The goal is to develop a new spectral theory for directed graphs and an alternative spectral theory for hypergraphs. The first main result is a Cheeger inequality relating the vertex expansion $\vec{\psi}(G)$ of a directed graph $G$ to the vertex-capacitated maximum reweighted second eigenvalue $\vec{\lambda}_2^{v*}$: \[ \vec{\lambda}_2^{v*} \lesssim \vec{\psi}(G) \lesssim \sqrt{\vec{\lambda}_2^{v*} \cdot \log (\Delta/\vec{\lambda}_2^{v*})}. \] This provides a combinatorial characterization of the fastest mixing time of a directed graph by vertex expansion, and builds a new connection between reweighted eigenvalues, vertex expansion, and fastest mixing time for directed graphs. The second main result is a stronger Cheeger inequality relating the edge conductance $\vec{\phi}(G)$ of a directed graph $G$ to the edge-capacitated maximum reweighted second eigenvalue $\vec{\lambda}_2^{e*}$: \[ \vec{\lambda}_2^{e*} \lesssim \vec{\phi}(G) \lesssim \sqrt{\vec{\lambda}_2^{e*} \cdot \log (1/\vec{\lambda}_2^{e*})}. \] This provides a certificate for a directed graph to be an expander and a spectral algorithm to find a sparse cut in a directed graph, playing a role similar to that of Cheeger's inequality in certifying graph expansion and in the spectral partitioning algorithm for undirected graphs. We also use this reweighted eigenvalue approach to derive the improved Cheeger inequality for directed graphs, and furthermore to derive several Cheeger inequalities for hypergraphs that match and improve the existing results in [Lou15,CLTZ18]. These results support the view that the reweighted eigenvalue approach provides a unifying way to lift the spectral theory for undirected graphs to more general settings.

UmeTrack: Unified multi-view end-to-end hand tracking for VR

Oct 31, 2022

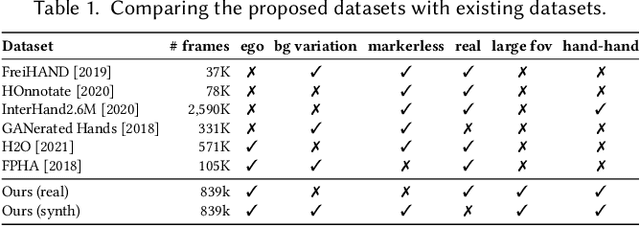

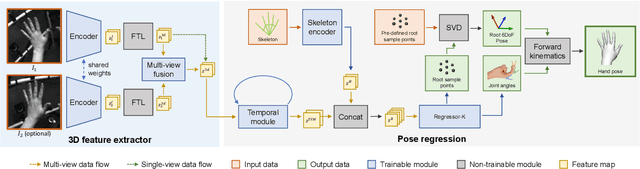

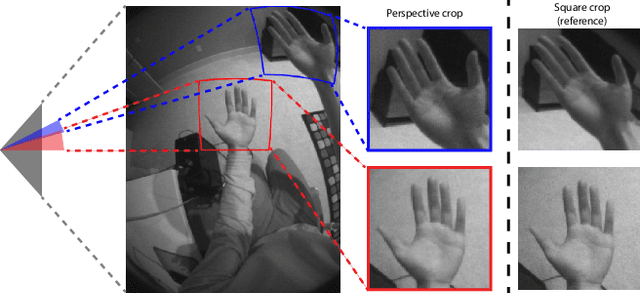

Abstract: Real-time tracking of 3D hand pose in world space is a challenging problem and plays an important role in VR interaction. Existing work in this space is limited to either producing root-relative (versus world space) 3D pose or relying on multiple stages such as generating heatmaps and kinematic optimization to obtain 3D pose. Moreover, the typical VR scenario, which involves multi-view tracking from wide field-of-view (FoV) cameras, is seldom addressed by these methods. In this paper, we present a unified end-to-end differentiable framework for multi-view, multi-frame hand tracking that directly predicts 3D hand pose in world space. We demonstrate the benefits of end-to-end differentiability by extending our framework with downstream tasks such as jitter reduction and pinch prediction. To demonstrate the efficacy of our model, we further present a new large-scale egocentric hand pose dataset that consists of both real and synthetic data. Experiments show that our system trained on this dataset handles various challenging interactive motions, and has been successfully applied to real-time VR applications.
