Quan Yu

Sun Yat-sen University, Qiannan Normal College for Nationalities

Spectral-Spatial Extraction through Layered Tensor Decomposition for Hyperspectral Anomaly Detection

Mar 07, 2025

Abstract:Low rank tensor representation (LRTR) methods are very useful for hyperspectral anomaly detection (HAD). To overcome their limitations, namely that they often overlook spectral anomalies and rely on large-scale matrix singular value decomposition, we first apply non-negative matrix factorization (NMF) to alleviate spectral dimensionality redundancy and extract spectral anomalies, and then employ LRTR to extract spatial anomalies while mitigating spatial redundancy, yielding a highly efficient layered tensor decomposition (LTD) framework for HAD. An iterative algorithm based on proximal alternating minimization is developed to solve the proposed LTD model, with convergence guarantees provided. Moreover, we introduce a rank reduction strategy with a validation mechanism that adaptively reduces data size while preventing excessive reduction. Theoretically, we rigorously establish the equivalence between the tensor tubal rank and tensor group sparsity regularization (TGSR) and, under mild conditions, demonstrate that the relaxed formulation of TGSR shares the same global minimizers and optimal values as its original counterpart. Experimental results on the Airport-Beach-Urban and MVTec datasets demonstrate that our approach outperforms state-of-the-art methods in the HAD task.
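The spectral step described in the abstract, using NMF to compress the band dimension, can be illustrated with a minimal sketch. It assumes the hyperspectral cube has been unfolded into a pixels-by-bands matrix and uses the standard Lee-Seung multiplicative updates, which are a swapped-in textbook technique and not the proximal alternating minimization algorithm of the paper. All names (`nmf`, `pixels`, `endmembers`, `abundances`) and the toy data are hypothetical.

```python
import random

def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def frobenius_error(x, w, h):
    """Frobenius norm of X - W H."""
    wh = matmul(w, h)
    return sum((xi - yi) ** 2 for rx, ry in zip(x, wh) for xi, yi in zip(rx, ry)) ** 0.5

def nmf(x, rank, iters=200, eps=1e-9, seed=0):
    """Factor a non-negative matrix X (pixels x bands) as W (pixels x rank) times H (rank x bands)."""
    rng = random.Random(seed)
    n, m = len(x), len(x[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iters):
        # H <- H * (W^T X) / (W^T W H), element-wise
        wt = transpose(w)
        num, den = matmul(wt, x), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)] for i in range(rank)]
        # W <- W * (X H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num, den = matmul(x, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)] for i in range(n)]
    return w, h

# Toy data (assumption): 5 pixels, 6 bands, mixed from 2 non-negative spectra.
endmembers = [[1.0, 0.8, 0.2, 0.1, 0.0, 0.3],
              [0.1, 0.2, 0.9, 1.0, 0.7, 0.2]]
abundances = [[1.0, 0.0], [0.7, 0.3], [0.5, 0.5], [0.2, 0.8], [0.0, 1.0]]
pixels = matmul(abundances, endmembers)

w, h = nmf(pixels, rank=2)
print("reconstruction error:", frobenius_error(pixels, w, h))
```

In this sketch each pixel is described by 2 coefficients in `w` instead of 6 band values, which is the kind of spectral redundancy reduction the abstract refers to; a later low-rank tensor step would then operate on the smaller representation.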

Understanding is Compression

Jun 24, 2024

Abstract:We have previously shown that all understanding or learning is compression, under reasonable assumptions. In principle, better understanding of data should improve data compression. Traditional compression methodologies focus on encoding frequencies or some other computable properties of data. Large language models approximate the uncomputable Solomonoff distribution, opening up a whole new avenue to justify our theory. Under the new uncomputable paradigm, we present LMCompress based on the understanding of data using large models. LMCompress has significantly better lossless compression ratios than all other lossless data compression methods, doubling the compression ratios of JPEG-XL for images, FLAC for audio, and H264 for video, and tripling or quadrupling the compression ratio of bz2 for text. The better a large model understands the data, the better LMCompress compresses.
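The link between prediction quality and compression can be made concrete with a small sketch. An arithmetic coder driven by a predictive model approaches a code length of the sum of -log2 p(symbol | history), so a model that predicts the data better yields fewer bits. The example below is an illustration under stated assumptions: it substitutes a simple adaptive byte-frequency model for a large model, and the function names are hypothetical rather than part of LMCompress.

```python
import math

def ideal_code_length_bits(data: bytes, predict) -> float:
    """Sum of -log2 p(symbol | history), the length an arithmetic coder approaches."""
    total = 0.0
    for i, symbol in enumerate(data):
        probs = predict(data[:i])
        total += -math.log2(probs[symbol])
    return total

def uniform_model(history: bytes):
    """Knows nothing about the data: every byte value is equally likely."""
    return [1.0 / 256] * 256

def adaptive_frequency_model(history: bytes):
    """Stand-in for a learned model: Laplace-smoothed byte counts of the history so far."""
    counts = [1] * 256
    for b in history:
        counts[b] += 1
    total = len(history) + 256
    return [c / total for c in counts]

text = b"abracadabra " * 20
uniform_bits = ideal_code_length_bits(text, uniform_model)
adaptive_bits = ideal_code_length_bits(text, adaptive_frequency_model)
print(f"uniform: {uniform_bits:.0f} bits, adaptive: {adaptive_bits:.0f} bits")
```

Replacing `adaptive_frequency_model` with a stronger predictor lowers the total further, which is the sense in which better understanding of the data gives better compression.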

Confocal structured illumination microscopy

May 24, 2024

Abstract:Confocal microscopy, a critical advancement in optical imaging, is widely applied because of its excellent anti-noise ability. However, it has low imaging efficiency and can cause phototoxicity. Optical-sectioning structured illumination microscopy (OS-SIM) can overcome the limitations of confocal microscopy but still faces challenges in imaging depth and signal-to-noise ratio (SNR). We introduce the concept of confocal imaging into OS-SIM and propose confocal structured illumination microscopy (CSIM) to enhance the imaging performance of OS-SIM. CSIM exploits the principle of dual photography to reconstruct a dual image from each pixel of the camera. The reconstructed dual image is equivalent to the image obtained by using the spatial light modulator (SLM) as a virtual camera, enabling the separation of the conjugate and non-conjugate signals recorded by the camera pixel. Once the conjugate relationship between the camera and the SLM is established, we can reject the non-conjugate signals by extracting the conjugate signal from each dual image to reconstruct a confocal image. We have constructed the theoretical framework of CSIM. Optical-sectioning experimental results demonstrate that CSIM can reconstruct images with superior SNR and greater imaging depth compared with existing OS-SIM. CSIM is expected to expand the application scope of OS-SIM.

Intelligent Credit Limit Management in Consumer Loans Based on Causal Inference

Jul 10, 2020

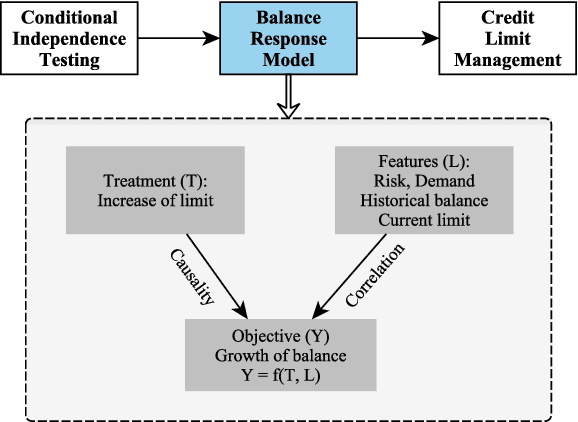

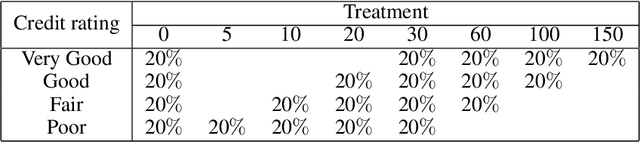

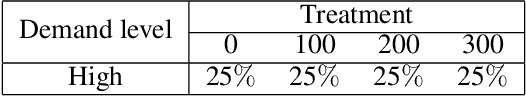

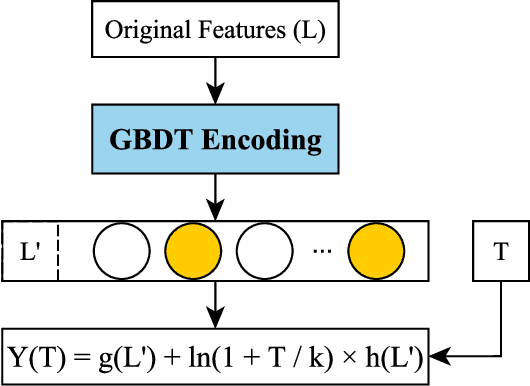

Abstract:Consumer loans play an important role in promoting economic growth, and credit cards are the most popular form of consumer loan. One of the most essential parts of the credit card business is credit limit management. Traditionally, credit limits are adjusted based on limited heuristic strategies, which are developed by experienced professionals. In this paper, we present a data-driven approach to manage the credit limit intelligently. First, conditional independence testing is conducted to acquire the data for building models. Based on these testing data, a response model is then built to measure the heterogeneous treatment effect of increasing credit limits (i.e. treatments) for different customers, who are described by several control variables (i.e. features). In order to incorporate the diminishing marginal effect, a carefully selected log transformation is applied to the treatment variable. Moreover, the model's capability can be further enhanced by applying a non-linear transformation to the features via GBDT encoding. Finally, a well-designed metric is proposed to properly measure the performance of the compared methods. The experimental results demonstrate the effectiveness of the proposed approach.
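The role of the log transformation can be shown with a small sketch. The abstract does not give the exact transformation, so the form log(1 + t) used here is an assumption, as are the function names and the synthetic data. The sketch fits a single response curve y = a + b * log(1 + t) by ordinary least squares and shows that the implied marginal effect b / (1 + t) shrinks as the limit increase t grows; it does not model per-customer heterogeneity or GBDT feature encoding.

```python
import math

def fit_log_response(treatments, responses):
    """Ordinary least squares for y = a + b * log(1 + t)."""
    z = [math.log1p(t) for t in treatments]
    n = len(z)
    z_mean = sum(z) / n
    y_mean = sum(responses) / n
    cov = sum((zi - z_mean) * (yi - y_mean) for zi, yi in zip(z, responses))
    var = sum((zi - z_mean) ** 2 for zi in z)
    b = cov / var
    a = y_mean - b * z_mean
    return a, b

def marginal_effect(b, t):
    """Derivative of a + b * log(1 + t) with respect to t."""
    return b / (1.0 + t)

# Synthetic, noise-free data (assumption): response generated with a = 2, b = 3.
limit_increases = [0.0, 1.0, 2.0, 4.0, 8.0, 16.0]
spend = [2.0 + 3.0 * math.log1p(t) for t in limit_increases]

a, b = fit_log_response(limit_increases, spend)
effects = [marginal_effect(b, t) for t in limit_increases]
print(a, b, effects)
```

Because the slope is constant in the transformed variable but decreasing in the raw one, each additional unit of credit limit adds less response than the previous one, which is the diminishing marginal effect the abstract mentions.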

Large-scale Uncertainty Estimation and Its Application in Revenue Forecast of SMEs

May 02, 2020

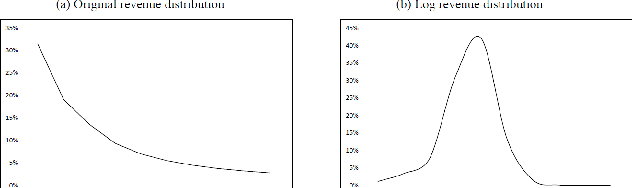

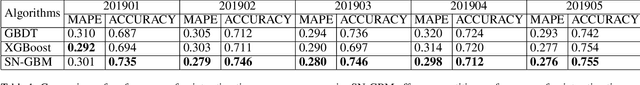

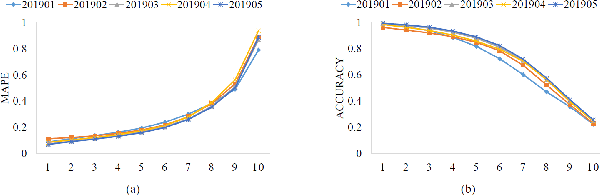

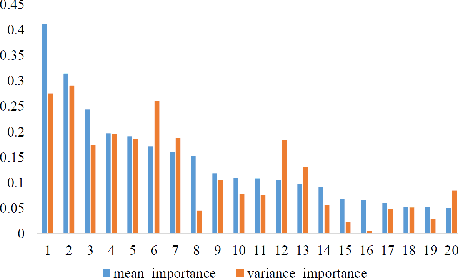

Abstract:The economic and banking importance of the small and medium enterprise (SME) sector is well recognized in contemporary society. Business credit loans are very important for the operation of SMEs, and revenue is a key indicator for credit limit management. Therefore, it is very beneficial to construct a reliable revenue forecasting model. If the uncertainty of an enterprise's revenue forecast can be estimated, a more appropriate credit limit can be granted. The natural gradient boosting approach estimates the uncertainty of a prediction with a multi-parameter boosting algorithm based on the natural gradient. However, its original implementation does not scale easily to big data scenarios and is computationally expensive compared to state-of-the-art tree-based models (such as XGBoost). In this paper, we propose Scalable Natural Gradient Boosting Machines, which are simple to implement, readily parallelizable, and interpretable, and which yield high-quality predictive uncertainty estimates. According to the characteristics of the revenue distribution, we derive an uncertainty quantification function. We demonstrate that our method can distinguish between accurate and inaccurate samples in revenue forecasting of SMEs. Moreover, interpretability is naturally obtained from the model, satisfying financial requirements.
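To illustrate what a natural-gradient update on distribution parameters looks like, here is a minimal sketch. It assumes a Gaussian predictive distribution parameterized by (mu, log sigma); the abstract does not state which distribution the authors use for revenue, so this choice and all names are assumptions. Instead of boosting trees, the sketch fits a single constant (mu, sigma) pair by natural-gradient descent on the negative log-likelihood.

```python
import math

def natural_gradient(y, mu, log_sigma):
    """Natural gradient of the Gaussian negative log-likelihood w.r.t. (mu, log sigma)."""
    sigma2 = math.exp(2.0 * log_sigma)
    # Ordinary gradient of NLL = log(sigma) + (y - mu)^2 / (2 * sigma^2)
    grad_mu = -(y - mu) / sigma2
    grad_log_sigma = 1.0 - (y - mu) ** 2 / sigma2
    # The Fisher information in this parameterization is diag(1 / sigma^2, 2),
    # so multiplying by its inverse rescales each component.
    return grad_mu * sigma2, grad_log_sigma / 2.0

def fit_constant_gaussian(ys, lr=0.1, steps=1000):
    """Fit one (mu, sigma) pair to all targets by natural-gradient descent."""
    mu, log_sigma = 0.0, 0.0
    for _ in range(steps):
        grads = [natural_gradient(y, mu, log_sigma) for y in ys]
        mu -= lr * sum(g[0] for g in grads) / len(ys)
        log_sigma -= lr * sum(g[1] for g in grads) / len(ys)
    return mu, math.exp(log_sigma)

# Hypothetical revenue values in arbitrary units.
revenues = [1.0, 2.0, 3.0, 4.0, 5.0]
mu, sigma = fit_constant_gaussian(revenues)
print(mu, sigma)
```

In a boosting setting, the per-sample natural gradients would serve as regression targets for the base learners at each stage; the fitted sigma is what provides the per-prediction uncertainty estimate.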

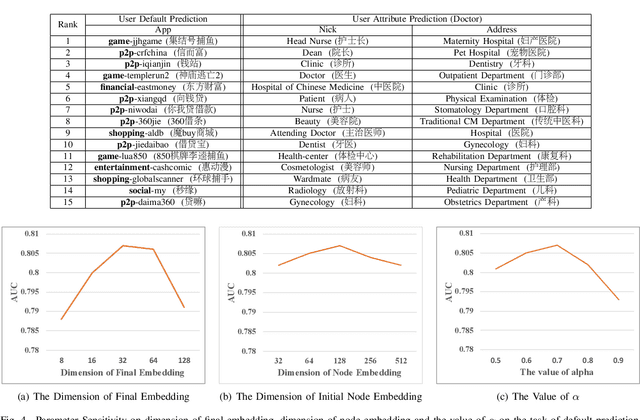

NetDP: An Industrial-Scale Distributed Network Representation Framework for Default Prediction in Ant Credit Pay

Apr 01, 2020

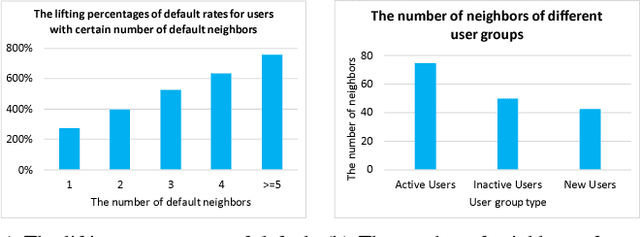

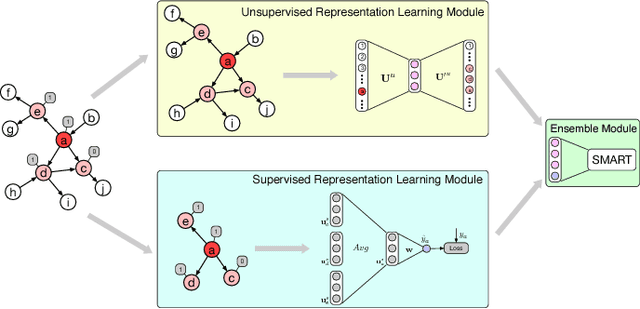

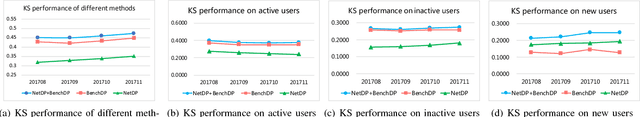

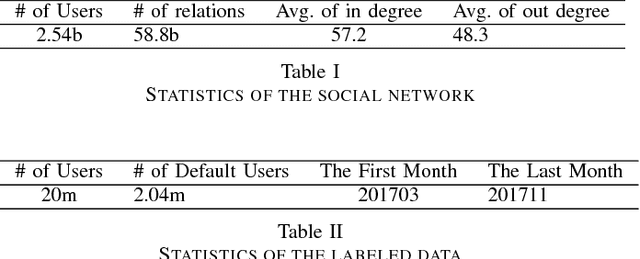

Abstract:Ant Credit Pay is a consumer credit service of Ant Financial Service Group. As with credit cards, loan default is one of the major risks of this credit product. Hence, an effective algorithm for default prediction is key to reducing losses and increasing profits for the company. However, the challenges we face in our scenario differ from those in conventional credit card services. The first is scalability. The huge volume of users and their behaviors in Ant Financial requires the ability to process industrial-scale data and perform model training efficiently. The second challenge is the cold-start problem. Unlike the manual review of credit card applications in conventional banks, the credit limit of Ant Credit Pay is offered to users automatically, based on knowledge learned from big data. However, default prediction for new users suffers from a lack of sufficient credit behavior data. The proposal should therefore leverage other new data sources to alleviate the cold-start problem. Considering the above challenges and the special scenario in Ant Financial, we incorporate network information into default prediction to alleviate the cold-start problem. In this paper, we propose an industrial-scale distributed network representation framework, termed NetDP, for default prediction in Ant Credit Pay. The proposal explores network information generated by various interactions between users, and blends unsupervised and supervised network representation in a unified framework for the default prediction problem. Moreover, we present a parameter-server-based distributed implementation of our proposal to handle the scalability challenge. Experimental results demonstrate the effectiveness of our proposal, especially on the cold-start problem, as well as its efficiency on industrial-scale datasets.

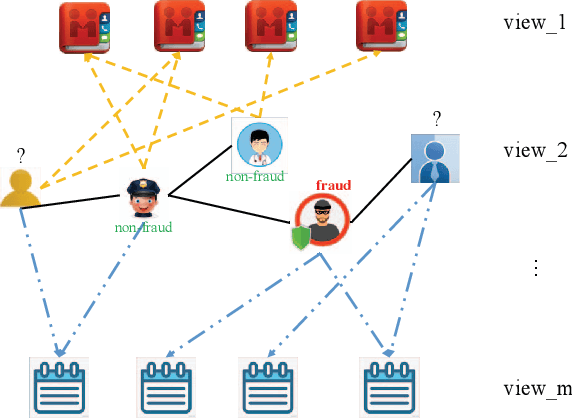

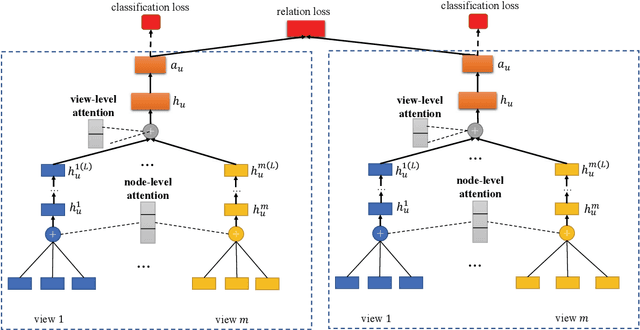

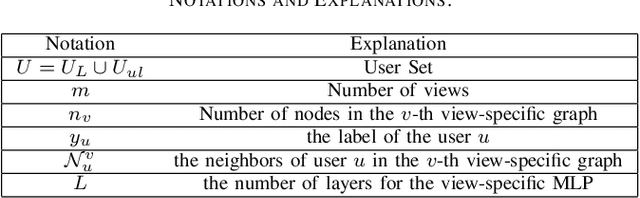

A Semi-supervised Graph Attentive Network for Financial Fraud Detection

Feb 28, 2020

Abstract:With the rapid growth of financial services, fraud detection has become a very important problem for guaranteeing a healthy environment for both users and providers. Conventional solutions for fraud detection mainly use rule-based methods or extract features manually to perform prediction. However, in financial services, users have rich interactions and they themselves always show multifaceted information. These data form a large multiview network, which is not fully exploited by conventional methods. Additionally, only very few of the users in the network are labeled, which makes it very challenging to achieve satisfactory fraud detection performance using labeled data alone. To address the problem, we expand the labeled data through their social relations to get the unlabeled data and propose a semi-supervised attentive graph neural network, named SemiGNN, to utilize the multi-view labeled and unlabeled data for fraud detection. Moreover, we propose a hierarchical attention mechanism to better correlate different neighbors and different views. At the same time, the attention mechanism makes the model interpretable, indicating which factors are important for fraud and why users are predicted as fraudulent. Experimentally, we conduct the prediction task on the users of Alipay, one of the largest third-party online and offline cashless payment platforms, serving more than 400 million users in China. By utilizing the social relations and the user attributes, our method achieves better accuracy than state-of-the-art methods on two tasks. Moreover, the interpretable results also give interesting intuitions regarding the tasks.

A Dynamic Epistemic Framework for Conformant Planning

Jun 24, 2016

Abstract:In this paper, we introduce a lightweight dynamic epistemic logical framework for automated planning under initial uncertainty. We reduce plan verification and conformant planning to model checking problems of our logic. We show that the model checking problem of the iteration-free fragment is PSPACE-complete. By using two non-standard (but equivalent) semantics, we give novel model checking algorithms for the full language and the iteration-free language.

* In Proceedings TARK 2015, arXiv:1606.07295
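As a rough illustration of the two problems named in the abstract, plan verification and conformant planning, the sketch below works directly on sets of possible states (belief states) rather than on the paper's epistemic logic. Everything in it is an assumption made for illustration: a deterministic corridor world with cells 0 to 3, actions that are always executable, and a breadth-first search over belief states. It is not the model checking algorithm of the paper.

```python
from collections import deque

def step(state, action):
    """Deterministic move on a corridor with cells 0..3; walls block movement."""
    if action == "right":
        return min(state + 1, 3)
    if action == "left":
        return max(state - 1, 0)
    raise ValueError(action)

def progress(belief, action):
    """Apply an action to every state the agent considers possible."""
    return frozenset(step(s, action) for s in belief)

def is_conformant(plan, initial_belief, goal):
    """Plan verification: the plan must reach the goal from every possible initial state."""
    belief = frozenset(initial_belief)
    for action in plan:
        belief = progress(belief, action)
    return all(s in goal for s in belief)

def find_conformant_plan(initial_belief, goal, actions=("left", "right")):
    """Conformant planning: shortest action sequence that works without any observation."""
    start = frozenset(initial_belief)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        belief, plan = queue.popleft()
        if belief <= goal:
            return plan
        for action in actions:
            nxt = progress(belief, action)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, plan + [action]))
    return None

goal = frozenset({3})
plan = find_conformant_plan({0, 1, 2}, goal)
print(plan)
```

The agent does not know whether it starts in cell 0, 1, or 2, so a plan counts only if it ends in the goal from all three; moving right against the wall is what collapses the uncertainty.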
