Per Ola Kristensson

Cost-Aware Bayesian Optimization for Prototyping Interactive Devices

Feb 02, 2026

Abstract:Deciding which idea is worth prototyping is a central concern in iterative design. A prototype should be produced when the expected improvement is high and the cost is low. However, this is hard to decide, because costs can vary drastically: a simple parameter tweak may take seconds, while fabricating hardware consumes material and energy. Such asymmetries can discourage a designer from exploring the design space. In this paper, we present an extension of cost-aware Bayesian optimization to account for diverse prototyping costs. The method builds on the power of Bayesian optimization and requires only a minimal modification to the acquisition function. The key idea is to use designer-estimated costs to guide sampling toward more cost-effective prototypes. In technical evaluations, the method achieved comparable utility to a cost-agnostic baseline while requiring only ${\approx}70\%$ of the cost; under strict budgets, it outperformed the baseline threefold. A within-subjects study with 12 participants in a realistic joystick design task demonstrated similar benefits. These results show that accounting for prototyping costs can make Bayesian optimization more compatible with real-world design projects.
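The abstract does not spell out how the acquisition function is modified. As a minimal sketch, assuming the common "expected improvement per unit cost" formulation (an assumption, not necessarily the paper's method), the idea of weighing a candidate's expected improvement against a designer-estimated cost could look like this. The function names, candidate names, and all numbers are hypothetical.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mean, std, best):
    """Expected improvement (maximization) under a Gaussian posterior."""
    if std <= 0.0:
        return max(mean - best, 0.0)
    z = (mean - best) / std
    return (mean - best) * normal_cdf(z) + std * normal_pdf(z)


def cost_aware_score(mean, std, best, cost):
    """ASSUMPTION: expected improvement divided by the estimated cost."""
    if cost <= 0.0:
        raise ValueError("cost must be positive")
    return expected_improvement(mean, std, best) / cost


def pick_next(candidates, best):
    """Return the candidate with the highest cost-aware score."""
    return max(
        candidates,
        key=lambda c: cost_aware_score(c["mean"], c["std"], best, c["cost"]),
    )


# Hypothetical posterior estimates and designer-estimated costs.
candidates = [
    {"name": "software_tweak", "mean": 0.62, "std": 0.05, "cost": 1.0},
    {"name": "3d_printed_part", "mean": 0.70, "std": 0.10, "cost": 50.0},
]
best_so_far = 0.60

chosen = pick_next(candidates, best_so_far)
print(chosen["name"])
```

In this toy setup the fabricated part has the higher raw expected improvement, but the cheap parameter tweak wins once cost is taken into account, which mirrors the asymmetry the abstract describes.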

ImageTalk: Designing a Multimodal AAC Text Generation System Driven by Image Recognition and Natural Language Generation

Dec 10, 2025

Abstract:People living with Motor Neuron Disease (plwMND) frequently encounter speech and motor impairments that necessitate a reliance on augmentative and alternative communication (AAC) systems. This paper tackles the main challenge that traditional symbol-based AAC systems offer a limited vocabulary, while text entry solutions tend to exhibit low communication rates. To help plwMND articulate their needs about the system efficiently and effectively, we iteratively design and develop a novel multimodal text generation system called ImageTalk through a tailored proxy-user-based and an end-user-based design phase. The system demonstrates pronounced keystroke savings of 95.6%, coupled with consistent performance and high user satisfaction. We distill three design guidelines for AI-assisted text generation systems design and outline four user requirement levels tailored for AAC purposes, guiding future research in this field.
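For readers unfamiliar with the metric, keystroke savings is commonly defined as the percentage of keystrokes avoided relative to typing every character of the final text. The sketch below assumes that common definition; the abstract does not state the exact formula used, and the input numbers are hypothetical, chosen only to reproduce a 95.6% figure.

```python
def keystroke_savings(keystrokes_used, characters_produced):
    """Percentage of keystrokes saved versus typing each character.

    ASSUMPTION: the common definition (1 - keystrokes / characters) * 100.
    """
    if characters_produced <= 0:
        raise ValueError("characters_produced must be positive")
    return (1.0 - keystrokes_used / characters_produced) * 100.0


# Hypothetical example: 44 keystrokes to produce 1000 characters of text.
savings = keystroke_savings(44, 1000)
print(round(savings, 1))
```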

X-LeBench: A Benchmark for Extremely Long Egocentric Video Understanding

Jan 12, 2025

Abstract:Long-form egocentric video understanding provides rich contextual information and unique insights into long-term human behaviors, holding significant potential for applications in embodied intelligence, long-term activity analysis, and personalized assistive technologies. However, existing benchmark datasets primarily focus on single, short-duration videos or moderately long videos up to dozens of minutes, leaving a substantial gap in evaluating extensive, ultra-long egocentric video recordings. To address this, we introduce X-LeBench, a novel benchmark dataset specifically crafted for evaluating tasks on extremely long egocentric video recordings. Leveraging the advanced text processing capabilities of large language models (LLMs), X-LeBench develops a life-logging simulation pipeline that produces realistic, coherent daily plans aligned with real-world video data. This approach enables the flexible integration of synthetic daily plans with real-world footage from Ego4D, a massive-scale egocentric video dataset that covers a wide range of daily life scenarios, resulting in 432 simulated video life logs that mirror realistic daily activities in contextually rich scenarios. The video life-log durations span from 23 minutes to 16.4 hours. The evaluation of several baseline systems and multimodal large language models (MLLMs) reveals their poor performance across the board, highlighting the inherent challenges of long-form egocentric video understanding and underscoring the need for more advanced models.

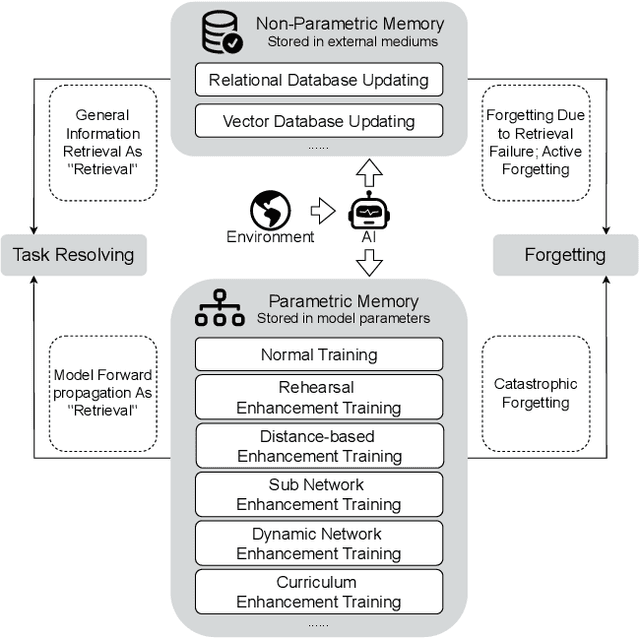

Human-inspired Perspectives: A Survey on AI Long-term Memory

Nov 01, 2024

Abstract:With the rapid advancement of AI systems, their abilities to store, retrieve, and utilize information over the long term - referred to as long-term memory - have become increasingly significant. These capabilities are crucial for enhancing the performance of AI systems across a wide range of tasks. However, there is currently no comprehensive survey that systematically investigates AI's long-term memory capabilities, formulates a theoretical framework, and inspires the development of next-generation AI long-term memory systems. This paper begins by systematically introducing the mechanisms of human long-term memory, then explores AI long-term memory mechanisms, establishing a mapping between the two. Based on the mapping relationships identified, we extend the current cognitive architectures and propose the Cognitive Architecture of Self-Adaptive Long-term Memory (SALM). SALM provides a theoretical framework for the practice of AI long-term memory and holds potential for guiding the creation of next-generation long-term memory driven AI systems. Finally, we delve into the future directions and application prospects of AI long-term memory.

Generative AI for Accessible and Inclusive Extended Reality

Oct 31, 2024

Abstract:Artificial Intelligence-Generated Content (AIGC) has the potential to transform how people build and interact with virtual environments. Within this paper, we discuss potential benefits but also challenges that AIGC has for the creation of inclusive and accessible virtual environments. Specifically, we touch upon the decreased need for 3D modeling expertise, benefits of symbolic-only as well as multimodal input, 3D content editing, and 3D model accessibility as well as foundation model-specific challenges.

Analyzing Multimodal Interaction Strategies for LLM-Assisted Manipulation of 3D Scenes

Oct 29, 2024

Abstract:As more applications of large language models (LLMs) for 3D content for immersive environments emerge, it is crucial to study user behaviour to identify interaction patterns and potential barriers to guide the future design of immersive content creation and editing systems which involve LLMs. In an empirical user study with 12 participants, we combine quantitative usage data with post-experience questionnaire feedback to reveal common interaction patterns and key barriers in LLM-assisted 3D scene editing systems. We identify opportunities for improving natural language interfaces in 3D design tools and propose design recommendations for future LLM-integrated 3D content creation systems. Through an empirical study, we demonstrate that LLM-assisted interactive systems can be used productively in immersive environments.

Large Language Model-assisted Speech and Pointing Benefits Multiple 3D Object Selection in Virtual Reality

Oct 28, 2024

Abstract:Selection of occluded objects is a challenging problem in virtual reality, even more so if multiple objects are involved. With the advent of new artificial intelligence technologies, we explore the possibility of leveraging large language models to assist multi-object selection tasks in virtual reality via a multimodal speech and raycast interaction technique. We validate the findings in a comparative user study (n=24), where participants selected target objects in a virtual reality scene with different levels of scene perplexity. The performance metrics and user experience metrics are compared against a mini-map based occluded object selection technique that serves as the baseline. Results indicate that the introduced technique, AssistVR, outperforms the baseline technique when there are multiple target objects. Contrary to the common belief for speech interfaces, AssistVR was able to outperform the baseline even when the target objects were difficult to reference verbally. This work demonstrates the viability and interaction potential of an intelligent multimodal interactive system powered by large language models. Based on the results, we discuss the implications for design of future intelligent multimodal interactive systems in immersive environments.

Swarm manipulation: An efficient and accurate technique for multi-object manipulation in virtual reality

Oct 24, 2024

Abstract:The theory of swarm control shows promise for controlling multiple objects; however, scalability is hindered by cost constraints, such as hardware and infrastructure. Virtual Reality (VR) can overcome these limitations, but research on swarm interaction in VR is limited. This paper introduces a novel Swarm Manipulation interaction technique and compares it with two baseline techniques: Virtual Hand and Controller (ray-casting). We evaluated these techniques in a user study ($N$ = 12) in three tasks (selection, rotation, and resizing) across five conditions. Our results indicate that Swarm Manipulation yielded superior performance, with significantly faster speeds in most conditions across the three tasks. It notably reduced resizing size deviations but introduced a trade-off between speed and accuracy in the rotation task. Additionally, we conducted a follow-up user study ($N$ = 6) using Swarm Manipulation in two complex VR scenarios and obtained insights through semi-structured interviews, shedding light on optimized swarm control mechanisms and perceptual changes induced by this interaction paradigm. These results demonstrate the potential of the Swarm Manipulation technique to enhance the usability and user experience in VR compared to conventional manipulation techniques. In future studies, we aim to understand and improve swarm interaction via internal swarm particle cooperation.

Towards Open-World Gesture Recognition

Jan 20, 2024

Abstract:Static machine learning methods in gesture recognition assume that training and test data come from the same underlying distribution. However, in real-world applications involving gesture recognition on wrist-worn devices, data distribution may change over time. We formulate this problem of adapting recognition models to new tasks, where new data patterns emerge, as open-world gesture recognition (OWGR). We propose leveraging continual learning to make machine learning models adaptive to new tasks without degrading performance on previously learned tasks. However, the exploration of parameters for questions around when and how to train and deploy recognition models requires time-consuming user studies and is sometimes impractical. To address this challenge, we propose a design engineering approach that enables offline analysis on a collected large-scale dataset with various parameters and compares different continual learning methods. Finally, design guidelines are provided to enhance the development of an open-world wrist-worn gesture recognition process.

Promptor: A Conversational and Autonomous Prompt Generation Agent for Intelligent Text Entry Techniques

Oct 15, 2023

Abstract:Text entry is an essential task in our day-to-day digital interactions. Numerous intelligent features have been developed to streamline this process, making text entry more effective, efficient, and fluid. These improvements include sentence prediction and user personalization. However, as deep learning-based language models become the norm for these advanced features, the necessity for data collection and model fine-tuning increases. These challenges can be mitigated by harnessing the in-context learning capability of large language models such as GPT-3.5. This unique feature allows the language model to acquire new skills through prompts, eliminating the need for data collection and fine-tuning. Consequently, large language models can learn various text prediction techniques. We initially showed that, for a sentence prediction task, merely prompting GPT-3.5 surpassed a GPT-2-backed system and is comparable with a fine-tuned GPT-3.5 model, with the latter two methods requiring costly data collection, fine-tuning and post-processing. However, the task of prompting large language models to specialize in specific text prediction tasks can be challenging, particularly for designers without expertise in prompt engineering. To address this, we introduce Promptor, a conversational prompt generation agent designed to engage proactively with designers. Promptor can automatically generate complex prompts tailored to meet specific needs, thus offering a solution to this challenge. We conducted a user study involving 24 participants creating prompts for three intelligent text entry tasks; half of the participants used Promptor while the other half designed prompts themselves. The results show that Promptor-designed prompts result in a 35% increase in similarity and 22% in coherence over those by designers.
