Antti Oulasvirta

Department of Communications and Networking, Aalto University, Finland

Adaptive Prompt Elicitation for Text-to-Image Generation

Feb 04, 2026

Abstract:Aligning text-to-image generation with user intent remains challenging for users who provide ambiguous inputs and struggle with model idiosyncrasies. We propose Adaptive Prompt Elicitation (APE), a technique that adaptively asks visual queries to help users refine prompts without extensive writing. Our technical contribution is a formulation of interactive intent inference under an information-theoretic framework. APE represents latent intent as interpretable feature requirements using language model priors, adaptively generates visual queries, and compiles elicited requirements into effective prompts. Evaluation on IDEA-Bench and DesignBench shows that APE achieves stronger alignment with improved efficiency. A user study with challenging user-defined tasks demonstrates 19.8% higher alignment without workload overhead. Our work contributes a principled approach to prompting that, for general users, offers an effective and efficient complement to the prevailing prompt-based interaction paradigm with text-to-image models.
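The abstract does not spell out the selection criterion, so the following is only a minimal sketch of one common information-theoretic choice: ask the query with the highest expected information gain over a discrete set of intent hypotheses. The function names, the hypothesis set, and the answer likelihoods are all hypothetical and are not taken from APE.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def expected_information_gain(prior, likelihood):
    """Expected entropy reduction over hypotheses from asking one query.

    prior[h]         -- P(hypothesis h)
    likelihood[h][a] -- P(answer a | hypothesis h), an assumed user model
    """
    n_hyp = len(prior)
    n_answers = len(likelihood[0])
    gain = entropy(prior)
    for a in range(n_answers):
        # Marginal probability of observing answer a.
        p_a = sum(prior[h] * likelihood[h][a] for h in range(n_hyp))
        if p_a == 0:
            continue
        # Bayes update of the belief over hypotheses given answer a.
        posterior = [prior[h] * likelihood[h][a] / p_a for h in range(n_hyp)]
        gain -= p_a * entropy(posterior)
    return gain

def pick_query(prior, queries):
    """Return the name of the query with the largest expected gain."""
    return max(queries, key=lambda q: expected_information_gain(prior, queries[q]))

# Two hypothetical intents and two hypothetical visual queries.
prior = [0.5, 0.5]
queries = {
    "style": [[1.0, 0.0], [0.0, 1.0]],   # answer fully separates the intents
    "color": [[0.5, 0.5], [0.5, 0.5]],   # answer is uninformative
}
best_query = pick_query(prior, queries)
```

In this toy setup the "style" query is preferred because its answer resolves the uncertainty completely, whereas the "color" query leaves the belief unchanged.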

Simulating Human Audiovisual Search Behavior

Feb 02, 2026

Abstract:Locating a target based on auditory and visual cues, such as finding a car in a crowded parking lot or identifying a speaker in a virtual meeting, requires balancing effort, time, and accuracy under uncertainty. Existing models of audiovisual search often treat perception and action in isolation, overlooking how people adaptively coordinate movement and sensory strategies. We present Sensonaut, a computational model of embodied audiovisual search. The core assumption is that people deploy their body and sensory systems in ways they believe will most efficiently improve their chances of locating a target, trading off time and effort under perceptual constraints. Our model formulates this as a resource-rational decision-making problem under partial observability. We validate the model against newly collected human data, showing that it reproduces both adaptive scaling of search time and effort under task complexity, occlusion, and distraction, and characteristic human errors. Our simulation of human-like resource-rational search informs the design of audiovisual interfaces that minimize search cost and cognitive load.

Cost-Aware Bayesian Optimization for Prototyping Interactive Devices

Feb 02, 2026

Abstract:Deciding which idea is worth prototyping is a central concern in iterative design. A prototype should be produced when the expected improvement is high and the cost is low. However, this is hard to decide, because costs can vary drastically: a simple parameter tweak may take seconds, while fabricating hardware consumes material and energy. Such asymmetries can discourage a designer from exploring the design space. In this paper, we present an extension of cost-aware Bayesian optimization to account for diverse prototyping costs. The method builds on the power of Bayesian optimization and requires only a minimal modification to the acquisition function. The key idea is to use designer-estimated costs to guide sampling toward more cost-effective prototypes. In technical evaluations, the method achieved comparable utility to a cost-agnostic baseline while requiring only ${\approx}70\%$ of the cost; under strict budgets, it outperformed the baseline threefold. A within-subjects study with 12 participants in a realistic joystick design task demonstrated similar benefits. These results show that accounting for prototyping costs can make Bayesian optimization more compatible with real-world design projects.
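The abstract does not give the modified acquisition function. As a rough illustration of the general idea, the sketch below uses a widely known cost-aware variant, expected improvement divided by estimated cost; this is an assumption, not necessarily the paper's formulation. The candidate names, posterior means, standard deviations, and costs are invented for the example.

```python
import math

def expected_improvement(mean, std, best):
    """Closed-form expected improvement (maximization) for a Gaussian posterior."""
    if std <= 0:
        return max(mean - best, 0.0)
    z = (mean - best) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)
    cdf = 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return (mean - best) * cdf + std * pdf

def cost_aware_score(mean, std, best, cost):
    """Expected improvement per unit of designer-estimated cost."""
    return expected_improvement(mean, std, best) / cost

def choose(candidates, best, use_cost=True):
    """Pick the candidate name with the highest acquisition value."""
    def score(name):
        c = candidates[name]
        cost = c["cost"] if use_cost else 1.0
        return cost_aware_score(c["mean"], c["std"], best, cost)
    return max(candidates, key=score)

# Hypothetical surrogate predictions and designer cost estimates.
candidates = {
    "software_tweak": {"mean": 0.62, "std": 0.05, "cost": 1.0},
    "new_hardware":   {"mean": 0.70, "std": 0.10, "cost": 50.0},
}
best_so_far = 0.60

cost_agnostic_pick = choose(candidates, best_so_far, use_cost=False)
cost_aware_pick = choose(candidates, best_so_far, use_cost=True)
```

With these made-up numbers the cost-agnostic rule favors the hardware prototype, while the cost-weighted rule favors the cheap software tweak, which is the kind of shift toward cost-effective prototypes the abstract describes.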

Log2Motion: Biomechanical Motion Synthesis from Touch Logs

Jan 28, 2026

Abstract:Touch data from mobile devices are collected at scale but reveal little about the interactions that produce them. While biomechanical simulations can illuminate motor control processes, they have not yet been developed for touch interactions. To close this gap, we propose a novel computational problem: synthesizing plausible motion directly from logs. Our key insight is a reinforcement learning-driven musculoskeletal forward simulation that generates biomechanically plausible motion sequences consistent with events recorded in touch logs. We achieve this by integrating a software emulator into a physics simulator, allowing biomechanical models to manipulate real applications in real time. Log2Motion produces rich syntheses of user movements from touch logs, including estimates of motion, speed, accuracy, and effort. We assess the plausibility of generated movements by comparing against human data from a motion capture study and prior findings, and demonstrate Log2Motion on a large-scale dataset. Biomechanical motion synthesis provides a new way to understand log data, illuminating the ergonomics and motor control underlying touch interactions.

Controllable GUI Exploration

Feb 05, 2025

Abstract:During the early stages of interface design, designers need to produce multiple sketches to explore a design space. Design tools often fail to support this critical stage, because they insist on specifying more details than necessary. Although recent advances in generative AI have raised hopes of solving this issue, in practice they fail because expressing loose ideas in a prompt is impractical. In this paper, we propose a diffusion-based approach to the low-effort generation of interface sketches. It breaks new ground by allowing flexible control of the generation process via three types of inputs: A) prompts, B) wireframes, and C) visual flows. The designer can provide any combination of these as input at any level of detail, and will get a diverse gallery of low-fidelity solutions in response. The unique benefit is that large design spaces can be explored rapidly with very little effort in input-specification. We present qualitative results for various combinations of input specifications. Additionally, we demonstrate that our model aligns more accurately with these specifications than other models.

AgentForge: A Flexible Low-Code Platform for Reinforcement Learning Agent Design

Oct 25, 2024

Abstract:Developing a reinforcement learning (RL) agent often involves identifying effective values for a large number of parameters, covering the policy, reward function, environment, and the agent's internal architecture, such as parameters controlling how the peripheral vision and memory modules work. Critically, since these parameters are interrelated in complex ways, optimizing them can be viewed as a black-box optimization problem, which is especially challenging for non-experts. Although existing optimization-as-a-service platforms (e.g., Vizier, Optuna) can handle such problems, they are impractical for RL systems, as users must manually map each parameter to different components, making the process cumbersome and error-prone. They also require a deep understanding of the optimization process, which limits their use beyond ML experts and restricts access for fields such as cognitive science, which models human decision-making. To tackle these challenges, we present AgentForge, a flexible low-code framework to optimize any parameter set across an RL system. AgentForge allows the user to perform individual or joint optimization of parameter sets. An optimization problem can be defined in a few lines of code and handed to any of the interfaced optimizers. We evaluated its performance in a challenging vision-based RL problem. AgentForge enables practitioners to develop RL agents without requiring extensive coding or deep expertise in optimization.

Graph4GUI: Graph Neural Networks for Representing Graphical User Interfaces

Apr 21, 2024

Abstract:Present-day graphical user interfaces (GUIs) exhibit diverse arrangements of text, graphics, and interactive elements such as buttons and menus, but representations of GUIs have not kept up. They do not encapsulate both semantic and visuo-spatial relationships among elements. To seize machine learning's potential for GUIs more efficiently, Graph4GUI exploits graph neural networks to capture individual elements' properties and their semantic-visuo-spatial constraints in a layout. The learned representation demonstrated its effectiveness in multiple tasks, especially generating designs in a challenging GUI autocompletion task, which involved predicting the positions of remaining unplaced elements in a partially completed GUI. The new model's suggestions showed alignment and visual appeal superior to the baseline method and received higher subjective ratings for preference. Furthermore, we demonstrate the practical benefits and efficiency advantages designers perceive when utilizing our model as an autocompletion plug-in.

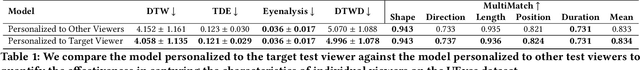

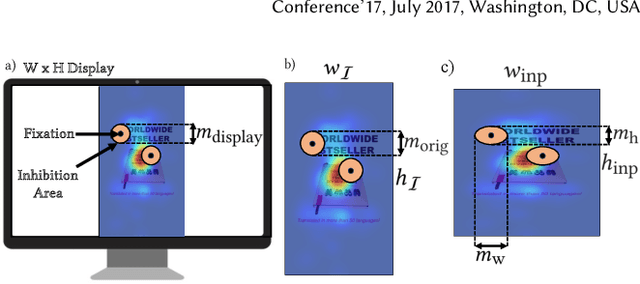

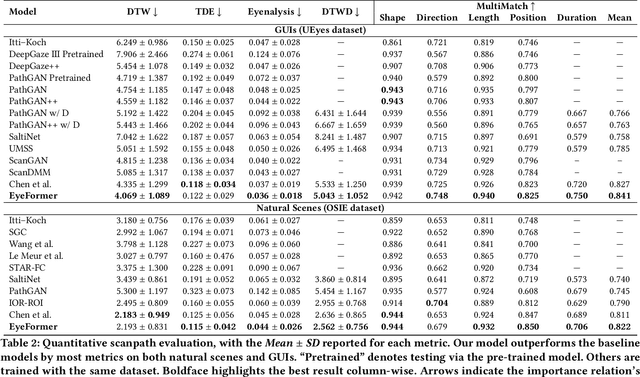

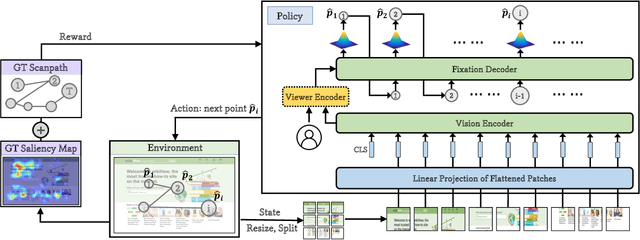

EyeFormer: Predicting Personalized Scanpaths with Transformer-Guided Reinforcement Learning

Apr 15, 2024

Abstract:From a visual perception perspective, modern graphical user interfaces (GUIs) comprise a complex graphics-rich two-dimensional visuospatial arrangement of text, images, and interactive objects such as buttons and menus. While existing models can accurately predict regions and objects that are likely to attract attention "on average", so far there is no scanpath model capable of predicting scanpaths for an individual. To close this gap, we introduce EyeFormer, which leverages a Transformer architecture as a policy network to guide a deep reinforcement learning algorithm that controls gaze locations. Our model has the unique capability of producing personalized predictions when given a few user scanpath samples. It can predict full scanpath information, including fixation positions and duration, across individuals and various stimulus types. Additionally, we demonstrate applications in GUI layout optimization driven by our model. Our software and models will be publicly available.

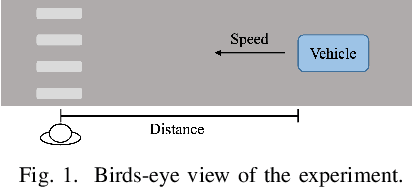

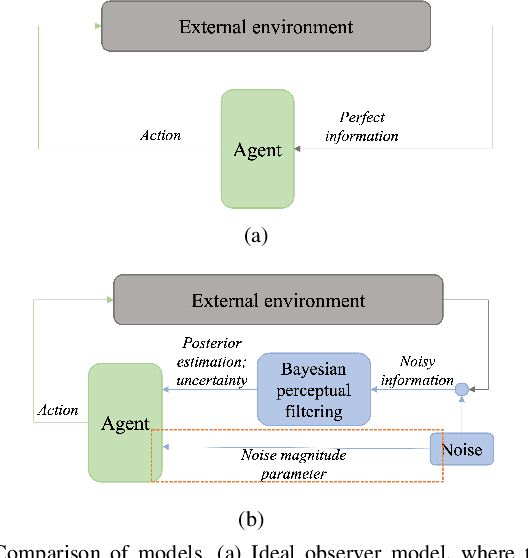

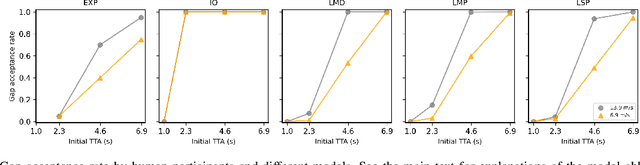

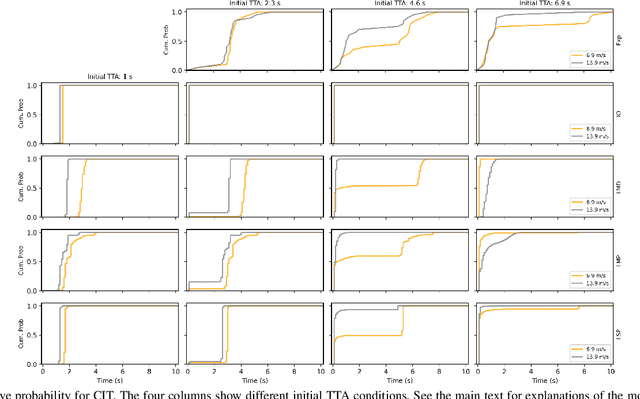

Pedestrian crossing decisions can be explained by bounded optimal decision-making under noisy visual perception

Feb 06, 2024

Abstract:This paper presents a model of pedestrian crossing decisions, based on the theory of computational rationality. It is assumed that crossing decisions are boundedly optimal, with bounds on optimality arising from human cognitive limitations. While previous models of pedestrian behaviour have been either 'black-box' machine learning models or mechanistic models with explicit assumptions about cognitive factors, we combine both approaches. Specifically, we mechanistically model noisy human visual perception and assumed rewards in crossing, but we use reinforcement learning to learn a boundedly optimal behaviour policy. The model reproduces a larger number of known empirical phenomena than previous models, in particular: (1) the effect of the time to arrival of an approaching vehicle on whether the pedestrian accepts the gap, the effect of the vehicle's speed on both (2) gap acceptance and (3) pedestrian timing of crossing in front of yielding vehicles, and (4) the effect on this crossing timing of the stopping distance of the yielding vehicle. Notably, our findings suggest that behaviours previously framed as 'biases' in decision-making, such as speed-dependent gap acceptance, might instead be a product of rational adaptation to the constraints of visual perception. Our approach also permits fitting the parameters of cognitive constraints and rewards per individual, to better account for individual differences. To conclude, by leveraging both RL and mechanistic modelling, our model offers novel insights about pedestrian behaviour, and may provide a useful foundation for more accurate and scalable pedestrian models.

Modeling human road crossing decisions as reward maximization with visual perception limitations

Jan 27, 2023

Abstract:Understanding the interaction between different road users is critical for road safety and automated vehicles (AVs). Existing mathematical models on this topic have been proposed based mostly on either cognitive or machine learning (ML) approaches. However, current cognitive models are incapable of simulating road user trajectories in general scenarios, and ML models lack a focus on the mechanisms generating the behavior and take a high-level perspective, which can cause failures to capture important human-like behaviors. Here, we develop a model of human pedestrian crossing decisions based on computational rationality, an approach using deep reinforcement learning (RL) to learn boundedly optimal behavior policies given human constraints, in our case a model of the limited human visual system. We show that the proposed combined cognitive-RL model captures human-like patterns of gap acceptance and crossing initiation time. Interestingly, our model's decisions are sensitive to not only the time gap, but also the speed of the approaching vehicle, something which has been described as a "bias" in human gap acceptance behavior. However, our results suggest that this is instead a rational adaptation to human perceptual limitations. Moreover, we demonstrate an approach to accounting for individual differences in computational rationality models, by conditioning the RL policy on the parameters of the human constraints. Our results demonstrate the feasibility of generating more human-like road user behavior by combining RL with cognitive models.
