Pablo Meyer

MetaExplainer: A Framework to Generate Multi-Type User-Centered Explanations for AI Systems

Aug 01, 2025

Abstract: Explanations are crucial for building trustworthy AI systems, but a gap often exists between the explanations provided by models and those needed by users. To address this gap, we introduce MetaExplainer, a neuro-symbolic framework designed to generate user-centered explanations. Our approach employs a three-stage process: first, we decompose user questions into machine-readable formats using state-of-the-art large language models (LLMs); second, we delegate the task of generating system recommendations to model explainer methods; and finally, we synthesize natural language explanations that summarize the explainer outputs. Throughout this process, we utilize an Explanation Ontology to guide the language models and explainer methods. By leveraging LLMs and a structured approach to explanation generation, MetaExplainer aims to enhance the interpretability and trustworthiness of AI systems across various applications, providing users with tailored, question-driven explanations that better meet their needs. Comprehensive evaluations of MetaExplainer demonstrate a step towards evaluating and utilizing current state-of-the-art explanation frameworks. Our results show high performance across all stages, with a 59.06% F1-score in question reframing, 70% faithfulness in model explanations, and 67% context-utilization in natural language synthesis. User studies corroborate these findings, highlighting the creativity and comprehensiveness of generated explanations. Tested on the Diabetes (PIMA Indian) tabular dataset, MetaExplainer supports diverse explanation types, including Contrastive, Counterfactual, Rationale, Case-Based, and Data explanations. The framework's versatility and traceability from using ontology to guide LLMs suggest broad applicability beyond the tested scenarios, positioning MetaExplainer as a promising tool for enhancing AI explainability across various domains.

Multi-view biomedical foundation models for molecule-target and property prediction

Oct 25, 2024

Abstract: Foundation models applied to bio-molecular space hold promise to accelerate drug discovery. Molecular representation is key to building such models. Previous works have typically focused on a single representation or view of the molecules. Here, we develop a multi-view foundation model approach that integrates molecular views of graph, image and text. Single-view foundation models are each pre-trained on a dataset of up to 200M molecules and then aggregated into combined representations. Our multi-view model is validated on a diverse set of 18 tasks, encompassing ligand-protein binding, molecular solubility, metabolism and toxicity. We show that the multi-view models perform robustly and are able to balance the strengths and weaknesses of specific views. We then apply this model to screen compounds against a large (>100 targets) set of G protein-coupled receptors (GPCRs). From this library of targets, we identify 33 that are related to Alzheimer's disease. On this subset, we employ our model to identify strong binders, which are validated through structure-based modeling and identification of key binding motifs.

Improving Primary Healthcare Workflow Using Extreme Summarization of Scientific Literature Based on Generative AI

Jul 24, 2023

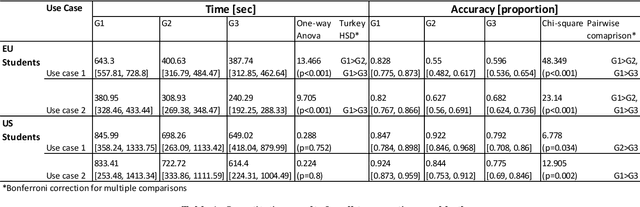

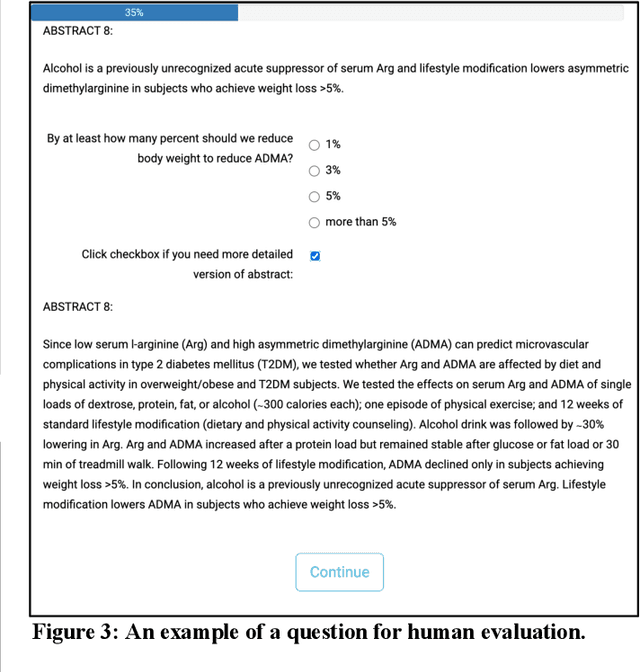

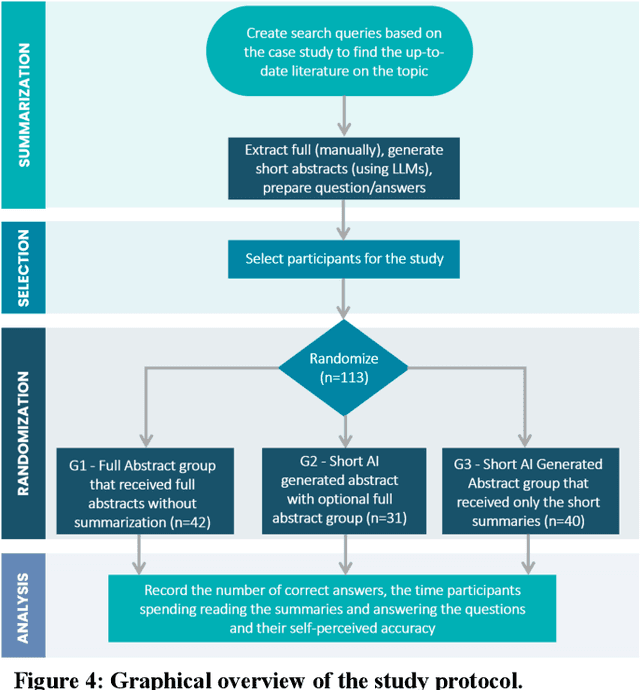

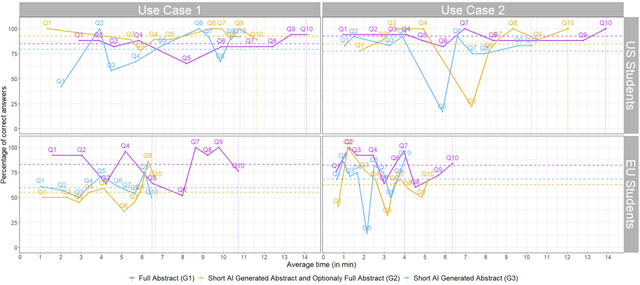

Abstract: Primary care professionals struggle to keep up to date with the latest scientific literature critical in guiding evidence-based practice related to their daily work. To address this problem, we employed generative artificial intelligence techniques based on large-scale language models to summarize abstracts of scientific papers. Our objective is to investigate the potential of generative artificial intelligence in diminishing the cognitive load experienced by practitioners, thus exploring its ability to alleviate mental effort and burden. The study participants were provided with two use cases related to preventive care and behavior change, simulating a search for new scientific literature. The study included 113 university students from Slovenia and the United States randomized into three distinct study groups. The first group was assigned to the full abstracts. The second group was assigned to the short abstracts generated by AI. The third group had the option to select a full abstract in addition to the AI-generated short summary. Each use case study included ten retrieved abstracts. Our research demonstrates that the use of generative AI for literature review is efficient and effective. The time needed to answer questions related to the content of abstracts was significantly lower in groups two and three compared to the first group using full abstracts. The results, however, also show significantly lower accuracy in extracted knowledge in cases where the full abstract was not available. Such a disruptive technology could significantly reduce the time required for healthcare professionals to keep up with the most recent scientific literature; nevertheless, further developments are needed to help them comprehend the knowledge accurately.

Informing clinical assessment by contextualizing post-hoc explanations of risk prediction models in type-2 diabetes

Feb 11, 2023

Abstract: Medical experts may use Artificial Intelligence (AI) systems with greater trust if these are supported by contextual explanations that let the practitioner connect system inferences to their context of use. However, their importance in improving model usage and understanding has not been extensively studied. Hence, we consider a comorbidity risk prediction scenario and focus on contexts regarding the patient's clinical state, AI predictions about their risk of complications, and algorithmic explanations supporting the predictions. We explore how relevant information for such dimensions can be extracted from medical guidelines to answer typical questions from clinical practitioners. We identify this as a question answering (QA) task and employ several state-of-the-art LLMs to present contexts around risk prediction model inferences and evaluate their acceptability. Finally, we study the benefits of contextual explanations by building an end-to-end AI pipeline including data cohorting, AI risk modeling, post-hoc model explanations, and a prototype visual dashboard that presents the combined insights from different context dimensions and data sources, while predicting and identifying the drivers of risk of Chronic Kidney Disease, a common type-2 diabetes comorbidity. All of these steps were performed in engagement with medical experts, including a final evaluation of the dashboard results by an expert medical panel. We show that LLMs, in particular BERT and SciBERT, can be readily deployed to extract some relevant explanations to support clinical usage. To understand the value-add of the contextual explanations, the expert panel evaluated these regarding actionable insights in the relevant clinical setting. Overall, our paper is one of the first end-to-end analyses identifying the feasibility and benefits of contextual explanations in a real-world clinical use case.

Distillation to Enhance the Portability of Risk Models Across Institutions with Large Patient Claims Database

Jul 06, 2022

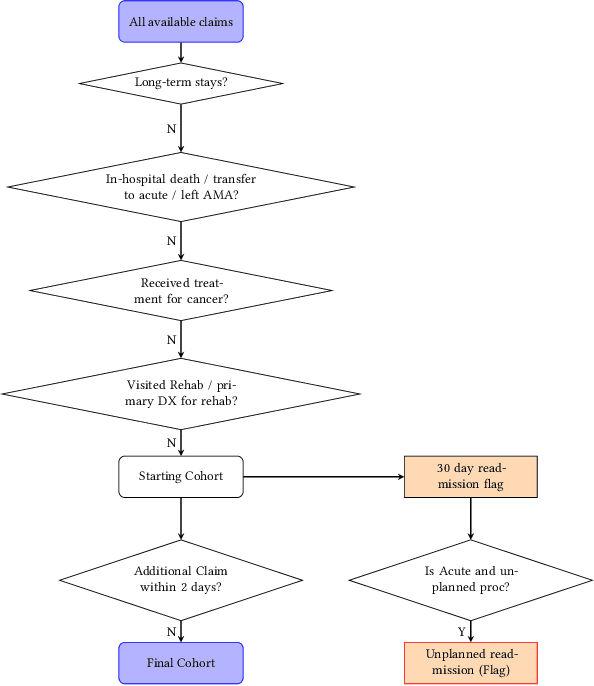

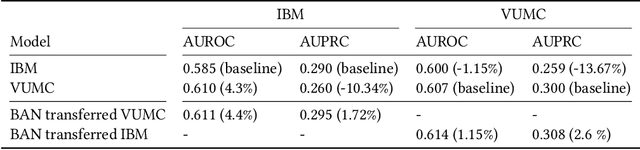

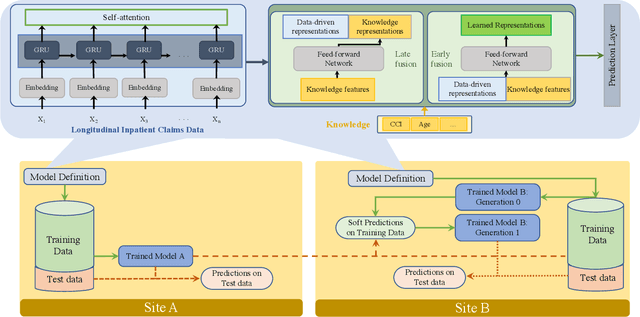

Abstract: Artificial intelligence, and particularly machine learning (ML), is increasingly developed and deployed to support healthcare in a variety of settings. However, clinical decision support (CDS) technologies based on ML need to be portable if they are to be adopted on a broad scale. In this respect, models developed at one institution should be reusable at another. Yet there are numerous examples of portability failure, particularly due to naive application of ML models. Portability failure can lead to suboptimal care and medical errors, which ultimately could prevent the adoption of ML-based CDS in practice. One specific healthcare challenge that could benefit from enhanced portability is the prediction of 30-day readmission risk. Research to date has shown that deep learning models can be effective at modeling such risk. In this work, we investigate the practicality of model portability through a cross-site evaluation of readmission prediction models. To do so, we apply a recurrent neural network, augmented with self-attention and blended with expert features, to build readmission prediction models for two independent large-scale claims datasets. We further present a novel transfer learning technique that adapts the well-known method of born-again network (BAN) training. Our experiments show that ML models trained at one institution and directly applied at another perform worse than models trained and tested at the same institution. We further show that the transfer learning approach based on the BAN produces models that are better than those trained on just a single institution's data. Notably, this improvement is consistent across both sites and occurs after a single retraining, which illustrates the potential for a cheap and general model transfer mechanism of readmission risk prediction.

Disease Progression Modeling Workbench 360

Jun 24, 2021

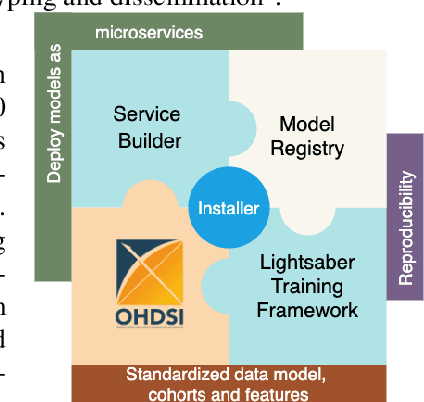

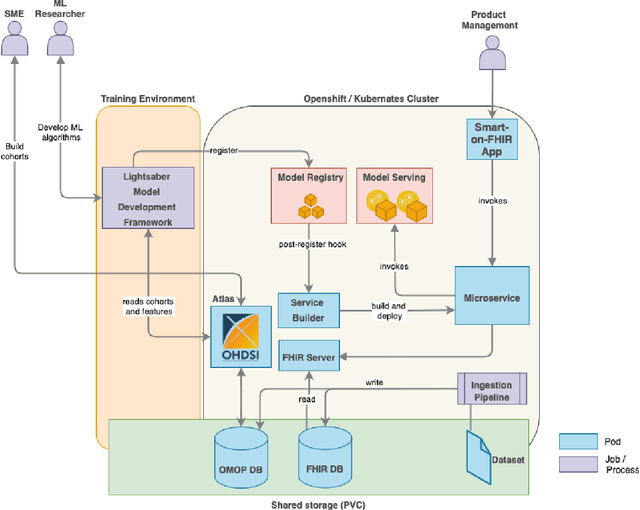

Abstract: In this work we introduce Disease Progression Modeling Workbench 360 (DPM360), an open-source clinical informatics framework for collaborative research and delivery of healthcare AI. DPM360, when fully developed, will manage the entire modeling life cycle, from data analysis (e.g., cohort identification) to machine learning algorithm development and prototyping. DPM360 augments the advantages of data model standardization and tooling (OMOP-CDM, Athena, ATLAS) provided by the widely adopted OHDSI initiative with a powerful machine learning training framework and a mechanism for rapid prototyping through automatic deployment of models as containerized services to a cloud environment.
