Fernando Suarez Saiz

Informing clinical assessment by contextualizing post-hoc explanations of risk prediction models in type-2 diabetes

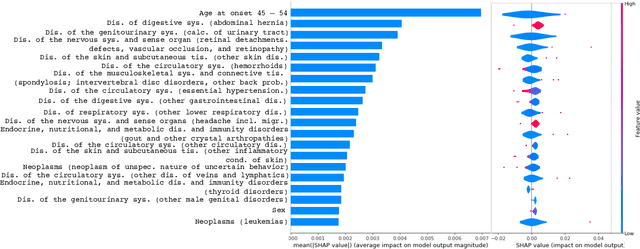

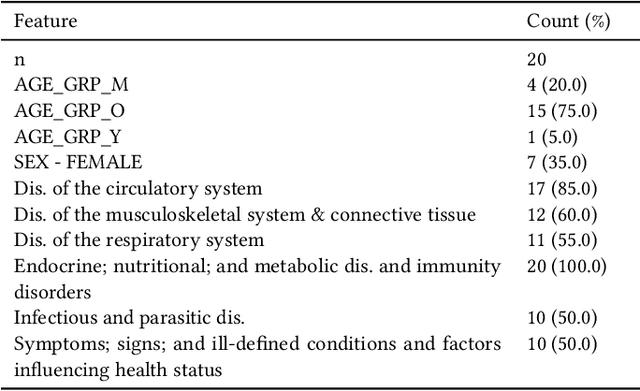

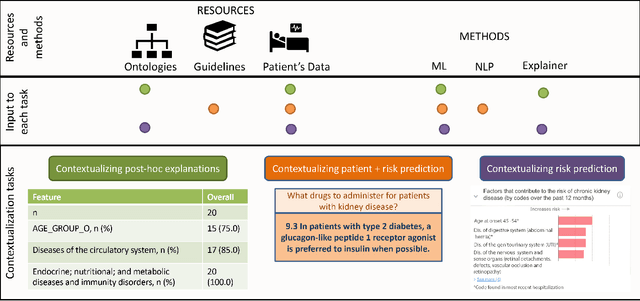

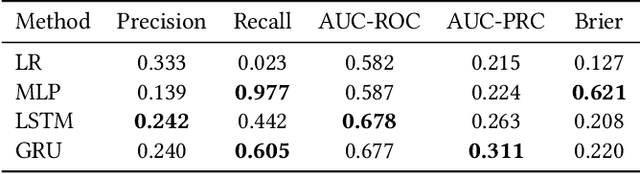

Feb 11, 2023Abstract:Medical experts may use Artificial Intelligence (AI) systems with greater trust if these are supported by contextual explanations that let the practitioner connect system inferences to their context of use. However, their importance in improving model usage and understanding has not been extensively studied. Hence, we consider a comorbidity risk prediction scenario and focus on contexts regarding the patients clinical state, AI predictions about their risk of complications, and algorithmic explanations supporting the predictions. We explore how relevant information for such dimensions can be extracted from Medical guidelines to answer typical questions from clinical practitioners. We identify this as a question answering (QA) task and employ several state-of-the-art LLMs to present contexts around risk prediction model inferences and evaluate their acceptability. Finally, we study the benefits of contextual explanations by building an end-to-end AI pipeline including data cohorting, AI risk modeling, post-hoc model explanations, and prototyped a visual dashboard to present the combined insights from different context dimensions and data sources, while predicting and identifying the drivers of risk of Chronic Kidney Disease - a common type-2 diabetes comorbidity. All of these steps were performed in engagement with medical experts, including a final evaluation of the dashboard results by an expert medical panel. We show that LLMs, in particular BERT and SciBERT, can be readily deployed to extract some relevant explanations to support clinical usage. To understand the value-add of the contextual explanations, the expert panel evaluated these regarding actionable insights in the relevant clinical setting. Overall, our paper is one of the first end-to-end analyses identifying the feasibility and benefits of contextual explanations in a real-world clinical use case.

Leveraging Clinical Context for User-Centered Explainability: A Diabetes Use Case

Jul 15, 2021

Abstract:Academic advances of AI models in high-precision domains, like healthcare, need to be made explainable in order to enhance real-world adoption. Our past studies and ongoing interactions indicate that medical experts can use AI systems with greater trust if there are ways to connect the model inferences about patients to explanations that are tied back to the context of use. Specifically, risk prediction is a complex problem of diagnostic and interventional importance to clinicians wherein they consult different sources to make decisions. To enable the adoption of the ever improving AI risk prediction models in practice, we have begun to explore techniques to contextualize such models along three dimensions of interest: the patients' clinical state, AI predictions about their risk of complications, and algorithmic explanations supporting the predictions. We validate the importance of these dimensions by implementing a proof-of-concept (POC) in type-2 diabetes (T2DM) use case where we assess the risk of chronic kidney disease (CKD) - a common T2DM comorbidity. Within the POC, we include risk prediction models for CKD, post-hoc explainers of the predictions, and other natural-language modules which operationalize domain knowledge and CPGs to provide context. With primary care physicians (PCP) as our end-users, we present our initial results and clinician feedback in this paper. Our POC approach covers multiple knowledge sources and clinical scenarios, blends knowledge to explain data and predictions to PCPs, and received an enthusiastic response from our medical expert.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge