Primoz Kocbek

Plain language adaptations of biomedical text using LLMs: Comparision of evaluation metrics

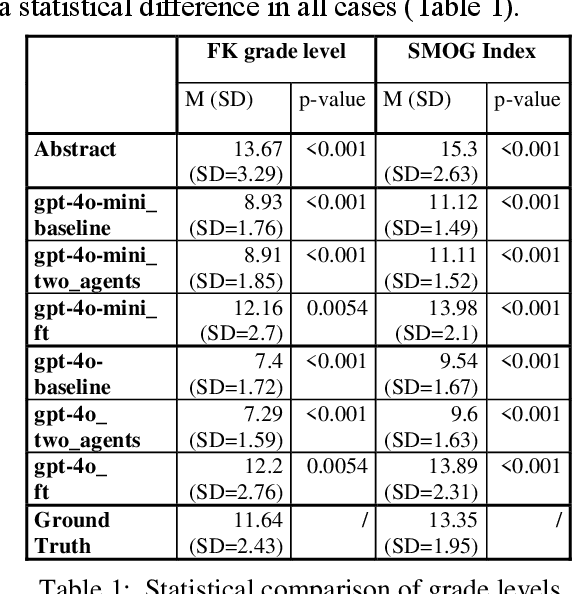

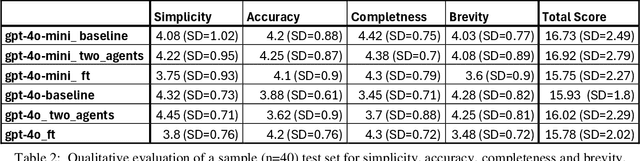

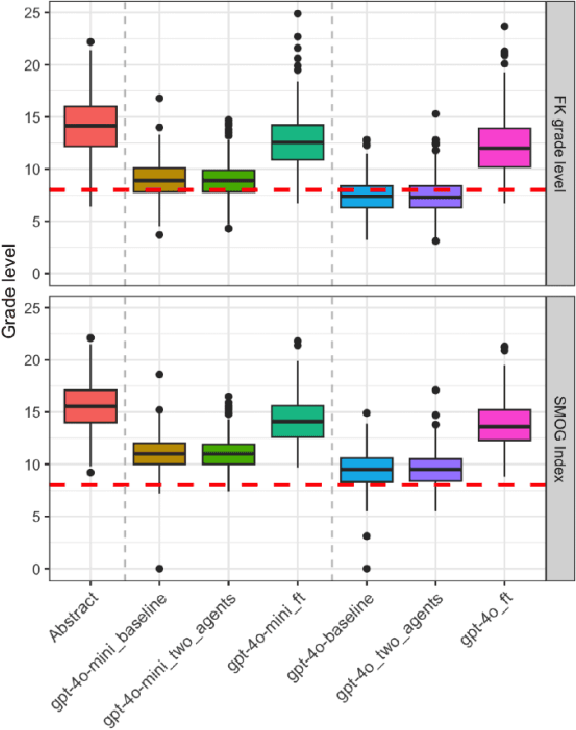

Dec 18, 2025

Abstract: This study investigated the application of Large Language Models (LLMs) for simplifying biomedical texts to enhance health literacy. Using a public dataset that included plain language adaptations of biomedical abstracts, we developed and evaluated several approaches: a baseline approach using a prompt template, a two-AI-agent approach, and a fine-tuning (FT) approach. We selected the OpenAI gpt-4o and gpt-4o-mini models as baselines for further research. We evaluated our approaches with quantitative metrics (Flesch-Kincaid grade level, SMOG Index, SARI, BERTScore, and G-Eval) as well as with a qualitative metric, namely 5-point Likert scales for simplicity, accuracy, completeness, and brevity. Results showed superior performance of gpt-4o-mini and underperformance of the FT approaches. G-Eval, an LLM-based quantitative metric, showed promising results, ranking the approaches similarly to the qualitative metric.

UM_FHS at the CLEF 2025 SimpleText Track: Comparing No-Context and Fine-Tune Approaches for GPT-4.1 Models in Sentence and Document-Level Text Simplification

Dec 18, 2025

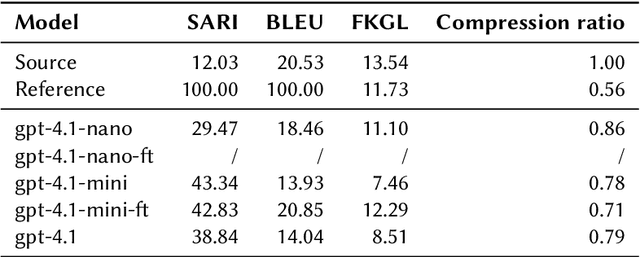

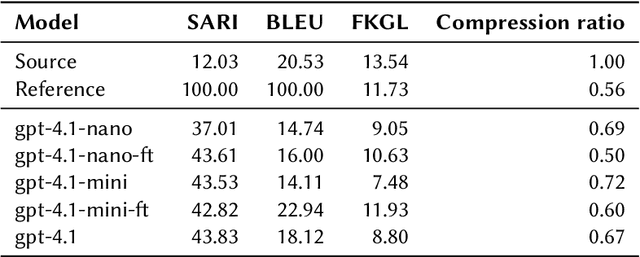

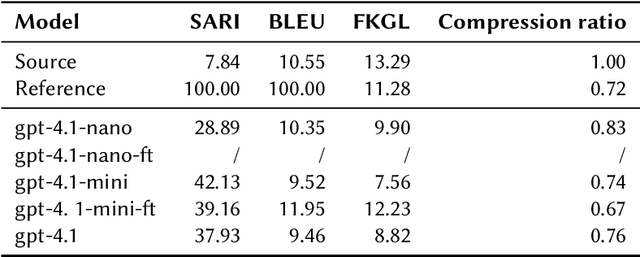

Abstract: This work describes our submission to the CLEF 2025 SimpleText track Task 1, addressing both sentence- and document-level simplification of scientific texts. The methodology centered on the gpt-4.1, gpt-4.1-mini, and gpt-4.1-nano models from OpenAI. Two approaches were compared across these models: a no-context method relying on prompt engineering and a fine-tuned (FT) method. The gpt-4.1-mini model with no context performed robustly at both levels of simplification, while the fine-tuned models showed mixed results, highlighting the complexities of simplifying text at different granularities; gpt-4.1-nano-ft stood out at document-level simplification in one case.

UM_FHS at TREC 2024 PLABA: Exploration of Fine-tuning and AI agent approach for plain language adaptations of biomedical text

Feb 19, 2025

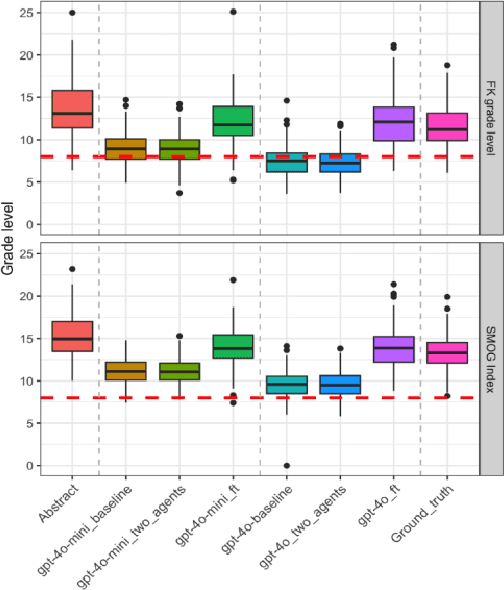

Abstract: This paper describes our submissions to the TREC 2024 PLABA track, which aimed to simplify biomedical abstracts for a K8-level audience (students aged 13 to 14). We tested three approaches using OpenAI's gpt-4o and gpt-4o-mini models: baseline prompt engineering, a two-AI-agent approach, and fine-tuning. Adaptations were evaluated using qualitative metrics (5-point Likert scales for simplicity, accuracy, completeness, and brevity) and quantitative readability scores (Flesch-Kincaid grade level, SMOG Index). Results indicated that the two-agent approach and baseline prompt engineering with gpt-4o-mini models showed superior qualitative performance, while fine-tuned models excelled in accuracy and completeness but were less simple. The evaluation results demonstrated that prompt engineering with gpt-4o-mini outperformed iterative improvement strategies via the two-agent approach as well as fine-tuning with gpt-4o. We intend to expand our investigation of the results and explore advanced evaluations.
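The two readability scores named in the abstract follow published formulas, so a rough check against the K8 target can be sketched in a few lines. The sketch below is illustrative only and is not the authors' evaluation code: the syllable counter is a crude vowel-group heuristic (an assumption; dedicated readability tools use better rules), and the function names are hypothetical.

```python
import math
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (assumption): count vowel groups, drop a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str):
    """Return (sentences, words, syllables, polysyllabic words) for a text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = [count_syllables(w) for w in words]
    polysyllables = sum(1 for s in syllables if s >= 3)
    return len(sentences), len(words), sum(syllables), polysyllables


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    n_sent, n_words, n_syll, _ = text_stats(text)
    return 0.39 * n_words / n_sent + 11.8 * n_syll / n_words - 15.59


def smog_index(text: str) -> float:
    """SMOG Index: 1.0430*sqrt(polysyllables * 30/sentences) + 3.1291."""
    n_sent, _, _, n_poly = text_stats(text)
    return 1.0430 * math.sqrt(n_poly * 30 / n_sent) + 3.1291


if __name__ == "__main__":
    original = "Hypertension increases cardiovascular mortality."
    adapted = "High blood pressure raises the risk of dying from heart disease."
    for label, text in [("original", original), ("adapted", adapted)]:
        print(label, round(flesch_kincaid_grade(text), 2), round(smog_index(text), 2))
```

Both scores estimate a school grade, so an adaptation aimed at a K8 audience would be expected to score at or below roughly 8. Scores on very short texts are unstable, and SMOG in particular was designed for samples of about 30 sentences.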

Chapter 7 Review of Data-Driven Generative AI Models for Knowledge Extraction from Scientific Literature in Healthcare

Nov 18, 2024

Abstract: This review examines the development of abstractive NLP-based text summarization approaches and compares them to existing techniques for extractive summarization. A brief history of text summarization from the 1950s to the introduction of pre-trained language models such as Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformers (GPT) is presented. In total, 60 studies were identified in PubMed and Web of Science, of which 29 were excluded and 24 were read and evaluated for eligibility, resulting in the use of seven studies for further analysis. This chapter also includes a section with examples, including a comparison between GPT-3 and state-of-the-art GPT-4 solutions in scientific text summarisation. Natural language processing has not yet reached its full potential in the generation of brief textual summaries. As there are acknowledged concerns that must be addressed, we can expect a gradual introduction of such models in practice.

Improving Primary Healthcare Workflow Using Extreme Summarization of Scientific Literature Based on Generative AI

Jul 24, 2023

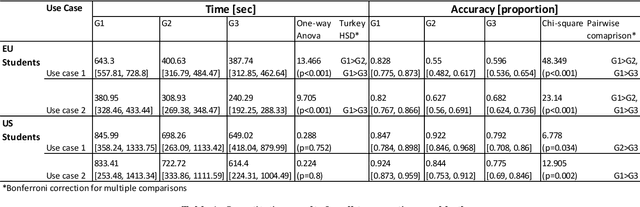

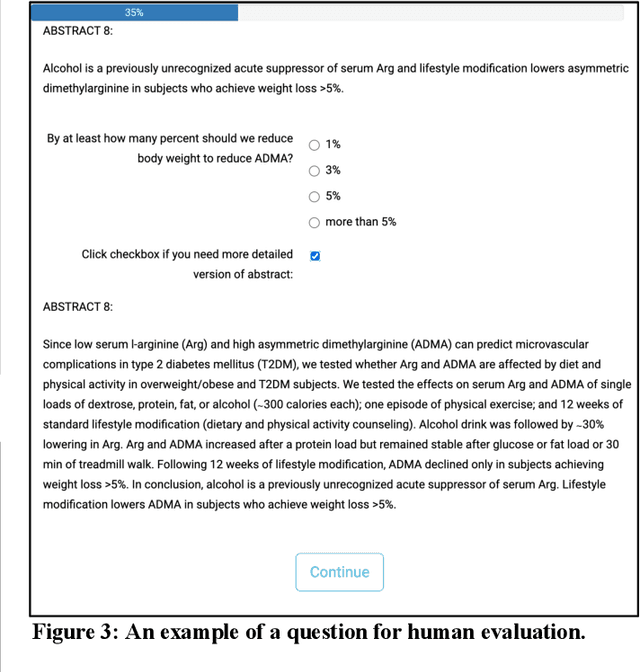

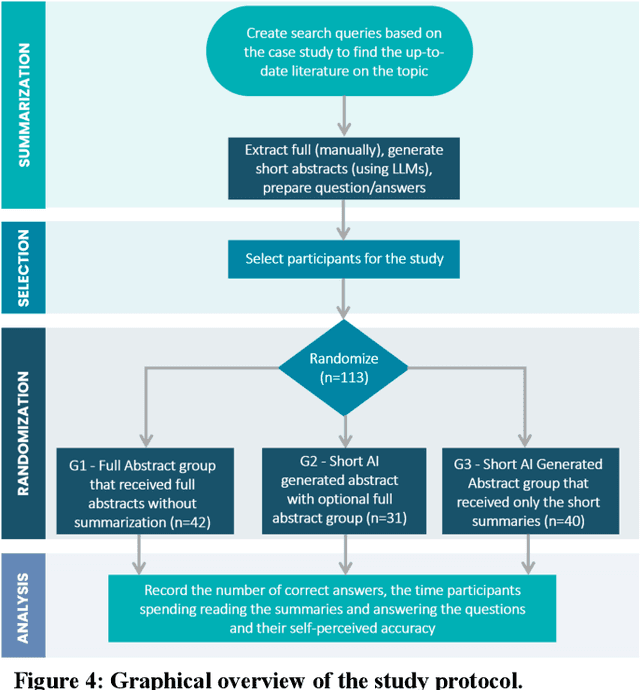

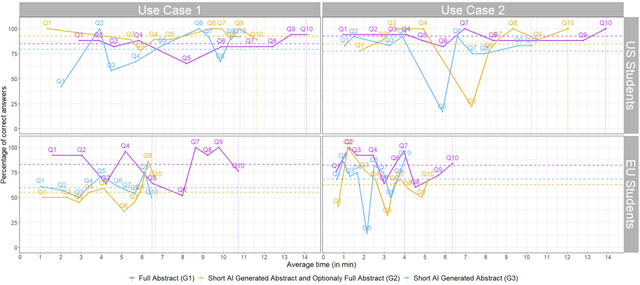

Abstract: Primary care professionals struggle to keep up to date with the latest scientific literature critical in guiding evidence-based practice related to their daily work. To help address this problem, we employed generative artificial intelligence techniques based on large-scale language models to summarize abstracts of scientific papers. Our objective is to investigate the potential of generative artificial intelligence to reduce the cognitive load experienced by practitioners, and thus its ability to alleviate mental effort and burden. The study participants were provided with two use cases related to preventive care and behavior change, simulating a search for new scientific literature. The study included 113 university students from Slovenia and the United States randomized into three distinct study groups. The first group was assigned to the full abstracts. The second group was assigned to the short abstracts generated by AI. The third group had the option to select a full abstract in addition to the AI-generated short summary. Each use case study included ten retrieved abstracts. Our research demonstrates that the use of generative AI for literature review is efficient and effective. The time needed to answer questions related to the content of abstracts was significantly lower in groups two and three compared to the first group using full abstracts. The results, however, also show significantly lower accuracy in extracted knowledge in cases where the full abstract was not available. Such a disruptive technology could significantly reduce the time required for healthcare professionals to keep up with the most recent scientific literature; nevertheless, further developments are needed to help them comprehend the knowledge accurately.

Local Interpretability of Calibrated Prediction Models: A Case of Type 2 Diabetes Mellitus Screening Test

Jun 02, 2020

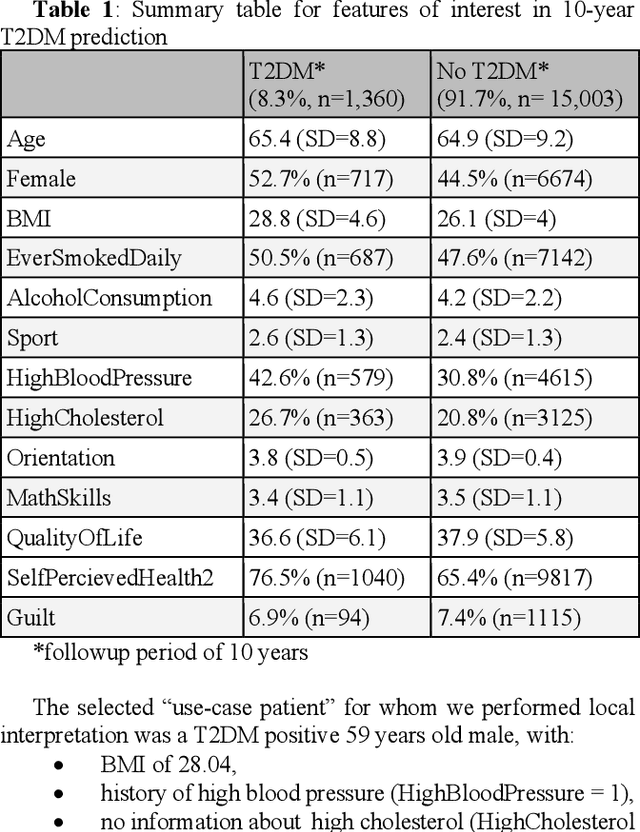

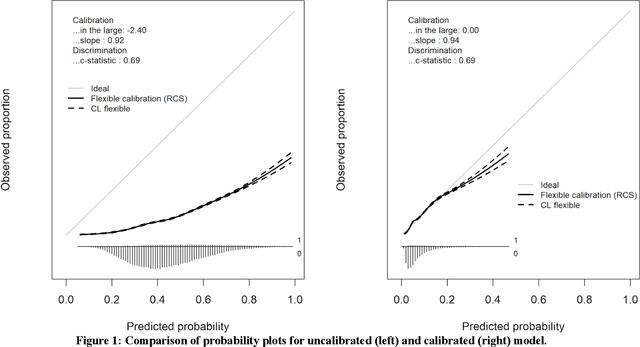

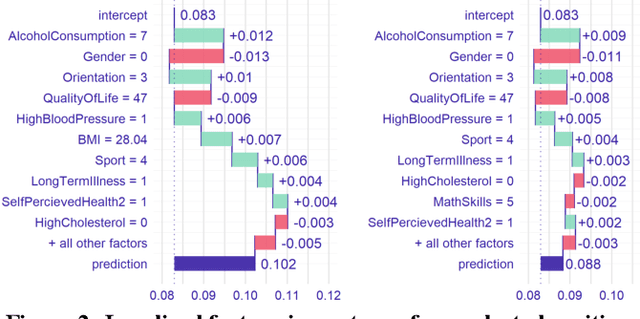

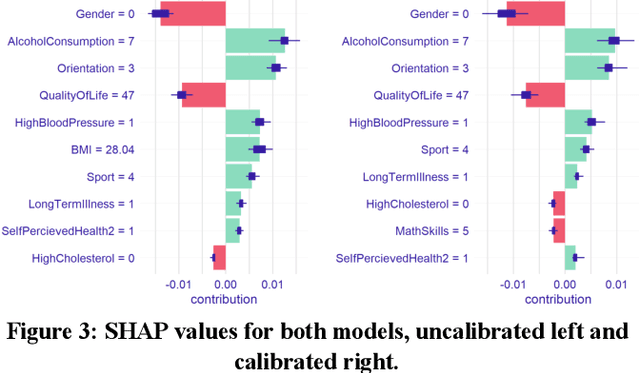

Abstract: Machine Learning (ML) models are often complex and difficult to interpret due to their 'black-box' characteristics. Interpretability of an ML model is usually defined as the degree to which a human can understand the cause of decisions reached by the model. Interpretability is of extremely high importance in many fields of healthcare due to the high levels of risk related to decisions based on ML models. Calibration of ML model outputs is another issue often overlooked in the application of ML models in practice. This paper represents early work examining the impact of prediction model calibration on the interpretability of the results. We present a use case of a patient in a diabetes screening prediction scenario and visualize the results using three different techniques to demonstrate the differences between calibrated and uncalibrated regularized regression models.
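Calibration here refers to how well predicted probabilities match observed outcome frequencies. The abstract does not say how calibration was performed or measured, so the following is only a generic sketch of two common checks, the Brier score and a binned reliability table with the expected calibration error derived from it. All function names and the toy data are assumptions for illustration.

```python
def brier_score(probs, labels):
    """Mean squared difference between predicted probability and the 0/1 outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def reliability_bins(probs, labels, n_bins=5):
    """Group predictions into equal-width probability bins.

    Returns one (mean predicted probability, observed positive rate, count)
    tuple per non-empty bin.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            rate = sum(y for _, y in members) / len(members)
            rows.append((mean_p, rate, len(members)))
    return rows


def expected_calibration_error(probs, labels, n_bins=5):
    """Count-weighted average gap between predicted and observed rates."""
    n = len(probs)
    return sum(
        count / n * abs(mean_p - rate)
        for mean_p, rate, count in reliability_bins(probs, labels, n_bins)
    )


if __name__ == "__main__":
    # Toy example: a model that predicts 0.9 for patients of whom only 1 in 4 is positive.
    probs = [0.9, 0.9, 0.9, 0.9]
    labels = [1, 0, 0, 0]
    print(brier_score(probs, labels), expected_calibration_error(probs, labels))
```

A well-calibrated model has reliability rows in which the mean predicted probability and the observed rate are close, which is what makes a predicted risk readable as an actual risk for an individual patient.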

Interpretability of machine learning based prediction models in healthcare

Feb 20, 2020

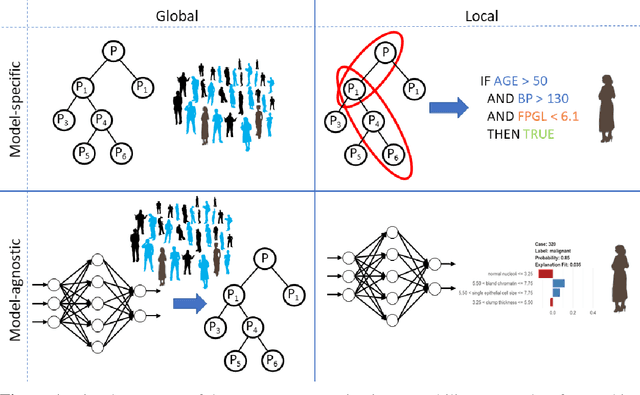

Abstract: There is a need to ensure that machine learning models are interpretable. Higher interpretability of a model means easier comprehension and explanation of future predictions for end-users. Further, interpretable machine learning models allow healthcare experts to make reasonable, data-driven, and personalized decisions that can ultimately lead to a higher quality of service in healthcare. Generally, we can classify interpretability approaches into two groups: the first focuses on personalized interpretation (local interpretability), while the second summarizes prediction models on a population level (global interpretability). Alternatively, we can group interpretability methods into model-specific techniques, which are designed to interpret predictions generated by a specific model, such as a neural network, and model-agnostic approaches, which provide easy-to-understand explanations of predictions made by any machine learning model. Here, we give an overview of interpretability approaches and provide examples of practical interpretability of machine learning in different areas of healthcare, including prediction of health-related outcomes, optimizing treatments, and improving the efficiency of screening for specific conditions. Further, we outline future directions for interpretable machine learning and highlight the importance of developing algorithmic solutions that can enable machine learning-driven decision making in high-stakes healthcare problems.
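The local versus global distinction can be made concrete with the simplest possible case, a linear model. The sketch below is a model-specific illustration under that assumption and is not taken from the paper: a local explanation is each feature's contribution to one patient's prediction relative to a population baseline, and a global summary averages the absolute contributions over all patients. The function names, weights, and data are hypothetical.

```python
def local_contributions(weights, x, baseline):
    """Per-feature contribution to one prediction, relative to a baseline input."""
    return [w * (xi - bi) for w, xi, bi in zip(weights, x, baseline)]


def global_importance(weights, rows):
    """Mean absolute local contribution per feature across all rows."""
    n = len(rows)
    baseline = [sum(col) / n for col in zip(*rows)]  # feature-wise mean
    totals = [0.0] * len(weights)
    for row in rows:
        for j, c in enumerate(local_contributions(weights, row, baseline)):
            totals[j] += abs(c)
    return [t / n for t in totals]


if __name__ == "__main__":
    weights = [2.0, -1.0]             # hypothetical coefficients of a linear model
    rows = [[1.0, 0.0], [3.0, 4.0]]   # hypothetical feature values for two patients
    baseline = [2.0, 2.0]             # feature-wise mean of rows
    print(local_contributions(weights, rows[0], baseline))  # local view, patient 0
    print(global_importance(weights, rows))                 # global view
```

Model-agnostic methods aim to produce the same kind of per-feature attributions without relying on the model's internal structure.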
