Murat Kantarcioglu

University of Texas at Dallas

Bypassing AI Control Protocols via Agent-as-a-Proxy Attacks

Feb 04, 2026

Abstract:As AI agents automate critical workloads, they remain vulnerable to indirect prompt injection (IPI) attacks. Current defenses rely on monitoring protocols that jointly evaluate an agent's Chain-of-Thought (CoT) and tool-use actions to ensure alignment with user intent. We demonstrate that these monitoring-based defenses can be bypassed via a novel Agent-as-a-Proxy attack, where prompt injection attacks treat the agent as a delivery mechanism, bypassing both agent and monitor simultaneously. While prior work on scalable oversight has focused on whether small monitors can supervise large agents, we show that even frontier-scale monitors are vulnerable. Large-scale monitoring models like Qwen2.5-72B can be bypassed by agents with similar capabilities, such as GPT-4o mini and Llama-3.1-70B. On the AgentDojo benchmark, we achieve a high attack success rate against AlignmentCheck and Extract-and-Evaluate monitors under diverse monitoring LLMs. Our findings suggest current monitoring-based agentic defenses are fundamentally fragile regardless of model scale.

Optimal Transport-Guided Adversarial Attacks on Graph Neural Network-Based Bot Detection

Jan 30, 2026

Abstract:The rise of bot accounts on social media poses significant risks to public discourse. To address this threat, modern bot detectors increasingly rely on Graph Neural Networks (GNNs). However, the effectiveness of these GNN-based detectors in real-world settings remains poorly understood. In practice, attackers continuously adapt their strategies and must operate under domain-specific and temporal constraints, which can fundamentally limit the applicability of existing attack methods. As a result, there is a critical need for robust GNN-based bot detection methods under realistic, constraint-aware attack scenarios. To address this gap, we introduce BOCLOAK to systematically evaluate the robustness of GNN-based social bot detection via both edge editing and node injection adversarial attacks under realistic constraints. BOCLOAK constructs a probability measure over spatio-temporal neighbor features and learns an optimal transport geometry that separates human and bot behaviors. It then decodes transport plans into sparse, plausible edge edits that evade detection while obeying real-world constraints. We evaluate BOCLOAK across three social bot datasets, five state-of-the-art bot detectors, and three adversarial defenses, and compare it against four leading graph adversarial attack baselines. BOCLOAK achieves up to 80.13% higher attack success rates while using 99.80% less GPU memory under realistic constraints. Most importantly, BOCLOAK shows that optimal transport provides a lightweight, principled framework for bridging the gap between adversarial attacks and real-world bot detection.

PROVCREATOR: Synthesizing Complex Heterogenous Graphs with Node and Edge Attributes

Jul 28, 2025

Abstract:The rise of graph-structured data has driven interest in graph learning and synthetic data generation. While successful in text and image domains, synthetic graph generation remains challenging -- especially for real-world graphs with complex, heterogeneous schemas. Existing research has focused mostly on homogeneous structures with simple attributes, limiting its usefulness and relevance for application domains requiring semantic fidelity. In this research, we introduce ProvCreator, a synthetic graph framework designed for complex heterogeneous graphs with high-dimensional node and edge attributes. ProvCreator formulates graph synthesis as a sequence generation task, enabling the use of transformer-based large language models. It features a versatile graph-to-sequence encoder-decoder that (1) losslessly encodes graph structure and attributes, (2) efficiently compresses large graphs for contextual modeling, and (3) supports end-to-end, learnable graph generation. To validate our research, we evaluate ProvCreator on two challenging domains: system provenance graphs in cybersecurity and knowledge graphs from the IntelliGraph Benchmark Dataset. In both cases, ProvCreator captures intricate dependencies between structure and semantics, enabling the generation of realistic and privacy-aware synthetic datasets.

Graph Generative Models Evaluation with Masked Autoencoder

Mar 17, 2025

Abstract:In recent years, numerous graph generative models (GGMs) have been proposed. However, evaluating these models remains a considerable challenge, primarily due to the difficulty in extracting meaningful graph features that accurately represent real-world graphs. The traditional evaluation techniques, which rely on graph statistical properties like node degree distribution, clustering coefficients, or Laplacian spectrum, overlook node features and lack scalability. There are newly proposed deep learning-based methods employing graph random neural networks or contrastive learning to extract graph features, demonstrating superior performance compared to traditional statistical methods, but their experimental results also demonstrate that these methods do not always work well across different metrics. Although there are overlaps among these metrics, they are generally not interchangeable, each evaluating generative models from a different perspective. In this paper, we propose a novel method that leverages graph masked autoencoders to effectively extract graph features for GGM evaluations. We conduct extensive experiments on graphs and empirically demonstrate that our method can be more reliable and effective than previously proposed methods across a number of GGM evaluation metrics, such as "Fréchet Distance (FD)" and "MMD Linear". However, no single method stands out consistently across all metrics and datasets. Therefore, this study also aims to raise awareness of the significance and challenges associated with GGM evaluation techniques, especially in light of recent advances in generative models.

A Review of DeepSeek Models' Key Innovative Techniques

Mar 14, 2025

Abstract:DeepSeek-V3 and DeepSeek-R1 are leading open-source Large Language Models (LLMs) for general-purpose tasks and reasoning, achieving performance comparable to state-of-the-art closed-source models from companies like OpenAI and Anthropic -- while requiring only a fraction of their training costs. Understanding the key innovative techniques behind DeepSeek's success is crucial for advancing LLM research. In this paper, we review the core techniques driving the remarkable effectiveness and efficiency of these models, including refinements to the transformer architecture, innovations such as Multi-Head Latent Attention and Mixture of Experts, Multi-Token Prediction, the co-design of algorithms, frameworks, and hardware, the Group Relative Policy Optimization algorithm, post-training with pure reinforcement learning and iterative training alternating between supervised fine-tuning and reinforcement learning. Additionally, we identify several open questions and highlight potential research opportunities in this rapidly advancing field.

A Consensus Privacy Metrics Framework for Synthetic Data

Mar 06, 2025

Abstract:Synthetic data generation is one approach for sharing individual-level data. However, to meet legislative requirements, it is necessary to demonstrate that the individuals' privacy is adequately protected. There is no consolidated standard for measuring privacy in synthetic data. Through an expert panel and consensus process, we developed a framework for evaluating privacy in synthetic data. Our findings indicate that current similarity metrics fail to measure identity disclosure, and their use is discouraged. For differentially private synthetic data, a privacy budget other than close to zero was not considered interpretable. There was consensus on the importance of membership and attribute disclosure, both of which involve inferring personal information about an individual without necessarily revealing their identity. The resultant framework provides precise recommendations for metrics that address these types of disclosures effectively. Our findings further present specific opportunities for future research that can help with widespread adoption of synthetic data.

A Systematic Evaluation of Generative Models on Tabular Transportation Data

Feb 13, 2025

Abstract:The sharing of large-scale transportation data is beneficial for transportation planning and policymaking. However, it also raises significant security and privacy concerns, as the data may include identifiable personal information, such as individuals' home locations. To address these concerns, synthetic data generation based on real transportation data offers a promising solution that allows privacy protection while potentially preserving data utility. Although there are various synthetic data generation techniques, they are often not tailored to the unique characteristics of transportation data, such as the inherent structure of transportation networks formed by all trips in the datasets. In this paper, we use New York City taxi data as a case study to conduct a systematic evaluation of the performance of widely used tabular data generative models. In addition to traditional metrics such as distribution similarity, coverage, and privacy preservation, we propose a novel graph-based metric tailored specifically for transportation data. This metric evaluates the similarity between real and synthetic transportation networks, providing potentially deeper insights into their structural and functional alignment. We also introduce an improved privacy metric to address the limitations of the commonly used one. Our experimental results reveal that existing tabular data generative models often fail to perform as consistently as claimed in the literature, particularly when applied to transportation data use cases. Furthermore, our novel graph metric reveals a significant gap between synthetic and real data. This work underscores the potential need to develop generative models specifically tailored to take advantage of the unique characteristics of emerging domains, such as transportation.

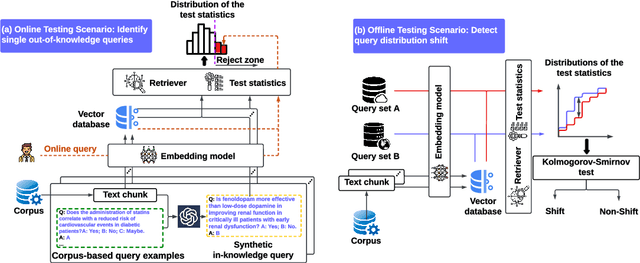

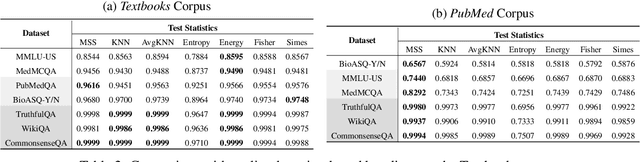

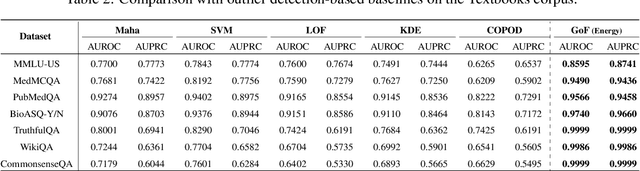

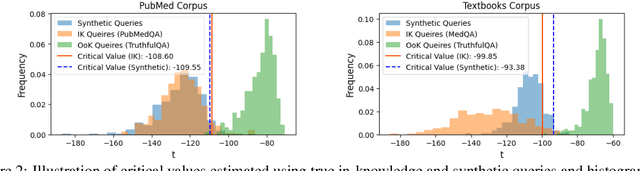

Do You Know What You Are Talking About? Characterizing Query-Knowledge Relevance For Reliable Retrieval Augmented Generation

Oct 10, 2024

Abstract:Language models (LMs) are known to suffer from hallucinations and misinformation. Retrieval augmented generation (RAG) that retrieves verifiable information from an external knowledge corpus to complement the parametric knowledge in LMs provides a tangible solution to these problems. However, the generation quality of RAG is highly dependent on the relevance between a user's query and the retrieved documents. Inaccurate responses may be generated when the query is outside of the scope of knowledge represented in the external knowledge corpus or if the information in the corpus is out-of-date. In this work, we establish a statistical framework that assesses how well a query can be answered by an RAG system by capturing the relevance of knowledge. We introduce an online testing procedure that employs goodness-of-fit (GoF) tests to inspect the relevance of each user query to detect out-of-knowledge queries with low knowledge relevance. Additionally, we develop an offline testing framework that examines a collection of user queries, aiming to detect significant shifts in the query distribution, which indicate that the knowledge corpus is no longer sufficiently capable of supporting the interests of the users. We demonstrate the capabilities of these strategies through a systematic evaluation on eight question-answering (QA) datasets, the results of which indicate that the new testing framework is an efficient solution to enhance the reliability of existing RAG systems.

EMP: Effective Multidimensional Persistence for Graph Representation Learning

Jan 24, 2024

Abstract:Topological data analysis (TDA) is gaining prominence across a wide spectrum of machine learning tasks that spans from manifold learning to graph classification. A pivotal technique within TDA is persistent homology (PH), which furnishes an exclusive topological imprint of data by tracing the evolution of latent structures as a scale parameter changes. Present PH tools are confined to analyzing data through a single filter parameter. However, many scenarios necessitate the consideration of multiple relevant parameters to attain finer insights into the data. We address this issue by introducing the Effective Multidimensional Persistence (EMP) framework. This framework empowers the exploration of data by simultaneously varying multiple scale parameters. The framework integrates descriptor functions into the analysis process, yielding a highly expressive data summary. It seamlessly integrates established single PH summaries into multidimensional counterparts like EMP Landscapes, Silhouettes, Images, and Surfaces. These summaries represent data's multidimensional aspects as matrices and arrays, aligning effectively with diverse ML models. We provide theoretical guarantees and stability proofs for EMP summaries. We demonstrate EMP's utility in graph classification tasks, showing its effectiveness. Results reveal that EMP enhances various single PH descriptors, outperforming cutting-edge methods on multiple benchmark datasets.

* arXiv admin note: text overlap with arXiv:2401.13157

Using AI Uncertainty Quantification to Improve Human Decision-Making

Sep 19, 2023

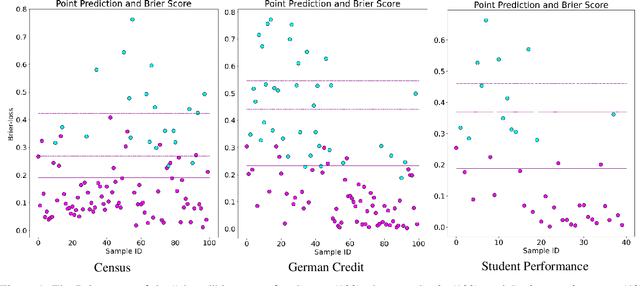

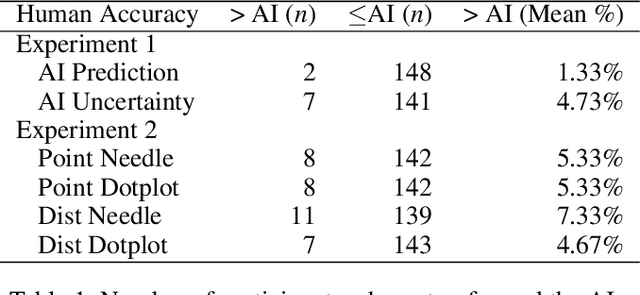

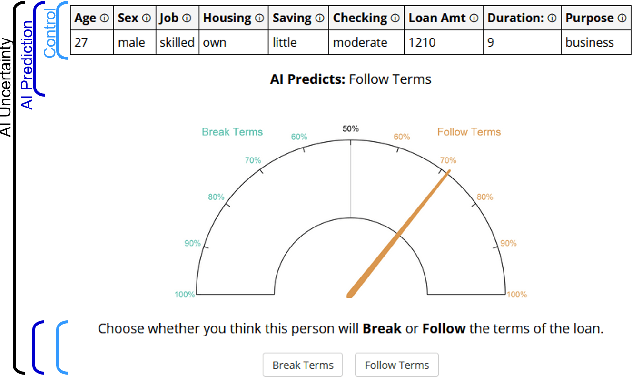

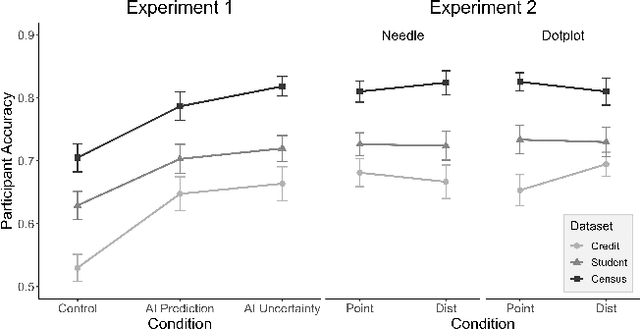

Abstract:AI Uncertainty Quantification (UQ) has the potential to improve human decision-making beyond AI predictions alone by providing additional useful probabilistic information to users. The majority of past research on AI and human decision-making has concentrated on model explainability and interpretability. We implemented instance-based UQ for three real datasets. To achieve this, we trained different AI models for classification for each dataset, and used random samples generated around the neighborhood of the given instance to create confidence intervals for UQ. The computed UQ was calibrated using a strictly proper scoring rule as a form of quality assurance for UQ. We then conducted two preregistered online behavioral experiments that compared objective human decision-making performance under different AI information conditions, including UQ. In Experiment 1, we compared decision-making for no AI (control), AI prediction alone, and AI prediction with a visualization of UQ. We found UQ significantly improved decision-making beyond the other two conditions. In Experiment 2, we focused on comparing different representations of UQ information: Point vs. distribution of uncertainty and visualization type (needle vs. dotplot). We did not find meaningful differences in decision-making performance among these different representations of UQ. Overall, our results indicate that human decision-making can be improved by providing UQ information along with AI predictions, and that this benefit generalizes across a variety of representations of UQ.