Yuzhou Chen

Adaptive Domain Shift in Diffusion Models for Cross-Modality Image Translation

Jan 26, 2026

Abstract:Cross-modal image translation remains brittle and inefficient. Standard diffusion approaches often rely on a single, global linear transfer between domains. We find that this shortcut forces the sampler to traverse off-manifold, high-cost regions, inflating the correction burden and inviting semantic drift. We refer to this shared failure mode as fixed-schedule domain transfer. In this paper, we embed domain-shift dynamics directly into the generative process. Our model predicts a spatially varying mixing field at every reverse step and injects an explicit, target-consistent restoration term into the drift. This in-step guidance keeps large updates on-manifold and shifts the model's role from global alignment to local residual correction. We provide a continuous-time formulation with an exact solution form and derive a practical first-order sampler that preserves marginal consistency. Empirically, across translation tasks in medical imaging, remote sensing, and electroluminescence semantic mapping, our framework improves structural fidelity and semantic consistency while converging in fewer denoising steps.

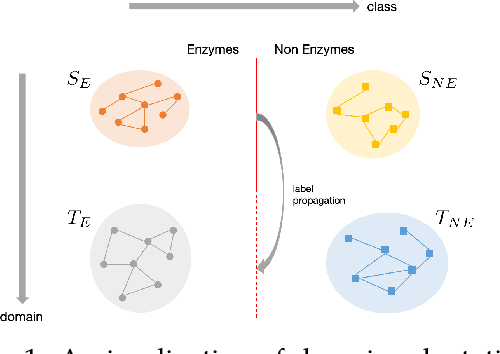

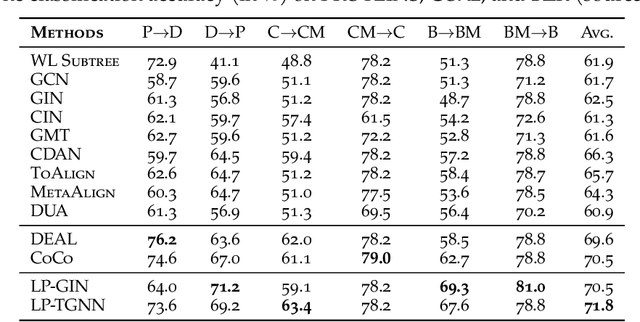

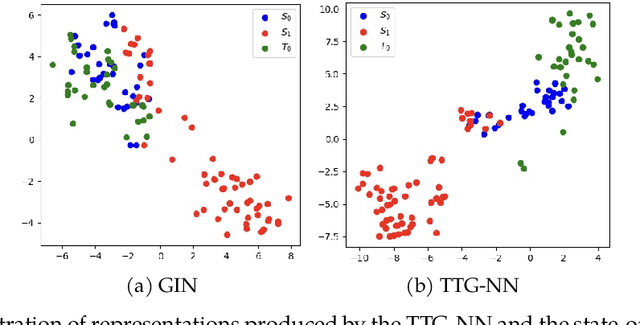

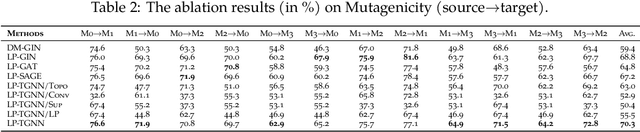

Bridging Domain Adaptation and Graph Neural Networks: A Tensor-Based Framework for Effective Label Propagation

Feb 12, 2025

Abstract:Graph Neural Networks (GNNs) have recently become the predominant tools for studying graph data. Despite state-of-the-art performance on graph classification tasks, GNNs are overwhelmingly trained in a single domain under supervision, thus necessitating a prohibitively high demand for labels and resulting in poorly transferable representations. To address this challenge, we propose the Label-Propagation Tensor Graph Neural Network (LP-TGNN) framework to bridge the gap between graph data and traditional domain adaptation methods. It extracts graph topological information holistically with a tensor architecture and then reduces domain discrepancy through label propagation. It is readily compatible with general GNNs and domain adaptation techniques with minimal adjustment through pseudo-labeling. Experiments on various real-world benchmarks show that our LP-TGNN outperforms baselines by a notable margin. We also validate and analyze each component of the proposed framework in the ablation study.

Predicting Emergency Department Visits for Patients with Type II Diabetes

Dec 12, 2024

Abstract:Over 30 million Americans are affected by Type II diabetes (T2D), a treatable condition with significant health risks. This study aims to develop and validate predictive models using machine learning (ML) techniques to estimate emergency department (ED) visits among patients with T2D. Data for these patients was obtained from the HealthShare Exchange (HSX), focusing on demographic details, diagnoses, and vital signs. Our sample contained 34,151 patients diagnosed with T2D, who accounted for 703,065 visits overall between 2017 and 2021. A workflow integrated EMR data with SDoH for ML predictions. A total of 87 out of 2,555 features were selected for model construction. Various machine learning algorithms, including CatBoost, Ensemble Learning, K-nearest Neighbors (KNN), Support Vector Classification (SVC), Random Forest, and Extreme Gradient Boosting (XGBoost), were employed with tenfold cross-validation to predict whether a patient is at risk of an ED visit. The areas under the ROC curve (AUC) for Random Forest, XGBoost, Ensemble Learning, CatBoost, KNN, and SVC were 0.82, 0.82, 0.82, 0.81, 0.72, and 0.68, respectively. Ensemble Learning and Random Forest models demonstrated superior predictive performance in terms of discrimination, calibration, and clinical applicability. These models are reliable tools for predicting risk of ED visits among patients with T2D. They can estimate future ED demand and assist clinicians in identifying critical factors associated with ED utilization, enabling early interventions to reduce such visits. The top five important features were age, the difference between visitation gaps, visitation gaps, R10 or abdominal and pelvic pain, and the Index of Concentration at the Extremes (ICE) for income.
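The per-model scores in this abstract read as areas under the ROC curve. As a minimal sketch of how such a number can be computed for one binary classifier, the snippet below uses the pairwise (Mann-Whitney) formulation of AUC. The function name `roc_auc` and the toy labels and scores are illustrative assumptions, not the study's code or data.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    AUC equals the probability that a randomly chosen positive example
    receives a higher score than a randomly chosen negative one,
    counting ties as one half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example (hypothetical data): 1 = patient had an ED visit, 0 = no visit.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(labels, scores)
print(auc)
```

Under tenfold cross-validation, a value like this would typically be computed on each held-out fold and then averaged; the quadratic loop is fine for a sketch but a rank-based implementation is preferable at the scale of hundreds of thousands of visits.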

TEAFormers: TEnsor-Augmented Transformers for Multi-Dimensional Time Series Forecasting

Oct 27, 2024

Abstract:Multi-dimensional time series data, such as matrix and tensor-variate time series, are increasingly prevalent in fields such as economics, finance, and climate science. Traditional Transformer models, though adept with sequential data, do not effectively preserve these multi-dimensional structures, as their internal operations in effect flatten multi-dimensional observations into vectors, thereby losing critical multi-dimensional relationships and patterns. To address this, we introduce the Tensor-Augmented Transformer (TEAFormer), a novel method that incorporates tensor expansion and compression within the Transformer framework to maintain and leverage the inherent multi-dimensional structures, thus reducing computational costs and improving prediction accuracy. The core feature of the TEAFormer, the Tensor-Augmentation (TEA) module, utilizes tensor expansion to enhance multi-view feature learning and tensor compression for efficient information aggregation and reduced computational load. The TEA module is not just a specific model architecture but a versatile component that is highly compatible with the attention mechanism and the encoder-decoder structure of Transformers, making it adaptable to existing Transformer architectures. Our comprehensive experiments, which integrate the TEA module into three popular time series Transformer models across three real-world benchmarks, show significant performance enhancements, highlighting the potential of TEAFormers for cutting-edge time series forecasting.

Conditional Uncertainty Quantification for Tensorized Topological Neural Networks

Oct 20, 2024

Abstract:Graph Neural Networks (GNNs) have become the de facto standard for analyzing graph-structured data, leveraging message-passing techniques to capture both structural and node feature information. However, recent studies have raised concerns about the statistical reliability of uncertainty estimates produced by GNNs. This paper addresses this crucial challenge by introducing a novel technique for quantifying uncertainty in non-exchangeable graph-structured data, while simultaneously reducing the size of label prediction sets in graph classification tasks. We propose Conformalized Tensor-based Topological Neural Networks (CF-T2NN), a new approach for rigorous prediction inference over graphs. CF-T2NN employs tensor decomposition and topological knowledge learning to navigate and interpret the inherent uncertainty in decision-making processes. This method enables a more nuanced understanding and handling of prediction uncertainties, enhancing the reliability and interpretability of neural network outcomes. Our empirical validation, conducted across 10 real-world datasets, demonstrates the superiority of CF-T2NN over a wide array of state-of-the-art methods on various graph benchmarks. This work not only enhances the GNN framework with robust uncertainty quantification capabilities but also sets a new standard for reliability and precision in graph-structured data analysis.
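For readers unfamiliar with label prediction sets, the following is a minimal sketch of standard split conformal prediction for classification. It assumes exchangeable calibration data, which is exactly the assumption this paper sets out to relax, so it is background rather than the CF-T2NN procedure. The function names and the nonconformity score `1 - p(true label)` are illustrative choices.

```python
import math


def conformal_quantile(cal_scores, alpha):
    """Finite-sample-corrected quantile of calibration nonconformity scores.

    Returns the ceil((n + 1) * (1 - alpha))-th smallest score, or infinity
    when the calibration set is too small for the requested coverage.
    """
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return float("inf")
    return sorted(cal_scores)[k - 1]


def prediction_set(class_probs, qhat):
    """All labels whose nonconformity score 1 - p(label) is at most qhat."""
    return [label for label, p in enumerate(class_probs) if 1.0 - p <= qhat]


# Hypothetical calibration scores: 1 - (predicted probability of the true class).
cal_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
qhat = conformal_quantile(cal_scores, alpha=0.5)

# Hypothetical softmax output for one test graph with three classes.
print(prediction_set([0.7, 0.2, 0.1], qhat))
```

Smaller sets at the same coverage level indicate a more informative predictor, which is the sense in which the abstract speaks of reducing prediction set size.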

Conditional Prediction ROC Bands for Graph Classification

Oct 20, 2024

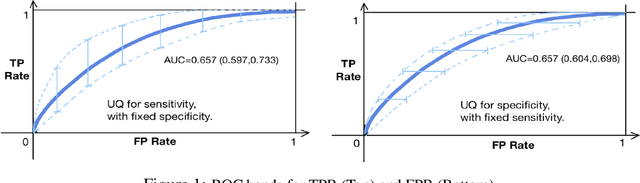

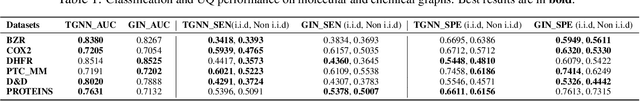

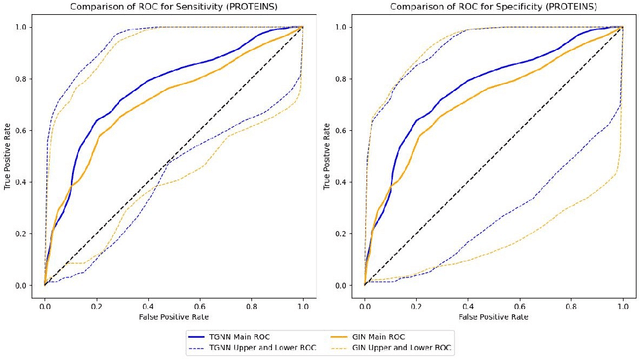

Abstract:Graph classification in medical imaging and drug discovery requires accuracy and robust uncertainty quantification. To address this need, we introduce Conditional Prediction ROC (CP-ROC) bands, offering uncertainty quantification for ROC curves and robustness to distributional shifts in test data. Although developed for Tensorized Graph Neural Networks (TGNNs), CP-ROC is adaptable to general Graph Neural Networks (GNNs) and other machine learning models. We establish statistically guaranteed coverage for CP-ROC under a local exchangeability condition. This addresses uncertainty challenges for ROC curves in the non-i.i.d. setting, ensuring reliability when test graph distributions differ from training data. Empirically, to establish local exchangeability for TGNNs, we introduce a data-driven approach to construct local calibration sets for graphs. Comprehensive evaluations show that CP-ROC significantly improves prediction reliability across diverse tasks. This method enhances uncertainty quantification efficiency and reliability for ROC curves, proving valuable for real-world applications with non-i.i.d. objects.

Tensor-Fused Multi-View Graph Contrastive Learning

Oct 20, 2024

Abstract:Graph contrastive learning (GCL) has emerged as a promising approach to enhance graph neural networks' (GNNs) ability to learn rich representations from unlabeled graph-structured data. However, current GCL models face challenges with computational demands and limited feature utilization, often relying only on basic graph properties like node degrees and edge attributes. This constrains their capacity to fully capture the complex topological characteristics of real-world phenomena represented by graphs. To address these limitations, we propose Tensor-Fused Multi-View Graph Contrastive Learning (TensorMV-GCL), a novel framework that integrates extended persistent homology (EPH) with GCL representations and facilitates multi-scale feature extraction. Our approach uniquely employs tensor aggregation and compression to fuse information from graph and topological features obtained from multiple augmented views of the same graph. By incorporating tensor concatenation and contraction modules, we reduce computational overhead by separating feature tensor aggregation and transformation. Furthermore, we enhance the quality of learned topological features and model robustness through noise-injected EPH. Experiments on molecular, bioinformatic, and social network datasets demonstrate TensorMV-GCL's superiority, outperforming 15 state-of-the-art methods in graph classification tasks across 9 out of 11 benchmarks while achieving comparable results on the remaining two. The code for this paper is publicly available at https://github.com/CS-SAIL/Tensor-MV-GCL.git.

HumanFT: A Human-like Fingertip Multimodal Visuo-Tactile Sensor

Oct 14, 2024

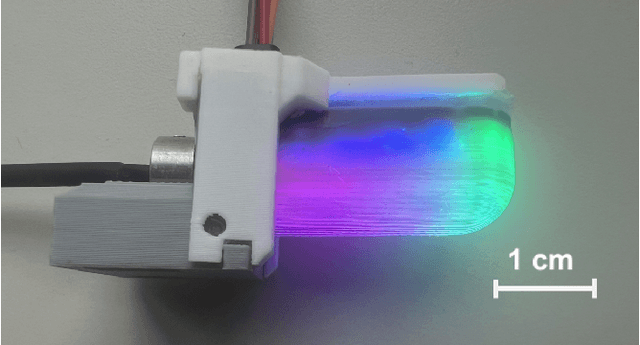

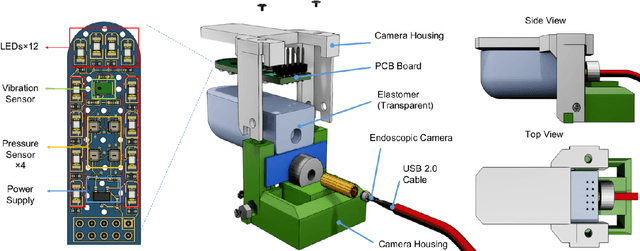

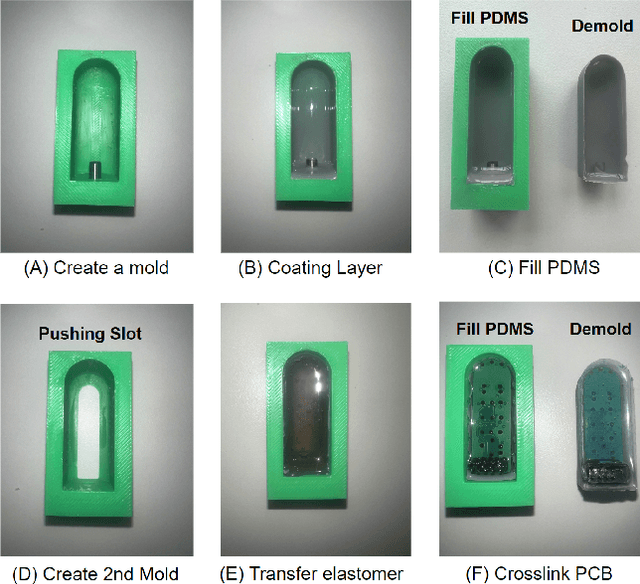

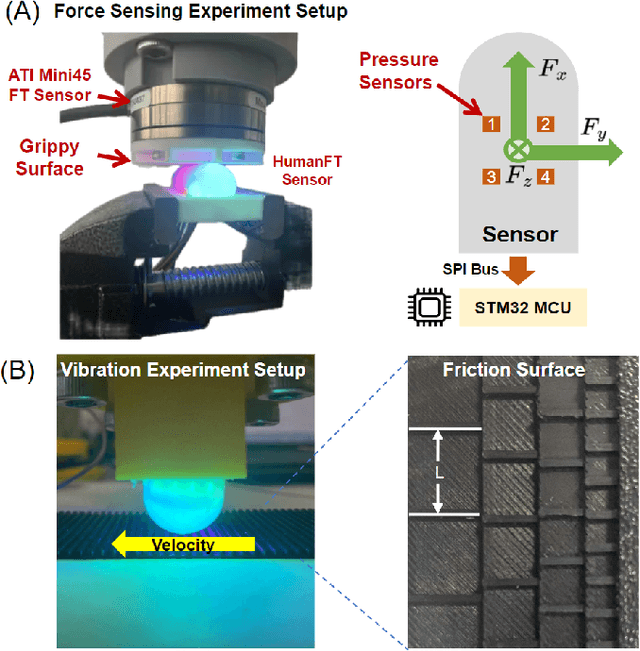

Abstract:Tactile sensors play a crucial role in enabling robots to interact effectively and safely with objects in everyday tasks. In particular, visuotactile sensors have seen increasing usage in two- and three-fingered grippers due to their high-quality feedback. However, a significant gap remains in the development of sensors suitable for humanoid robots, especially five-fingered dexterous hands. One reason is the difficulty of designing and manufacturing sensors that are compact in size. In this paper, we propose HumanFT, a multimodal visuotactile sensor that replicates the shape and functionality of a human fingertip. To bridge the gap between human and robotic tactile sensing, our sensor features real-time force measurements, high-frequency vibration detection, and overtemperature alerts. To achieve this, we developed a suite of fabrication techniques for a new type of elastomer optimized for force propagation and temperature sensing. In addition, our sensor integrates circuits capable of sensing pressure and vibration. These capabilities have been validated through experiments. The proposed design is simple and cost-effective to fabricate. We believe HumanFT can enhance humanoid robots' perception by capturing and interpreting multimodal tactile information.

When Witnesses Defend: A Witness Graph Topological Layer for Adversarial Graph Learning

Sep 24, 2024

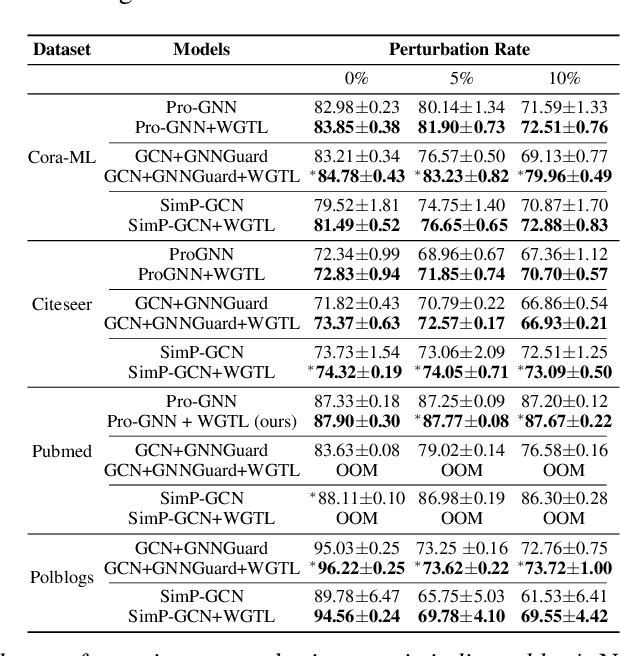

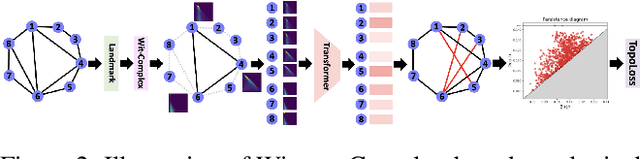

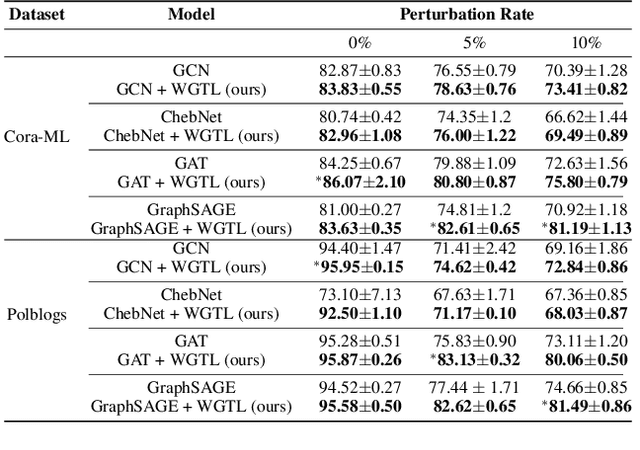

Abstract:Capitalizing on the intuitive premise that shape characteristics are more robust to perturbations, we bridge adversarial graph learning with the emerging tools from computational topology, namely, persistent homology representations of graphs. We introduce the concept of the witness complex to adversarial analysis on graphs, which allows us to focus only on the salient shape characteristics of graphs, yielded by the subset of the most essential nodes (i.e., landmarks), with minimal loss of topological information on the whole graph. The remaining nodes are then used as witnesses, governing which higher-order graph substructures are incorporated into the learning process. Armed with the witness mechanism, we design the Witness Graph Topological Layer (WGTL), which systematically integrates both local and global topological graph feature representations, the impact of which is, in turn, automatically controlled by the robust regularized topological loss. Given the attacker's budget, we derive stability guarantees for both local and global topology encodings and the associated robust topological loss. We illustrate the versatility and efficiency of WGTL by its integration with five GNNs and three existing non-topological defense mechanisms. Our extensive experiments across six datasets demonstrate that WGTL boosts the robustness of GNNs across a range of perturbations and against a range of adversarial attacks, leading to relative gains of up to 18%.

TopoGCL: Topological Graph Contrastive Learning

Jun 25, 2024

Abstract:Graph contrastive learning (GCL) has recently emerged as a new concept which allows for capitalizing on the strengths of graph neural networks (GNNs) to learn rich representations in a wide variety of applications which involve abundant unlabeled information. However, existing GCL approaches largely tend to overlook the important latent information on higher-order graph substructures. We address this limitation by introducing the concepts of topological invariance and extended persistence on graphs to GCL. In particular, we propose a new contrastive mode which targets topological representations of the two augmented views from the same graph, yielded by extracting latent shape properties of the graph at multiple resolutions. Along with the extended topological layer, we introduce a new extended persistence summary, namely, extended persistence landscapes (EPL) and derive its theoretical stability guarantees. Our extensive numerical results on biological, chemical, and social interaction graphs show that the new Topological Graph Contrastive Learning (TopoGCL) model delivers significant performance gains in unsupervised graph classification for 11 out of 12 considered datasets and also exhibits robustness under noisy scenarios.
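As background for the EPL summary named in this abstract, here is a minimal sketch of the ordinary persistence landscape, the standard summary that the name suggests EPL extends. The paper's extended variant is not reproduced here. The function name `landscape` and the toy diagram are illustrative assumptions; each diagram point is a (birth, death) pair with birth < death.

```python
def landscape(diagram, k, t):
    """Value of the k-th persistence landscape function at t.

    Each (birth, death) pair contributes a tent function
    max(0, min(t - birth, death - t)); the k-th landscape is the
    k-th largest tent value at t (k is 1-indexed), or 0 if fewer
    than k pairs exist.
    """
    tents = sorted(
        (max(0.0, min(t - b, d - t)) for b, d in diagram),
        reverse=True,
    )
    return tents[k - 1] if k <= len(tents) else 0.0


# Hypothetical diagram with two features.
diagram = [(0.0, 4.0), (1.0, 3.0)]
for k in (1, 2, 3):
    print(k, landscape(diagram, k, t=2.0))
```

Evaluating the first few landscape functions on a fixed grid of `t` values gives a fixed-length vector, which is what makes landscapes convenient as inputs to a learning model.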
