Marcos Escudero-Viñolo

GBT-SAM: A Parameter-Efficient Depth-Aware Model for Generalizable Brain tumour Segmentation on mp-MRI

Mar 07, 2025

Abstract:Gliomas are brain tumours that stand out for their highly lethal and aggressive nature, which demands a precise approach in their diagnosis. Medical image segmentation plays a crucial role in the evaluation and follow-up of these tumours, allowing specialists to analyse their morphology. However, existing methods for automatic glioma segmentation often lack generalization capability across other brain tumour domains, require extensive computational resources, or fail to fully utilize the multi-parametric MRI (mp-MRI) data used to delineate them. In this work, we introduce GBT-SAM, a novel Generalizable Brain Tumour (GBT) framework that extends the Segment Anything Model (SAM) to brain tumour segmentation tasks. Our method employs a two-step training protocol: first, fine-tuning the patch embedding layer to process the entire mp-MRI modalities, and second, incorporating parameter-efficient LoRA blocks and a Depth-Condition block into the Vision Transformer (ViT) to capture inter-slice correlations. GBT-SAM achieves state-of-the-art performance on the Adult Glioma dataset (Dice Score of $93.54$) while demonstrating robust generalization across Meningioma, Pediatric Glioma, and Sub-Saharan Glioma datasets. Furthermore, GBT-SAM uses less than 6.5M trainable parameters, thus offering an efficient solution for brain tumour segmentation. \\ Our code and models are available at https://github.com/vpulab/med-sam-brain .

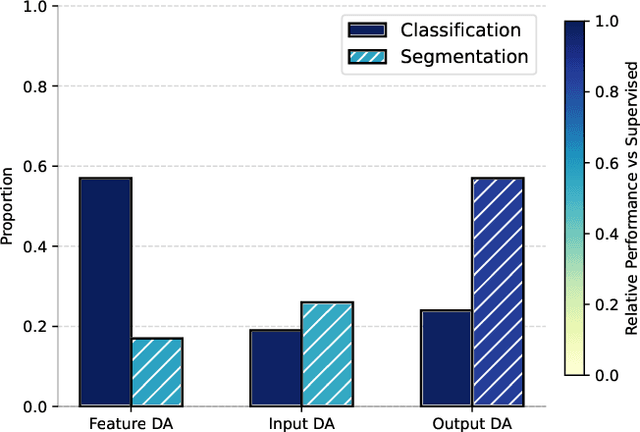

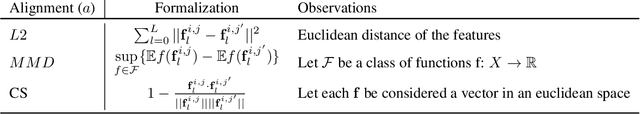

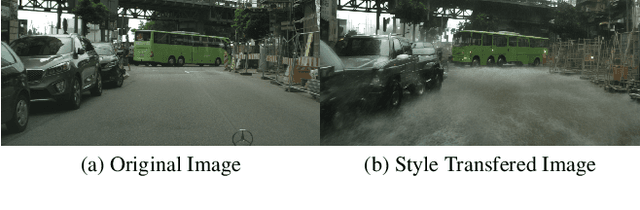

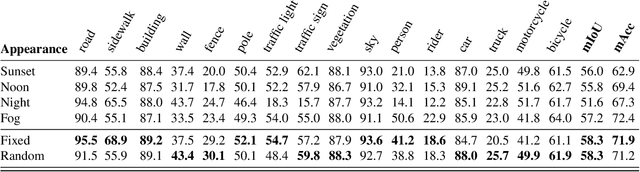

Leveraging Contrastive Learning for Semantic Segmentation with Consistent Labels Across Varying Appearances

Dec 21, 2024

Abstract:This paper introduces a novel synthetic dataset that captures urban scenes under a variety of weather conditions, providing pixel-perfect, ground-truth-aligned images to facilitate effective feature alignment across domains. Additionally, we propose a method for domain adaptation and generalization that takes advantage of the multiple versions of each scene, enforcing feature consistency across different weather scenarios. Our experimental results demonstrate the impact of our dataset in improving performance across several alignment metrics, addressing key challenges in domain adaptation and generalization for segmentation tasks. This research also explores critical aspects of synthetic data generation, such as optimizing the balance between the volume and variability of generated images to enhance segmentation performance. Ultimately, this work sets forth a new paradigm for synthetic data generation and domain adaptation.

VLMs meet UDA: Boosting Transferability of Open Vocabulary Segmentation with Unsupervised Domain Adaptation

Dec 12, 2024

Abstract:Segmentation models are typically constrained by the categories defined during training. To address this, researchers have explored two independent approaches: adapting Vision-Language Models (VLMs) and leveraging synthetic data. However, VLMs often struggle with granularity, failing to disentangle fine-grained concepts, while synthetic data-based methods remain limited by the scope of available datasets. This paper proposes enhancing segmentation accuracy across diverse domains by integrating Vision-Language reasoning with key strategies for Unsupervised Domain Adaptation (UDA). First, we improve the fine-grained segmentation capabilities of VLMs through multi-scale contextual data, robust text embeddings with prompt augmentation, and layer-wise fine-tuning in our proposed Foundational-Retaining Open Vocabulary Semantic Segmentation (FROVSS) framework. Next, we incorporate these enhancements into a UDA framework by employing distillation to stabilize training and cross-domain mixed sampling to boost adaptability without compromising generalization. The resulting UDA-FROVSS framework is the first UDA approach to effectively adapt across domains without requiring shared categories.

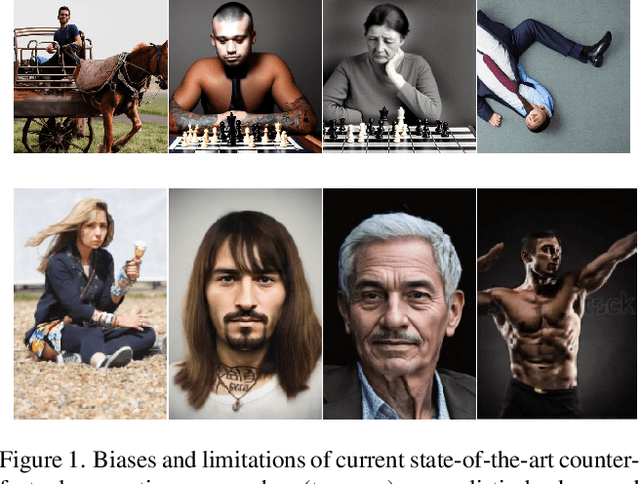

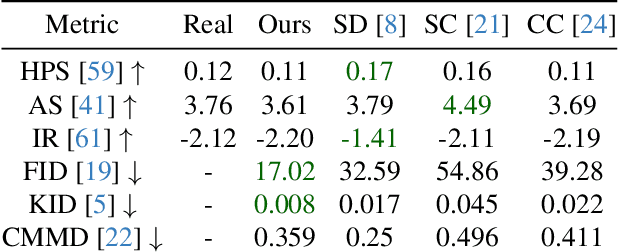

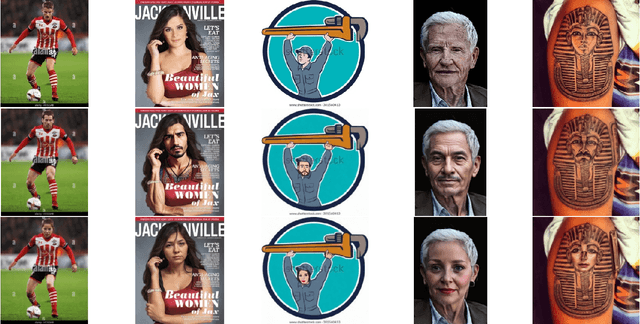

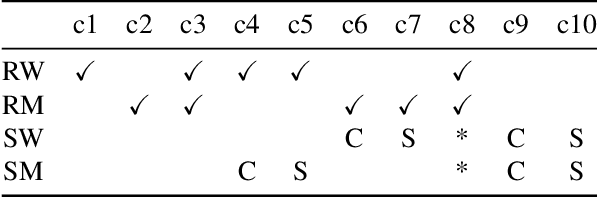

Pinpoint Counterfactuals: Reducing social bias in foundation models via localized counterfactual generation

Dec 12, 2024

Abstract:Foundation models trained on web-scraped datasets propagate societal biases to downstream tasks. While counterfactual generation enables bias analysis, existing methods introduce artifacts by modifying contextual elements like clothing and background. We present a localized counterfactual generation method that preserves image context by constraining counterfactual modifications to specific attribute-relevant regions through automated masking and guided inpainting. When applied to the Conceptual Captions dataset for creating gender counterfactuals, our method results in higher visual and semantic fidelity than state-of-the-art alternatives, while maintaining the performance of models trained using only real data on non-human-centric tasks. Models fine-tuned with our counterfactuals demonstrate measurable bias reduction across multiple metrics, including a decrease in gender classification disparity and balanced person preference scores, while preserving ImageNet zero-shot performance. The results establish a framework for creating balanced datasets that enable both accurate bias profiling and effective mitigation.

Layer-wise Model Merging for Unsupervised Domain Adaptation in Segmentation Tasks

Sep 24, 2024

Abstract:Merging parameters of multiple models has resurfaced as an effective strategy to enhance task performance and robustness, but prior work is limited by the high costs of ensemble creation and inference. In this paper, we leverage the abundance of freely accessible trained models to introduce a cost-free approach to model merging. It focuses on a layer-wise integration of merged models, aiming to maintain the distinctiveness of the task-specific final layers while unifying the initial layers, which are primarily associated with feature extraction. This approach ensures parameter consistency across all layers, essential for boosting performance. Moreover, it facilitates seamless integration of knowledge, enabling effective merging of models from different datasets and tasks. Specifically, we investigate its applicability in Unsupervised Domain Adaptation (UDA), an unexplored area for model merging, for Semantic and Panoptic Segmentation. Experimental results demonstrate substantial UDA improvements without additional costs for merging same-architecture models from distinct datasets ($\uparrow 2.6\%$ mIoU) and different-architecture models with a shared backbone ($\uparrow 6.8\%$ mIoU). Furthermore, merging Semantic and Panoptic Segmentation models increases mPQ by $\uparrow 7\%$. These findings are validated across a wide variety of UDA strategies, architectures, and datasets.

Gradient-based Class Weighting for Unsupervised Domain Adaptation in Dense Prediction Visual Tasks

Jul 01, 2024

Abstract:In unsupervised domain adaptation (UDA), where models are trained on source data (e.g., synthetic) and adapted to target data (e.g., real-world) without target annotations, addressing the challenge of significant class imbalance remains an open issue. Despite considerable progress in bridging the domain gap, existing methods often experience performance degradation when confronted with highly imbalanced dense prediction visual tasks like semantic and panoptic segmentation. This discrepancy becomes especially pronounced due to the lack of equivalent priors between the source and target domains, turning class imbalanced techniques used for other areas (e.g., image classification) ineffective in UDA scenarios. This paper proposes a class-imbalance mitigation strategy that incorporates class-weights into the UDA learning losses, but with the novelty of estimating these weights dynamically through the loss gradient, defining a Gradient-based class weighting (GBW) learning. GBW naturally increases the contribution of classes whose learning is hindered by large-represented classes, and has the advantage of being able to automatically and quickly adapt to the iteration training outcomes, avoiding explicitly curricular learning patterns common in loss-weighing strategies. Extensive experimentation validates the effectiveness of GBW across architectures (convolutional and transformer), UDA strategies (adversarial, self-training and entropy minimization), tasks (semantic and panoptic segmentation), and datasets (GTA and Synthia). Analysing the source of advantage, GBW consistently increases the recall of low represented classes.

The Robust Semantic Segmentation UNCV2023 Challenge Results

Sep 27, 2023

Abstract:This paper outlines the winning solutions employed in addressing the MUAD uncertainty quantification challenge held at ICCV 2023. The challenge was centered around semantic segmentation in urban environments, with a particular focus on natural adversarial scenarios. The report presents the results of 19 submitted entries, with numerous techniques drawing inspiration from cutting-edge uncertainty quantification methodologies presented at prominent conferences in the fields of computer vision and machine learning and journals over the past few years. Within this document, the challenge is introduced, shedding light on its purpose and objectives, which primarily revolved around enhancing the robustness of semantic segmentation in urban scenes under varying natural adversarial conditions. The report then delves into the top-performing solutions. Moreover, the document aims to provide a comprehensive overview of the diverse solutions deployed by all participants. By doing so, it seeks to offer readers a deeper insight into the array of strategies that can be leveraged to effectively handle the inherent uncertainties associated with autonomous driving and semantic segmentation, especially within urban environments.

Self-Supervised Curricular Deep Learning for Chest X-Ray Image Classification

Jan 25, 2023

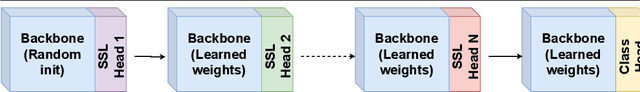

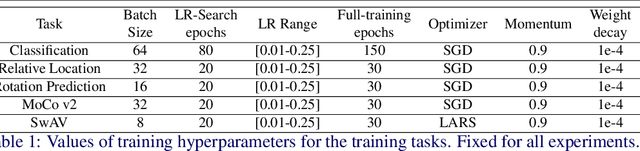

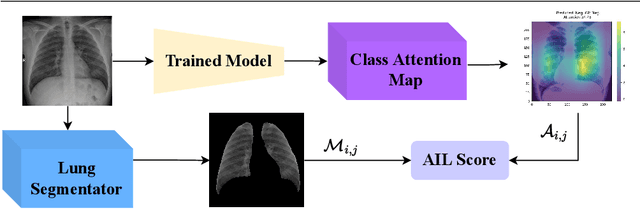

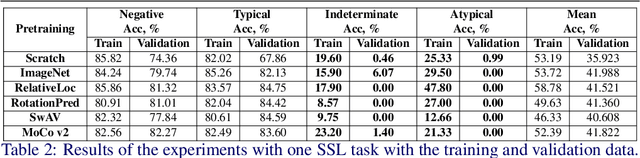

Abstract:Deep learning technologies have already demonstrated a high potential to build diagnosis support systems from medical imaging data, such as Chest X-Ray images. However, the shortage of labeled data in the medical field represents one key obstacle to narrow down the performance gap with respect to applications in other image domains. In this work, we investigate the benefits of a curricular Self-Supervised Learning (SSL) pretraining scheme with respect to fully-supervised training regimes for pneumonia recognition on Chest X-Ray images of Covid-19 patients. We show that curricular SSL pretraining, which leverages unlabeled data, outperforms models trained from scratch, or pretrained on ImageNet, indicating the potential of performance gains by SSL pretraining on massive unlabeled datasets. Finally, we demonstrate that top-performing SSLpretrained models show a higher degree of attention in the lung regions, embodying models that may be more robust to possible external confounding factors in the training datasets, identified by previous works.

Graph Convolutional Network for Multi-Target Multi-Camera Vehicle Tracking

Nov 28, 2022

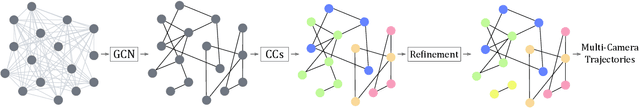

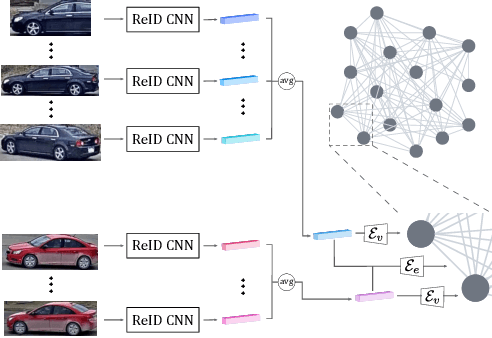

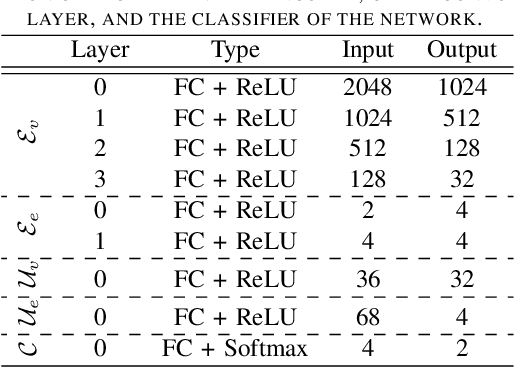

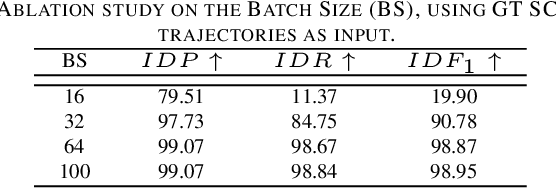

Abstract:This letter focuses on the task of Multi-Target Multi-Camera vehicle tracking. We propose to associate single-camera trajectories into multi-camera global trajectories by training a Graph Convolutional Network. Our approach simultaneously processes all cameras providing a global solution, and it is also robust to large cameras unsynchronizations. Furthermore, we design a new loss function to deal with class imbalance. Our proposal outperforms the related work showing better generalization and without requiring ad-hoc manual annotations or thresholds, unlike compared approaches.

Impact of a DCT-driven Loss in Attention-based Knowledge-Distillation for Scene Recognition

May 04, 2022

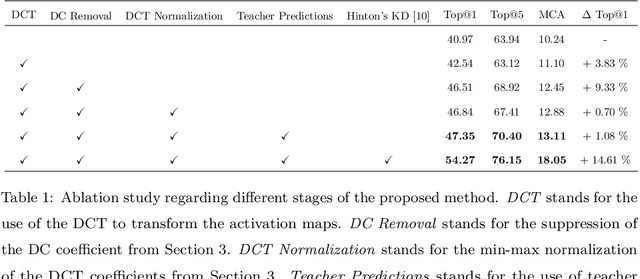

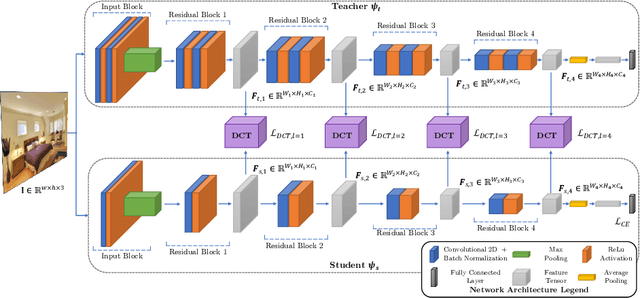

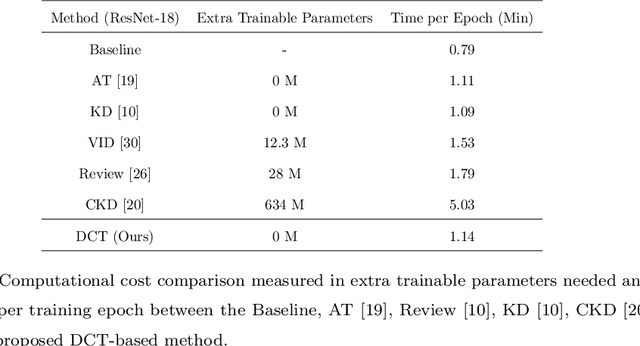

Abstract:Knowledge Distillation (KD) is a strategy for the definition of a set of transferability gangways to improve the efficiency of Convolutional Neural Networks. Feature-based Knowledge Distillation is a subfield of KD that relies on intermediate network representations, either unaltered or depth-reduced via maximum activation maps, as the source knowledge. In this paper, we propose and analyse the use of a 2D frequency transform of the activation maps before transferring them. We pose that\textemdash by using global image cues rather than pixel estimates, this strategy enhances knowledge transferability in tasks such as scene recognition, defined by strong spatial and contextual relationships between multiple and varied concepts. To validate the proposed method, an extensive evaluation of the state-of-the-art in scene recognition is presented. Experimental results provide strong evidences that the proposed strategy enables the student network to better focus on the relevant image areas learnt by the teacher network, hence leading to better descriptive features and higher transferred performance than every other state-of-the-art alternative. We publicly release the training and evaluation framework used along this paper at http://www-vpu.eps.uam.es/publications/DCTBasedKDForSceneRecognition.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge