Elena Luna

Graph Convolutional Network for Multi-Target Multi-Camera Vehicle Tracking

Nov 28, 2022

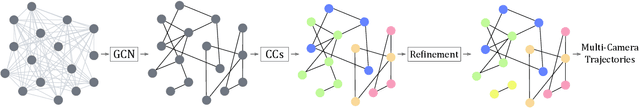

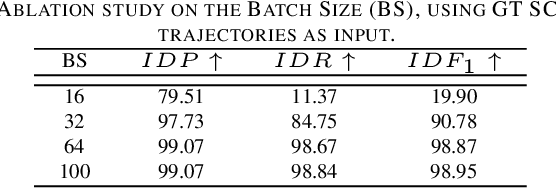

Abstract: This letter focuses on the task of Multi-Target Multi-Camera vehicle tracking. We propose to associate single-camera trajectories into multi-camera global trajectories by training a Graph Convolutional Network. Our approach processes all cameras simultaneously, providing a global solution, and it is also robust to large desynchronization between cameras. Furthermore, we design a new loss function to deal with class imbalance. Our proposal outperforms the related work, showing better generalization without requiring ad-hoc manual annotations or thresholds, unlike the compared approaches.

Graph Neural Networks for Cross-Camera Data Association

Jan 17, 2022

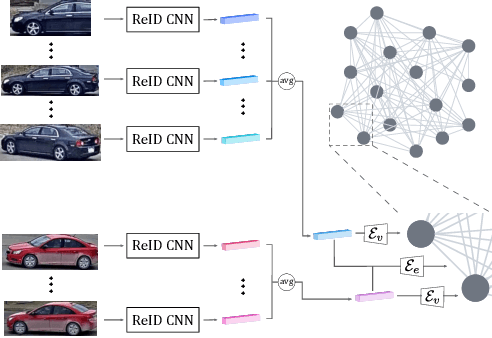

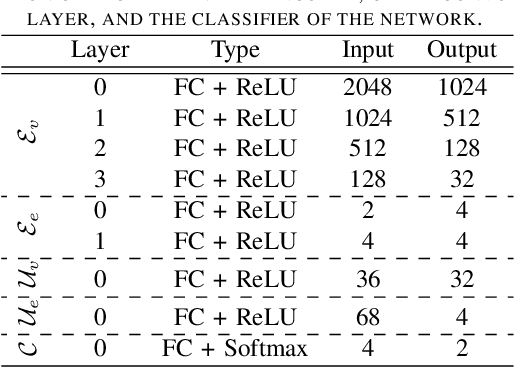

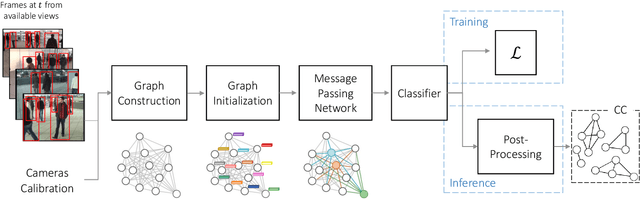

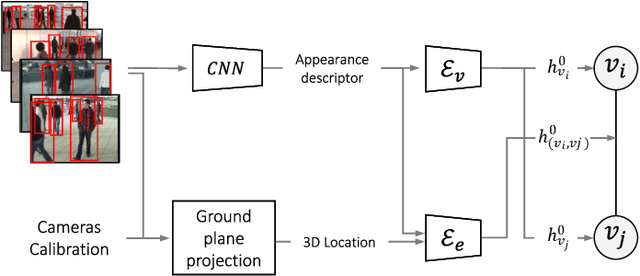

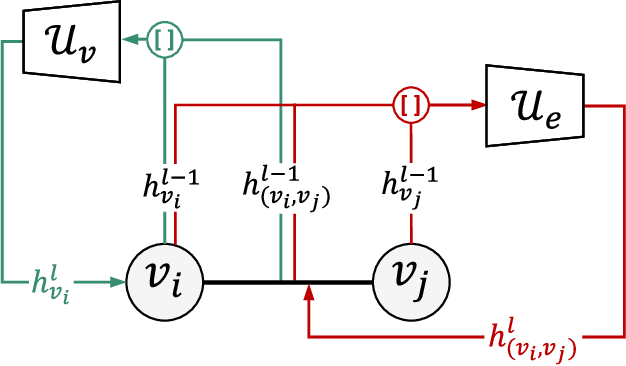

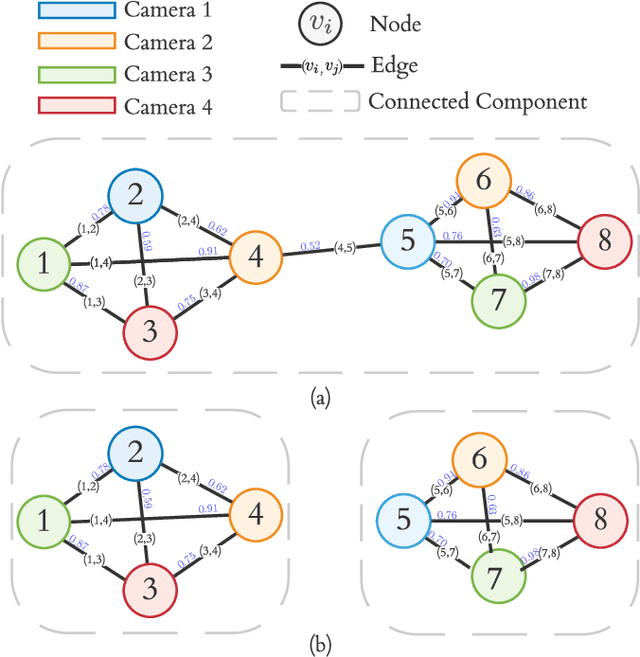

Abstract: Cross-camera image data association is essential for many multi-camera computer vision tasks, such as multi-camera pedestrian detection, multi-camera multi-target tracking, and 3D pose estimation. This association task is typically stated as a bipartite graph matching problem and often solved by applying minimum-cost flow techniques, which may be computationally inefficient with large data. Furthermore, cameras are usually processed in pairs, which yields local solutions rather than a single global solution. Another key issue is the affinity measurement: non-learnable, pre-defined distances, such as the Euclidean and cosine distances, are in widespread use. This paper proposes an efficient approach for cross-camera data association that focuses on a global solution instead of processing cameras in pairs. To avoid the usage of fixed distances, we leverage the connectivity of Graph Neural Networks, previously unused in this scope, using a Message Passing Network to jointly learn features and similarity. We validate the proposal for pedestrian multi-view association, showing results on the EPFL multi-camera pedestrian dataset. Our approach considerably outperforms the data association techniques in the literature, without needing to be trained in the same scenario in which it is tested. Our code is available at http://www-vpu.eps.uam.es/publications/gnn_cca.
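To make the baseline that the abstract argues against more concrete, the following is a minimal sketch of pairwise association between two cameras using a fixed cosine distance. It is not the paper's method. The function names and the toy feature vectors are hypothetical, and a brute-force search over assignments stands in for the minimum-cost flow solvers mentioned in the abstract, so it is only practical for a handful of detections.

```python
from itertools import permutations
from math import sqrt


def cosine_distance(a, b):
    """Fixed, non-learnable affinity: 1 - cosine similarity of two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def match_two_cameras(feats_a, feats_b):
    """Associate detections of camera A with detections of camera B.

    Assumes len(feats_a) <= len(feats_b). Exhaustively tries every
    one-to-one assignment and keeps the one with the lowest total cost
    (illustrative only; the cost grows factorially with the input size).
    """
    cost = [[cosine_distance(a, b) for b in feats_b] for a in feats_a]
    best_cost, best_assignment = float("inf"), None
    for perm in permutations(range(len(feats_b)), len(feats_a)):
        total = sum(cost[i][j] for i, j in enumerate(perm))
        if total < best_cost:
            best_cost, best_assignment = total, perm
    # List of (index in camera A, index in camera B) pairs.
    return list(enumerate(best_assignment))
```

Because this runs on one camera pair at a time, associating more than two views requires repeating it per pair and reconciling the results, which is the local-solution limitation the abstract describes.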

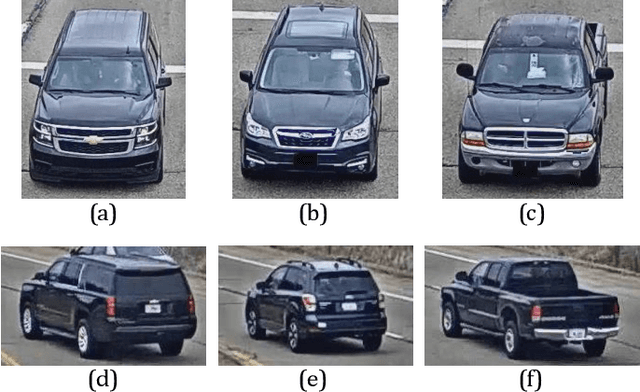

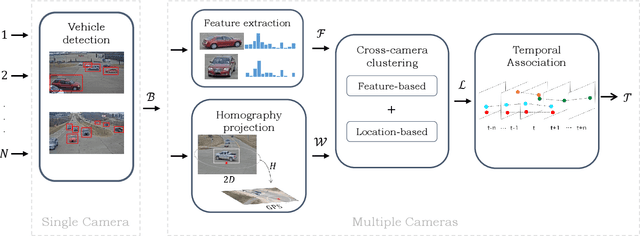

Online Clustering-based Multi-Camera Vehicle Tracking in Scenarios with overlapping FOVs

Feb 08, 2021

Abstract: Multi-Target Multi-Camera (MTMC) vehicle tracking is an essential task of visual traffic monitoring, one of the main research fields of Intelligent Transportation Systems. Several offline approaches have been proposed to address this task; however, they are not compatible with real-world applications due to their high latency and post-processing requirements. In this paper, we present a new low-latency online approach for MTMC tracking in scenarios with partially overlapping fields of view (FOVs), such as road intersections. First, the proposed approach detects vehicles at each camera. Then, the detections are merged across cameras by applying cross-camera clustering based on appearance and location. Lastly, the clusters containing different detections of the same vehicle are temporally associated to compute the tracks on a frame-by-frame basis. The experiments show promising low-latency results while addressing real-world challenges, such as the a priori unknown and time-varying number of targets and their continuous state estimation, without performing any post-processing of the trajectories.
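The cross-camera clustering step can be illustrated with a simplified sketch. This is not the paper's algorithm: it assumes detections are already projected to a common ground plane, uses location only (the appearance cue is omitted), and relies on a hypothetical greedy rule and distance threshold `max_dist`.

```python
from math import dist


def cluster_detections(detections, max_dist=2.0):
    """Greedily group detections from different cameras for one frame.

    detections: list of (camera_id, (x, y)) tuples, with (x, y) assumed
    to be ground-plane coordinates shared by all cameras.
    A detection joins the first cluster whose centroid is within
    max_dist and that has no detection from the same camera yet;
    otherwise it starts a new cluster.
    """
    clusters = []
    for cam, pos in detections:
        for cluster in clusters:
            cams = {c for c, _ in cluster}
            cx = sum(p[0] for _, p in cluster) / len(cluster)
            cy = sum(p[1] for _, p in cluster) / len(cluster)
            if cam not in cams and dist(pos, (cx, cy)) <= max_dist:
                cluster.append((cam, pos))
                break
        else:
            # No compatible cluster found: treat it as a new vehicle.
            clusters.append([(cam, pos)])
    return clusters
```

Restricting each cluster to one detection per camera reflects the idea that a vehicle appears at most once in each view; temporal association of the resulting clusters across frames would be a separate step.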
