Lipeng Chen

Compliance while resisting: a shear-thickening fluid controller for physical human-robot interaction

Feb 03, 2025

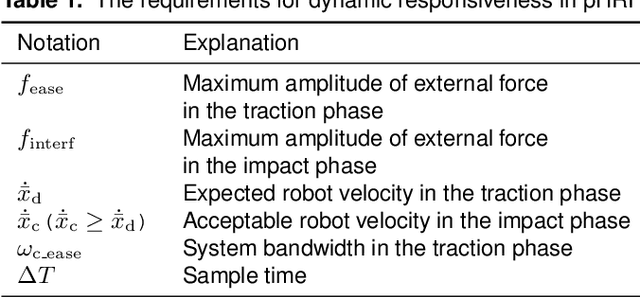

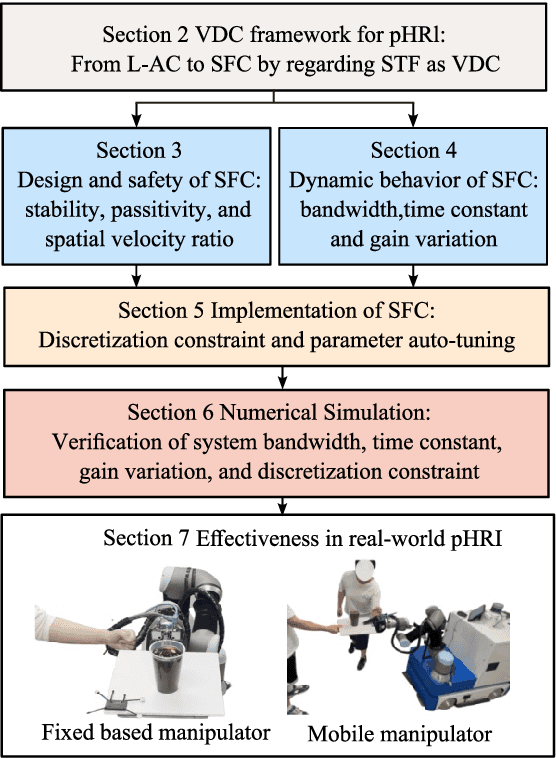

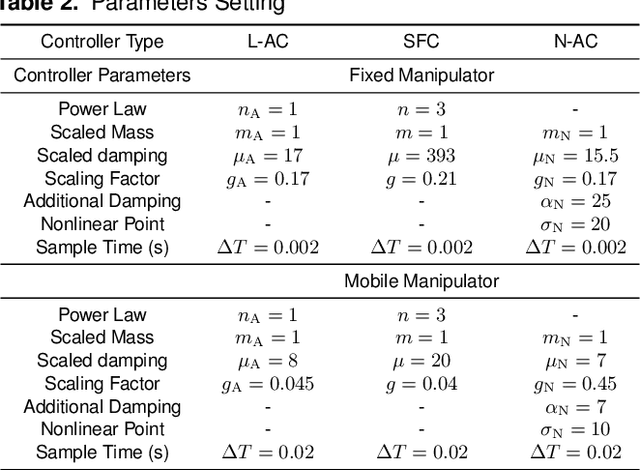

Abstract: Physical human-robot interaction (pHRI) is widely needed in many fields, such as industrial manipulation, home services, and medical rehabilitation, and places higher demands on robot safety. Because the working environment is uncertain, a robot engaged in pHRI may be subjected to unexpected impacts, which affect the safety and smoothness of task execution. The commonly used linear admittance control (L-AC) copes well with high-frequency, small-amplitude noise but is less effective against medium-frequency, high-intensity impacts. Inspired by the solid-liquid phase change of shear-thickening fluid, we propose a Shear-thickening Fluid Control (SFC) that achieves both easy human-robot collaboration and resistance to impact interference. The SFC's stability, passivity, and phase trajectory are analyzed in detail, the frequency and time domain properties are quantified, and parameter constraints in discrete control and coupled stability conditions are provided. We conducted simulations to compare the frequency and time domain characteristics of L-AC, nonlinear admittance controller (N-AC), and SFC, and validated their dynamic properties. In real-world experiments, we compared the performance of L-AC, N-AC, and SFC on both fixed and mobile manipulators. L-AC exhibits weak resistance to impact. N-AC can resist moderate impacts but not high-intensity ones, and may exhibit self-excited oscillations. In contrast, SFC demonstrated superior impact resistance and maintained stable collaboration, enhancing comfort in cooperative water delivery tasks. Additionally, a case study was conducted in a factory setting, further affirming the SFC's capability in facilitating human-robot collaborative manipulation and underscoring its potential in industrial applications.

Neural Quantum Propagators for Driven-Dissipative Quantum Dynamics

Oct 21, 2024

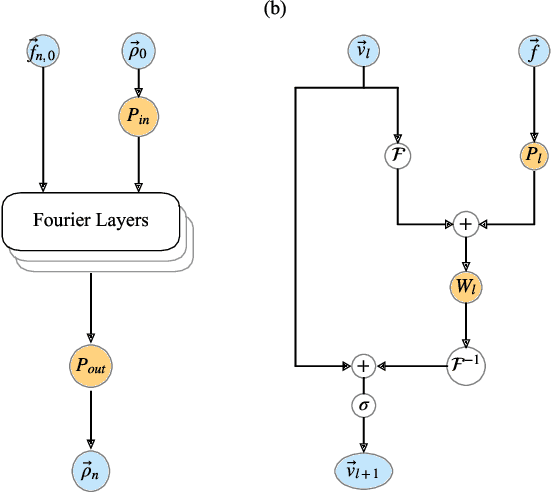

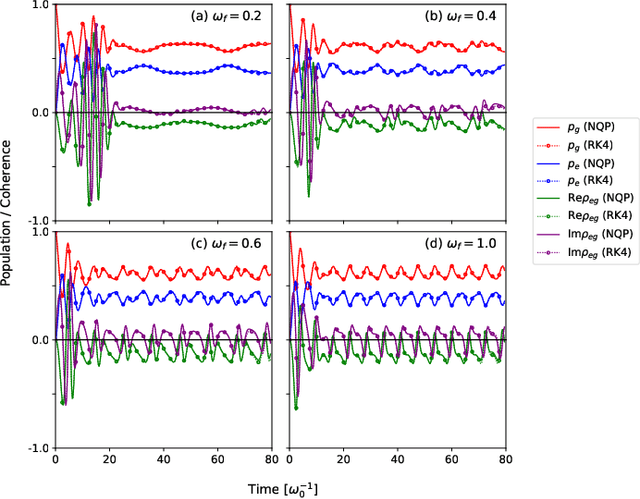

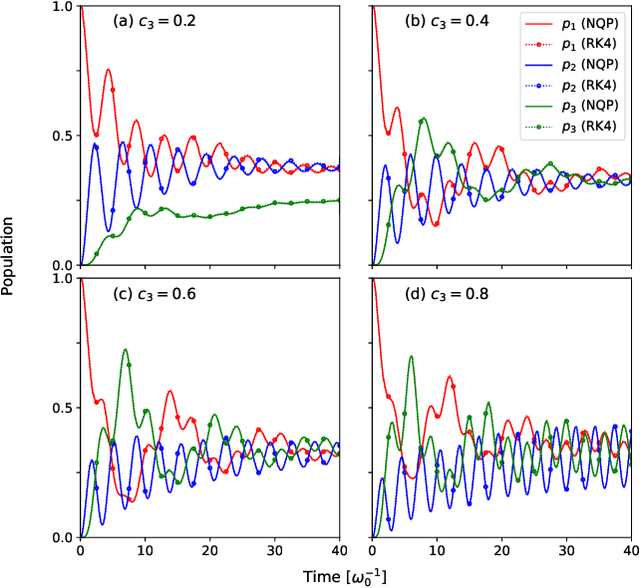

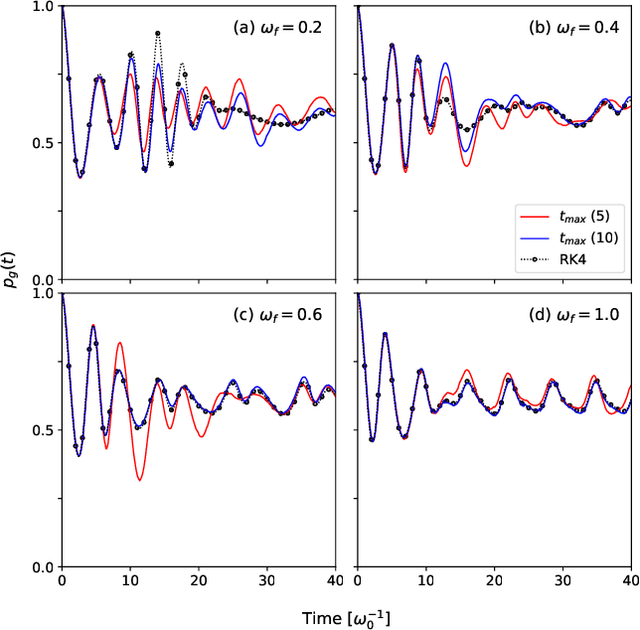

Abstract: Describing the dynamics of strong-laser driven open quantum systems is a very challenging task that requires the solution of highly involved equations of motion. While machine learning techniques are being applied with some success to simulate the time evolution of individual quantum states, their use to approximate time-dependent operators (that can evolve various states) remains largely unexplored. In this work, we develop driven neural quantum propagators (NQP), a universal neural network framework that solves driven-dissipative quantum dynamics by approximating propagators rather than wavefunctions or density matrices. NQP can handle arbitrary initial quantum states, adapt to various external fields, and simulate long-time dynamics, even when trained on far shorter time windows. Furthermore, by appropriately configuring the external fields, our trained NQP can be transferred to systems governed by different Hamiltonians. We demonstrate the effectiveness of our approach by studying the spin-boson and the three-state transition Gamma models.

SD-Net: Symmetric-Aware Keypoint Prediction and Domain Adaptation for 6D Pose Estimation In Bin-picking Scenarios

Mar 14, 2024

Abstract: Despite the success of 6D pose estimation in bin-picking scenarios, existing methods still struggle to produce accurate predictions for symmetric objects and real-world scenarios. The primary bottlenecks include 1) ambiguous keypoints caused by object symmetries; 2) the domain gap between real and synthetic data. To circumvent these problems, we propose a new 6D pose estimation network with symmetric-aware keypoint prediction and self-training domain adaptation (SD-Net). SD-Net builds on pointwise keypoint regression and deep Hough voting to perform reliable keypoint detection under clutter and occlusion. Specifically, at the keypoint prediction stage, we design a robust 3D keypoint selection strategy that considers the symmetry class of objects and equivalent keypoints, which facilitates locating 3D keypoints even in highly occluded scenes. Additionally, we build an effective filtering algorithm on the predicted keypoints to dynamically eliminate multiple ambiguous and outlier keypoint candidates. At the domain adaptation stage, we propose a self-training framework using a student-teacher training scheme. To carefully distinguish reliable predictions, we harness a tailored heuristic for 3D geometry pseudo-labelling based on semi-chamfer distance. On the public Siléane dataset, SD-Net achieves state-of-the-art results, obtaining an average precision of 96%. When testing learning and generalization abilities on the public Parametric datasets, SD-Net is 8% higher than the state-of-the-art method. The code is available at https://github.com/dingthuang/SD-Net.

Deep Reinforcement Learning Based on Local GNN for Goal-conditioned Deformable Object Rearranging

Feb 21, 2023

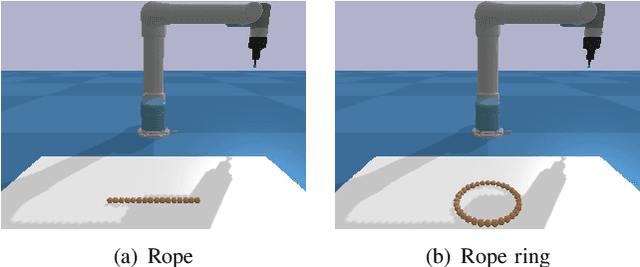

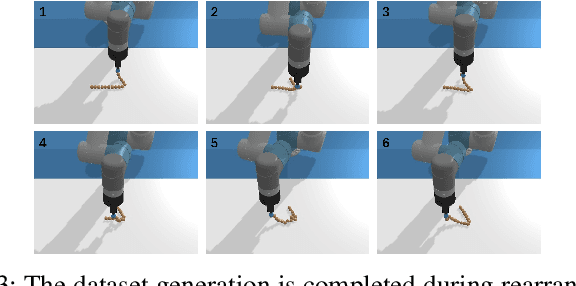

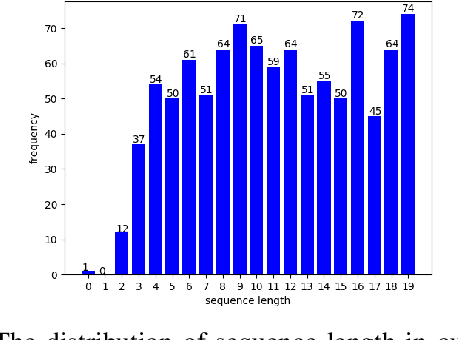

Abstract: Object rearranging is one of the most common deformable manipulation tasks, where the robot needs to rearrange a deformable object into a goal configuration. Previous studies focus on designing an expert system for each specific task using model-based or data-driven approaches, and the application scenarios are therefore limited. Some research has attempted to design a general framework to obtain more advanced manipulation capabilities for deformable rearranging tasks, with much progress achieved in simulation. However, transferring from simulation to reality is difficult due to the limitation of the end-to-end CNN architecture. To address these challenges, we design a local GNN (Graph Neural Network) based learning method, which utilizes two representation graphs to encode keypoints detected from images. Self-attention is applied for graph updating and cross-attention is applied for generating manipulation actions. Extensive experiments have been conducted to demonstrate that our framework is effective in multiple 1-D (rope, rope ring) and 2-D (cloth) rearranging tasks in simulation and can be easily transferred to a real robot by fine-tuning a keypoint detector.

* has been accepted by IEEE/RSJ International Conference on Intelligent Robots and Systems 2022

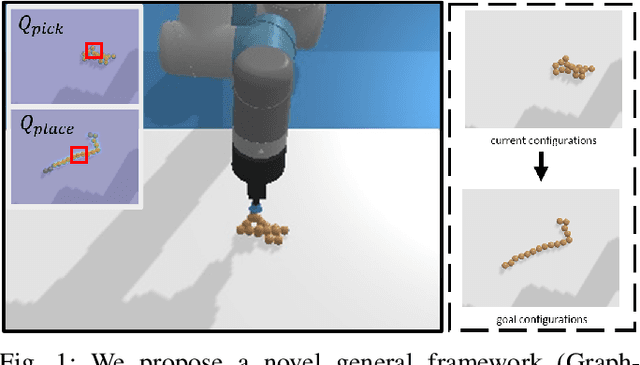

Graph-Transporter: A Graph-based Learning Method for Goal-Conditioned Deformable Object Rearranging Task

Feb 21, 2023

Abstract: Rearranging deformable objects is a long-standing challenge in robotic manipulation owing to the high dimensionality of the configuration space and the complex dynamics of deformable objects. We present a novel framework, Graph-Transporter, for goal-conditioned deformable object rearranging tasks. To tackle the challenge of complex configuration space and dynamics, we represent the configuration space of a deformable object with a graph structure, and the graph features are encoded by a graph convolution network. Our framework adopts an architecture based on Fully Convolutional Network (FCN) to output pixel-wise pick-and-place actions from only visual input. Extensive experiments have been conducted to validate the effectiveness of the graph representation of deformable object configuration. The experimental results also demonstrate that our framework is effective and general in handling goal-conditioned deformable object rearranging tasks.

* has been accepted by IEEE International Conference on Systems, Man and Cybernetics 2022

Egocentric Human Trajectory Forecasting with a Wearable Camera and Multi-Modal Fusion

Nov 04, 2021

Abstract: In this paper, we address the problem of forecasting the trajectory of an egocentric camera wearer (ego-person) in crowded spaces. The trajectory forecasting ability learned from the data of different camera wearers walking around in the real world can be transferred to assist visually impaired people in navigation, as well as to instill human navigation behaviours in mobile robots, enabling better human-robot interactions. To this end, a novel egocentric human trajectory forecasting dataset was constructed, containing real trajectories of people navigating in crowded spaces wearing a camera, as well as extracted rich contextual data. We extract and utilize three different modalities to forecast the trajectory of the camera wearer, i.e., his/her past trajectory, the past trajectories of nearby people, and the environment such as the scene semantics or the depth of the scene. A Transformer-based encoder-decoder neural network model, integrated with a novel cascaded cross-attention mechanism that fuses multiple modalities, has been designed to predict the future trajectory of the camera wearer. Extensive experiments have been conducted, and the results have shown that our model outperforms the state-of-the-art methods in egocentric human trajectory forecasting.

Improving Redundancy Availability: Dynamic Subtasks Modulation for Robots with Redundancy Insufficiency

Dec 10, 2020

Abstract: This work presents an approach for robots to suitably carry out complex applications characterized by the presence of multiple additional constraints or subtasks (e.g. obstacle and self-collision avoidance) but subject to redundancy insufficiency. The proposed approach, based on a novel subtask merging strategy, enforces all subtasks in due course by dynamically modulating a virtual secondary task, where the task status and soft priority are incorporated to improve the overall efficiency of redundancy resolution. The proposed approach greatly improves the redundancy availability by unitizing and deploying subtasks in a fine-grained and compact manner. We build up our control framework on the null space projection, which guarantees the execution of subtasks does not interfere with the primary task. Experimental results on two case studies are presented to show the performance of our approach.

Planning for Muscular and Peripersonal-Space Comfort during Human-Robot Forceful Collaboration

Oct 19, 2018

Abstract: This paper presents a planning algorithm designed to improve cooperative robot behavior concerning human comfort during forceful human-robot physical interaction. Particularly, we are interested in planning for object grasping and positioning that not only ensures stability against the exerted human force but also empowers the robot with capabilities to address and improve human experience and comfort. Herein, comfort is addressed as both the muscular activation level required to perform the cooperative task, and the human spatial perception during the interaction, namely, the peripersonal space. By maximizing both comfort criteria, the robotic system can plan for the task (ensuring grasp stability) and for the human (improving human comfort). We believe this to be a key element to achieve intuitive and fluid human-robot interaction in real applications. Real HRI drilling and cutting experiments illustrated the efficiency of the proposed planner in improving overall comfort and HRI experience without compromising grasp stability.

Manipulation Planning under Changing External Forces

Jul 30, 2018

Abstract: In this work, we present a manipulation planning algorithm for a robot to keep an object stable under changing external forces. We particularly focus on the case where a human may be applying forceful operations, e.g. cutting or drilling, on an object that the robot is holding. The planner produces an efficient plan by intelligently deciding when the robot should change its grasp on the object as the human applies the forces. The planner also tries to choose subsequent grasps such that they will minimize the number of regrasps that will be required in the long term. Furthermore, as it switches from one grasp to the other, the planner solves the problem of bimanual regrasp planning, where the object is not placed on a support surface, but instead is held by a single gripper until the second gripper moves to a new position on the object. This requires the planner to also reason about the stability of the object under gravity. We provide an implementation on a bimanual robot and present experiments to show the performance of our planner.
