Lili Chen

Expanding the Capabilities of Reinforcement Learning via Text Feedback

Feb 02, 2026

Abstract:The success of RL for LLM post-training stems from an unreasonably uninformative source: a single bit of information per rollout as binary reward or preference label. At the other extreme, distillation offers dense supervision but requires demonstrations, which are costly and difficult to scale. We study text feedback as an intermediate signal: richer than scalar rewards, yet cheaper than complete demonstrations. Textual feedback is a natural mode of human interaction and is already abundant in many real-world settings, where users, annotators, and automated judges routinely critique LLM outputs. Towards leveraging text feedback at scale, we formalize a multi-turn RL setup, RL from Text Feedback (RLTF), where text feedback is available during training but not at inference. Therefore, models must learn to internalize the feedback in order to improve their test-time single-turn performance. To do this, we propose two methods: Self Distillation (RLTF-SD), which trains the single-turn policy to match its own feedback-conditioned second-turn generations; and Feedback Modeling (RLTF-FM), which predicts the feedback as an auxiliary objective. We provide theoretical analysis on both methods, and empirically evaluate on reasoning puzzles, competition math, and creative writing tasks. Our results show that both methods consistently outperform strong baselines across benchmarks, highlighting the potential of RL with an additional source of rich supervision at scale.

Bridging Qualitative Rubrics and AI: A Binary Question Framework for Criterion-Referenced Grading in Engineering

Jan 22, 2026

Abstract:PURPOSE OR GOAL: This study investigates how GenAI can be integrated with a criterion-referenced grading framework to improve the efficiency and quality of grading for mathematical assessments in engineering. It specifically explores the challenges demonstrators face with manual, model solution-based grading and how a GenAI-supported system can be designed to reliably identify student errors, provide high-quality feedback, and support human graders. The research also examines human graders' perceptions of the effectiveness of this GenAI-assisted approach. ACTUAL OR ANTICIPATED OUTCOMES: The study found that GenAI achieved an overall grading accuracy of 92.5%, comparable to two experienced human graders. The two researchers, who also served as subject demonstrators, perceived the GenAI as a helpful second reviewer that improved accuracy by catching small errors and provided more complete feedback than they could manually. A central outcome was the significant enhancement of formative feedback. However, they noted the GenAI tool is not yet reliable enough for autonomous use, especially with unconventional solutions. CONCLUSIONS/RECOMMENDATIONS/SUMMARY: This study demonstrates that GenAI, when paired with a structured, criterion-referenced framework using binary questions, can grade engineering mathematical assessments with an accuracy comparable to human experts. Its primary contribution is a novel methodological approach that embeds the generation of high-quality, scalable formative feedback directly into the assessment workflow. Future work should investigate student perceptions of GenAI grading and feedback.

Enhancing Temporal Awareness in LLMs for Temporal Point Processes

Dec 29, 2025

Abstract:Temporal point processes (TPPs) are crucial for analyzing events over time and are widely used in fields such as finance, healthcare, and social systems. These processes are particularly valuable for understanding how events unfold over time, accounting for their irregularity and dependencies. Despite the success of large language models (LLMs) in sequence modeling, applying them to temporal point processes remains challenging. A key issue is that current methods struggle to effectively capture the complex interaction between temporal information and semantic context, which is vital for accurate event modeling. In this context, we introduce TPP-TAL (Temporal Point Processes with Enhanced Temporal Awareness in LLMs), a novel plug-and-play framework designed to enhance temporal reasoning within LLMs. Rather than using the conventional method of simply concatenating event time and type embeddings, TPP-TAL explicitly aligns temporal dynamics with contextual semantics before feeding this information into the LLM. This alignment allows the model to better perceive temporal dependencies and long-range interactions between events and their surrounding contexts. Through comprehensive experiments on several benchmark datasets, it is shown that TPP-TAL delivers substantial improvements in temporal likelihood estimation and event prediction accuracy, highlighting the importance of enhancing temporal awareness in LLMs for continuous-time event modeling. The code is made available at https://github.com/chenlilil/TPP-TAL

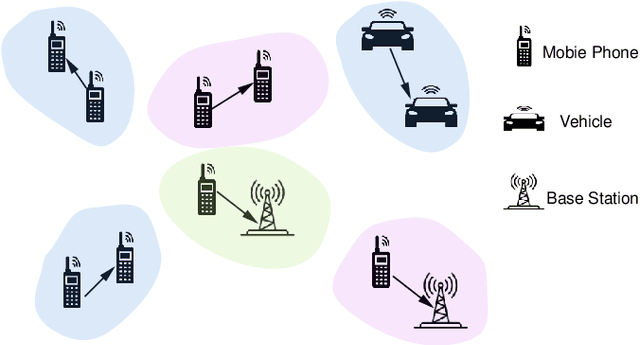

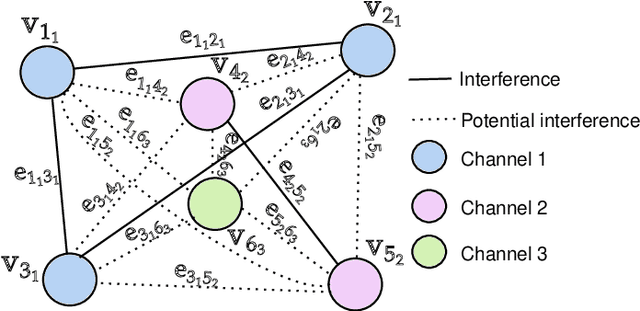

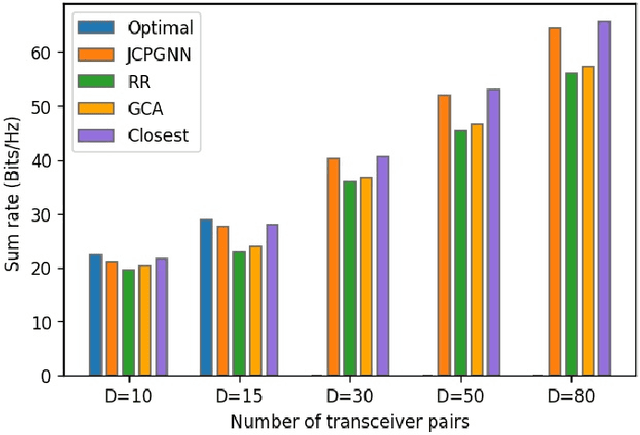

Graph Neural Networks for Resource Allocation in Multi-Channel Wireless Networks

Jun 04, 2025

Abstract:As the number of mobile devices continues to grow, interference has become a major bottleneck in improving data rates in wireless networks. Efficient joint channel and power allocation (JCPA) is crucial for managing interference. In this paper, we first propose an enhanced WMMSE (eWMMSE) algorithm to solve the JCPA problem in multi-channel wireless networks. To reduce the computational complexity of iterative optimization, we further introduce JCPGNN-M, a graph neural network-based solution that enables simultaneous multi-channel allocation for each user. We reformulate the problem as a Lagrangian function, which allows us to enforce the total power constraints systematically. Our solution combines this Lagrangian framework with GNNs and iteratively updates the Lagrange multipliers and the resource allocation scheme. Unlike existing GNN-based methods that limit each user to a single channel, JCPGNN-M supports efficient spectrum reuse and scales well in dense network scenarios. Simulation results show that JCPGNN-M achieves a higher data rate than eWMMSE. Meanwhile, the inference time of JCPGNN-M is much lower than that of eWMMSE, and it generalizes well to larger networks.
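
The abstract does not spell out how the Lagrange multipliers are updated. As a rough illustration only, the sketch below uses the textbook projected dual-ascent step for a single user's total power constraint. The function name `update_multiplier`, the step size, and the per-user form of the constraint are assumptions made for this example, not details taken from the paper.

```python
def update_multiplier(lam, channel_powers, p_max, step_size=0.1):
    """One projected dual-ascent step for the constraint sum(p) <= p_max.

    Assumed, generic update rule (not necessarily the paper's):
    the multiplier grows when the power budget is exceeded and
    shrinks toward zero otherwise; it is clipped to stay non-negative.
    """
    violation = sum(channel_powers) - p_max
    return max(0.0, lam + step_size * violation)


# Budget exceeded (0.6 + 0.7 > 1.0): the multiplier increases.
lam_over = update_multiplier(0.5, [0.6, 0.7], p_max=1.0)

# Budget respected (0.2 + 0.3 < 1.0): the multiplier is clipped at zero.
lam_under = update_multiplier(0.0, [0.2, 0.3], p_max=1.0)
```

In a scheme of this kind, the allocation model would be retrained or re-evaluated between multiplier updates, so that a persistently violated constraint is penalized more heavily over time.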

Maximizing Confidence Alone Improves Reasoning

May 29, 2025

Abstract:Reinforcement learning (RL) has enabled machine learning models to achieve significant advances in many fields. Most recently, RL has empowered frontier language models to solve challenging math, science, and coding problems. However, central to any RL algorithm is the reward function, and reward engineering is a notoriously difficult problem in any domain. In this paper, we propose RENT: Reinforcement Learning via Entropy Minimization -- a fully unsupervised RL method that requires no external reward or ground-truth answers, and instead uses the model's entropy of its underlying distribution as an intrinsic reward. We find that by reinforcing the chains of thought that yield high model confidence on its generated answers, the model improves its reasoning ability. In our experiments, we showcase these improvements on an extensive suite of commonly-used reasoning benchmarks, including GSM8K, MATH500, AMC, AIME, and GPQA, and models of varying sizes from the Qwen and Mistral families. The generality of our unsupervised learning method lends itself to applicability in a wide range of domains where external supervision is unavailable.
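
To make the idea of an entropy-based intrinsic reward concrete, here is a minimal sketch that scores a generated sequence by the negative mean entropy of its per-token distributions. The function names, the use of raw logits as input, and the averaging over all tokens are assumptions for illustration; the abstract does not specify which tokens are scored or how the reward is normalized.

```python
import math


def token_entropy(logits):
    """Shannon entropy (in nats) of softmax(logits) at one token position."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    probs = [e / z for e in exps]
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def confidence_reward(per_token_logits):
    """Negative mean token entropy: larger when the model is more confident."""
    entropies = [token_entropy(logits) for logits in per_token_logits]
    return -sum(entropies) / len(entropies)


# Toy logits over a 3-token vocabulary at two positions (hypothetical values).
confident = [[8.0, 0.0, 0.0], [0.0, 9.0, 0.0]]
uncertain = [[1.0, 1.0, 1.0], [0.5, 0.4, 0.6]]

# The sharply peaked distributions receive the higher reward.
assert confidence_reward(confident) > confidence_reward(uncertain)
```

A reward of this shape needs no labels or external verifier, which is what makes the approach unsupervised: it could be plugged into a standard policy-gradient loop in place of a ground-truth correctness signal.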

Methodological Explainability Evaluation of an Interpretable Deep Learning Model for Post-Hepatectomy Liver Failure Prediction Incorporating Counterfactual Explanations and Layerwise Relevance Propagation: A Prospective In Silico Trial

Aug 07, 2024

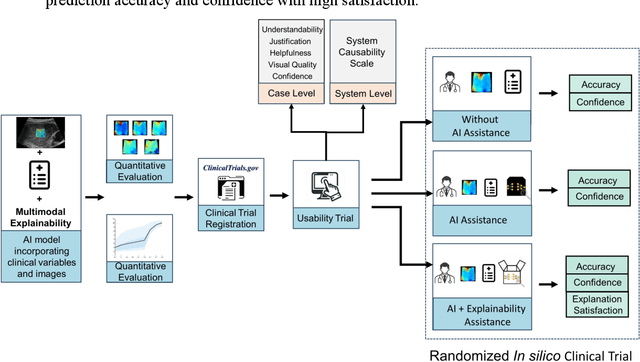

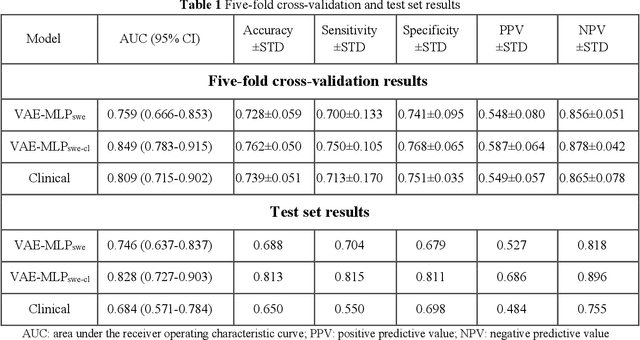

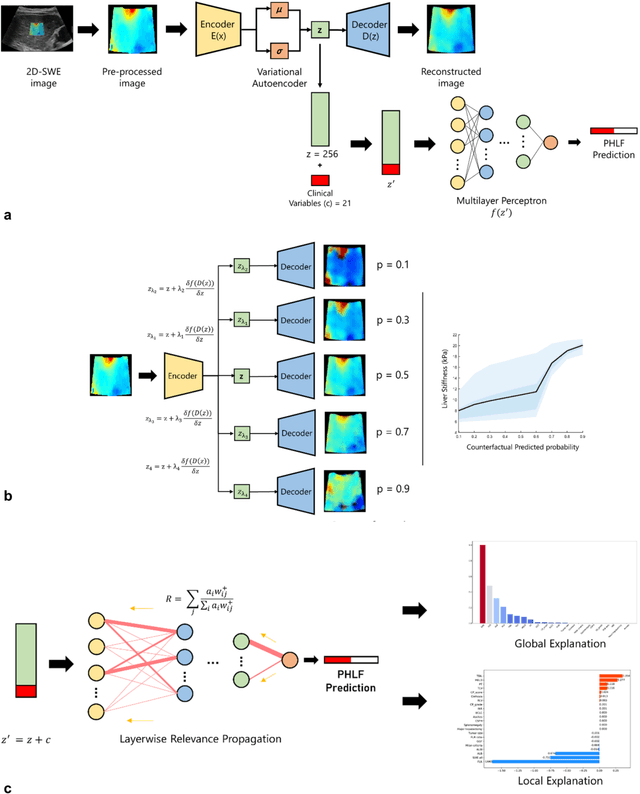

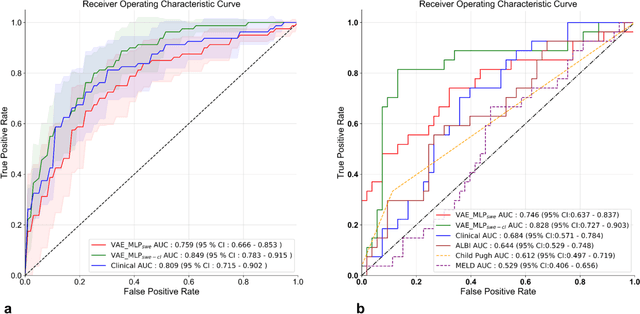

Abstract:Artificial intelligence (AI)-based decision support systems have demonstrated value in predicting post-hepatectomy liver failure (PHLF) in hepatocellular carcinoma (HCC). However, they often lack transparency, and the impact of model explanations on clinicians' decisions has not been thoroughly evaluated. Building on prior research, we developed a variational autoencoder-multilayer perceptron (VAE-MLP) model for preoperative PHLF prediction. This model integrated counterfactuals and layerwise relevance propagation (LRP) to provide insights into its decision-making mechanism. Additionally, we proposed a methodological framework for evaluating the explainability of AI systems. This framework includes qualitative and quantitative assessments of explanations against recognized biomarkers, usability evaluations, and an in silico clinical trial. Our evaluations demonstrated that the model's explanation correlated with established biomarkers and exhibited high usability at both the case and system levels. Furthermore, results from the three-track in silico clinical trial showed that clinicians' prediction accuracy and confidence increased when AI explanations were provided.

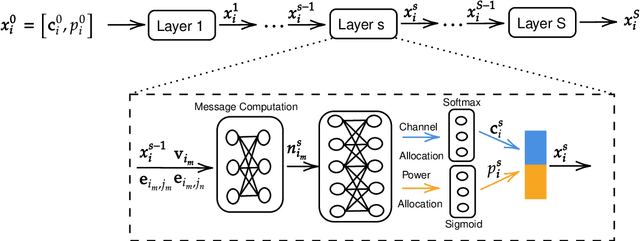

GNN-Based Joint Channel and Power Allocation in Heterogeneous Wireless Networks

Jul 28, 2024

Abstract:The optimal allocation of channels and power resources plays a crucial role in ensuring minimal interference, maximal data rates, and efficient energy utilisation. As a successful approach for tackling resource management problems in wireless networks, Graph Neural Networks (GNNs) have attracted a lot of attention. This article proposes a GNN-based algorithm to address the joint resource allocation problem in heterogeneous wireless networks. Concretely, we model the heterogeneous wireless network as a heterogeneous graph and then propose a graph neural network structure that allocates the available channels and transmit power to maximise the network throughput. Our proposed joint channel and power allocation graph neural network (JCPGNN) comprises a shared message computation layer and two task-specific layers, dedicated to the channel and power allocation tasks, respectively. Comprehensive experiments demonstrate that the proposed algorithm achieves satisfactory performance with higher computational efficiency than traditional optimisation algorithms.

Momentum-Based Federated Reinforcement Learning with Interaction and Communication Efficiency

May 29, 2024

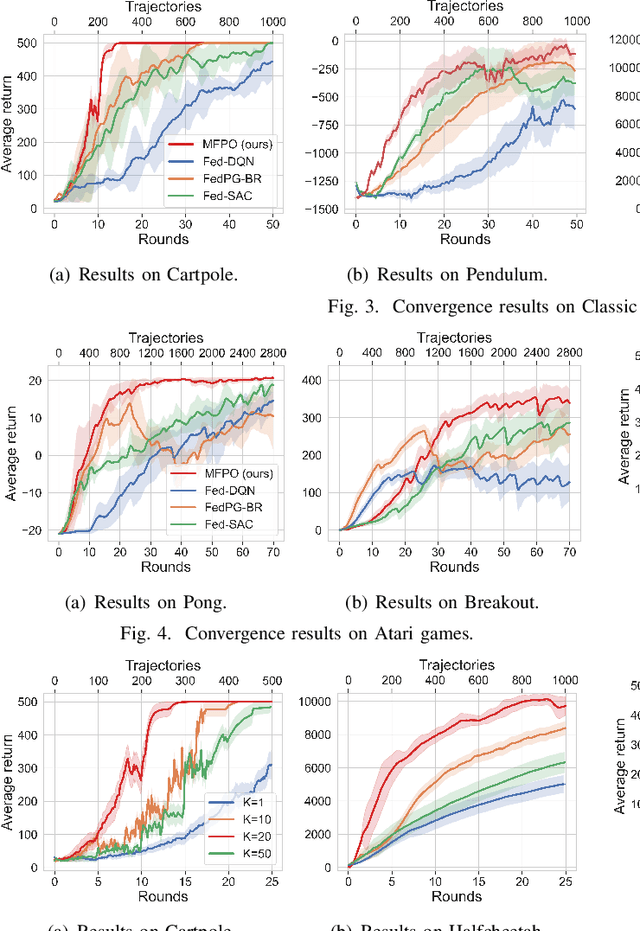

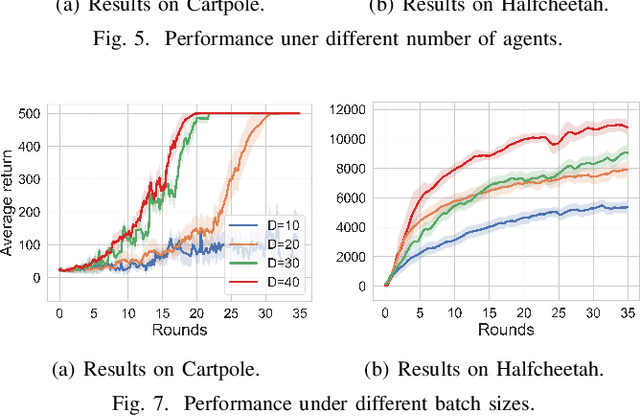

Abstract:Federated Reinforcement Learning (FRL) has garnered increasing attention recently. However, due to the intrinsic spatio-temporal non-stationarity of data distributions, the current approaches typically suffer from high interaction and communication costs. In this paper, we introduce a new FRL algorithm, named $\texttt{MFPO}$, that utilizes momentum, importance sampling, and additional server-side adjustment to control the shift of stochastic policy gradients and enhance the efficiency of data utilization. We prove that, with a proper selection of momentum parameters and interaction frequency, $\texttt{MFPO}$ achieves interaction and communication complexities of $\tilde{\mathcal{O}}(H N^{-1}\epsilon^{-3/2})$ and $\tilde{\mathcal{O}}(\epsilon^{-1})$, respectively ($N$ represents the number of agents), where the interaction complexity achieves linear speedup with the number of agents, and the communication complexity matches the best achievable by existing first-order FL algorithms. Extensive experiments corroborate the substantial performance gains of $\texttt{MFPO}$ over existing methods on a suite of complex and high-dimensional benchmarks.

Tele-Aloha: A Low-budget and High-authenticity Telepresence System Using Sparse RGB Cameras

May 23, 2024

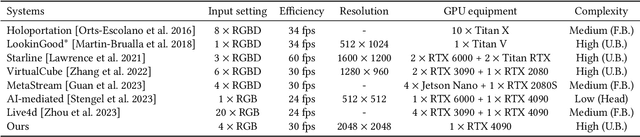

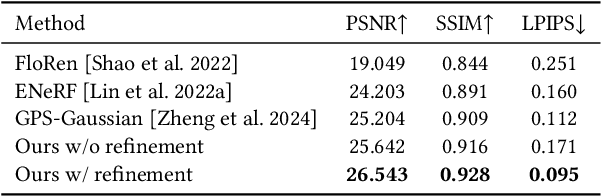

Abstract:In this paper, we present a low-budget and high-authenticity bidirectional telepresence system, Tele-Aloha, targeting peer-to-peer communication scenarios. Compared to previous systems, Tele-Aloha utilizes only four sparse RGB cameras, one consumer-grade GPU, and one autostereoscopic screen to achieve high-resolution (2048x2048), real-time (30 fps), low-latency (less than 150ms) and robust distant communication. As the core of Tele-Aloha, we propose an efficient novel view synthesis algorithm for the upper body. Firstly, we design a cascaded disparity estimator for obtaining a robust geometry cue. Additionally, a neural rasterizer via Gaussian Splatting is introduced to project latent features onto the target view and to decode them into a reduced resolution. Further, given the high-quality captured data, we leverage a weighted blending mechanism to refine the decoded image into the final resolution of 2K. Exploiting a world-leading autostereoscopic display and low-latency iris tracking, users are able to experience a strong three-dimensional sense even without any wearable head-mounted display device. Altogether, our telepresence system demonstrates the sense of co-presence in real-life experiments, inspiring the next generation of communication.

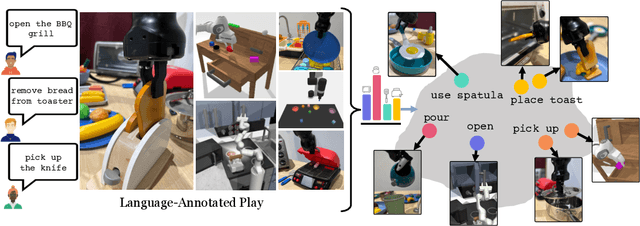

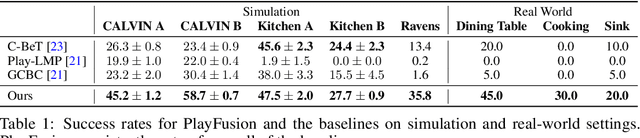

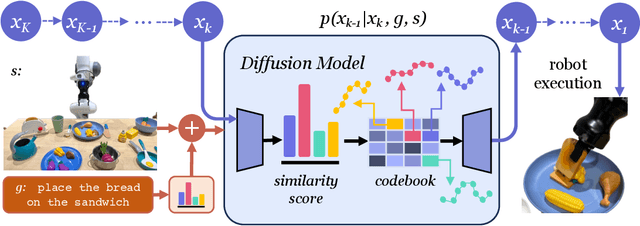

PlayFusion: Skill Acquisition via Diffusion from Language-Annotated Play

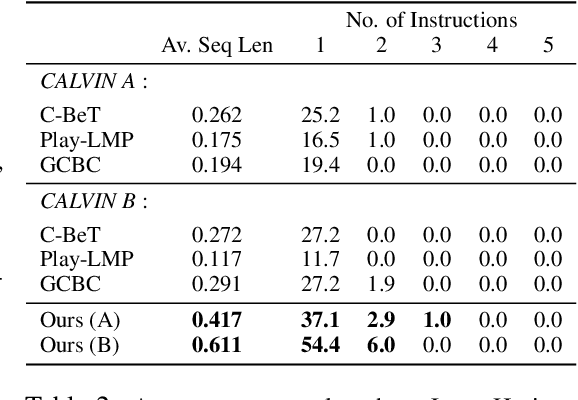

Dec 07, 2023

Abstract:Learning from unstructured and uncurated data has become the dominant paradigm for generative approaches in language and vision. Such unstructured and unguided behavior data, commonly known as play, is also easier to collect in robotics but much more difficult to learn from due to its inherently multimodal, noisy, and suboptimal nature. In this paper, we study this problem of learning goal-directed skill policies from unstructured play data which is labeled with language in hindsight. Specifically, we leverage advances in diffusion models to learn a multi-task diffusion model to extract robotic skills from play data. Using a conditional denoising diffusion process in the space of states and actions, we can gracefully handle the complexity and multimodality of play data and generate diverse and interesting robot behaviors. To make diffusion models more useful for skill learning, we encourage robotic agents to acquire a vocabulary of skills by introducing discrete bottlenecks into the conditional behavior generation process. In our experiments, we demonstrate the effectiveness of our approach across a wide variety of environments in both simulation and the real world. Result visualizations and videos are available at https://play-fusion.github.io
