Jinhua Zhao

RAST-MoE-RL: A Regime-Aware Spatio-Temporal MoE Framework for Deep Reinforcement Learning in Ride-Hailing

Dec 13, 2025

Abstract: Ride-hailing platforms face the challenge of balancing passenger waiting times with overall system efficiency under highly uncertain supply-demand conditions. Adaptive delayed matching creates a trade-off between matching and pickup delays by deciding whether to assign drivers immediately or batch requests. Since outcomes accumulate over long horizons with stochastic dynamics, reinforcement learning (RL) is a suitable framework. However, existing approaches often oversimplify traffic dynamics or use shallow encoders that miss complex spatiotemporal patterns. We introduce the Regime-Aware Spatio-Temporal Mixture-of-Experts (RAST-MoE), which formalizes adaptive delayed matching as a regime-aware MDP equipped with a self-attention MoE encoder. Unlike monolithic networks, our experts specialize automatically, improving representation capacity while maintaining computational efficiency. A physics-informed congestion surrogate preserves realistic density-speed feedback, enabling millions of efficient rollouts, while an adaptive reward scheme guards against pathological strategies. With only 12M parameters, our framework outperforms strong baselines. On real-world Uber trajectory data (San Francisco), it improves total reward by over 13%, reducing average matching and pickup delays by 10% and 15% respectively. It demonstrates robustness across unseen demand regimes and stable training. These findings highlight the potential of MoE-enhanced RL for large-scale decision-making with complex spatiotemporal dynamics.

Reproducibility in the Control of Autonomous Mobility-on-Demand Systems

Jun 09, 2025

Abstract: Autonomous Mobility-on-Demand (AMoD) systems, powered by advances in robotics, control, and Machine Learning (ML), offer a promising paradigm for future urban transportation. AMoD offers fast and personalized travel services by leveraging centralized control of autonomous vehicle fleets to optimize operations and enhance service performance. However, the rapid growth of this field has outpaced the development of standardized practices for evaluating and reporting results, leading to significant challenges in reproducibility. As AMoD control algorithms become increasingly complex and data-driven, a lack of transparency in modeling assumptions, experimental setups, and algorithmic implementation hinders scientific progress and undermines confidence in the results. This paper presents a systematic study of reproducibility in AMoD research. We identify key components across the research pipeline, spanning system modeling, control problems, simulation design, algorithm specification, and evaluation, and analyze common sources of irreproducibility. We survey prevalent practices in the literature, highlight gaps, and propose a structured framework to assess and improve reproducibility. Specifically, concrete guidelines are offered, along with a "reproducibility checklist", to support future work in achieving replicable, comparable, and extensible results. While focused on AMoD, the principles and practices we advocate generalize to a broader class of cyber-physical systems that rely on networked autonomy and data-driven control. This work aims to lay the foundation for a more transparent and reproducible research culture in the design and deployment of intelligent mobility systems.

Position: Simulating Society Requires Simulating Thought

Jun 08, 2025

Abstract: Simulating society with large language models (LLMs), we argue, requires more than generating plausible behavior -- it demands cognitively grounded reasoning that is structured, revisable, and traceable. LLM-based agents are increasingly used to emulate individual and group behavior -- primarily through prompting and supervised fine-tuning. Yet they often lack internal coherence, causal reasoning, and belief traceability -- making them unreliable for analyzing how people reason, deliberate, or respond to interventions. To address this, we present a conceptual modeling paradigm, Generative Minds (GenMinds), which draws from cognitive science to support structured belief representations in generative agents. To evaluate such agents, we introduce the RECAP (REconstructing CAusal Paths) framework, a benchmark designed to assess reasoning fidelity via causal traceability, demographic grounding, and intervention consistency. These contributions advance a broader shift: from surface-level mimicry to generative agents that simulate thought -- not just language -- for social simulations.

Generative AI for Urban Design: A Stepwise Approach Integrating Human Expertise with Multimodal Diffusion Models

May 30, 2025

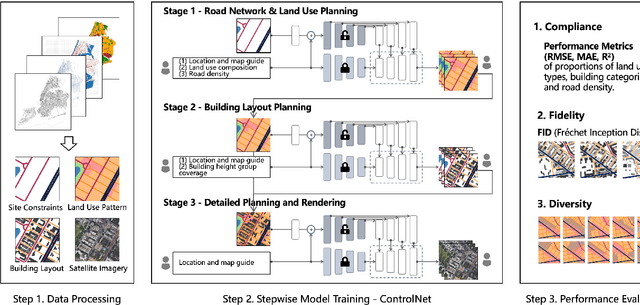

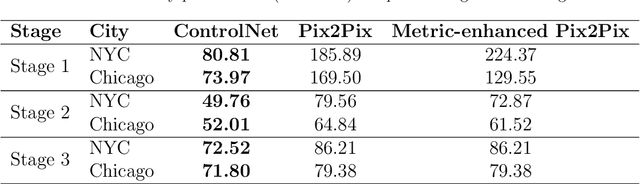

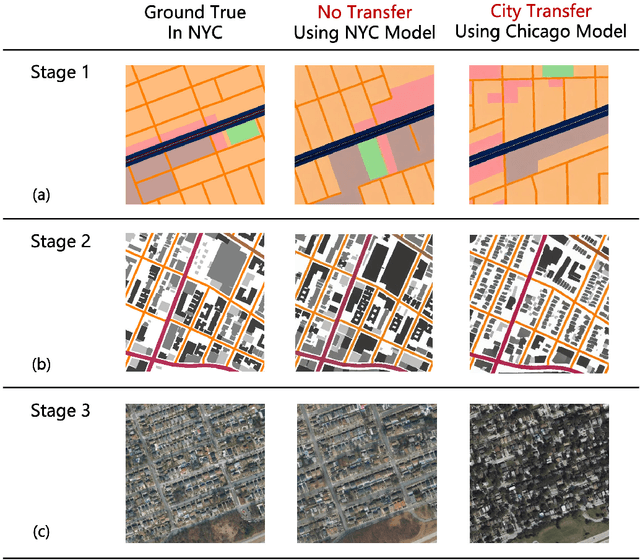

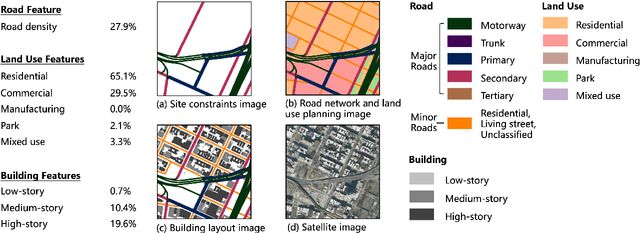

Abstract: Urban design is a multifaceted process that demands careful consideration of site-specific constraints and collaboration among diverse professionals and stakeholders. The advent of generative artificial intelligence (GenAI) offers transformative potential by improving the efficiency of design generation and facilitating the communication of design ideas. However, most existing approaches are not well integrated with human design workflows. They often follow end-to-end pipelines with limited control, overlooking the iterative nature of real-world design. This study proposes a stepwise generative urban design framework that integrates multimodal diffusion models with human expertise to enable more adaptive and controllable design processes. Instead of generating design outcomes in a single end-to-end process, the framework divides the process into three key stages aligned with established urban design workflows: (1) road network and land use planning, (2) building layout planning, and (3) detailed planning and rendering. At each stage, multimodal diffusion models generate preliminary designs based on textual prompts and image-based constraints, which can then be reviewed and refined by human designers. We design an evaluation framework to assess the fidelity, compliance, and diversity of the generated designs. Experiments using data from Chicago and New York City demonstrate that our framework outperforms baseline models and end-to-end approaches across all three dimensions. This study underscores the benefits of multimodal diffusion models and stepwise generation in preserving human control and facilitating iterative refinements, laying the groundwork for human-AI interaction in urban design solutions.

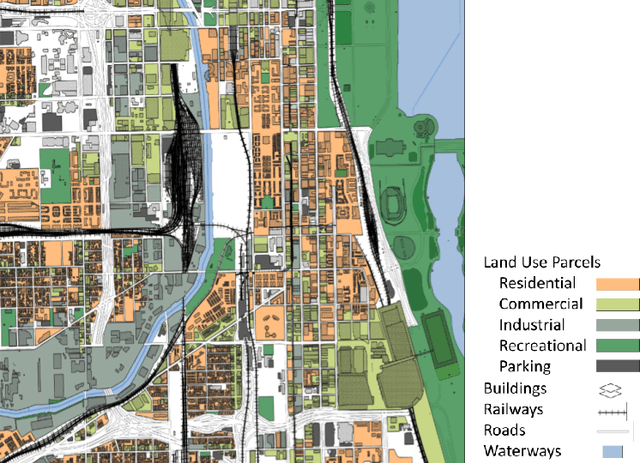

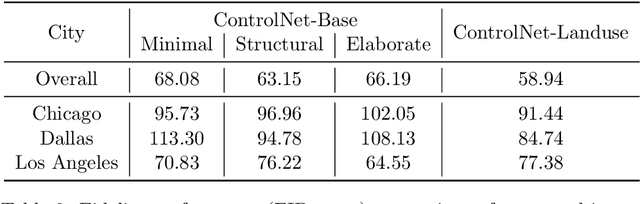

Generative AI for Urban Planning: Synthesizing Satellite Imagery via Diffusion Models

May 13, 2025

Abstract: Generative AI offers new opportunities for automating urban planning by creating site-specific urban layouts and enabling flexible design exploration. However, existing approaches often struggle to produce realistic and practical designs at scale. Therefore, we adapt a state-of-the-art Stable Diffusion model, extended with ControlNet, to generate high-fidelity satellite imagery conditioned on land use descriptions, infrastructure, and natural environments. To overcome data availability limitations, we spatially link satellite imagery with structured land use and constraint information from OpenStreetMap. Using data from three major U.S. cities, we demonstrate that the proposed diffusion model generates realistic and diverse urban landscapes by varying land-use configurations, road networks, and water bodies, facilitating cross-city learning and design diversity. We also systematically evaluate the impacts of varying language prompts and control imagery on the quality of satellite imagery generation. Our model achieves strong FID and KID scores and demonstrates robustness across diverse urban contexts. Qualitative assessments from urban planners and the general public show that generated images align closely with design descriptions and constraints, and are often preferred over real images. This work establishes a benchmark for controlled urban imagery generation and highlights the potential of generative AI as a tool for enhancing planning workflows and public engagement.

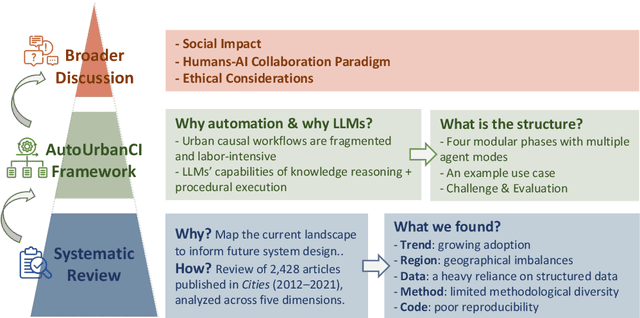

Reimagining Urban Science: Scaling Causal Inference with Large Language Models

Apr 15, 2025

Abstract: Urban causal research is essential for understanding the complex dynamics of cities and informing evidence-based policies. However, it is challenged by the inefficiency and bias of hypothesis generation, barriers to multimodal data complexity, and the methodological fragility of causal experimentation. Recent advances in large language models (LLMs) present an opportunity to rethink how urban causal analysis is conducted. This Perspective examines current urban causal research by analyzing taxonomies that categorize research topics, data sources, and methodological approaches to identify structural gaps. We then introduce an LLM-driven conceptual framework, AutoUrbanCI, composed of four distinct modular agents responsible for hypothesis generation, data engineering, experiment design and execution, and results interpretation with policy recommendations. We propose evaluation criteria for rigor and transparency and reflect on implications for human-AI collaboration, equity, and accountability. We call for a new research agenda that embraces AI-augmented workflows not as replacements for human expertise but as tools to broaden participation, improve reproducibility, and unlock more inclusive forms of urban causal reasoning.

Analyzing sequential activity and travel decisions with interpretable deep inverse reinforcement learning

Mar 17, 2025

Abstract: Travel demand modeling has shifted from aggregated trip-based models to behavior-oriented activity-based models because daily trips are essentially driven by human activities. To analyze the sequential activity-travel decisions, deep inverse reinforcement learning (DIRL) has proven effective in learning the decision mechanisms by approximating a reward function to represent preferences and a policy function to replicate observed behavior using deep neural networks (DNNs). However, most existing research has focused on using DIRL to enhance only prediction accuracy, with limited exploration into interpreting the underlying decision mechanisms guiding sequential decision-making. To address this gap, we introduce an interpretable DIRL framework for analyzing activity-travel decision processes, bridging the gap between data-driven machine learning and theory-driven behavioral models. Our proposed framework adapts an adversarial IRL approach to infer the reward and policy functions of activity-travel behavior. The policy function is interpreted through a surrogate interpretable model based on choice probabilities from the policy function, while the reward function is interpreted by deriving both short-term rewards and long-term returns for various activity-travel patterns. Our analysis of real-world travel survey data reveals promising results in two key areas: (i) behavioral pattern insights from the policy function, highlighting critical factors in decision-making and variations among socio-demographic groups, and (ii) behavioral preference insights from the reward function, indicating the utility individuals gain from specific activity sequences.

Mitigating Spatial Disparity in Urban Prediction Using Residual-Aware Spatiotemporal Graph Neural Networks: A Chicago Case Study

Jan 20, 2025

Abstract: Urban prediction tasks, such as forecasting traffic flow, temperature, and crime rates, are crucial for efficient urban planning and management. However, existing Spatiotemporal Graph Neural Networks (ST-GNNs) often rely solely on accuracy, overlooking spatial and demographic disparities in their predictions. This oversight can lead to imbalanced resource allocation and exacerbate existing inequities in urban areas. This study introduces a Residual-Aware Attention (RAA) Block and an equality-enhancing loss function to address these disparities. By adapting the adjacency matrix during training and incorporating spatial disparity metrics, our approach aims to reduce local segregation of residuals and errors. We applied our methodology to urban prediction tasks in Chicago, utilizing a travel demand dataset as an example. Our model achieved a significant 48% improvement in fairness metrics with only a 9% increase in error metrics. Spatial analysis of residual distributions revealed that models with RAA Blocks produced more equitable prediction results, particularly by reducing errors clustered in central regions. Attention maps demonstrated the model's ability to dynamically adjust focus, leading to more balanced predictions. Case studies of various community areas in Chicago further illustrated the effectiveness of our approach in addressing spatial and demographic disparities, supporting more balanced and equitable urban planning and policy-making.
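The idea of "local segregation of residuals" can be made concrete with a small sketch. The abstract does not name the spatial disparity metric it uses, so the example below substitutes Moran's I, a standard measure of spatial autocorrelation, purely as an illustrative assumption. The function name `morans_i`, the four-zone path graph, and the residual values are all hypothetical.

```python
def morans_i(values, weights):
    """Moran's I for `values` on a graph with spatial weight matrix `weights`.

    Positive results mean similar values sit next to each other (clustered
    residuals); negative results mean neighbours tend to differ.
    """
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    total_weight = sum(sum(row) for row in weights)
    cross = sum(
        weights[i][j] * dev[i] * dev[j] for i in range(n) for j in range(n)
    )
    variance_sum = sum(d * d for d in dev)
    return (n / total_weight) * (cross / variance_sum)


# Hypothetical example: four zones along a line, neighbours share an edge.
line_weights = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]

clustered = [1.0, 1.0, -1.0, -1.0]    # over-prediction concentrated at one end
alternating = [1.0, -1.0, 1.0, -1.0]  # errors spread evenly across neighbours

i_clustered = morans_i(clustered, line_weights)
i_alternating = morans_i(alternating, line_weights)
print(i_clustered, i_alternating)
```

Under this stand-in metric, the clustered residual pattern scores higher than the alternating one, which is the kind of spatial concentration of error that a disparity-aware loss would aim to penalize.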

Virtual Nodes Improve Long-term Traffic Prediction

Jan 17, 2025

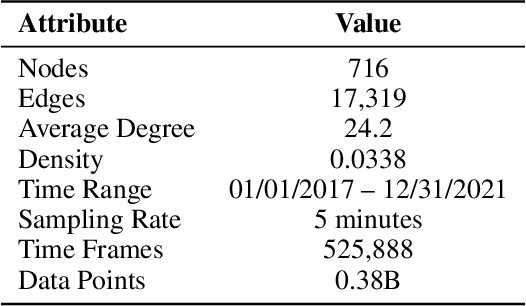

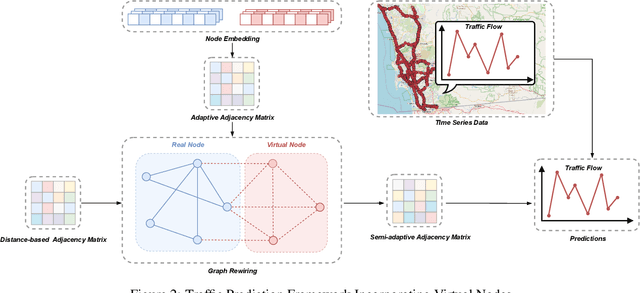

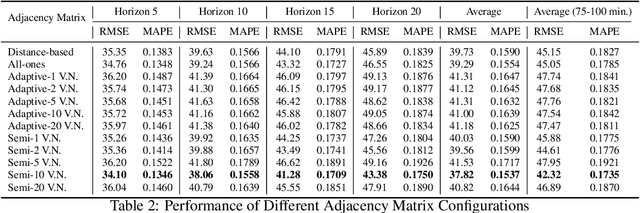

Abstract: Effective traffic prediction is a cornerstone of intelligent transportation systems, enabling precise forecasts of traffic flow, speed, and congestion. While traditional spatio-temporal graph neural networks (ST-GNNs) have achieved notable success in short-term traffic forecasting, their performance in long-term predictions remains limited. This challenge arises from the over-squashing problem, where bottlenecks and limited receptive fields restrict information flow and hinder the modeling of global dependencies. To address these challenges, this study introduces a novel framework that incorporates virtual nodes, which are additional nodes added to the graph and connected to existing nodes, in order to aggregate information across the entire graph within a single GNN layer. Our proposed model incorporates virtual nodes by constructing a semi-adaptive adjacency matrix. This matrix integrates distance-based and adaptive adjacency matrices, allowing the model to leverage geographical information while also learning task-specific features from data. Experimental results demonstrate that the inclusion of virtual nodes significantly enhances long-term prediction accuracy while also improving layer-wise sensitivity to mitigate the over-squashing problem. Virtual nodes also offer enhanced explainability by focusing on key intersections and high-traffic areas, as shown by the visualization of their adjacency matrix weights on road network heat maps. Our advanced approach enhances the understanding and management of urban traffic systems, making it particularly well-suited for real-world applications.
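A minimal sketch of why a virtual node shortens information paths, under simplifying assumptions: the helper names `add_virtual_nodes` and `hop_distance` and the six-node path graph are hypothetical, and the virtual-node edges use a fixed weight, whereas the abstract describes a semi-adaptive adjacency matrix whose adaptive part is learned from data.

```python
from collections import deque


def add_virtual_nodes(adj, num_virtual=1, weight=1.0):
    """Return a new adjacency matrix with extra nodes linked to every original node."""
    n = len(adj)
    size = n + num_virtual
    out = [[0.0] * size for _ in range(size)]
    # Copy the original (e.g. distance-based) adjacency block unchanged.
    for i in range(n):
        for j in range(n):
            out[i][j] = adj[i][j]
    # Connect each virtual node to all original nodes, in both directions.
    for v in range(n, size):
        for i in range(n):
            out[i][v] = weight
            out[v][i] = weight
    return out


def hop_distance(adj, src, dst):
    """Breadth-first hop count between two nodes; None if unreachable."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt, w in enumerate(adj[node]):
            if w > 0 and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Hypothetical road graph: six sensors along a single corridor (0-1-2-3-4-5).
n = 6
path = [[1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]
augmented = add_virtual_nodes(path)

print(hop_distance(path, 0, 5))       # 5 hops along the corridor
print(hop_distance(augmented, 0, 5))  # 2 hops via the virtual node
```

In the augmented graph the virtual node receives messages from every sensor in one layer, so the two ends of the corridor are two hops apart instead of five, which is the bottleneck relief the abstract attributes to virtual nodes.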

Modular Conversational Agents for Surveys and Interviews

Dec 22, 2024

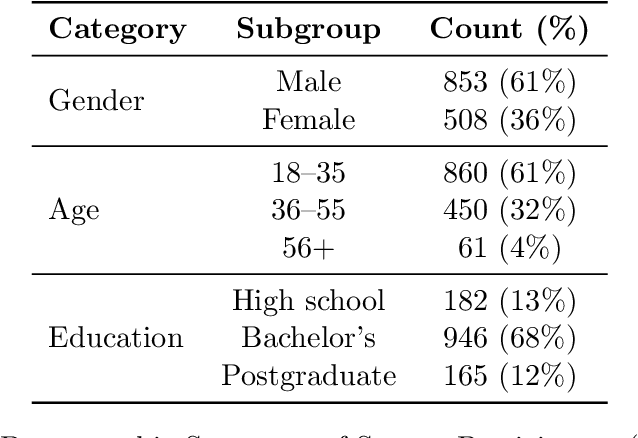

Abstract: Surveys and interviews (structured, semi-structured, or unstructured) are widely used for collecting insights on emerging or hypothetical scenarios. Traditional human-led methods often face challenges related to cost, scalability, and consistency. Recently, various domains have begun to explore the use of conversational agents (chatbots) powered by large language models (LLMs). However, as public investments and policies on infrastructure and services often involve substantial public stakes and environmental risks, there is a need for a rigorous, transparent, privacy-preserving, and cost-efficient development framework tailored for such major decision-making processes. This paper addresses this gap by introducing a modular approach and its resultant parameterized process for designing conversational agents. We detail the system architecture, integrating engineered prompts, specialized knowledge bases, and customizable, goal-oriented conversational logic in the proposed approach. We demonstrate the adaptability, generalizability, and efficacy of our modular approach through three empirical studies: (1) travel preference surveys, highlighting multimodal (voice, text, and image generation) capabilities; (2) public opinion elicitation on a newly constructed, novel infrastructure project, showcasing question customization and multilingual (English and French) capabilities; and (3) transportation expert consultation about future transportation systems, highlighting real-time clarification-request capabilities for open-ended questions, resilience in handling erratic inputs, and efficient transcript post-processing. The results show the effectiveness of this modular approach and how it addresses key ethical, privacy, security, and token consumption concerns, setting the stage for the next generation of surveys and interviews.
