Ao Qu

Position: Simulating Society Requires Simulating Thought

Jun 08, 2025

Abstract:Simulating society with large language models (LLMs), we argue, requires more than generating plausible behavior -- it demands cognitively grounded reasoning that is structured, revisable, and traceable. LLM-based agents are increasingly used to emulate individual and group behavior -- primarily through prompting and supervised fine-tuning. Yet they often lack internal coherence, causal reasoning, and belief traceability -- making them unreliable for analyzing how people reason, deliberate, or respond to interventions. To address this, we present a conceptual modeling paradigm, Generative Minds (GenMinds), which draws from cognitive science to support structured belief representations in generative agents. To evaluate such agents, we introduce the RECAP (REconstructing CAusal Paths) framework, a benchmark designed to assess reasoning fidelity via causal traceability, demographic grounding, and intervention consistency. These contributions advance a broader shift: from surface-level mimicry to generative agents that simulate thought -- not just language -- for social simulations.

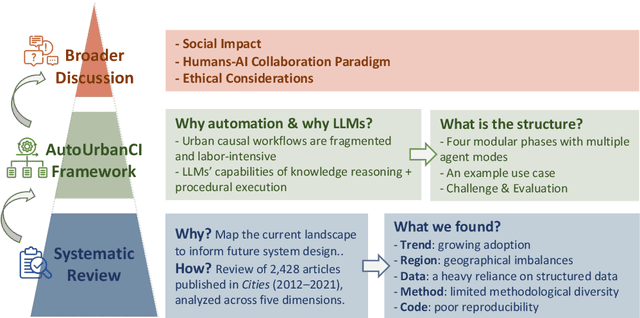

Reimagining Urban Science: Scaling Causal Inference with Large Language Models

Apr 15, 2025

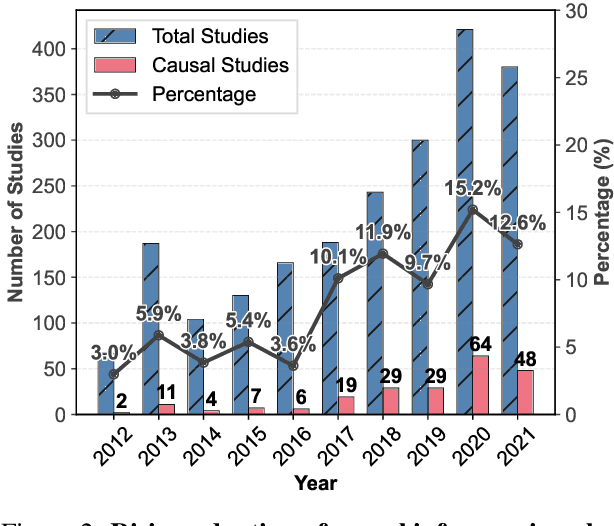

Abstract:Urban causal research is essential for understanding the complex dynamics of cities and informing evidence-based policies. However, it is challenged by the inefficiency and bias of hypothesis generation, barriers to multimodal data complexity, and the methodological fragility of causal experimentation. Recent advances in large language models (LLMs) present an opportunity to rethink how urban causal analysis is conducted. This Perspective examines current urban causal research by analyzing taxonomies that categorize research topics, data sources, and methodological approaches to identify structural gaps. We then introduce an LLM-driven conceptual framework, AutoUrbanCI, composed of four distinct modular agents responsible for hypothesis generation, data engineering, experiment design and execution, and results interpretation with policy recommendations. We propose evaluation criteria for rigor and transparency and reflect on implications for human-AI collaboration, equity, and accountability. We call for a new research agenda that embraces AI-augmented workflows not as replacements for human expertise but as tools to broaden participation, improve reproducibility, and unlock more inclusive forms of urban causal reasoning.

Sparkle: Mastering Basic Spatial Capabilities in Vision Language Models Elicits Generalization to Composite Spatial Reasoning

Oct 21, 2024

Abstract:Vision language models (VLMs) have demonstrated impressive performance across a wide range of downstream tasks. However, their proficiency in spatial reasoning remains limited, despite its crucial role in tasks involving navigation and interaction with physical environments. Specifically, much of the spatial reasoning in these tasks occurs in two-dimensional (2D) environments, and our evaluation reveals that state-of-the-art VLMs frequently generate implausible and incorrect responses to composite spatial reasoning problems, including simple pathfinding tasks that humans can solve effortlessly at a glance. To address this, we explore an effective approach to enhance 2D spatial reasoning within VLMs by training the model on basic spatial capabilities. We begin by disentangling the key components of 2D spatial reasoning: direction comprehension, distance estimation, and localization. Our central hypothesis is that mastering these basic spatial capabilities can significantly enhance a model's performance on composite spatial tasks requiring advanced spatial understanding and combinatorial problem-solving. To investigate this hypothesis, we introduce Sparkle, a framework that fine-tunes VLMs on these three basic spatial capabilities by synthetic data generation and targeted supervision to form an instruction dataset for each capability. Our experiments demonstrate that VLMs fine-tuned with Sparkle achieve significant performance gains, not only in the basic tasks themselves but also in generalizing to composite and out-of-distribution spatial reasoning tasks (e.g., improving from 13.5% to 40.0% on the shortest path problem). These findings underscore the effectiveness of mastering basic spatial capabilities in enhancing composite spatial problem-solving, offering insights for improving VLMs' spatial reasoning capabilities.

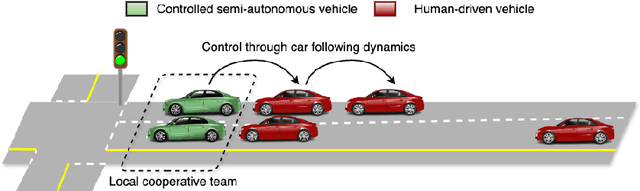

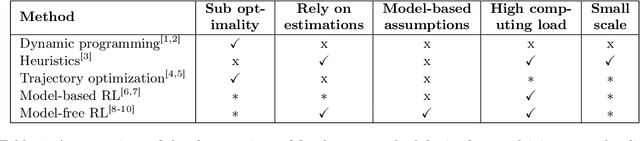

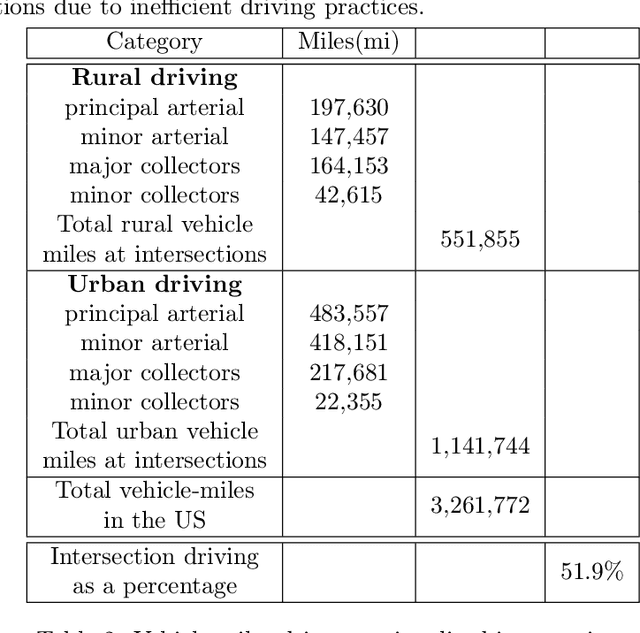

IntersectionZoo: Eco-driving for Benchmarking Multi-Agent Contextual Reinforcement Learning

Oct 19, 2024

Abstract:Despite the popularity of multi-agent reinforcement learning (RL) in simulated and two-player applications, its success in messy real-world applications has been limited. A key challenge lies in its generalizability across problem variations, a common necessity for many real-world problems. Contextual reinforcement learning (CRL) formalizes learning policies that generalize across problem variations. However, the lack of standardized benchmarks for multi-agent CRL has hindered progress in the field. Such benchmarks are desired to be based on real-world applications to naturally capture the many open challenges of real-world problems that affect generalization. To bridge this gap, we propose IntersectionZoo, a comprehensive benchmark suite for multi-agent CRL through the real-world application of cooperative eco-driving in urban road networks. The task of cooperative eco-driving is to control a fleet of vehicles to reduce fleet-level vehicular emissions. By grounding IntersectionZoo in a real-world application, we naturally capture real-world problem characteristics, such as partial observability and multiple competing objectives. IntersectionZoo is built on data-informed simulations of 16,334 signalized intersections derived from 10 major US cities, modeled in an open-source industry-grade microscopic traffic simulator. By modeling factors affecting vehicular exhaust emissions (e.g., temperature, road conditions, travel demand), IntersectionZoo provides one million data-driven traffic scenarios. Using these traffic scenarios, we benchmark popular multi-agent RL and human-like driving algorithms and demonstrate that the popular multi-agent RL algorithms struggle to generalize in CRL settings.

Mitigating Metropolitan Carbon Emissions with Dynamic Eco-driving at Scale

Aug 10, 2024

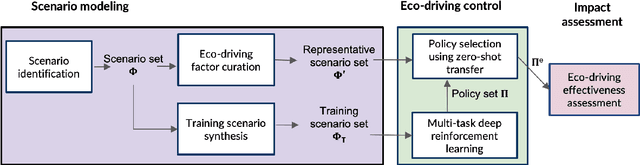

Abstract:The sheer scale and diversity of transportation make it a formidable sector to decarbonize. Here, we consider an emerging opportunity to reduce carbon emissions: the growing adoption of semi-autonomous vehicles, which can be programmed to mitigate stop-and-go traffic through intelligent speed commands and, thus, reduce emissions. But would such dynamic eco-driving move the needle on climate change? A comprehensive impact analysis has been out of reach due to the vast array of traffic scenarios and the complexity of vehicle emissions. We address this challenge with large-scale scenario modeling efforts and by using multi-task deep reinforcement learning with a carefully designed network decomposition strategy. We perform an in-depth prospective impact assessment of dynamic eco-driving at 6,011 signalized intersections across three major US metropolitan cities, simulating a million traffic scenarios. Overall, we find that vehicle trajectories optimized for emissions can cut city-wide intersection carbon emissions by 11-22%, without harming throughput or safety, and with reasonable assumptions, equivalent to the national emissions of Israel and Nigeria, respectively. We find that 10% eco-driving adoption yields 25%-50% of the total reduction, and nearly 70% of the benefits come from 20% of intersections, suggesting near-term implementation pathways. However, the composition of this high-impact subset of intersections varies considerably across different adoption levels, with minimal overlap, calling for careful strategic planning for eco-driving deployments. Moreover, the impact of eco-driving, when considered jointly with projections of vehicle electrification and hybrid vehicle adoption, remains significant. More broadly, this work paves the way for large-scale analysis of traffic externalities, such as time, safety, and air quality, and the potential impact of solution strategies.

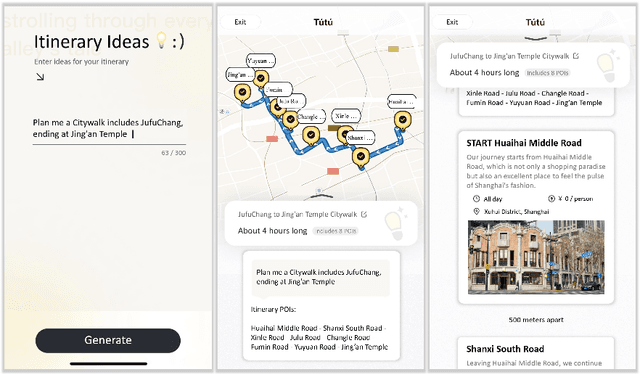

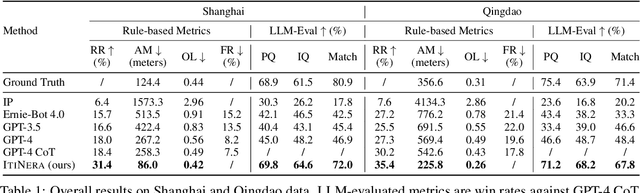

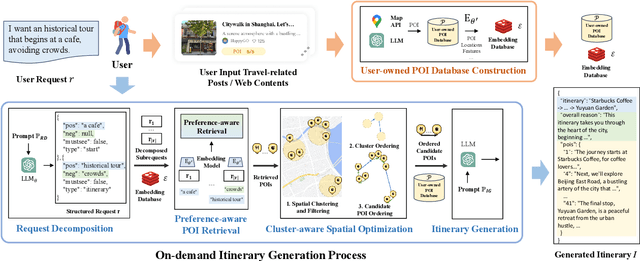

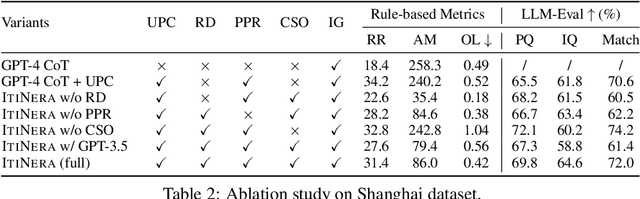

Synergizing Spatial Optimization with Large Language Models for Open-Domain Urban Itinerary Planning

Feb 11, 2024

Abstract:In this paper, we propose, for the first time, the task of Open-domain Urban Itinerary Planning (OUIP) for citywalk, which directly generates itineraries based on users' requests described in natural language. OUIP is different from conventional itinerary planning, which limits users from expressing more detailed needs and hinders true personalization. Recently, large language models (LLMs) have shown potential in handling diverse tasks. However, due to non-real-time information, incomplete knowledge, and insufficient spatial awareness, they are unable to independently deliver a satisfactory user experience in OUIP. Given this, we present ItiNera, an OUIP system that synergizes spatial optimization with LLMs to provide services that customize urban itineraries based on users' needs. Specifically, we develop an LLM-based pipeline for extracting and updating POI features to create a user-owned personalized POI database. For each user request, we leverage an LLM in cooperation with an embedding-based module for retrieving candidate POIs from the user's POI database. Then, a spatial optimization module is used to order these POIs, followed by an LLM crafting a personalized, spatially coherent itinerary. To the best of our knowledge, this study marks the first integration of LLMs to innovate itinerary planning solutions. Extensive experiments on offline datasets and online subjective evaluation have demonstrated the capacities of our system to deliver more responsive and spatially coherent itineraries than current LLM-based solutions. Our system has been deployed in production at the TuTu online travel service and has attracted thousands of users for their urban travel planning.

SEIP: Simulation-based Design and Evaluation of Infrastructure-based Collective Perception

May 29, 2023

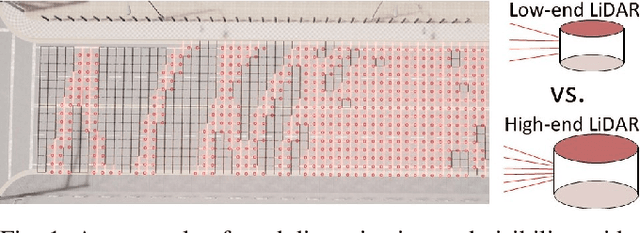

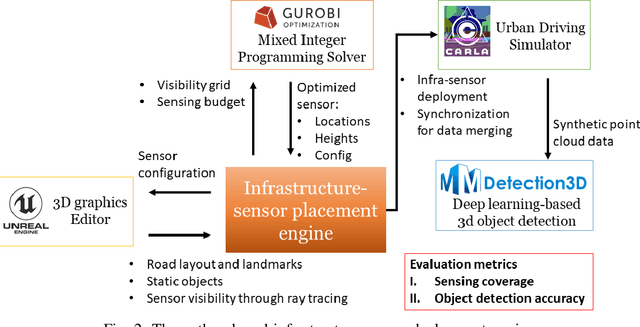

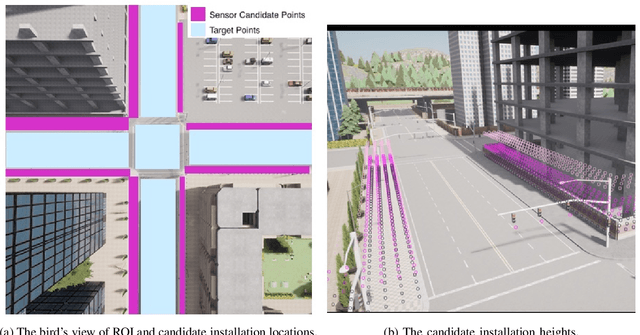

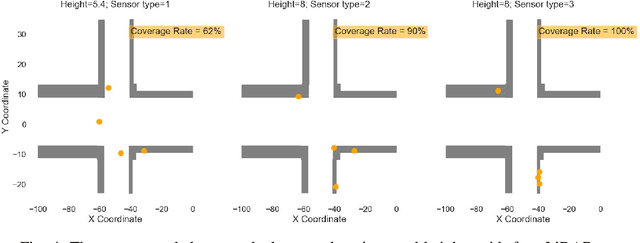

Abstract:Infrastructure-based collective perception, which entails the real-time sharing and merging of sensing data from different roadside sensors for object detection, has shown promise in preventing occlusions for traffic safety and efficiency. However, its adoption has been hindered by the lack of guidance for roadside sensor placement and high costs for ex-post evaluation. For infrastructure projects with limited budgets, the ex-ante evaluation for optimizing the configurations and placements of infrastructure sensors is crucial to minimize occlusion risks at a low cost. This paper presents algorithms and simulation tools to support the ex-ante evaluation of the cost-performance tradeoff in infrastructure sensor deployment for collective perception. More specifically, the deployment of infrastructure sensors is framed as an integer programming problem that can be efficiently solved in polynomial time, achieving near-optimal results with the use of certain heuristic algorithms. The solutions provide guidance on deciding sensor locations, installation heights, and configurations to achieve the balance between procurement cost, physical constraints for installation, and sensing coverage. Additionally, we implement the proposed algorithms in a simulation engine. This allows us to evaluate the effectiveness of each sensor deployment solution through the lens of object detection. The application of the proposed methods was illustrated through a case study on traffic monitoring by using infrastructure LiDARs. Preliminary findings indicate that when working with a tight sensing budget, it is possible that the incremental benefit derived from integrating additional low-resolution LiDARs could surpass that of incorporating more high-resolution ones. The results reinforce the necessity of investigating the cost-performance tradeoff.

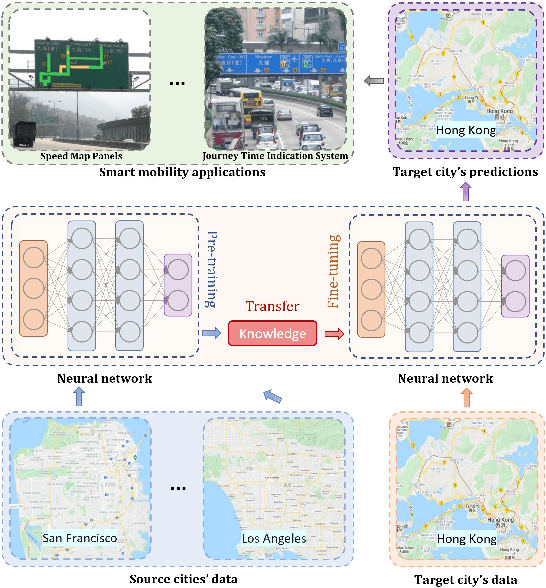

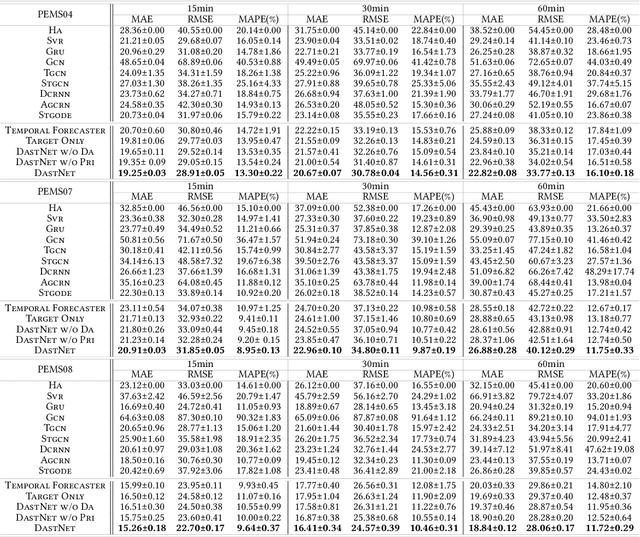

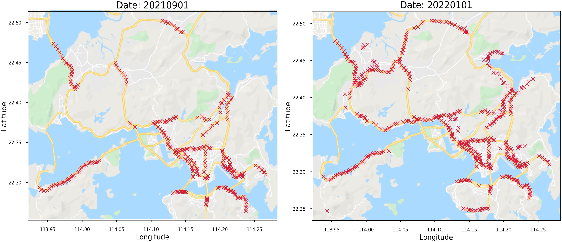

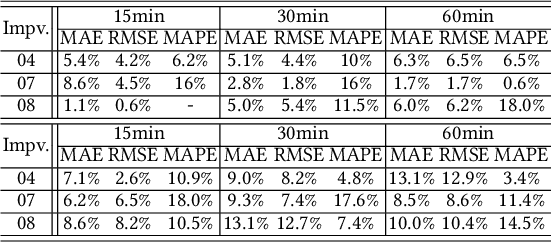

Domain Adversarial Spatial-Temporal Network: A Transferable Framework for Short-term Traffic Forecasting across Cities

Feb 08, 2022

Abstract:Accurate real-time traffic forecasting is critical for intelligent transportation systems (ITS) and serves as the cornerstone of various smart mobility applications. Though this research area is dominated by deep learning, recent studies indicate that the accuracy improvement by developing new model structures is becoming marginal. Instead, we envision that the improvement can be achieved by transferring the "forecasting-related knowledge" across cities with different data distributions and network topologies. To this end, this paper proposes a novel transferable traffic forecasting framework: Domain Adversarial Spatial-Temporal Network (DASTNet). DASTNet is pre-trained on multiple source networks and fine-tuned with the target network's traffic data. Specifically, we leverage graph representation learning and adversarial domain adaptation techniques to learn domain-invariant node embeddings, which are further incorporated to model the temporal traffic data. To the best of our knowledge, we are the first to employ adversarial multi-domain adaptation for network-wide traffic forecasting problems. DASTNet consistently outperforms all state-of-the-art baseline methods on three benchmark datasets. The trained DASTNet is applied to Hong Kong's new traffic detectors, and accurate traffic predictions can be delivered immediately (within one day) when the detector is available. Overall, this study suggests an alternative to enhance the traffic forecasting methods and provides practical implications for cities lacking historical traffic data.

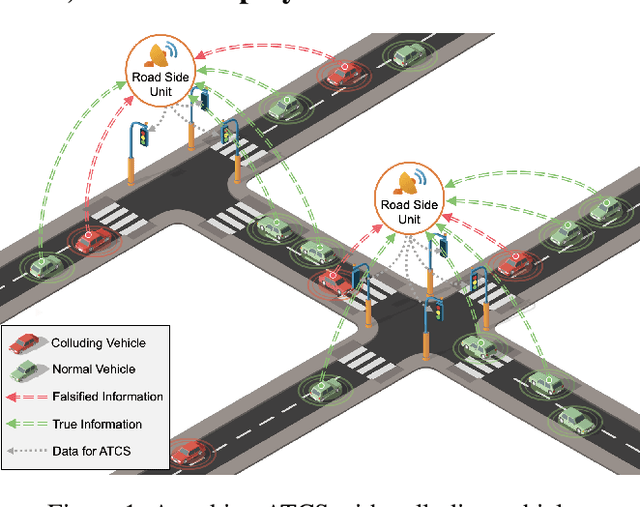

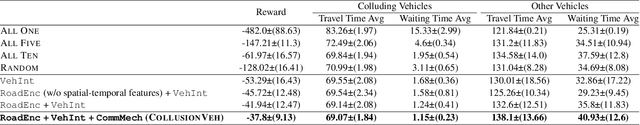

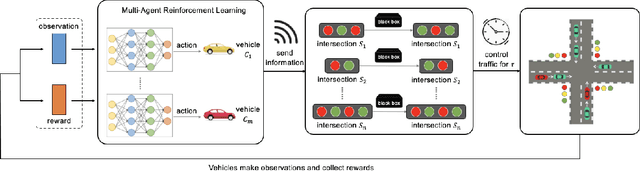

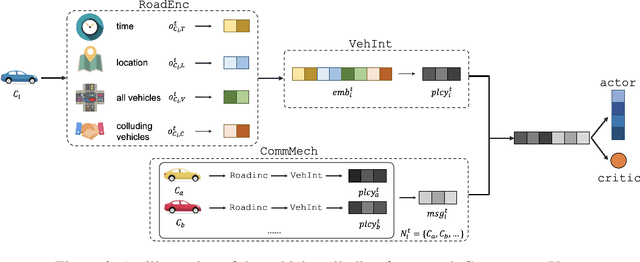

Attacking Deep Reinforcement Learning-Based Traffic Signal Control Systems with Colluding Vehicles

Nov 04, 2021

Abstract:The rapid advancements of Internet of Things (IoT) and artificial intelligence (AI) have catalyzed the development of adaptive traffic signal control systems (ATCS) for smart cities. In particular, deep reinforcement learning (DRL) methods produce state-of-the-art performance and have great potential for practical applications. In the existing DRL-based ATCS, the controlled signals collect traffic state information from nearby vehicles, and then optimal actions (e.g., switching phases) can be determined based on the collected information. The DRL models fully "trust" that vehicles are sending the true information to the signals, making the ATCS vulnerable to adversarial attacks with falsified information. In view of this, this paper formulates, for the first time, a novel task in which a group of vehicles can cooperatively send falsified information to "cheat" DRL-based ATCS in order to save their total travel time. To solve the proposed task, we develop CollusionVeh, a generic and effective vehicle-colluding framework composed of a road situation encoder, a vehicle interpreter, and a communication mechanism. We employ our method to attack established DRL-based ATCS and demonstrate that the total travel time for the colluding vehicles can be significantly reduced with a reasonable number of learning episodes, and the colluding effect will decrease if the number of colluding vehicles increases. Additionally, insights and suggestions for the real-world deployment of DRL-based ATCS are provided. The research outcomes could help improve the reliability and robustness of the ATCS and better protect the smart mobility systems.

Streaming data preprocessing via online tensor recovery for large environmental sensor networks

Sep 01, 2021

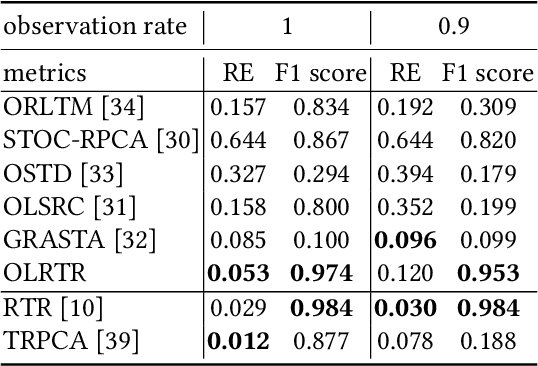

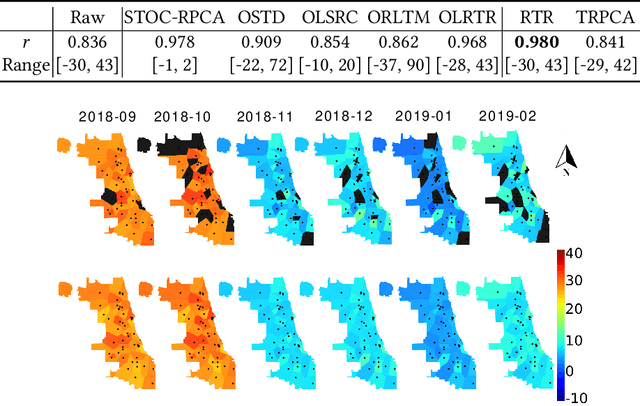

Abstract:Measuring the built and natural environment at a fine-grained scale is now possible with low-cost urban environmental sensor networks. However, fine-grained city-scale data analysis is complicated by tedious data cleaning including removing outliers and imputing missing data. While many methods exist to automatically correct anomalies and impute missing entries, challenges still exist on data with large spatial-temporal scales and shifting patterns. To address these challenges, we propose an online robust tensor recovery (OLRTR) method to preprocess streaming high-dimensional urban environmental datasets. A small-sized dictionary that captures the underlying patterns of the data is computed and constantly updated with new data. OLRTR enables online recovery for large-scale sensor networks that provide continuous data streams, with a lower computational memory usage compared to offline batch counterparts. In addition, we formulate the objective function so that OLRTR can detect structured outliers, such as faulty readings over a long period of time. We validate OLRTR on a synthetically degraded National Oceanic and Atmospheric Administration temperature dataset, with a recovery error of 0.05, and apply it to the Array of Things city-scale sensor network in Chicago, IL, showing superior results compared with several established online and batch-based low rank decomposition methods.
