Yanbing Wang

Discovering the Precursors of Traffic Breakdowns Using Spatiotemporal Graph Attribution Networks

Apr 23, 2025

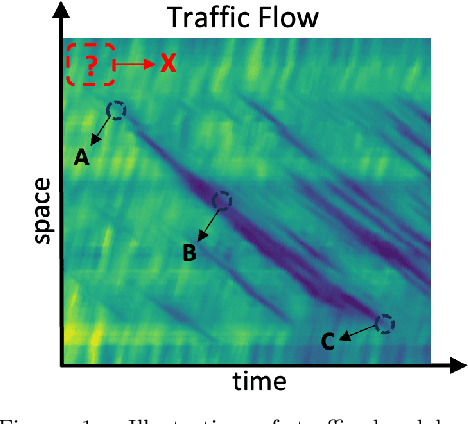

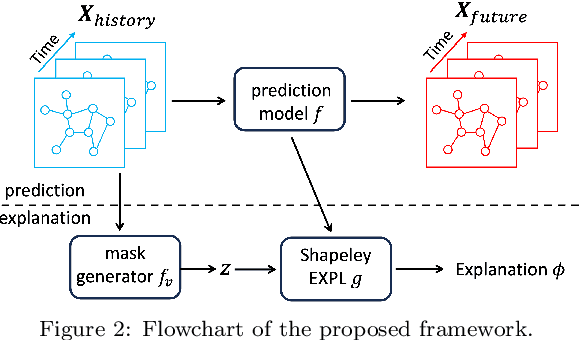

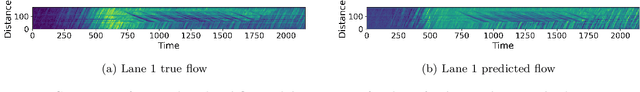

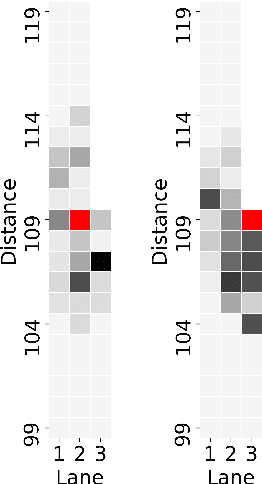

Abstract: Understanding and predicting the precursors of traffic breakdowns is critical for improving road safety and traffic flow management. This paper presents a novel approach combining spatiotemporal graph neural networks (ST-GNNs) with Shapley values to identify and interpret traffic breakdown precursors. By extending Shapley explanation methods to a spatiotemporal setting, our proposed method bridges the gap between black-box neural network predictions and interpretable causes. We demonstrate the method on the Interstate-24 data, and identify that road topology and abrupt braking are major factors that lead to traffic breakdowns.
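
As a rough illustration of the Shapley values mentioned in the abstract, the sketch below computes exact Shapley attributions for a tiny cooperative game. It is not the paper's spatiotemporal method: the function `shapley_values`, the toy scoring function `toy_risk`, and the feature names are all hypothetical, chosen only to show how a prediction can be split among its inputs.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values for a small cooperative game.

    players: list of hashable feature names.
    value:   function mapping a frozenset of players to a number.
    """
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            # Weight of a coalition of size k that does not contain p.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of p when it joins coalition s.
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


def toy_risk(coalition):
    """Hypothetical breakdown-risk score; not taken from the paper."""
    score = 0.0
    if "braking" in coalition:
        score += 0.5
    if "on_ramp" in coalition:
        score += 0.25
    if "braking" in coalition and "on_ramp" in coalition:
        score += 0.25  # interaction term, split evenly by Shapley
    return score


phi = shapley_values(["braking", "on_ramp", "weather"], toy_risk)
for name, contribution in phi.items():
    print(f"{name}: {contribution:.3f}")
```

The attributions sum to the score of the full coalition, which is the efficiency property that makes Shapley values attractive for explanation. Exact enumeration is exponential in the number of inputs, so a real graph model would need an approximation.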

Virtual trajectories for I-24 MOTION: data and tools

Nov 17, 2023

Abstract: This article introduces a new virtual trajectory dataset derived from the I-24 MOTION INCEPTION v1.0.0 dataset to address challenges in analyzing large but noisy trajectory datasets. Building on the concept of virtual trajectories, we provide a Python implementation to generate virtual trajectories from large raw datasets that are typically challenging to process due to their size. We demonstrate the practical utility of these trajectories in assessing speed variability and travel times across different lanes within the INCEPTION dataset. The virtual trajectory dataset opens up future research on traffic waves and their impact on energy.
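
The abstract does not spell out how a virtual trajectory is built. One common reading, assumed here, is that a hypothetical vehicle is advanced through a speed field v(x, t) estimated from the raw data. The sketch below uses forward-Euler integration on a made-up speed field; `virtual_trajectory` and `toy_speed_field` are illustrative names and are not part of the released tools.

```python
def virtual_trajectory(speed_field, x0, t0, t_end, dt=1.0):
    """Forward-Euler integration of dx/dt = speed_field(x, t).

    Returns parallel lists of times (s) and positions (m).
    """
    times, positions = [t0], [x0]
    x, t = x0, t0
    while t < t_end - 1e-9:
        step = min(dt, t_end - t)  # do not overshoot the end time
        x += speed_field(x, t) * step
        t += step
        times.append(t)
        positions.append(x)
    return times, positions


def toy_speed_field(x, t):
    """Hypothetical field: 30 m/s free flow, 10 m/s between 300 m and 400 m."""
    return 10.0 if 300.0 <= x < 400.0 else 30.0


times, positions = virtual_trajectory(
    toy_speed_field, x0=0.0, t0=0.0, t_end=30.0, dt=1.0
)
print(f"travel time {times[-1] - times[0]:.0f} s, distance {positions[-1]:.0f} m")
```

Launching such trajectories from each lane's speed field at regular intervals gives per-lane travel times without touching individual noisy vehicle tracks.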

MARVEL: Multi-Agent Reinforcement-Learning for Large-Scale Variable Speed Limits

Oct 18, 2023

Abstract: Variable speed limit (VSL) control is a promising traffic management strategy for enhancing safety and mobility. This work introduces MARVEL, a multi-agent reinforcement learning (MARL) framework for implementing large-scale VSL control on freeway corridors using only commonly available data. The agents learn through a reward structure that incorporates adaptability to traffic conditions, safety, and mobility, enabling coordination among the agents. The proposed framework scales to cover corridors with many gantries thanks to parameter sharing among all VSL agents. The agents are trained in a microsimulation environment based on a short freeway stretch with 8 gantries spanning 7 miles and tested with 34 gantries spanning 17 miles of I-24 near Nashville, TN. MARVEL improves traffic safety by 63.4% compared to the no control scenario and enhances traffic mobility by 14.6% compared to a state-of-the-practice algorithm that has been deployed on I-24. An explainability analysis is undertaken to explore the learned policy under different traffic conditions and the results provide insights into the decision-making process of agents. Finally, we test the policy learned from the simulation-based experiments on real input data from I-24 to illustrate the potential deployment capability of the learned policy.

So you think you can track?

Sep 13, 2023

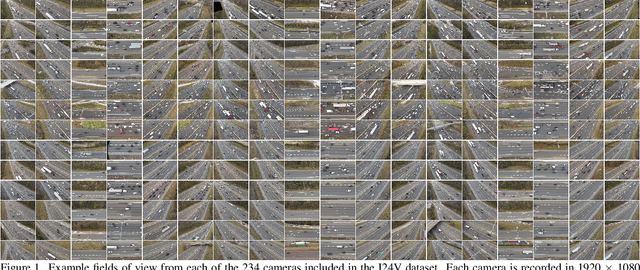

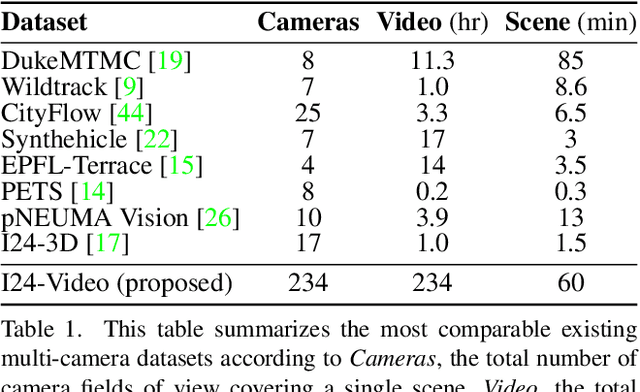

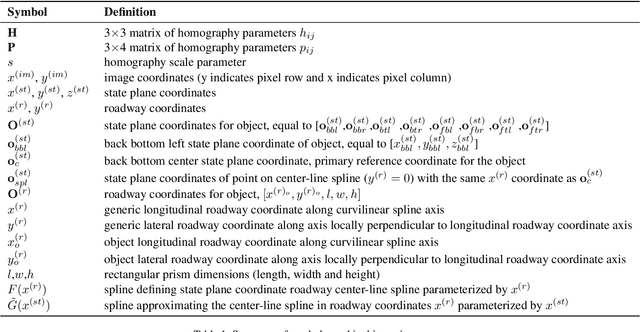

Abstract: This work introduces a multi-camera tracking dataset consisting of 234 hours of video data recorded concurrently from 234 overlapping HD cameras covering a 4.2 mile stretch of 8-10 lane interstate highway near Nashville, TN. The video is recorded during a period of high traffic density with 500+ objects typically visible within the scene and typical object longevities of 3-15 minutes. GPS trajectories from 270 vehicle passes through the scene are manually corrected in the video data to provide a set of ground-truth trajectories for recall-oriented tracking metrics, and object detections are provided for each camera in the scene (159 million total before cross-camera fusion). Initial benchmarking of tracking-by-detection algorithms is performed against the GPS trajectories, and a best HOTA of only 9.5% is obtained (best recall 75.9% at IOU 0.1, 47.9 average IDs per ground truth object), indicating the benchmarked trackers do not perform sufficiently well at the long temporal and spatial durations required for traffic scene understanding.
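
The recall figure above is reported at an IOU threshold of 0.1. As a reminder of what that threshold means, here is a minimal sketch of intersection-over-union for two axis-aligned rectangles. The box format `(x_min, y_min, x_max, y_max)` and the function name `iou` are assumptions for illustration; the benchmark's own box representation and matching code may differ.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max) in the same units.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap extents, clamped at zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


overlap = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
is_match = overlap >= 0.1  # lenient threshold, as in the reported recall
print(f"IOU = {overlap:.3f}, match at 0.1: {is_match}")
```

A threshold as low as 0.1 counts even loosely overlapping boxes as matches, which suits a recall-oriented comparison against GPS-derived ground truth.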

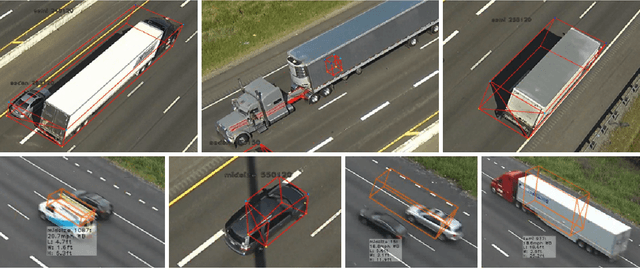

The Interstate-24 3D Dataset: a new benchmark for 3D multi-camera vehicle tracking

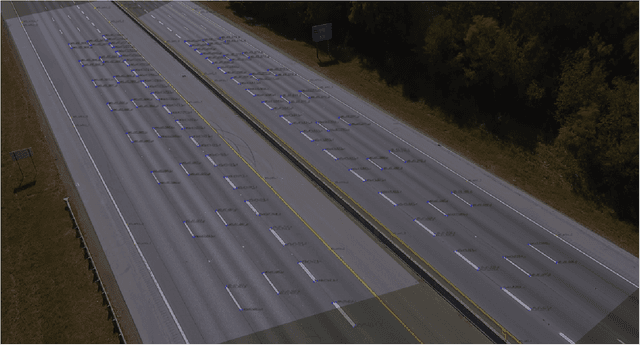

Aug 28, 2023

Abstract: This work presents a novel video dataset recorded from overlapping highway traffic cameras along an urban interstate, enabling multi-camera 3D object tracking in a traffic monitoring context. Data is released from 3 scenes containing video from at least 16 cameras each, totaling 57 minutes in length. 877,000 3D bounding boxes and corresponding object tracklets are fully and accurately annotated for each camera field of view and are combined into a spatially and temporally continuous set of vehicle trajectories for each scene. Lastly, existing algorithms are combined to benchmark a number of 3D multi-camera tracking pipelines on the dataset, with results indicating that the dataset is challenging due to the difficulty of matching objects traveling at high speeds across cameras and heavy object occlusion, potentially for hundreds of frames, during congested traffic. This work aims to enable the development of accurate and automatic vehicle trajectory extraction algorithms, which will play a vital role in understanding impacts of autonomous vehicle technologies on the safety and efficiency of traffic.

Detecting Socially Abnormal Highway Driving Behaviors via Recurrent Graph Attention Networks

Apr 23, 2023

Abstract: With the rapid development of Internet of Things technologies, next-generation traffic monitoring infrastructure is connected via the web to aid traffic data collection and intelligent traffic management. One of the most important tasks in traffic is anomaly detection, since abnormal drivers can reduce traffic efficiency and cause safety issues. This work focuses on detecting abnormal driving behaviors from trajectories produced by highway video surveillance systems. Most current abnormal driving behavior detection methods focus on a limited category of abnormal behaviors that deal with a single vehicle without considering vehicular interactions. In this work, we consider the problem of detecting a variety of socially abnormal driving behaviors, i.e., behaviors that do not conform to the behavior of other nearby drivers. This task is complicated by the variety of vehicular interactions and the spatially and temporally varying nature of highway traffic. To solve this problem, we propose an autoencoder with a Recurrent Graph Attention Network that can capture the highway driving behaviors contextualized on the surrounding cars, and detect anomalies that deviate from learned patterns. Our model is scalable to large freeways with thousands of cars. Experiments on data generated from traffic simulation software show that our model is the only one that can spot the exact vehicle conducting socially abnormal behaviors, among the state-of-the-art anomaly detection models. We further show the performance on the real-world HighD traffic dataset, where our model detects vehicles that violate the local driving norms.

I-24 MOTION: An instrument for freeway traffic science

Jan 30, 2023

Abstract: The Interstate-24 MObility Technology Interstate Observation Network (I-24 MOTION) is a new instrument for traffic science located near Nashville, Tennessee. I-24 MOTION consists of 276 pole-mounted high-resolution traffic cameras that provide seamless coverage of approximately 4.2 miles of I-24, a 4-5 lane (each direction) freeway with frequently observed congestion. The cameras are connected via fiber optic network to a compute facility where vehicle trajectories are extracted from the video imagery using computer vision techniques. Approximately 230 million vehicle miles of travel occur within I-24 MOTION annually. The main outputs of the instrument are vehicle trajectory datasets that contain the position of each vehicle on the freeway, as well as supplementary information such as vehicle dimensions and class. This article describes the design and creation of the instrument, and provides the first publicly available datasets generated from the instrument. The datasets published with this article contain at least 4 hours of vehicle trajectory data for each of 10 days. As the system continues to mature, all trajectory data will be made publicly available at i24motion.org/data.

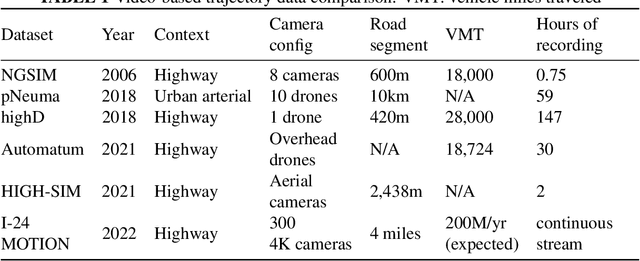

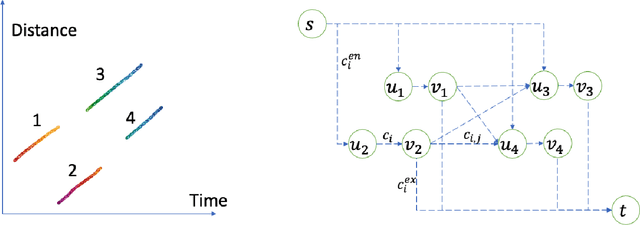

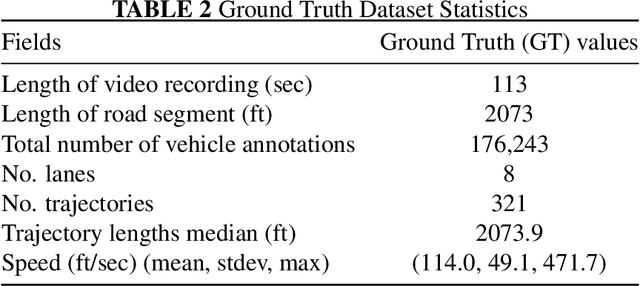

Automatic vehicle trajectory data reconstruction at scale

Dec 15, 2022

Abstract: Vehicle trajectory data has received increasing research attention over the past decades. With improvements in sensing technology such as high-resolution video cameras, in-vehicle radars and lidars, abundant individual and contextual traffic data is now available. However, although the quantity of data is massive, the data by itself is of limited utility for traffic research because of noise and systematic sensing errors, and thus requires proper processing to ensure data quality. We draw particular attention to extracting high-resolution vehicle trajectory data from video cameras as traffic monitoring cameras are becoming increasingly ubiquitous. We explore methods for automatic trajectory data reconciliation, given "raw" vehicle detection and tracking information from automatic video processing algorithms. We propose a pipeline including a) an online data association algorithm to match fragments that belong to the same object (vehicle), which is formulated as a min-cost network flow problem on a graph, and b) a trajectory reconciliation method formulated as a quadratic program to enhance raw detection data. The pipeline leverages vehicle dynamics and physical constraints to associate tracked objects when they become fragmented, remove measurement noise from trajectories and impute missing data due to fragmentation. The accuracy is benchmarked on a sample of manually-labeled data, which shows that the reconciled trajectories improve the accuracy on all the tested input data for a wide range of measures. An online version of the reconciliation pipeline is implemented and will be applied in a continuous video processing system running on a camera network covering a 4-mile stretch of Interstate-24 near Nashville, Tennessee.
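
To make the "reconciliation as a quadratic program" idea concrete, the sketch below solves a simplified, unconstrained version: it fits positions to the observed samples while penalizing second differences (a proxy for acceleration), which both smooths noise and fills gaps. The objective, the weight `lam`, and the function name `reconcile` are assumptions for illustration; the abstract does not give the paper's actual objective or constraints.

```python
def reconcile(z, lam=10.0):
    """Minimize sum_i w_i (x_i - z_i)^2 + lam * sum_i (x_i - 2 x_{i+1} + x_{i+2})^2.

    z: list of positions; None marks a missing sample (w_i = 0).
    Needs at least two observed samples for a unique solution.
    Returns the smoothed, gap-filled positions as a list of floats.
    """
    n = len(z)
    # Normal equations A x = b, with A = W + lam * D^T D.
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for i, zi in enumerate(z):
        if zi is not None:
            A[i][i] += 1.0
            b[i] = float(zi)
    stencil = (1.0, -2.0, 1.0)  # second-difference operator
    for r in range(n - 2):
        for a in range(3):
            for c in range(3):
                A[r + a][r + c] += lam * stencil[a] * stencil[c]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            if f != 0.0:
                for c in range(col, n):
                    A[r][c] -= f * A[col][c]
                b[r] -= f * b[col]
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i] - sum(A[i][c] * x[c] for c in range(i + 1, n))
        x[i] = s / A[i][i]
    return x


# Constant-speed track with two missing samples (hypothetical data).
raw = [0.0, 2.0, None, 6.0, 8.0, None, 12.0]
smooth = reconcile(raw, lam=10.0)
print([round(v, 3) for v in smooth])
```

Because the missing samples carry zero weight, the smoothness term alone determines them, so imputation falls out of the same solve as denoising. A production version would add physical constraints (for example, non-negative speed) and use a sparse solver.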

Streaming data preprocessing via online tensor recovery for large environmental sensor networks

Sep 01, 2021

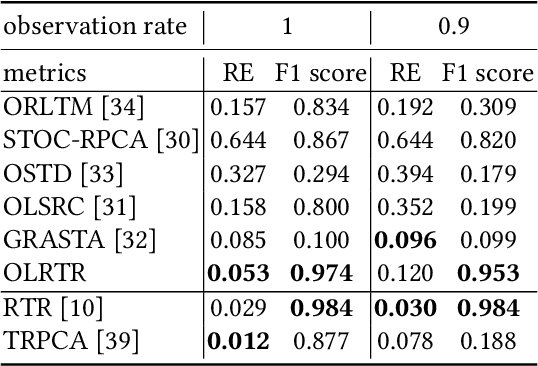

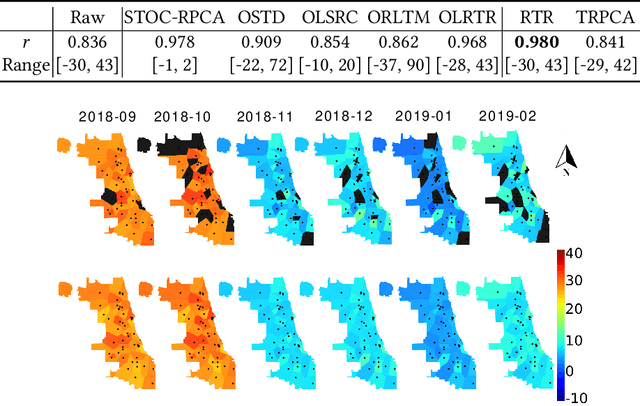

Abstract: Measuring the built and natural environment at a fine-grained scale is now possible with low-cost urban environmental sensor networks. However, fine-grained city-scale data analysis is complicated by tedious data cleaning including removing outliers and imputing missing data. While many methods exist to automatically correct anomalies and impute missing entries, challenges still exist on data with large spatial-temporal scales and shifting patterns. To address these challenges, we propose an online robust tensor recovery (OLRTR) method to preprocess streaming high-dimensional urban environmental datasets. A small-sized dictionary that captures the underlying patterns of the data is computed and constantly updated with new data. OLRTR enables online recovery for large-scale sensor networks that provide continuous data streams, with a lower computational memory usage compared to offline batch counterparts. In addition, we formulate the objective function so that OLRTR can detect structured outliers, such as faulty readings over a long period of time. We validate OLRTR on a synthetically degraded National Oceanic and Atmospheric Administration temperature dataset, with a recovery error of 0.05, and apply it to the Array of Things city-scale sensor network in Chicago, IL, showing superior results compared with several established online and batch-based low rank decomposition methods.
