Daniel B. Work

Calibrating Adaptive Smoothing Methods for Freeway Traffic Reconstruction

Feb 02, 2026

Abstract:The adaptive smoothing method (ASM) is a widely used approach for traffic state reconstruction. This article presents a Python implementation of ASM, featuring end-to-end calibration using real-world ground truth data. The calibration is formulated as a parameterized kernel optimization problem. The model is calibrated using data from a full-state observation testbed, with input from a sparse radar sensor network. The implementation is developed in PyTorch, enabling integration with various deep learning methods. We evaluate the results in terms of speed distribution, spatio-temporal error distribution, and spatial error to provide benchmark metrics for the traffic reconstruction problem. We further demonstrate the usability of the calibrated method across multiple freeways. Finally, we discuss the challenges of reproducibility in general traffic model calibration and the limitations of ASM. This article is reproducible and can serve as a benchmark for various freeway operation tasks.
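For orientation, the textbook form of ASM can be sketched in a few lines. This is a minimal NumPy illustration, not the paper's calibrated PyTorch implementation: the function name `asm_reconstruct`, the fixed exponential kernel, and every default parameter value (units of km, hours, and km/h) are assumptions chosen for illustration. The idea is to smooth sparse speed observations twice, once along free-flow characteristics and once along congested ones, and then blend the two fields with a speed-dependent weight.

```python
import numpy as np

def asm_reconstruct(x_obs, t_obs, v_obs, x_grid, t_grid,
                    sigma=0.6, tau=1.1 / 60.0,
                    c_free=80.0, c_cong=-15.0,
                    v_thr=60.0, dv=20.0):
    """Reconstruct a speed field on (x_grid, t_grid) from point observations.

    All defaults are illustrative assumptions (km, h, km/h), not calibrated values.
    Returns an array of shape (len(x_grid), len(t_grid)).
    """
    x_obs, t_obs, v_obs = map(np.asarray, (x_obs, t_obs, v_obs))
    X, T = np.meshgrid(x_grid, t_grid, indexing="ij")
    dx = X[..., None] - x_obs  # spatial offset to every observation
    dt = T[..., None] - t_obs  # temporal offset to every observation

    def smooth(c):
        # Exponential kernel skewed along the wave speed c.
        w = np.exp(-np.abs(dx) / sigma - np.abs(dt - dx / c) / tau)
        return (w * v_obs).sum(axis=-1) / np.maximum(w.sum(axis=-1), 1e-12)

    v_free = smooth(c_free)  # information travels downstream
    v_cong = smooth(c_cong)  # information travels upstream
    # Weight tends to 1 (congested field) when the smoothed speeds are low.
    w_cong = 0.5 * (1.0 + np.tanh((v_thr - np.minimum(v_free, v_cong)) / dv))
    return w_cong * v_cong + (1.0 - w_cong) * v_free

# Hypothetical usage: three sensors, two time stamps each.
x_s = np.array([0.0, 1.0, 2.0, 0.0, 1.0, 2.0])
t_s = np.array([0.0, 0.0, 0.0, 0.05, 0.05, 0.05])
v_s = np.array([100.0, 90.0, 20.0, 95.0, 30.0, 15.0])
field = asm_reconstruct(x_s, t_s, v_s, np.linspace(0, 2, 5), np.linspace(0, 0.05, 4))
```

In a calibration setting such as the one the abstract describes, quantities like `sigma`, `tau`, and the two wave speeds would be treated as learnable parameters rather than fixed defaults.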

FT-AED: Benchmark Dataset for Early Freeway Traffic Anomalous Event Detection

Jun 24, 2024

Abstract:Early and accurate detection of anomalous events on the freeway, such as accidents, can improve emergency response and clearance. However, existing delays and errors in event identification and reporting make it a difficult problem to solve. Current large-scale freeway traffic datasets are not designed for anomaly detection and ignore these challenges. In this paper, we introduce the first large-scale lane-level freeway traffic dataset for anomaly detection. Our dataset consists of a month of weekday radar detection sensor data collected in 4 lanes along an 18-mile stretch of Interstate 24 heading toward Nashville, TN, comprising over 3.7 million sensor measurements. We also collect official crash reports from the Nashville Traffic Management Center and manually label all other potential anomalies in the dataset. To show the potential for our dataset to be used in future machine learning and traffic research, we benchmark numerous deep learning anomaly detection models on our dataset. We find that unsupervised graph neural network autoencoders are a promising solution for this problem and that ignoring spatial relationships leads to decreased performance. We demonstrate that our methods can reduce reporting delays by over 10 minutes on average while detecting 75% of crashes. Our dataset and all preprocessing code needed to get started are publicly released at https://vu.edu/ft-aed/ to facilitate future research.

Reinforcement Learning Based Oscillation Dampening: Scaling up Single-Agent RL algorithms to a 100 AV highway field operational test

Feb 26, 2024

Abstract:In this article, we explore the technical details of the reinforcement learning (RL) algorithms that were deployed in the largest field test of automated vehicles designed to smooth traffic flow in history as of 2023, uncovering the challenges and breakthroughs that come with developing RL controllers for automated vehicles. We delve into the fundamental concepts behind RL algorithms and their application in the context of self-driving cars, discussing the developmental process from simulation to deployment in detail, from designing simulators to reward function shaping. We present the results in both simulation and deployment, discussing the flow-smoothing benefits of the RL controller. From understanding the basics of Markov decision processes to exploring advanced techniques such as deep RL, our article offers a comprehensive overview and deep dive of the theoretical foundations and practical implementations driving this rapidly evolving field. We also showcase real-world case studies and alternative research projects that highlight the impact of RL controllers in revolutionizing autonomous driving. From tackling complex urban environments to dealing with unpredictable traffic scenarios, these intelligent controllers are pushing the boundaries of what automated vehicles can achieve. Furthermore, we examine the safety considerations and hardware-focused technical details surrounding deployment of RL controllers into automated vehicles. As these algorithms learn and evolve through interactions with the environment, ensuring their behavior aligns with safety standards becomes crucial. We explore the methodologies and frameworks being developed to address these challenges, emphasizing the importance of building reliable control systems for automated vehicles.

Virtual trajectories for I-24 MOTION: data and tools

Nov 17, 2023

Abstract:This article introduces a new virtual trajectory dataset derived from the I-24 MOTION INCEPTION v1.0.0 dataset to address challenges in analyzing large but noisy trajectory datasets. Building on the concept of virtual trajectories, we provide a Python implementation to generate virtual trajectories from large raw datasets that are typically challenging to process due to their size. We demonstrate the practical utility of these trajectories in assessing speed variability and travel times across different lanes within the INCEPTION dataset. The virtual trajectory dataset opens future research on traffic waves and their impact on energy.

Polygon Intersection-over-Union Loss for Viewpoint-Agnostic Monocular 3D Vehicle Detection

Sep 13, 2023

Abstract:Monocular 3D object detection is a challenging task because depth information is difficult to obtain from 2D images. A subset of viewpoint-agnostic monocular 3D detection methods also do not explicitly leverage scene homography or geometry during training, meaning that a model trained thusly can detect objects in images from arbitrary viewpoints. Such works predict the projections of the 3D bounding boxes on the image plane to estimate the location of the 3D boxes, but these projections are not rectangular so the calculation of IoU between these projected polygons is not straightforward. This work proposes an efficient, fully differentiable algorithm for the calculation of IoU between two convex polygons, which can be utilized to compute the IoU between two 3D bounding box footprints viewed from an arbitrary angle. We test the performance of the proposed polygon IoU loss (PIoU loss) on three state-of-the-art viewpoint-agnostic 3D detection models. Experiments demonstrate that the proposed PIoU loss converges faster than L1 loss and that in 3D detection models, a combination of PIoU loss and L1 loss gives better results than L1 loss alone (+1.64% AP70 for MonoCon on cars, +0.18% AP70 for RTM3D on cars, and +0.83%/+2.46% AP50/AP25 for MonoRCNN on cyclists).
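To make the geometric quantity concrete, the IoU of two convex polygons can be sketched with Sutherland-Hodgman clipping and the shoelace formula. This is a plain-Python illustration only: it is not differentiable and is not the algorithm from the paper, and the helper names (`polygon_iou`, `clip_convex`, and so on) are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def signed_area(poly: List[Point]) -> float:
    """Shoelace formula; positive for counter-clockwise vertex order."""
    n = len(poly)
    if n < 3:
        return 0.0
    total = 0.0
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return total / 2.0

def cross(a: Point, b: Point, p: Point) -> float:
    """Positive when p lies to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def clip_convex(subject: List[Point], clip: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: intersect `subject` with the convex polygon `clip`."""
    if signed_area(clip) < 0:  # enforce counter-clockwise clip order
        clip = clip[::-1]
    output = list(subject)
    for i in range(len(clip)):
        if not output:
            break
        a, b = clip[i], clip[(i + 1) % len(clip)]
        pts, output = output, []
        for j in range(len(pts)):
            p, q = pts[j], pts[(j + 1) % len(pts)]
            d1, d2 = cross(a, b, p), cross(a, b, q)
            if d1 >= 0:  # p is inside this half-plane
                output.append(p)
            if (d1 >= 0) != (d2 >= 0):  # edge p -> q crosses the clip line
                t = d1 / (d1 - d2)
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return output

def polygon_iou(poly_a: List[Point], poly_b: List[Point]) -> float:
    """IoU of two convex polygons given as vertex lists in either orientation."""
    inter = abs(signed_area(clip_convex(poly_a, poly_b)))
    union = abs(signed_area(poly_a)) + abs(signed_area(poly_b)) - inter
    return inter / union if union > 0 else 0.0

# Hypothetical usage: two unit squares offset by half a unit along x.
square_a = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
square_b = [(0.5, 0.0), (1.5, 0.0), (1.5, 1.0), (0.5, 1.0)]
iou = polygon_iou(square_a, square_b)  # intersection 0.5, union 1.5
```

A loss of the kind the abstract describes would need the same computation expressed with differentiable tensor operations; the sketch above only shows the geometry being measured.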

So you think you can track?

Sep 13, 2023

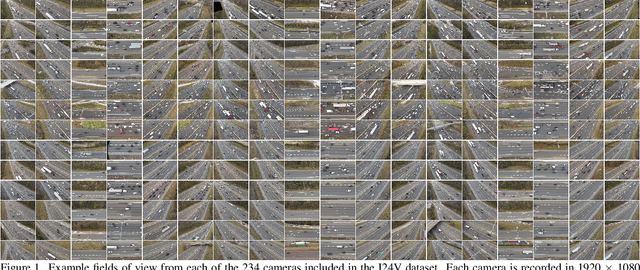

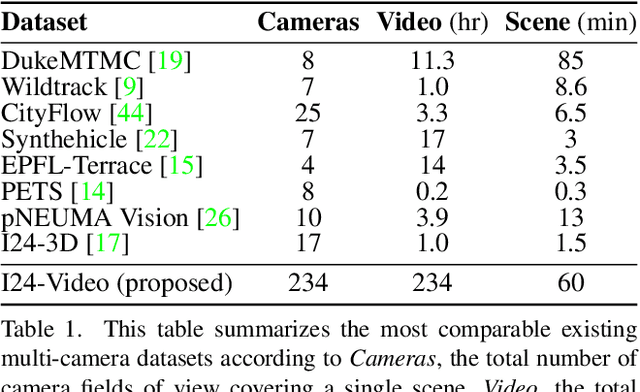

Abstract:This work introduces a multi-camera tracking dataset consisting of 234 hours of video data recorded concurrently from 234 overlapping HD cameras covering a 4.2 mile stretch of 8-10 lane interstate highway near Nashville, TN. The video is recorded during a period of high traffic density with 500+ objects typically visible within the scene and typical object longevities of 3-15 minutes. GPS trajectories from 270 vehicle passes through the scene are manually corrected in the video data to provide a set of ground-truth trajectories for recall-oriented tracking metrics, and object detections are provided for each camera in the scene (159 million total before cross-camera fusion). Initial benchmarking of tracking-by-detection algorithms is performed against the GPS trajectories, and a best HOTA of only 9.5% is obtained (best recall 75.9% at IOU 0.1, 47.9 average IDs per ground truth object), indicating the benchmarked trackers do not perform sufficiently well at the long temporal and spatial durations required for traffic scene understanding.

The Interstate-24 3D Dataset: a new benchmark for 3D multi-camera vehicle tracking

Aug 28, 2023

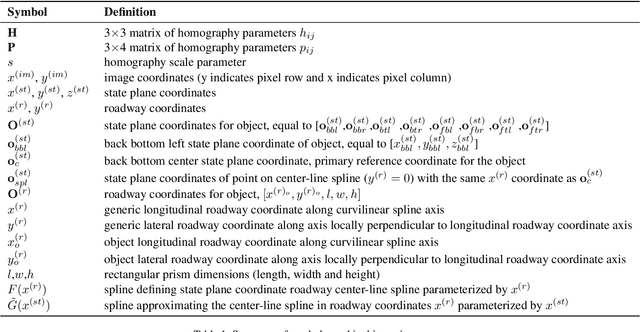

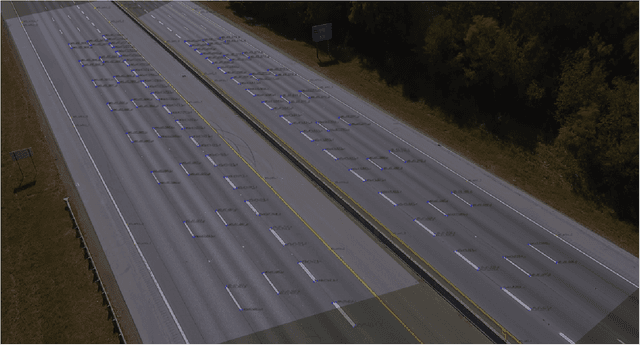

Abstract:This work presents a novel video dataset recorded from overlapping highway traffic cameras along an urban interstate, enabling multi-camera 3D object tracking in a traffic monitoring context. Data is released from 3 scenes containing video from at least 16 cameras each, totaling 57 minutes in length. 877,000 3D bounding boxes and corresponding object tracklets are fully and accurately annotated for each camera field of view and are combined into a spatially and temporally continuous set of vehicle trajectories for each scene. Lastly, existing algorithms are combined to benchmark a number of 3D multi-camera tracking pipelines on the dataset, with results indicating that the dataset is challenging due to the difficulty of matching objects traveling at high speeds across cameras and heavy object occlusion, potentially for hundreds of frames, during congested traffic. This work aims to enable the development of accurate and automatic vehicle trajectory extraction algorithms, which will play a vital role in understanding impacts of autonomous vehicle technologies on the safety and efficiency of traffic.

I-24 MOTION: An instrument for freeway traffic science

Jan 30, 2023

Abstract:The Interstate-24 MObility Technology Interstate Observation Network (I-24 MOTION) is a new instrument for traffic science located near Nashville, Tennessee. I-24 MOTION consists of 276 pole-mounted high-resolution traffic cameras that provide seamless coverage of approximately 4.2 miles of I-24, a 4-5 lane (each direction) freeway with frequently observed congestion. The cameras are connected via a fiber optic network to a compute facility where vehicle trajectories are extracted from the video imagery using computer vision techniques. Approximately 230 million vehicle miles of travel occur within I-24 MOTION annually. The main outputs of the instrument are vehicle trajectory datasets that contain the position of each vehicle on the freeway, as well as supplementary information such as vehicle dimensions and class. This article describes the design and creation of the instrument, and provides the first publicly available datasets generated from the instrument. The datasets published with this article contain at least 4 hours of vehicle trajectory data for each of 10 days. As the system continues to mature, all trajectory data will be made publicly available at i24motion.org/data.

Localization-Based Tracking

Apr 12, 2021

Abstract:End-to-end production of object tracklets from high resolution video in real-time and with high accuracy remains a challenging problem due to the cost of object detection on each frame. In this work we present Localization-based Tracking (LBT), an extension to any tracker that follows the tracking by detection or joint detection and tracking paradigms. Localization-based Tracking focuses only on regions likely to contain objects to boost detection speed and avoid matching errors. We evaluate LBT as an extension to two example trackers (KIOU and SORT) on the UA-DETRAC and MOT20 datasets. LBT-extended trackers outperform all other reported algorithms in terms of PR-MOTA, PR-MOTP, and mostly tracked objects on the UA-DETRAC benchmark, establishing a new state of the art. Relative to tracking by detection with KIOU, LBT-extended KIOU achieves a 25% higher frame-rate and is 1.1% more accurate in terms of PR-MOTA on the UA-DETRAC dataset. LBT-extended SORT achieves a 62% speedup and a 3.2% increase in PR-MOTA on the UA-DETRAC dataset. On MOT20, LBT-extended KIOU has a 50% higher frame-rate than tracking by detection and is 0.4% more accurate in terms of MOTA. As of submission time, our LBT-extended KIOU tracker places 10th overall on the MOT20 benchmark.
