Jinghao Miao

Optimal Trajectory Generation for Autonomous Vehicles Under Centripetal Acceleration Constraints for In-lane Driving Scenarios

Dec 03, 2021

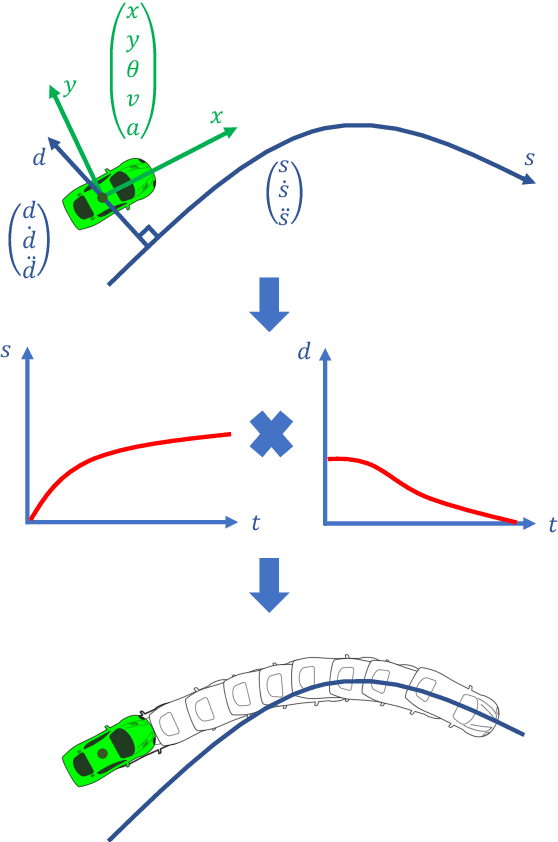

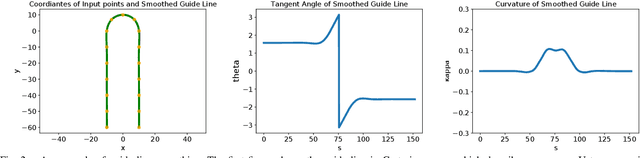

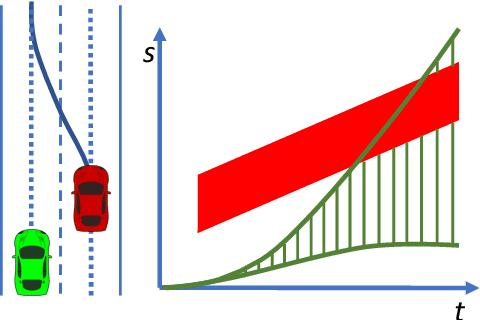

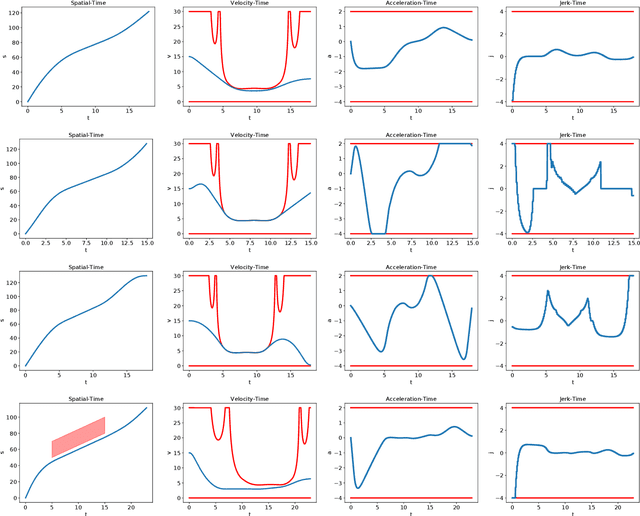

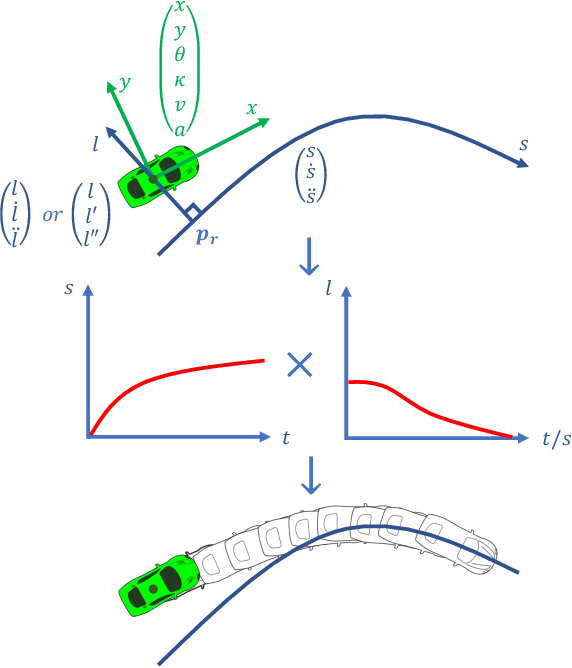

Abstract: This paper presents a novel method that generates optimal trajectories for autonomous vehicles in in-lane driving scenarios. The method computes a trajectory using a two-phase optimization procedure. In the first phase, the optimization procedure generates a closed-form driving guide line with differentiable curvature. In the second phase, the procedure takes the driving guide line as input and outputs dynamically feasible, jerk- and time-optimal trajectories for vehicles driving along the guide line. This method is especially useful for generating trajectories on curvy roads, where vehicles need to accelerate and decelerate frequently to stay within centripetal acceleration limits.
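The centripetal constraint that drives this method follows from $a_c = v^2 \kappa$: on a guide line with curvature $\kappa$, the speed must satisfy $v \le \sqrt{a_{\max} / |\kappa|}$. The sketch below only computes that per-point speed cap; the limit values and the function name are illustrative assumptions, and the paper's second phase additionally optimizes jerk and time subject to such caps.

```python
import math

def curvature_speed_limits(curvatures, a_lat_max, v_max):
    """Per-point speed cap from the centripetal limit v**2 * |kappa| <= a_lat_max.

    curvatures: guide-line curvature kappa (1/m) at sampled points.
    a_lat_max:  assumed centripetal acceleration limit (m/s^2).
    v_max:      assumed road/vehicle speed limit (m/s), used where kappa ~ 0.
    """
    limits = []
    for kappa in curvatures:
        if abs(kappa) < 1e-9:
            limits.append(v_max)  # straight segment: only the speed limit applies
        else:
            limits.append(min(v_max, math.sqrt(a_lat_max / abs(kappa))))
    return limits

# A 50 m radius curve (kappa = 0.02 1/m) with a 2.0 m/s^2 limit caps speed near 10 m/s.
print(curvature_speed_limits([0.0, 0.02, -0.02], a_lat_max=2.0, v_max=15.0))
```

Because the cap depends on $|\kappa|$, a guide line with differentiable curvature yields a smoothly varying cap, which is what lets the speed phase plan the frequent accelerations and decelerations mentioned above.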

Optimal Vehicle Path Planning Using Quadratic Optimization for Baidu Apollo Open Platform

Dec 03, 2021

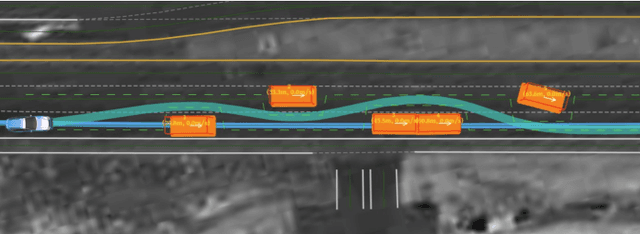

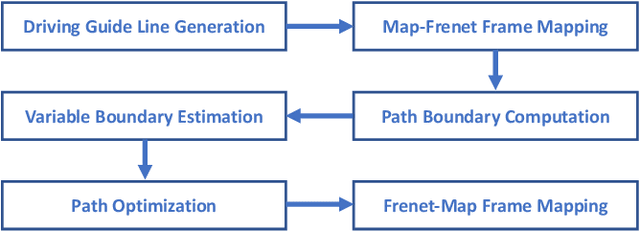

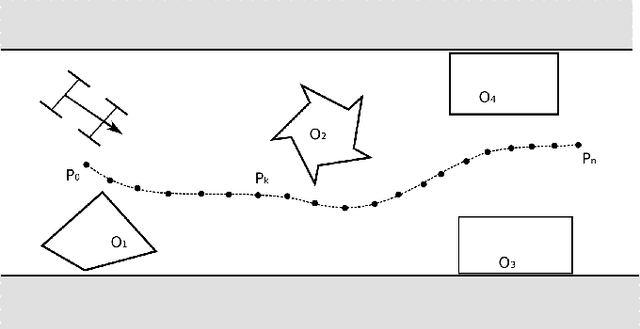

Abstract: Path planning is a key component of motion planning for autonomous vehicles. A path specifies the geometric shape along which the vehicle will travel; thus, it is critical to safe and comfortable vehicle motion. In urban driving scenarios, autonomous vehicles need the ability to navigate cluttered environments, e.g., roads partially blocked by a number of vehicles or obstacles on the sides. Generating a kinematically feasible, smooth path that avoids collisions in such complex environments makes path planning a challenging problem. In this paper, we present a novel quadratic programming approach that generates optimal paths with resolution-complete collision avoidance capability.
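The abstract does not spell out the QP. As a hedged illustration only, a common shape for such a problem is: minimize lateral offset plus a smoothness (second-difference) penalty, subject to per-station corridor bounds that encode the collision-free space. The weights, bounds and the projected-gradient solver below are assumptions for the sketch, not the paper's formulation or solver.

```python
def smooth_path_qp(lb, ub, w_l=1.0, w_dd=10.0, iters=5000):
    """Minimize w_l*sum(l_i^2) + w_dd*sum((l_{i+1} - 2*l_i + l_{i-1})^2)
    subject to lb_i <= l_i <= ub_i, by projected gradient descent.

    l_i is a (hypothetical) lateral offset from the reference line at station i;
    lb/ub are collision-free corridor bounds.
    """
    n = len(lb)
    # The second-difference operator D has ||D|| <= 4, so the Hessian
    # 2*w_l*I + 2*w_dd*D^T D has largest eigenvalue <= 2*(w_l + 16*w_dd).
    step = 1.0 / (2.0 * (w_l + 16.0 * w_dd))
    l = [min(max(0.0, lo), hi) for lo, hi in zip(lb, ub)]  # feasible start
    for _ in range(iters):
        grad = [2.0 * w_l * x for x in l]
        for i in range(1, n - 1):
            dd = l[i + 1] - 2.0 * l[i] + l[i - 1]
            # Gradient of w_dd*dd^2 spreads over (i-1, i, i+1) with weights (1, -2, 1).
            grad[i - 1] += 2.0 * w_dd * dd
            grad[i] -= 4.0 * w_dd * dd
            grad[i + 1] += 2.0 * w_dd * dd
        # Gradient step, then project back onto the box [lb, ub].
        l = [min(max(x - step * g, lo), hi) for x, g, lo, hi in zip(l, grad, lb, ub)]
    return l

lb, ub = [-3.0] * 11, [3.0] * 11
for i in (4, 5, 6):
    lb[i] = 1.0  # hypothetical obstacle: stay at least 1 m to the left here
path = smooth_path_qp(lb, ub)
print([round(x, 3) for x in path])
```

Projected gradient is used only to keep the example dependency-free; a dedicated QP solver would normally be preferred for speed and accuracy.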

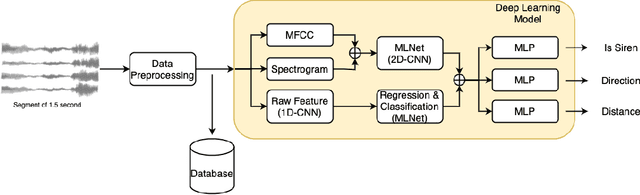

Emergency Vehicles Audio Detection and Localization in Autonomous Driving

Oct 02, 2021

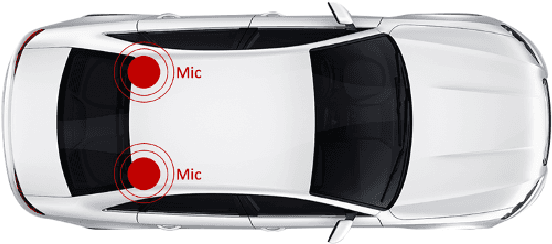

Abstract: Emergency vehicles in service have right-of-way over all other vehicles. Hence, all other vehicles are supposed to take proper actions to yield to emergency vehicles with active sirens. Just as this task requires human drivers to coordinate their ears and eyes, fully autonomous vehicles need audio detection as a supplement to vision-based algorithms. In urban driving scenarios, we need to know both whether emergency vehicles are present and where they are relative to us in order to decide on the proper actions. We present a novel system, from collecting real-world siren data to deploying models, that uses only two cost-efficient microphones. We achieve promising performance on each task separately, especially within the crucial 10 m to 50 m distance range for reacting (our ego vehicle is around 5 m long and 2 m wide). The recall rate for detecting the presence of sirens is 99.16%, the median and mean absolute angle errors are 9.64° and 19.18°, respectively, and the median and mean absolute distance errors are 9.30 m and 10.58 m, respectively, within that range. We also benchmark various machine learning approaches that determine siren existence and localize the sound source, including both direction and distance, simultaneously within 50 ms of latency.
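For orientation, a classic non-learning baseline for two-microphone direction finding is time-difference-of-arrival (TDOA) estimation by cross-correlation, followed by the far-field relation $\theta = \arcsin(c\,\tau / d)$. This is not the paper's learned method; the microphone spacing, sample rate and synthetic signal below are hypothetical, and with only two microphones a single TDOA leaves a front/back ambiguity.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C

def tdoa_lag(left, right, max_lag):
    """Integer lag (samples) maximizing the cross-correlation of two equal-length
    signals; positive means `right` is a delayed copy of `left`."""
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def bearing_deg(lag, sample_rate, mic_spacing):
    """Far-field direction of arrival (degrees from broadside); positive when
    the source is on the left microphone's side."""
    tau = lag / sample_rate
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / mic_spacing))  # clamp for asin
    return math.degrees(math.asin(s))

rng = random.Random(0)
src = [rng.uniform(-1.0, 1.0) for _ in range(300)]
left, right = src[10:266], src[5:261]  # right hears the sound 5 samples later
lag = tdoa_lag(left, right, max_lag=70)  # 0.5 m spacing at 48 kHz -> about 70 samples max
print(lag, bearing_deg(lag, sample_rate=48000, mic_spacing=0.5))
```

Real siren audio is narrowband and reverberant, which makes plain cross-correlation fragile; that is one motivation for the learned approaches the abstract benchmarks.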

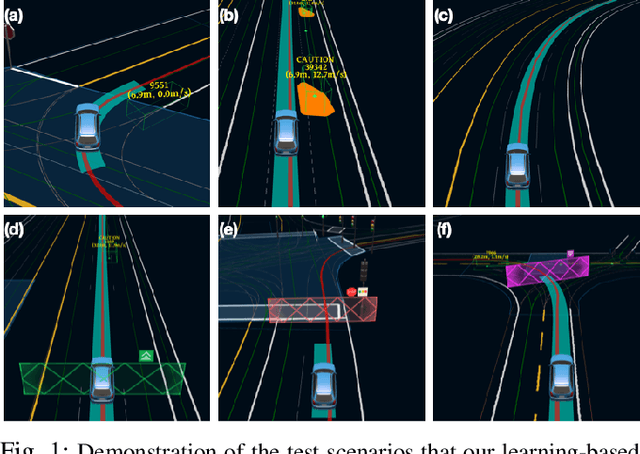

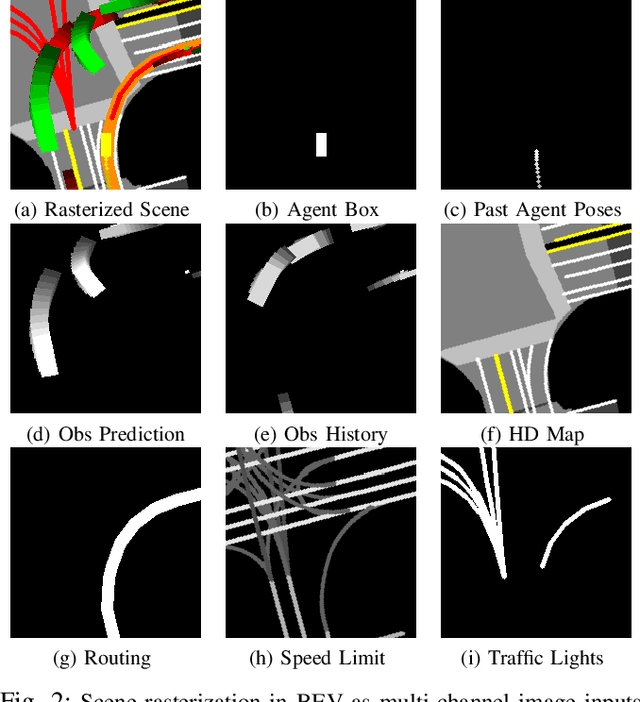

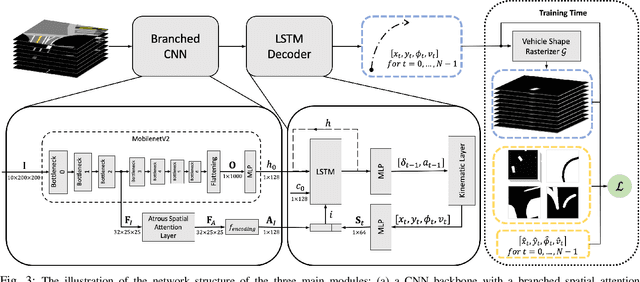

Exploring Imitation Learning for Autonomous Driving with Feedback Synthesizer and Differentiable Rasterization

Mar 02, 2021

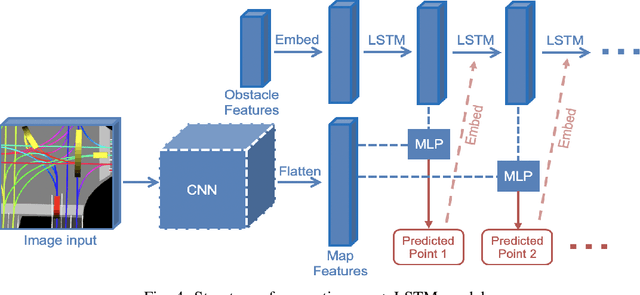

Abstract: We present a learning-based planner that aims to drive a vehicle robustly by mimicking human driving behavior. We leverage a mid-to-mid approach that allows us to manipulate the input to our imitation learning network freely. With that in mind, we propose a novel feedback synthesizer for data augmentation. It allows our agent to gain more driving experience in various previously unseen environments that it is likely to encounter, thus improving overall performance. This is in contrast to prior works that rely purely on random synthesizers. Furthermore, rather than committing completely to imitation, we introduce task losses that penalize undesirable behaviors, such as collisions and driving off-road. Unlike prior works, this is done by introducing a differentiable vehicle rasterizer that directly converts the waypoints output by the network into images. This avoids heavyweight ConvLSTM networks and therefore yields faster model inference. Regarding the network architecture, we exploit an attention mechanism that allows the network to reason about critical objects in the scene and produces more interpretable attention heatmaps. To further enhance the safety and robustness of the network, we add an optional optimization-based post-processing planner that improves driving comfort. We comprehensively validate our method's effectiveness in different scenarios that are specifically created for evaluating self-driving vehicles. Results demonstrate that our learning-based planner achieves high intelligence and can handle complex situations. Detailed ablation and visualization analyses are included to further demonstrate the effectiveness of each proposed module.
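The abstract does not describe how the rasterizer is built. One common way to make rendering differentiable is to splat each waypoint as a Gaussian, so every pixel is a smooth function of the waypoint coordinates and a task loss (here, overlap with an obstacle mask) has gradients. The sketch below renders points rather than a vehicle footprint, and its grid, `sigma` and mask are hypothetical; a real system would use an autodiff framework instead of the hand-written gradient.

```python
import math

def rasterize_waypoints(waypoints, height, width, sigma=1.0):
    """Render (x, y) waypoints (grid coordinates) as a sum of isotropic Gaussians."""
    img = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            for x, y in waypoints:
                d2 = (c - x) ** 2 + (r - y) ** 2
                img[r][c] += math.exp(-d2 / (2.0 * sigma ** 2))
    return img

def collision_loss(waypoints, obstacle_mask, sigma=1.0):
    """Overlap between the rendered trajectory and a binary obstacle mask."""
    h, w = len(obstacle_mask), len(obstacle_mask[0])
    img = rasterize_waypoints(waypoints, h, w, sigma)
    return sum(img[r][c] * obstacle_mask[r][c] for r in range(h) for c in range(w))

def collision_loss_grad(waypoints, obstacle_mask, sigma=1.0):
    """Analytic gradient of collision_loss w.r.t. each waypoint, as (dL/dx, dL/dy)."""
    grads = []
    for x, y in waypoints:
        gx = gy = 0.0
        for r, row in enumerate(obstacle_mask):
            for c, m in enumerate(row):
                if m:
                    g = math.exp(-((c - x) ** 2 + (r - y) ** 2) / (2.0 * sigma ** 2))
                    gx += m * g * (c - x) / sigma ** 2
                    gy += m * g * (r - y) / sigma ** 2
        grads.append((gx, gy))
    return grads

# Hypothetical 10x10 scene with an obstacle occupying columns 7-9 of rows 4-5.
mask = [[1 if c >= 7 and 4 <= r <= 5 else 0 for c in range(10)] for r in range(10)]
traj = [(1.0, 4.5), (3.5, 4.5), (6.0, 4.5)]
print(collision_loss(traj, mask), collision_loss_grad(traj, mask)[-1])
```

A positive x-gradient on the last waypoint means gradient descent pushes it away from the obstacle, which is the behavior a task loss is meant to induce.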

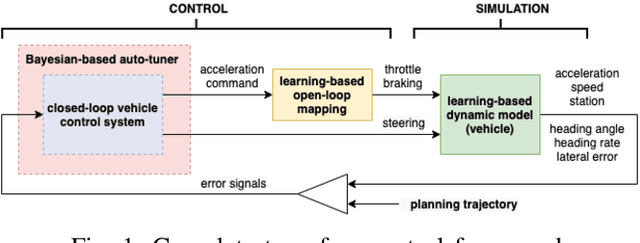

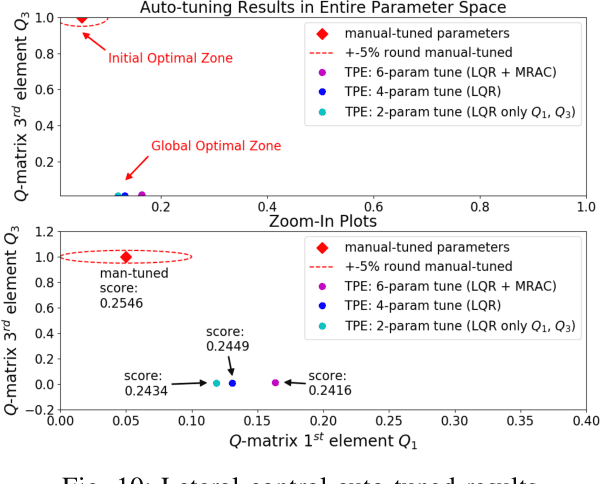

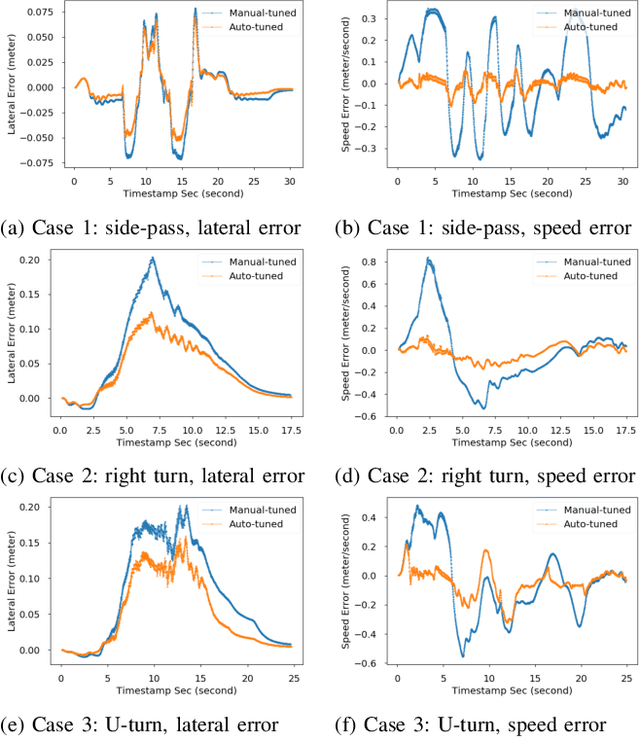

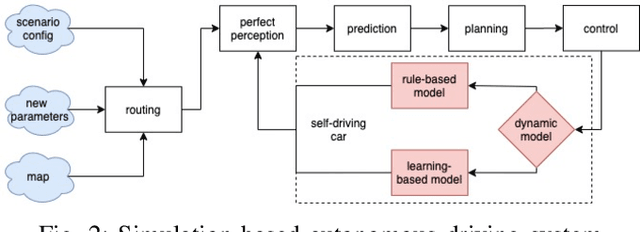

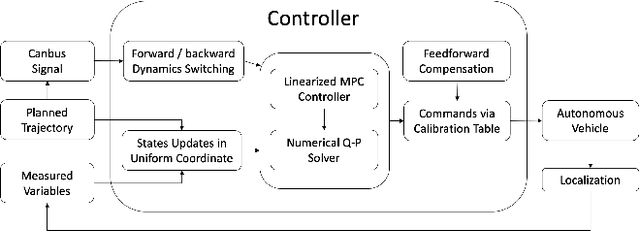

A Learning-Based Tune-Free Control Framework for Large Scale Autonomous Driving System Deployment

Nov 09, 2020

Abstract: This paper presents the design of a tune-free (human-out-of-the-loop parameter tuning) control framework that aims to accelerate the large-scale deployment of autonomous driving systems on various vehicles and in various driving environments. The framework consists of three machine-learning-based procedures that jointly automate control parameter tuning for autonomous driving: a learning-based dynamic modeling procedure, which enables control-in-the-loop simulation with highly accurate vehicle dynamics for parameter tuning; a learning-based open-loop mapping procedure, which tunes the feedforward control parameters; and, most significantly, a Bayesian-optimization-based closed-loop parameter tuning procedure, which automatically tunes feedback control (PID, LQR, MRAC, MPC, etc.) parameters in a simulation environment. The paper shows improved control performance together with a significant increase in parameter tuning efficiency, in both simulation and road tests. This framework has been validated on different vehicles in the US and China.
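Closed-loop tuning in simulation needs a scalar score for each candidate parameter set. A minimal sketch of such a score is below, assuming a hypothetical first-order speed plant $\dot v = (u - v)/\tau$ and a PI controller; the plant constant, horizon and grid are illustrative. The exhaustive grid search is a plain stand-in for the Bayesian optimizer, which would instead choose each next candidate from a surrogate model of this same cost.

```python
def closed_loop_cost(kp, ki, tau=0.5, dt=0.01, horizon=5.0, target=1.0):
    """Integrated squared tracking error of a PI speed loop on a first-order
    plant dv/dt = (u - v) / tau, simulated with forward Euler."""
    v, integral, cost = 0.0, 0.0, 0.0
    for _ in range(int(horizon / dt)):
        err = target - v
        integral += err * dt
        u = kp * err + ki * integral  # PI control law
        v += (u - v) / tau * dt       # plant update
        cost += err * err * dt
    return cost

def grid_search(kps, kis):
    """Stand-in for Bayesian optimization: score every (kp, ki) pair, keep the best."""
    return min((closed_loop_cost(kp, ki), kp, ki) for kp in kps for ki in kis)

best_cost, best_kp, best_ki = grid_search([0.5, 1.0, 2.0, 4.0], [0.0, 1.0, 2.0, 4.0])
print(best_cost, best_kp, best_ki)
```

Each cost evaluation here is cheap; with a high-fidelity learned dynamics model in the loop each evaluation is expensive, which is where a sample-efficient method like Bayesian optimization pays off over a grid.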

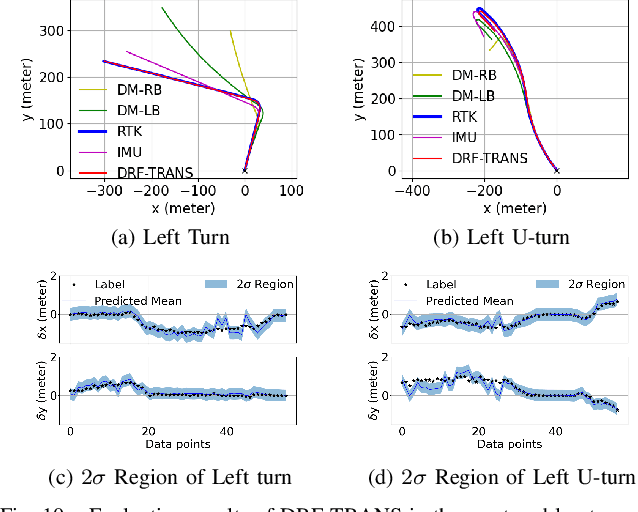

DRF: A Framework for High-Accuracy Autonomous Driving Vehicle Modeling

Nov 01, 2020

Abstract: An accurate vehicle dynamic model is key to bridging the gap between simulation and real road tests in autonomous driving. In this paper, we present a Dynamic model-Residual correction model Framework (DRF) for vehicle dynamic modeling. On top of any existing open-loop dynamic model, this framework builds a Residual Correction Model (RCM) by integrating deep Neural Networks (NN) with a Sparse Variational Gaussian Process (SVGP) model. RCM takes a sequence of vehicle control commands and dynamic states over a certain time window as modeling inputs, extracts the underlying context from this sequence with deep encoder networks, and predicts the errors of the open-loop dynamic model's predictions. Five vehicle dynamic models are derived from DRF by varying the encoder. Our contribution is supported by experiments evaluating the absolute trajectory error and the similarity between DRF outputs and the ground truth. Compared to classic rule-based and learning-based vehicle dynamic models, the DRF variants reduce absolute trajectory error by 74.12% to 85.02%.
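The residual-correction idea is: keep an existing open-loop model and learn only the gap between its prediction and the measured next state, then add that learned gap back. DRF learns the gap with deep encoders and an SVGP; the sketch below swaps both for a single least-squares gain so the structure fits in a few lines. The drag-based "true" plant and all constants are hypothetical.

```python
def base_model(v, a, dt):
    """Open-loop kinematic model: ignores drag entirely."""
    return v + a * dt

def true_plant(v, a, dt, drag=0.1):
    """Hypothetical 'real' vehicle with linear drag, used only to generate data."""
    return v + (a - drag * v) * dt

def fit_residual_gain(samples, dt):
    """Least-squares gain k for the residual r ~= k * v, where r is the gap
    between the measured next speed and the base model's prediction."""
    num = den = 0.0
    for v, a, v_next in samples:
        r = v_next - base_model(v, a, dt)
        num += r * v
        den += v * v
    return num / den

def corrected_model(v, a, dt, k):
    """Base prediction plus the learned residual correction."""
    return base_model(v, a, dt) + k * v

dt = 0.01
samples = [(v, a, true_plant(v, a, dt)) for v in (2.0, 5.0, 10.0, 20.0) for a in (-1.0, 0.0, 1.5)]
k = fit_residual_gain(samples, dt)
print(k)  # recovers -drag * dt for this synthetic data
```

Because the base model already captures most of the dynamics, the residual learner only has to model a small correction, which is the design motivation for stacking a correction model on top of any existing open-loop model.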

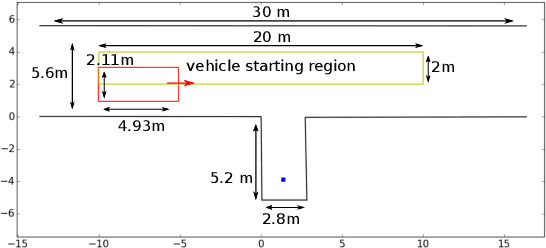

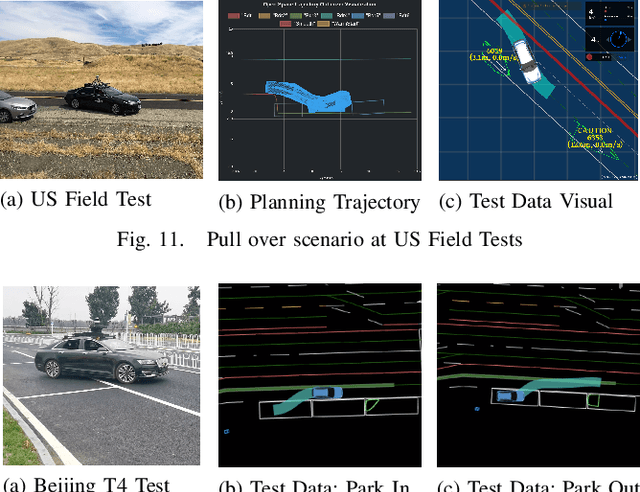

TDR-OBCA: A Reliable Planner for Autonomous Driving in Free-Space Environment

Sep 23, 2020

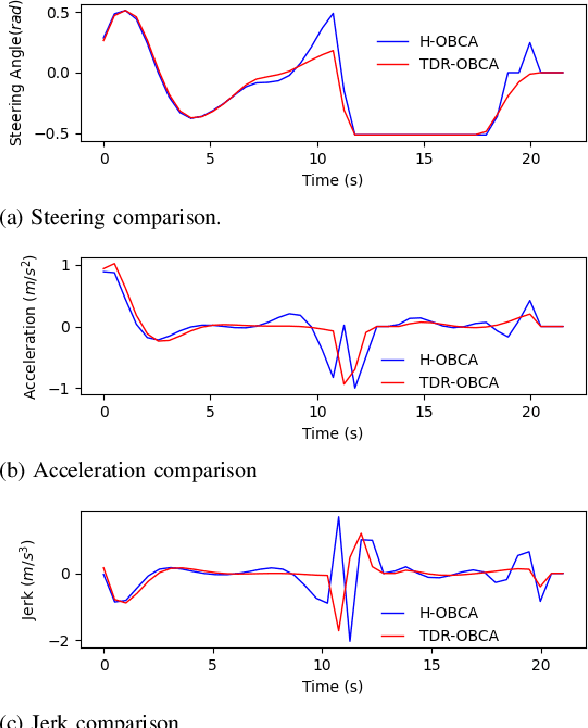

Abstract: This paper presents an optimization-based collision avoidance trajectory generation method for autonomous driving in free-space environments, with enhanced robustness, driving comfort and efficiency. Starting from the hybrid optimization-based framework, we introduce two warm start methods, temporal and dual variable warm starts, to improve efficiency. We also reformulate the problem to improve robustness and efficiency. We name this new algorithm TDR-OBCA. With these changes, compared with the original hybrid optimization, we achieve a 96.67% decrease in failure rate with respect to initial conditions, a 13.53% increase in driving comfort, and a 3.33% to 44.82% increase in planner efficiency as the number of obstacles grows. We validate our results in hundreds of simulation scenarios and hundreds of hours of public road tests in both the U.S. and China. Our source code is available at https://github.com/ApolloAuto/apollo.

DL-IAPS and PJSO: A Path/Speed Decoupled Trajectory Optimization and its Application in Autonomous Driving

Sep 23, 2020

Abstract: This paper presents a free-space trajectory optimization algorithm for autonomous driving vehicles, which decouples the collision-free trajectory planning problem into a Dual-Loop Iterative Anchoring Path Smoothing (DL-IAPS) and a Piece-wise Jerk Speed Optimization (PJSO). The work leads to remarkable driving performance improvements, including more precise collision avoidance, higher control feasibility and better driving comfort, which are often hard to achieve with other existing path/speed decoupled trajectory optimization methods. Our algorithm's efficiency, robustness and adaptiveness to complex driving scenarios have been validated by both simulations and real on-road tests.
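In a piecewise-jerk speed formulation, the profile is discretized so that jerk is constant between knots, which makes the position/velocity/acceleration relations exact polynomial identities. The sketch below (hypothetical function name and inputs) propagates those relations forward; in an optimizer such as PJSO they would typically appear as linear equality constraints between consecutive knots, with jerk expressed as $(\ddot s_{i+1} - \ddot s_i)/\Delta t$, rather than as a forward simulation.

```python
def integrate_piecewise_jerk(s0, v0, a0, jerks, dt):
    """Propagate (s, v, a) through segments of constant jerk.

    For a segment of length dt with jerk j, the exact update is
      s_next = s + v*dt + a*dt^2/2 + j*dt^3/6
      v_next = v + a*dt + j*dt^2/2
      a_next = a + j*dt
    """
    states = [(s0, v0, a0)]
    s, v, a = s0, v0, a0
    for j in jerks:
        # Right-hand side uses the values from the start of the segment.
        s, v, a = (s + v * dt + a * dt ** 2 / 2.0 + j * dt ** 3 / 6.0,
                   v + a * dt + j * dt ** 2 / 2.0,
                   a + j * dt)
        states.append((s, v, a))
    return states

# One second of unit jerk from rest: expect s = 1/6, v = 1/2, a = 1.
print(integrate_piecewise_jerk(0.0, 0.0, 0.0, [1.0] * 10, 0.1)[-1])
```

Since the updates are exact for constant jerk, bounds on jerk, acceleration and speed placed at the knots translate directly into a comfort- and feasibility-aware speed profile.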

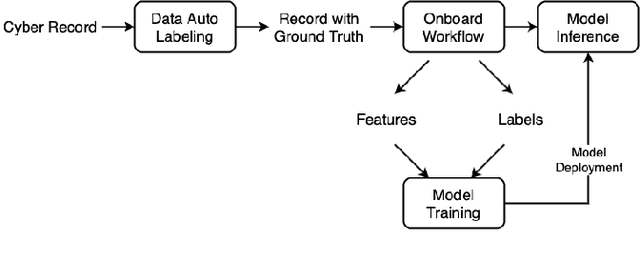

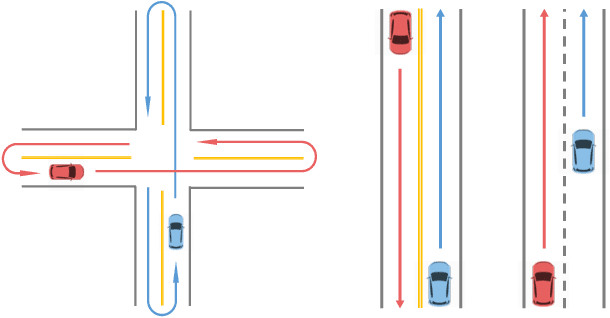

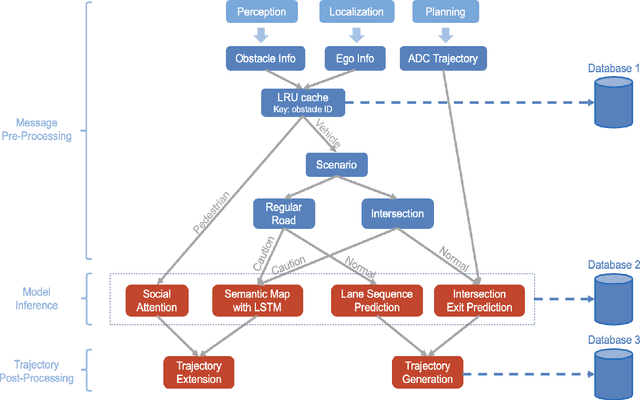

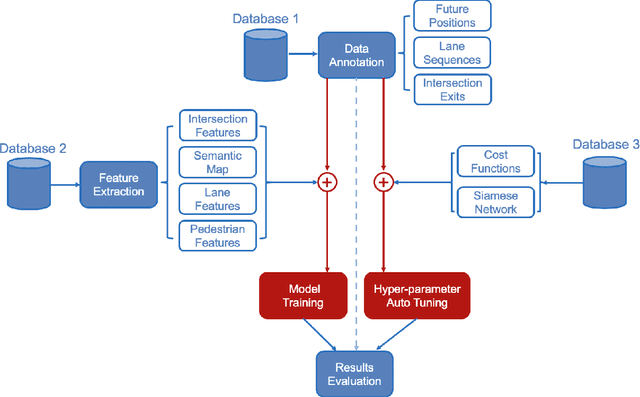

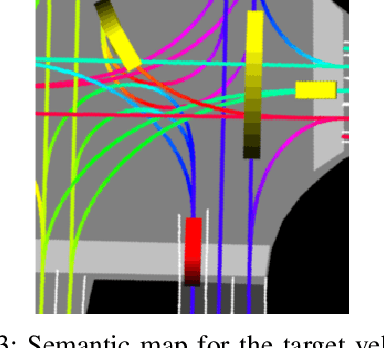

Data Driven Prediction Architecture for Autonomous Driving and its Application on Apollo Platform

Jun 11, 2020

Abstract: Autonomous driving vehicles (ADVs) are being deployed on roads at large scale. For safe and efficient operation, ADVs must be able to predict the future states of, and interact with, road entities in complex, real-world driving scenarios. Migrating a well-trained prediction model from one geo-fenced area to another is essential for scaling ADV operation, and it is often difficult because terrain, traffic rules, entity distributions, and driving/walking patterns can differ greatly between geo-fenced operation areas. In this paper, we introduce a highly automated learning-based prediction model pipeline, which has been deployed on the Baidu Apollo self-driving platform, to support data annotation, feature extraction, model training/tuning and deployment for different prediction learning sub-modules. This pipeline is completely automatic, without any human intervention, and shows up to a 400% efficiency increase in parameter tuning when deployed at scale in different scenarios across nations.

Gen-LaneNet: A Generalized and Scalable Approach for 3D Lane Detection

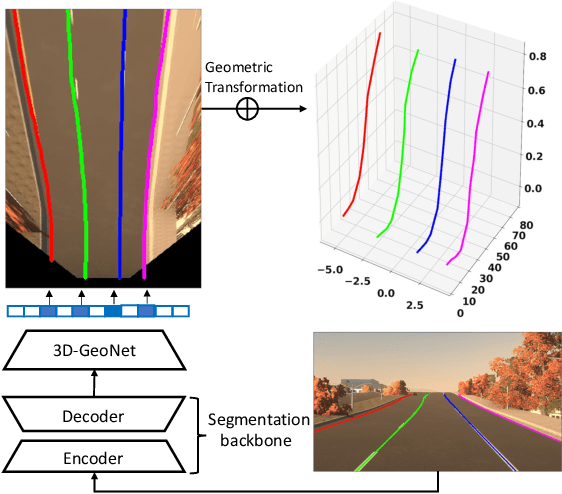

Mar 24, 2020

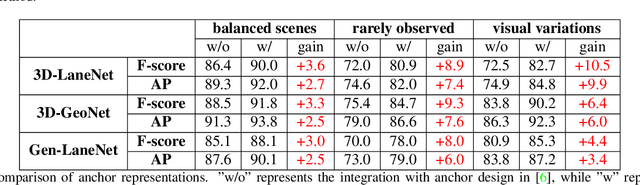

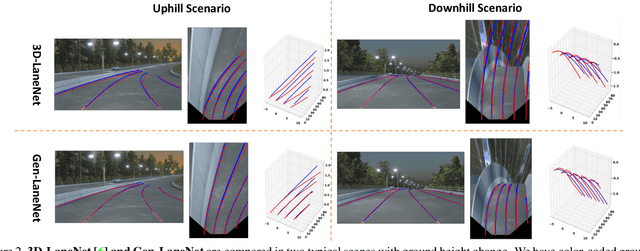

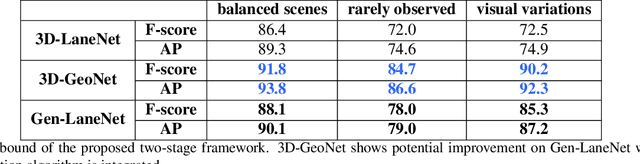

Abstract: We present a generalized and scalable method, called Gen-LaneNet, to detect 3D lanes from a single image. The method, inspired by the latest state-of-the-art 3D-LaneNet, is a unified framework that solves image encoding, spatial transformation of features and 3D lane prediction in a single network. However, we propose unique designs for Gen-LaneNet in two respects. First, we introduce a new geometry-guided lane anchor representation in a new coordinate frame and apply a specific geometric transformation to directly calculate real 3D lane points from the network output. We demonstrate that aligning the lane points with the underlying top-view features in the new coordinate frame is critical for a method that generalizes to unfamiliar scenes. Second, we present a scalable two-stage framework that decouples the learning of the image segmentation subnetwork and the geometry encoding subnetwork. Compared to 3D-LaneNet, the proposed Gen-LaneNet drastically reduces the amount of 3D lane labels required to achieve a robust solution in real-world applications. Moreover, we release a new synthetic dataset and its construction strategy to encourage the development and evaluation of 3D lane detection methods. In experiments, we conduct extensive ablation studies substantiating that the proposed Gen-LaneNet significantly outperforms 3D-LaneNet in average precision (AP) and F-score.
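The abstract mentions a geometric transformation from network output to real 3D lane points without stating it. Assuming a pinhole camera at height $h$ above a flat reference ground plane (an assumption of this sketch), similar triangles relate a 3D point $(x, y, z)$ to where its camera ray meets the ground, $(\bar x, \bar y)$: $\bar x = x\,h/(h - z)$ and $\bar y = y\,h/(h - z)$, so $x = \bar x\,(h - z)/h$ and $y = \bar y\,(h - z)/h$. The function names below are hypothetical.

```python
def to_virtual_top_view(x, y, z, cam_height):
    """Project a 3D point (z up, camera at (0, 0, cam_height)) along the camera
    ray onto the flat ground plane z = 0."""
    if z >= cam_height:
        raise ValueError("point must lie below the camera")
    scale = cam_height / (cam_height - z)
    return x * scale, y * scale

def from_virtual_top_view(x_bar, y_bar, z, cam_height):
    """Recover real (x, y) from ground-plane coordinates and a predicted height z."""
    scale = (cam_height - z) / cam_height
    return x_bar * scale, y_bar * scale

# A lane point 0.5 m above the reference plane, seen from a camera 1.5 m up.
x_bar, y_bar = to_virtual_top_view(2.0, 30.0, 0.5, 1.5)
print((x_bar, y_bar), from_virtual_top_view(x_bar, y_bar, 0.5, 1.5))
```

Points above the ground plane project farther out in the flat top view; undoing that stretch with the predicted height is what lets top-view features and real 3D lane geometry stay aligned on uphill or downhill roads.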
