Kecheng Xu

Emergency Vehicles Audio Detection and Localization in Autonomous Driving

Oct 02, 2021

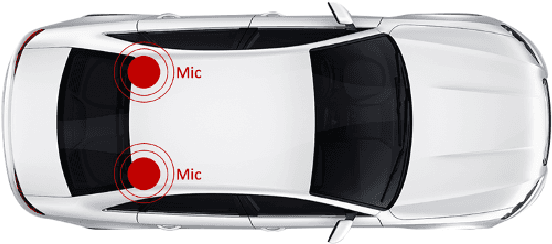

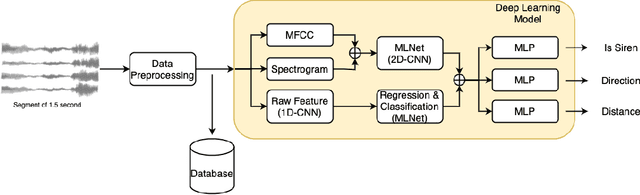

Abstract: Emergency vehicles in service have right-of-way over all other vehicles. Hence, all other vehicles are supposed to take proper actions to yield to emergency vehicles with active sirens. As this task requires the cooperation between ears and eyes for human drivers, it also needs audio detection as a supplement to vision-based algorithms for fully autonomous driving vehicles. In urban driving scenarios, we need to know both the existence of emergency vehicles and their positions relative to us to decide the proper actions. We present a novel system that spans from collecting real-world siren data to deploying models, using only two cost-efficient microphones. We are able to achieve promising performance for each task separately, especially within the crucial 10m to 50m distance range to react (the size of our ego vehicle is around 5m in length and 2m in width). The recall rate for determining the existence of sirens is 99.16%, the median and mean absolute angle errors are 9.64° and 19.18° respectively, and the median and mean absolute distance errors are 9.30m and 10.58m respectively within that range. We also benchmark various machine learning approaches that can determine siren existence and sound source localization, which includes direction and distance, simultaneously within 50ms of latency.
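The abstract reports both a median and a mean absolute angle error. The sketch below shows one way such a metric could be computed; it is not taken from the paper. The function names and the wrap-around convention (errors folded into the 0° to 180° range) are assumptions made for illustration.

```python
import statistics


def angular_abs_error(pred_deg, true_deg):
    """Smallest absolute difference between two bearings, in degrees (0 to 180).

    Hypothetical helper: assumes bearings are given in degrees and that
    350 degrees and 10 degrees should count as 20 degrees apart, not 340.
    """
    diff = (pred_deg - true_deg) % 360.0
    return min(diff, 360.0 - diff)


def summarize_angle_errors(preds_deg, truths_deg):
    """Return (median, mean) of the absolute angle errors over paired samples."""
    errors = [angular_abs_error(p, t) for p, t in zip(preds_deg, truths_deg)]
    return statistics.median(errors), statistics.mean(errors)


if __name__ == "__main__":
    # Made-up bearings for illustration only; not data from the paper.
    preds = [350.0, 90.0, 180.0]
    truths = [10.0, 80.0, 0.0]
    median_err, mean_err = summarize_angle_errors(preds, truths)
    print(f"median={median_err:.2f} deg, mean={mean_err:.2f} deg")
```

In this toy input a single large error pulls the mean well above the median. A mean that exceeds the median, as in the reported 19.18° versus 9.64°, is the pattern a small number of large errors would produce, though the abstract itself does not state the cause.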

Data Driven Prediction Architecture for Autonomous Driving and its Application on Apollo Platform

Jun 11, 2020

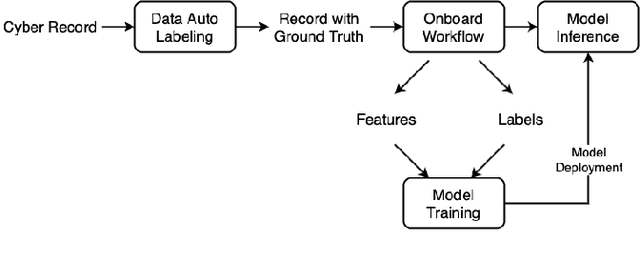

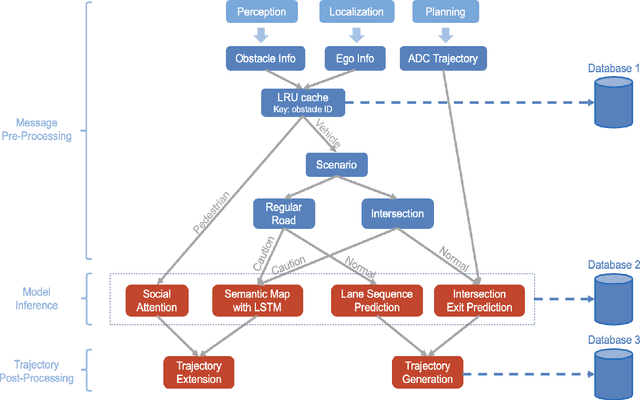

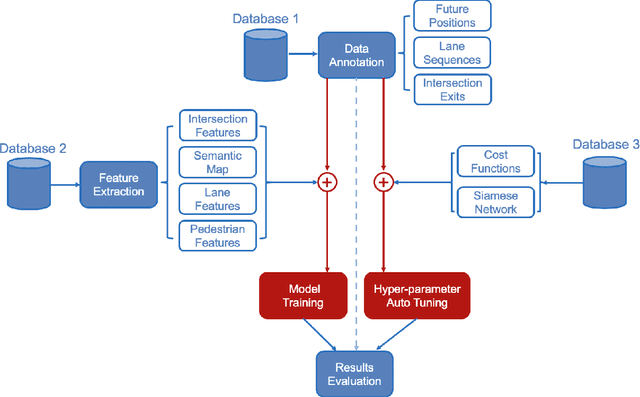

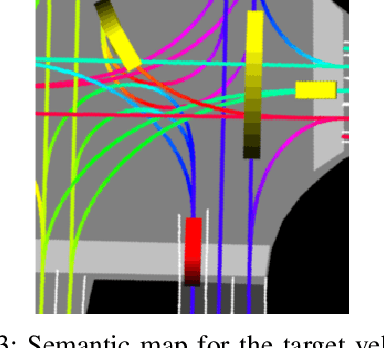

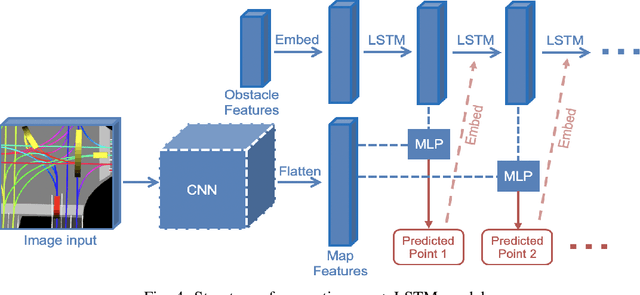

Abstract: Autonomous driving vehicles (ADVs) are on the road at large scale. For safe and efficient operations, ADVs must be able to predict the future states of, and interact with, road entities in complex, real-world driving scenarios. Migrating a well-trained prediction model from one geo-fenced area to another is essential for scaling ADV operation, and is difficult most of the time because the terrain, traffic rules, entity distributions, and driving/walking patterns can differ greatly between geo-fenced operation areas. In this paper, we introduce a highly automated learning-based prediction model pipeline, which has been deployed on the Baidu Apollo self-driving platform, to support data annotation, feature extraction, model training/tuning, and deployment for different prediction learning sub-modules. This pipeline is completely automatic without any human intervention and shows an efficiency increase of up to 400% in parameter tuning when deployed at scale in different scenarios across nations.

Lane Attention: Predicting Vehicles' Moving Trajectories by Learning Their Attention over Lanes

Sep 29, 2019

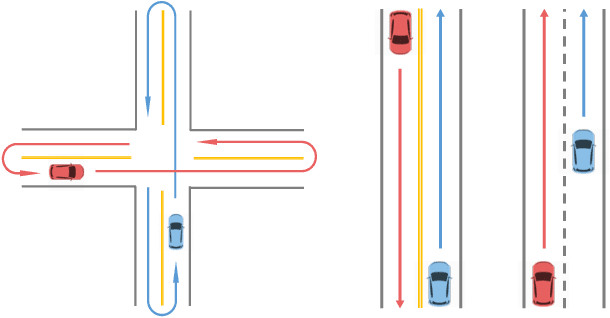

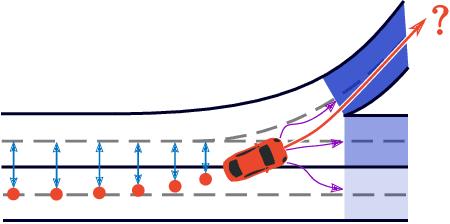

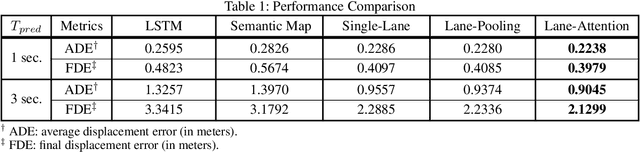

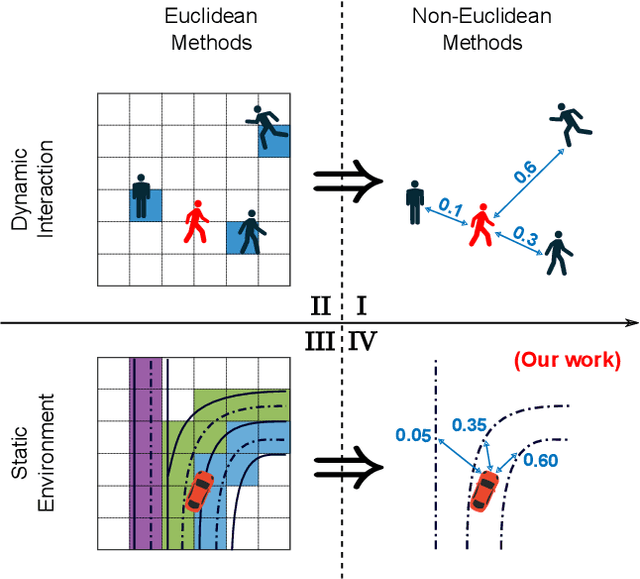

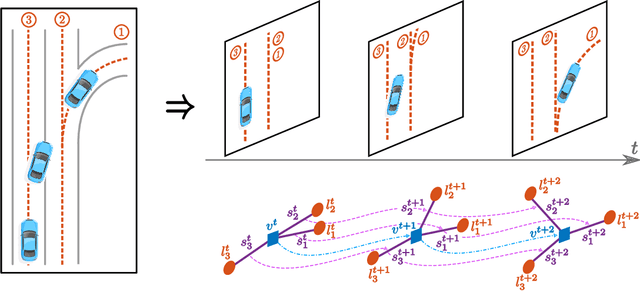

Abstract: Accurately forecasting the future movements of surrounding vehicles is essential for safe and efficient operations of autonomous driving cars. This task is difficult because a vehicle's moving trajectory is greatly determined by its driver's intention, which is often hard to estimate. By leveraging attention mechanisms along with long short-term memory (LSTM) networks, this work learns the relation between a driver's intention and the vehicle's changing positions relative to road infrastructure, and uses it to guide the prediction. Different from other state-of-the-art solutions, our work treats the on-road lanes as non-Euclidean structures, unfolds the vehicle's moving history to form a spatio-temporal graph, and uses methods from Graph Neural Networks to solve the problem. Not only is our approach a pioneering attempt in using non-Euclidean methods to process static environmental features around a predicted object, but our model also outperforms other state-of-the-art models in several metrics. The practicability and interpretability analysis of the model shows great potential for large-scale deployment in various autonomous driving systems in addition to our own.
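To make the idea of a vehicle "attending" over lanes concrete, here is a minimal sketch of generic scaled dot-product attention. It is not the paper's architecture, which combines LSTMs with graph neural network methods. The function name `lane_attention`, the feature vectors, and their dimensions are all hypothetical.

```python
import math


def lane_attention(vehicle_state, lane_features):
    """Scaled dot-product attention of one vehicle state over candidate lanes.

    Hypothetical illustration: vehicle_state is a list of floats, and
    lane_features is a list of same-length float lists, one per lane.
    Returns (weights, context): a softmax weight per lane and the
    weighted sum of the lane feature vectors.
    """
    scale = math.sqrt(len(vehicle_state))
    scores = [
        sum(v * f for v, f in zip(vehicle_state, lane)) / scale
        for lane in lane_features
    ]
    # Subtract the maximum score before exponentiating for numerical stability.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(lane_features[0])
    context = [
        sum(w * lane[i] for w, lane in zip(weights, lane_features))
        for i in range(dim)
    ]
    return weights, context


if __name__ == "__main__":
    # Made-up 2-D features: the first lane aligns best with the vehicle state.
    state = [1.0, 0.0]
    lanes = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    weights, context = lane_attention(state, lanes)
    print("attention weights:", [round(w, 3) for w in weights])
```

The weights sum to one and can be read as how strongly each lane influences the prediction, which is one reason attention-based models tend to lend themselves to the kind of interpretability analysis the abstract mentions.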
