Jiang Li

McBE: A Multi-task Chinese Bias Evaluation Benchmark for Large Language Models

Jul 02, 2025

Abstract:As large language models (LLMs) are increasingly applied to various NLP tasks, their inherent biases are gradually being revealed. Therefore, measuring biases in LLMs is crucial to mitigate their ethical risks. However, most existing bias evaluation datasets focus on English and North American culture, and their bias categories are not fully applicable to other cultures. Datasets grounded in the Chinese language and culture are scarce. More importantly, these datasets usually support only a single evaluation task and cannot evaluate bias in LLMs from multiple aspects. To address these issues, we present a Multi-task Chinese Bias Evaluation Benchmark (McBE) that includes 4,077 bias evaluation instances, covering 12 single bias categories and 82 subcategories and introducing 5 evaluation tasks, providing extensive category coverage, content diversity, and measurement comprehensiveness. Additionally, we evaluate several popular LLMs from different series and with different parameter sizes. In general, all these LLMs demonstrated varying degrees of bias. We conduct an in-depth analysis of the results, offering novel insights into bias in LLMs.

Degradation-Aware Image Enhancement via Vision-Language Classification

Jun 05, 2025

Abstract:Image degradation is a prevalent issue in various real-world applications, affecting visual quality and downstream processing tasks. In this study, we propose a novel framework that employs a Vision-Language Model (VLM) to automatically classify degraded images into predefined categories. The VLM categorizes an input image into one of four degradation types: (A) super-resolution degradation (including noise, blur, and JPEG compression), (B) reflection artifacts, (C) motion blur, or (D) no visible degradation (high-quality image). Once classified, images assigned to categories A, B, or C undergo targeted restoration using dedicated models tailored for each specific degradation type. The final output is a restored image with improved visual quality. Experimental results demonstrate the effectiveness of our approach in accurately classifying image degradations and enhancing image quality through specialized restoration models. Our method presents a scalable and automated solution for real-world image enhancement tasks, leveraging the capabilities of VLMs in conjunction with state-of-the-art restoration techniques.
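
The classify-then-route flow described in this abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `classify` callable stands in for the VLM, the three `restore_*` functions are hypothetical placeholders for the dedicated restoration models, and images are represented as plain strings to keep the example self-contained.

```python
from typing import Callable, Dict

# Hypothetical placeholders for the dedicated restoration models.
# Real models would take and return image arrays; strings are used here
# only so the routing logic can be run without any dependencies.
def restore_super_resolution(image: str) -> str:
    return f"sr({image})"

def restore_reflection(image: str) -> str:
    return f"dereflect({image})"

def restore_motion_blur(image: str) -> str:
    return f"deblur({image})"

# Category labels A-C follow the abstract; D means "no visible degradation".
RESTORERS: Dict[str, Callable[[str], str]] = {
    "A": restore_super_resolution,
    "B": restore_reflection,
    "C": restore_motion_blur,
}

def enhance(image: str, classify: Callable[[str], str]) -> str:
    """Route an image to a restorer based on the classifier's label.

    `classify` is an assumed interface for the VLM: it returns one of
    "A", "B", "C", or "D".
    """
    label = classify(image)
    if label == "D":
        # High-quality input: return it unchanged.
        return image
    if label not in RESTORERS:
        raise ValueError(f"unexpected degradation label: {label!r}")
    return RESTORERS[label](image)
```

Keeping the label-to-model mapping in a dictionary means a new degradation category only requires a new entry, which is one plausible reading of the "scalable" claim.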

Joint Masked Reconstruction and Contrastive Learning for Mining Interactions Between Proteins

Mar 06, 2025

Abstract:Protein-protein interaction (PPI) prediction is an instrumental means of elucidating the mechanisms underlying cellular operations, holding significant practical implications for pharmaceutical development and clinical treatment. Presently, the majority of research methods primarily concentrate on the analysis of amino acid sequences, while investigations predicated on protein structures remain in the nascent stages of exploration. Despite the emergence of several structure-based algorithms in recent years, these are still confronted with inherent challenges: (1) the extraction of intrinsic structural information of proteins typically necessitates the expenditure of substantial computational resources; (2) these models are overly reliant on seen protein data, struggling to effectively unearth interaction cues between unknown proteins. To further propel advancements in this domain, this paper introduces a novel PPI prediction method that joins masked reconstruction and contrastive learning, termed JmcPPI. This methodology divides the PPI prediction task into two distinct phases: during the residue structure encoding phase, JmcPPI devises two feature reconstruction tasks and employs a graph attention mechanism to capture structural information between residues; during the protein interaction inference phase, JmcPPI perturbs the original PPI graph and employs a multi-graph contrastive learning strategy to thoroughly mine extrinsic interaction information of novel proteins. Extensive experiments conducted on three widely utilized PPI datasets demonstrate that JmcPPI surpasses the best existing baseline models across various data partition schemes. The associated code can be accessed via https://github.com/lijfrank-open/JmcPPI.

Extracting Inter-Protein Interactions Via Multitasking Graph Structure Learning

Jan 29, 2025

Abstract:Identifying protein-protein interactions (PPI) is crucial for gaining in-depth insights into numerous biological processes within cells and holds significant guiding value in areas such as drug development and disease treatment. Currently, most PPI prediction methods focus primarily on the study of protein sequences, neglecting the critical role of the internal structure of proteins. This paper proposes a novel PPI prediction method named MgslaPPI, which utilizes graph attention to mine protein structural information and enhances the expressive power of the protein encoder through a multitask learning strategy. Specifically, we decompose the end-to-end PPI prediction process into two stages: amino acid residue reconstruction (A2RR) and protein interaction prediction (PIP). In the A2RR stage, we employ a graph attention-based residue reconstruction method to explore the internal relationships and features of proteins. In the PIP stage, in addition to the basic interaction prediction task, we introduce two auxiliary tasks, i.e., protein feature reconstruction (PFR) and masked interaction prediction (MIP). The PFR task aims to reconstruct the representation of proteins in the PIP stage, while the MIP task uses partially masked protein features for PPI prediction, with both working in concert to prompt MgslaPPI to capture more useful information. Experimental results demonstrate that MgslaPPI significantly outperforms existing state-of-the-art methods under various data partitioning schemes.

Data-Driven Gradient Optimization for Field Emission Management in a Superconducting Radio-Frequency Linac

Nov 11, 2024

Abstract:Field emission can cause significant problems in superconducting radio-frequency linear accelerators (linacs). When cavity gradients are pushed higher, radiation levels within the linacs may rise exponentially, causing degradation of many nearby systems. This research aims to utilize machine learning with uncertainty quantification to predict radiation levels at multiple locations throughout the linacs and ultimately optimize cavity gradients to reduce field emission induced radiation while maintaining the total linac energy gain necessary for the experimental physics program. The optimized solutions show over 40% reductions for both neutron and gamma radiation from the standard operational settings.

Unleashing the Power of Large Language Models in Zero-shot Relation Extraction via Self-Prompting

Oct 02, 2024

Abstract:Recent research in zero-shot Relation Extraction (RE) has focused on using Large Language Models (LLMs) due to their impressive zero-shot capabilities. However, current methods often perform suboptimally, mainly due to a lack of detailed, context-specific prompts needed for understanding various sentences and relations. To address this, we introduce the Self-Prompting framework, a novel method designed to fully harness the embedded RE knowledge within LLMs. Specifically, our framework employs a three-stage diversity approach to prompt LLMs, generating multiple synthetic samples that encapsulate specific relations from scratch. These generated samples act as in-context learning samples, offering explicit and context-specific guidance to efficiently prompt LLMs for RE. Experimental evaluations on benchmark datasets show our approach outperforms existing LLM-based zero-shot RE methods. Additionally, our experiments confirm the effectiveness of our generation pipeline in producing high-quality synthetic data that enhances performance.

Tracing Intricate Cues in Dialogue: Joint Graph Structure and Sentiment Dynamics for Multimodal Emotion Recognition

Jul 31, 2024

Abstract:Multimodal emotion recognition in conversation (MERC) has garnered substantial research attention recently. Existing MERC methods face several challenges: (1) they fail to fully harness direct inter-modal cues, possibly leading to less-than-thorough cross-modal modeling; (2) they concurrently extract information from the same and different modalities at each network layer, potentially triggering conflicts from the fusion of multi-source data; (3) they lack the agility required to detect dynamic sentimental changes, perhaps resulting in inaccurate classification of utterances with abrupt sentiment shifts. To address these issues, a novel approach named GraphSmile is proposed for tracking intricate emotional cues in multimodal dialogues. GraphSmile comprises two key components, i.e., GSF and SDP modules. GSF ingeniously leverages graph structures to alternately assimilate inter-modal and intra-modal emotional dependencies layer by layer, adequately capturing cross-modal cues while effectively circumventing fusion conflicts. SDP is an auxiliary task to explicitly delineate the sentiment dynamics between utterances, promoting the model's ability to distinguish sentimental discrepancies. Furthermore, GraphSmile is effortlessly applied to multimodal sentiment analysis in conversation (MSAC), forging a unified multimodal affective model capable of executing MERC and MSAC tasks. Empirical results on multiple benchmarks demonstrate that GraphSmile can handle complex emotional and sentimental patterns, significantly outperforming baseline models.

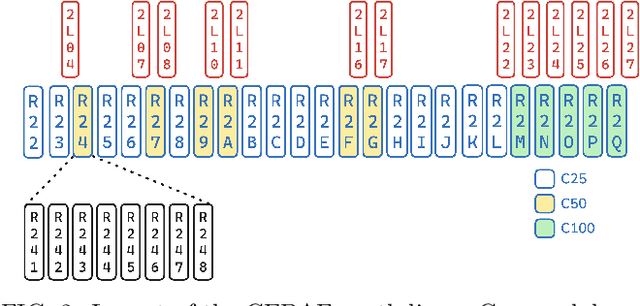

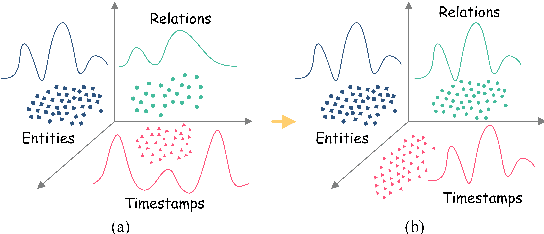

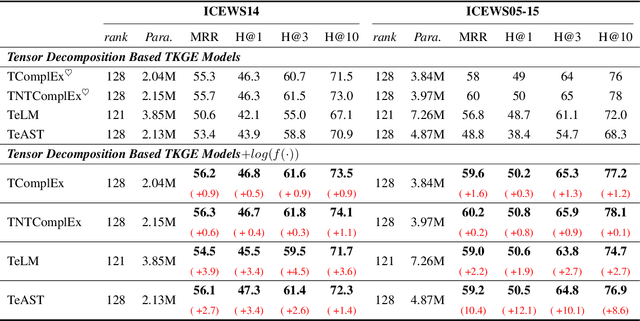

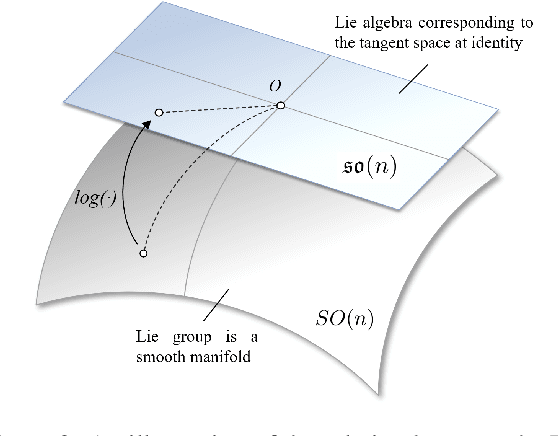

Mitigating Heterogeneity among Factor Tensors via Lie Group Manifolds for Tensor Decomposition Based Temporal Knowledge Graph Embedding

Apr 14, 2024

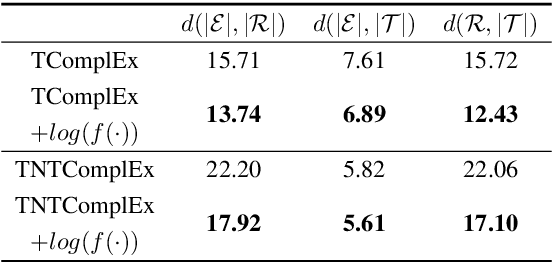

Abstract:Recent studies have highlighted the effectiveness of tensor decomposition methods in the Temporal Knowledge Graph Embedding (TKGE) task. However, we found that inherent heterogeneity among factor tensors in tensor decomposition significantly hinders the tensor fusion process and further limits the performance of link prediction. To overcome this limitation, we introduce a novel method that maps factor tensors onto a unified smooth Lie group manifold so that the distribution of factor tensors in tensor decomposition becomes approximately homogeneous. We provide a theoretical proof of our motivation that homogeneous tensors are more effective than heterogeneous tensors in tensor fusion and in approximating the target for tensor decomposition based TKGE methods. The proposed method can be directly integrated into existing tensor decomposition based TKGE methods without introducing extra parameters. Extensive experiments demonstrate the effectiveness of our method in mitigating the heterogeneity and in enhancing the tensor decomposition based TKGE models.

Towards Architecture-Insensitive Untrained Network Priors for Accelerated MRI Reconstruction

Dec 15, 2023

Abstract:Untrained neural networks pioneered by Deep Image Prior (DIP) have recently enabled MRI reconstruction without requiring fully-sampled measurements for training. Their success is widely attributed to the implicit regularization induced by suitable network architectures. However, the lack of understanding of such architectural priors results in superfluous design choices and sub-optimal outcomes. This work aims to simplify the architectural design decisions for DIP-MRI to facilitate its practical deployment. We observe that certain architectural components are more prone to causing overfitting regardless of the number of parameters, incurring severe reconstruction artifacts by hindering accurate extrapolation on the un-acquired measurements. We interpret this phenomenon from a frequency perspective and find that the architectural characteristics favoring low frequencies, i.e., deep and narrow with unlearnt upsampling, can lead to enhanced generalization and hence better reconstruction. Building on this insight, we propose two architecture-agnostic remedies: one to constrain the frequency range of the white-noise input and the other to penalize the Lipschitz constants of the network. We demonstrate that even with just one extra line of code on the input, the performance gap between the ill-designed models and the high-performing ones can be closed. These results signify that for the first time, architectural biases on untrained MRI reconstruction can be mitigated without architectural modifications.
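
To make the first remedy concrete, one way to constrain the frequency range of a white-noise input is to low-pass it in the Fourier domain. The sketch below is an illustration only: the abstract does not say which filter the authors use, so the ideal (hard cutoff) low-pass mask, the function name `band_limited_noise`, and the `cutoff` parameter (in cycles per sample, between 0 and 0.5) are all assumptions.

```python
import numpy as np

def band_limited_noise(shape, cutoff, seed=0):
    """Return 2D Gaussian white noise with frequencies above `cutoff` removed.

    Assumption: an ideal low-pass mask is used; the paper's actual filter
    may differ (for example, a Gaussian blur).
    """
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(shape)

    # Radial frequency of every FFT bin, in cycles per sample.
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    keep = np.sqrt(fx ** 2 + fy ** 2) <= cutoff

    # Zero out the high-frequency bins and transform back.
    spectrum = np.fft.fft2(noise) * keep
    # The mask is symmetric, so the result is real up to rounding error.
    return np.fft.ifft2(spectrum).real
```

In a DIP-style setup, the returned array would replace the plain white-noise tensor fed to the untrained network; a smaller `cutoff` biases the input toward low frequencies.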

Watch the Speakers: A Hybrid Continuous Attribution Network for Emotion Recognition in Conversation With Emotion Disentanglement

Sep 19, 2023

Abstract:Emotion Recognition in Conversation (ERC) has attracted widespread attention in the natural language processing field due to its enormous potential for practical applications. Existing ERC methods face challenges in generalizing to diverse scenarios due to insufficient modeling of context, ambiguous capture of dialogue relationships, and overfitting in speaker modeling. In this work, we present a Hybrid Continuous Attributive Network (HCAN) to address these issues from the perspective of emotional continuation and emotional attribution. Specifically, HCAN adopts a hybrid recurrent and attention-based module to model global emotion continuity. Then a novel Emotional Attribution Encoding (EAE) is proposed to model intra- and inter-emotional attribution for each utterance. Moreover, to enhance the robustness of the model in speaker modeling and improve its performance in different scenarios, a comprehensive loss function, the emotional cognitive loss $\mathcal{L}_{\rm EC}$, is proposed to alleviate emotional drift and overcome the overfitting of the model to speaker modeling. Our model achieves state-of-the-art performance on three datasets, demonstrating the superiority of our work. Extensive comparative experiments and ablation studies on three benchmarks are conducted to provide evidence supporting the efficacy of each module. Further experiments on generalization ability show the plug-and-play nature of the EAE module in our method.
