
Riad Suleiman

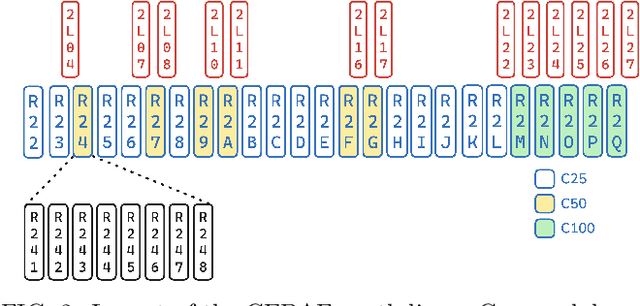

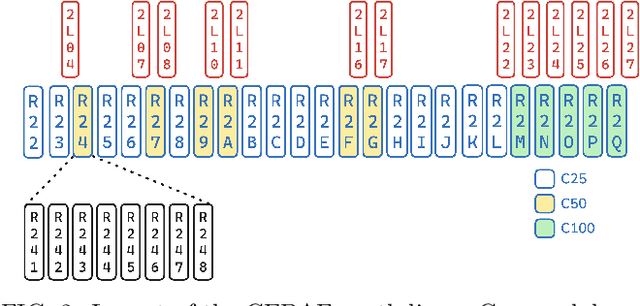

Data-Driven Gradient Optimization for Field Emission Management in a Superconducting Radio-Frequency Linac

Nov 11, 2024

Abstract: Field emission can cause significant problems in superconducting radio-frequency linear accelerators (linacs). When cavity gradients are pushed higher, radiation levels within the linacs may rise exponentially, degrading many nearby systems. This research uses machine learning with uncertainty quantification to predict radiation levels at multiple locations throughout the linacs, and ultimately to optimize cavity gradients so that field-emission-induced radiation is reduced while the total linac energy gain required by the experimental physics program is maintained. The optimized solutions reduce both neutron and gamma radiation by more than 40% relative to the standard operational settings.

* 14 pages, 6 figures, 10 tables
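
The trade-off described in the abstract (lower radiation at a fixed total energy gain) can be illustrated with a toy constrained optimization. The sketch below is not the paper's method: it replaces the machine-learning surrogate with a hypothetical per-cavity model `a_i * exp(b_i * g_i)`, assumes all cavities have equal length so that the energy-gain constraint reduces to a fixed sum of gradients, and uses made-up coefficients and bounds. All function and parameter names are illustrative assumptions.

```python
import math


def total_radiation(gradients, a, b):
    """Toy radiation model: each cavity contributes a_i * exp(b_i * g_i)."""
    return sum(ai * math.exp(bi * g) for g, ai, bi in zip(gradients, a, b))


def optimize_gradients(a, b, total, g_min, g_max, iters=200):
    """Minimize sum_i a_i*exp(b_i*g_i) subject to sum_i g_i == total
    and g_min <= g_i <= g_max, assuming every a_i and b_i is positive.

    The problem is convex, so the optimum satisfies
    a_i * b_i * exp(b_i * g_i) = lam for every cavity not at a bound.
    We bisect on log(lam) until the gradients sum to the target.
    """
    n = len(a)
    if not n * g_min <= total <= n * g_max:
        raise ValueError("energy-gain target is unreachable within the bounds")

    def gradients_for(log_lam):
        # Solve the stationarity condition per cavity, then clip to bounds.
        return [
            min(g_max, max(g_min, (log_lam - math.log(ai * bi)) / bi))
            for ai, bi in zip(a, b)
        ]

    # At `lo` every cavity sits at g_min; at `hi` every cavity sits at g_max.
    lo = min(math.log(ai * bi) + bi * g_min for ai, bi in zip(a, b))
    hi = max(math.log(ai * bi) + bi * g_max for ai, bi in zip(a, b))
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if sum(gradients_for(mid)) < total:
            lo = mid
        else:
            hi = mid
    return gradients_for(0.5 * (lo + hi))


# Hypothetical three-cavity example; the numbers are illustrative only.
a = [1e-3, 1e-3, 1e-3]      # assumed radiation scale per cavity
b = [0.3, 0.6, 0.9]         # assumed field-emission sensitivity per cavity
baseline = [12.0, 12.0, 12.0]
optimized = optimize_gradients(a, b, total=sum(baseline), g_min=5.0, g_max=20.0)

print("optimized gradients:", [round(g, 3) for g in optimized])
print("baseline radiation: ", total_radiation(baseline, a, b))
print("optimized radiation:", total_radiation(optimized, a, b))
```

In this toy setting the optimizer shifts gradient away from the cavities with the steepest exponential response and toward the more benign ones, keeping the sum fixed. A realistic treatment would replace the closed-form model with a learned predictor and account for its uncertainty, as the abstract describes.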
