Jianda Han

RINGO: Real-time Navigation with a Guiding Trajectory for Aerial Manipulators in Unknown Environments

Apr 14, 2025

Abstract: Motion planning for aerial manipulators in constrained environments has typically been limited to known environments or simplified to that of multi-rotors, which leads to poor adaptability and overly conservative trajectories. This paper presents RINGO: Real-time Navigation with a Guiding Trajectory, a novel planning framework that enables aerial manipulators to navigate unknown environments in real time. The proposed method simultaneously considers the positions of both the multi-rotor and the end-effector. A pre-obtained multi-rotor trajectory serves as a guiding reference, allowing the end-effector to generate a smooth, collision-free, and workspace-compatible trajectory. Leveraging the convex hull property of B-spline curves, we theoretically guarantee that the trajectory remains within the reachable workspace. To the best of our knowledge, this is the first work that enables real-time navigation of aerial manipulators in unknown environments. The simulation and experimental results show the effectiveness of the proposed method. The proposed method generates less conservative trajectories than approaches that consider only the multi-rotor.
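The convex hull property mentioned in the abstract can be illustrated with a minimal sketch. It assumes a uniform cubic B-spline and an axis-aligned box as a stand-in for the reachable workspace. The abstract states neither the spline order nor the workspace model, so both are assumptions here, as are the function names. Each point on a segment is a convex combination of four consecutive control points, so keeping the control points inside a convex set keeps the whole curve inside it.

```python
import numpy as np

# Basis matrix of a uniform cubic B-spline (assumed spline type, not taken from the paper).
M = np.array([[1, 4, 1, 0],
              [-3, 0, 3, 0],
              [3, -6, 3, 0],
              [-1, 3, -3, 1]], dtype=float) / 6.0


def basis_weights(u):
    """Weights of the 4 active control points at local parameter u in [0, 1]."""
    return np.array([1.0, u, u * u, u ** 3]) @ M


def evaluate_segment(ctrl, i, u):
    """Point on segment i: a convex combination of ctrl[i], ..., ctrl[i + 3]."""
    return basis_weights(u) @ ctrl[i:i + 4]


def sample_curve(ctrl, samples_per_segment=20):
    """Sample every segment of the spline defined by the control points."""
    pts = [evaluate_segment(ctrl, i, u)
           for i in range(len(ctrl) - 3)
           for u in np.linspace(0.0, 1.0, samples_per_segment)]
    return np.array(pts)


def inside_box(points, lower, upper, tol=1e-9):
    """True if every point lies in the axis-aligned box [lower, upper]."""
    return bool(np.all(points >= lower - tol) and np.all(points <= upper + tol))


# Hypothetical workspace box and random control points placed inside it.
rng = np.random.default_rng(0)
lower = np.array([-0.3, -0.3, -0.5])
upper = np.array([0.3, 0.3, 0.0])
ctrl = rng.uniform(lower, upper, size=(8, 3))
curve = sample_curve(ctrl)
print(inside_box(curve, lower, upper))
```

Because the basis weights are non-negative and sum to one, a constraint placed on the control points carries over to the continuous curve, so a planner does not need to check every sampled point.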

Adaptive RISE Control for Dual-Arm Unmanned Aerial Manipulator Systems with Deep Neural Networks

Apr 08, 2025

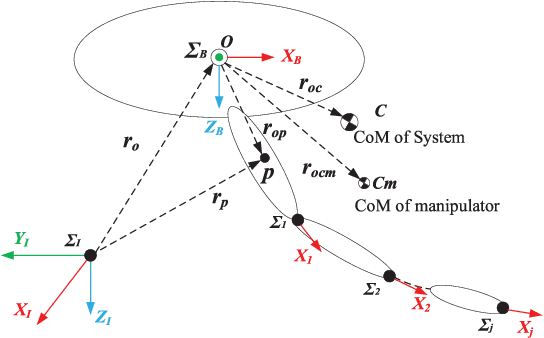

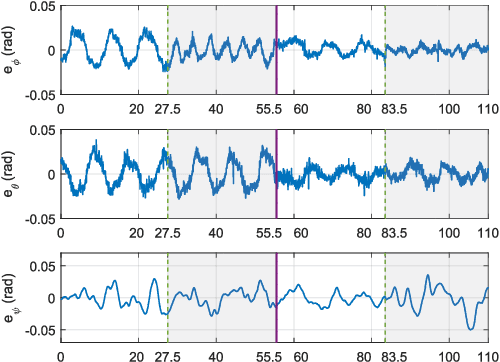

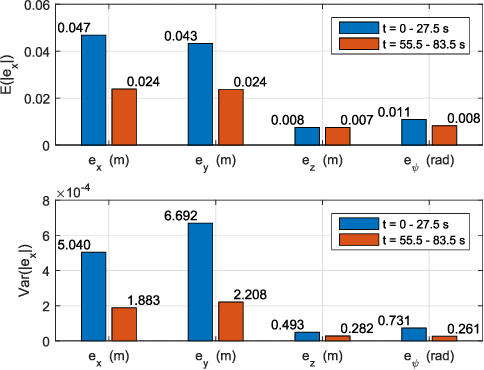

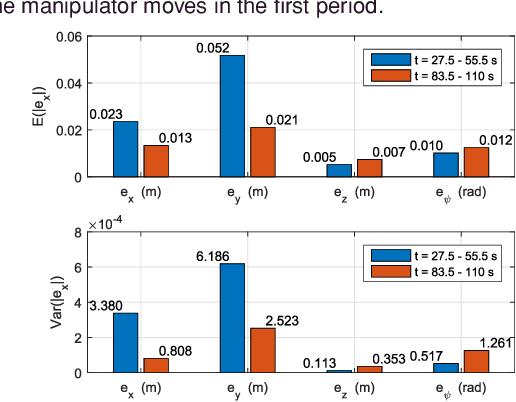

Abstract: The unmanned aerial manipulator system, consisting of a multirotor UAV (unmanned aerial vehicle) and a manipulator, has attracted considerable interest from researchers. Nevertheless, the operation of a dual-arm manipulator poses a dynamic challenge, as the CoM (center of mass) of the system changes with manipulator movement, potentially impacting the multirotor UAV. Additionally, unmodeled effects, parameter uncertainties, and external disturbances can significantly degrade control performance, leading to unforeseen dangers. To tackle these issues, this paper proposes a nonlinear adaptive RISE (robust integral of the sign of the error) controller based on a DNN (deep neural network). First, the kinematic and dynamic models of the dual-arm aerial manipulator are established. Subsequently, the adaptive RISE controller is proposed with a DNN feedforward term to address both internal and external challenges. By employing Lyapunov techniques, the asymptotic convergence of the tracking error signals is rigorously guaranteed. Notably, this paper presents the first DNN-based adaptive RISE controller design accompanied by a comprehensive stability analysis. To validate the practicality and robustness of the proposed control approach, several groups of hardware experiments are conducted. The results confirm the efficacy of the developed methodology in real-world scenarios and offer insights into the performance of the dual-arm aerial manipulator system.
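For context, a RISE feedback term is commonly written in the control literature in the generic form below. This is only a sketch of that standard structure. The abstract does not give the paper's control law, gains, or DNN update law, so the symbols $e_1$, $e_2$, $\alpha_1$, $\alpha_2$, $k_s$, $\beta$, and the feedforward estimate $\hat{f}$ are assumptions introduced for illustration.

$$
\begin{aligned}
e_1 &= q_d - q, \qquad e_2 = \dot{e}_1 + \alpha_1 e_1, \\
\mu(t) &= (k_s + 1)\,e_2(t) - (k_s + 1)\,e_2(0) + \int_0^t \Big[(k_s + 1)\,\alpha_2\,e_2(\sigma) + \beta\,\operatorname{sgn}\big(e_2(\sigma)\big)\Big]\,d\sigma, \\
\tau &= \hat{f} + \mu .
\end{aligned}
$$

Here $q_d$ is the desired trajectory, $\tau$ is the control input, and $\hat{f}$ stands in for a learned feedforward term such as a DNN output. Because the sign function appears only inside the integral, $\mu(t)$ is continuous, which is the usual reason for choosing RISE over discontinuous sliding-mode feedback.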

General Place Recognition Survey: Towards Real-World Autonomy

May 08, 2024

Abstract: In robotics, the quest for real-world autonomy, meaning systems capable of large-scale and long-term operation, has positioned place recognition (PR) as a cornerstone technology. Despite the PR community's remarkable strides over the past two decades, which have drawn attention from fields such as computer vision and robotics, developing PR methods that sufficiently support real-world robotic systems remains a challenge. This paper aims to bridge this gap by highlighting the crucial role of PR within the framework of Simultaneous Localization and Mapping (SLAM) 2.0. This new phase in robotic navigation calls for scalable, adaptable, and efficient PR solutions that integrate advanced artificial intelligence (AI) technologies. To this end, we provide a comprehensive review of the current state-of-the-art (SOTA) advancements in PR, alongside the remaining challenges, and underscore its broad applications in robotics. This paper begins with an exploration of PR's formulation and key research challenges. We extensively review the literature, focusing on methods for place representation and solutions to various PR challenges. Applications showcasing PR's potential in robotics, key PR datasets, and open-source libraries are discussed. We also highlight our open-source package, aimed at new development and benchmarking for general PR. We conclude with a discussion of PR's future directions, accompanied by a summary of the literature covered and access to our open-source library, available to the robotics community at: https://github.com/MetaSLAM/GPRS.

Robust Control of An Aerial Manipulator Based on A Variable Inertia Parameters Model

Jan 09, 2024

Abstract: An aerial manipulator, which is composed of a UAV (Unmanned Aerial Vehicle) and a multi-link manipulator and can perform aerial manipulation, has shown great application potential. However, dynamic coupling between the UAV and the manipulator makes it difficult to control the aerial manipulator with high performance. In this paper, the system modeling and control problems of the aerial manipulator are studied. First, a UAV dynamic model is proposed that accounts for the dynamic coupling from an attached manipulator, which is treated as a disturbance to the UAV. In the dynamic model, the disturbance is affected by the variable inertia parameters of the aerial manipulator system. Then, based on the proposed dynamic model, a disturbance-compensation robust $H_{\infty}$ controller is designed to stabilize the flight of the UAV while the manipulator is in operation. Finally, experiments are conducted, and the results demonstrate the feasibility and validity of the proposed control scheme.

Synchronous Adversarial Feature Learning for LiDAR based Loop Closure Detection

Apr 05, 2018

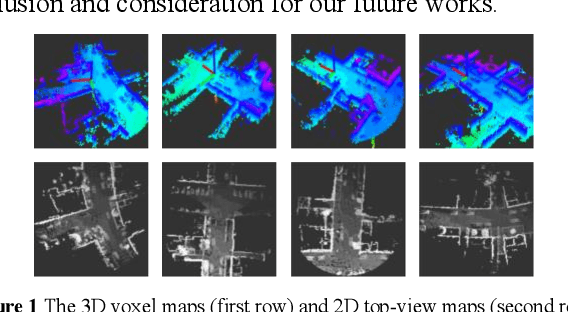

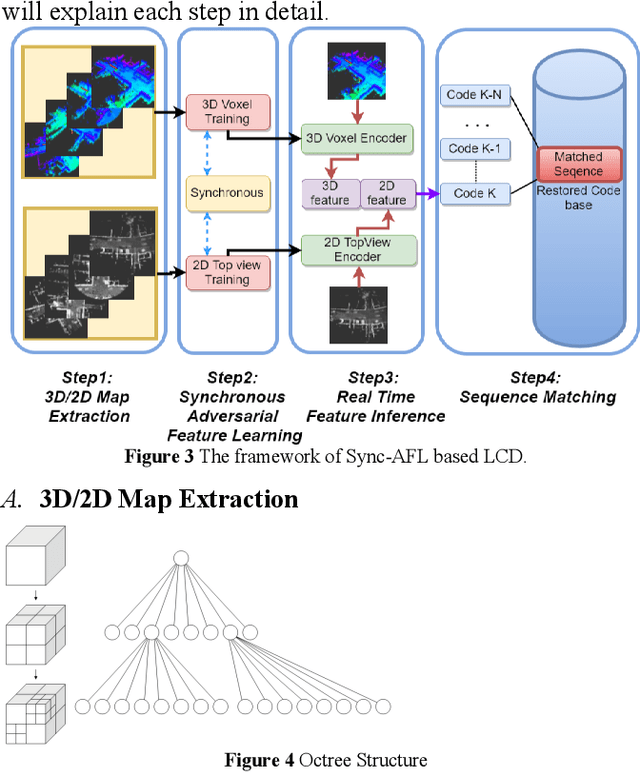

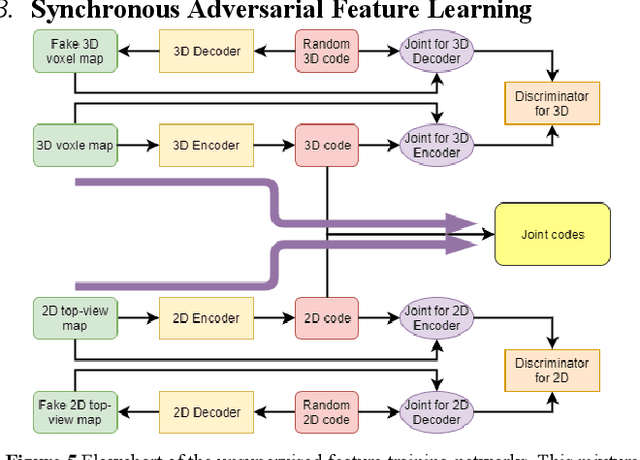

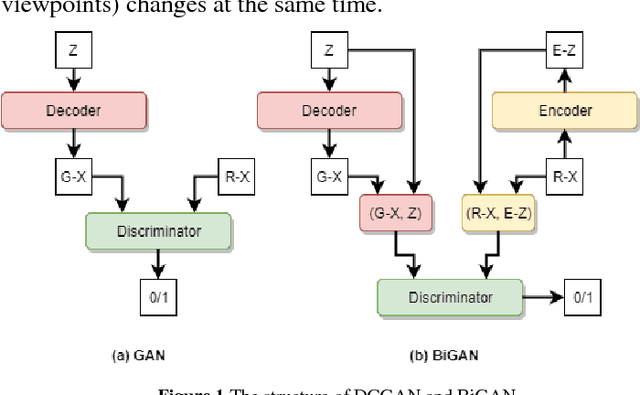

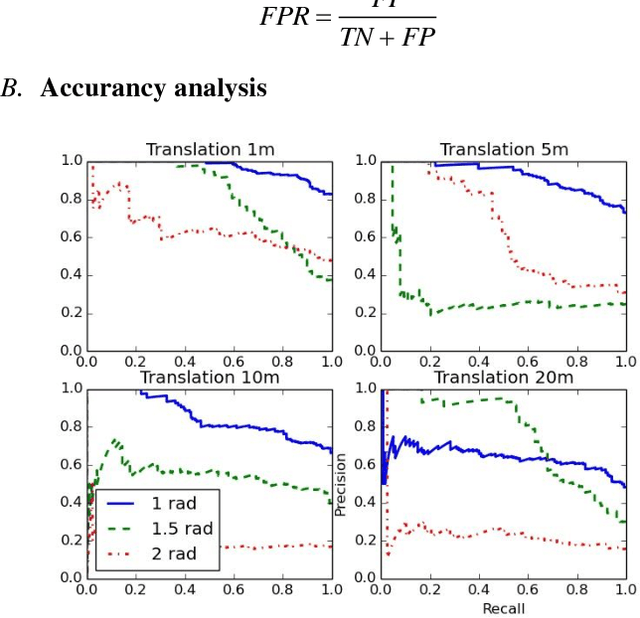

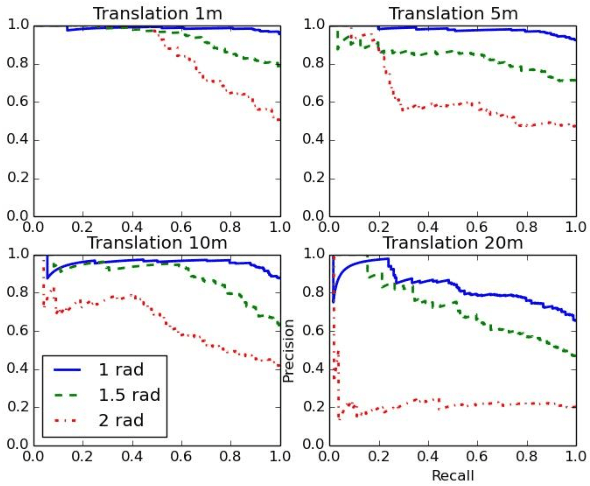

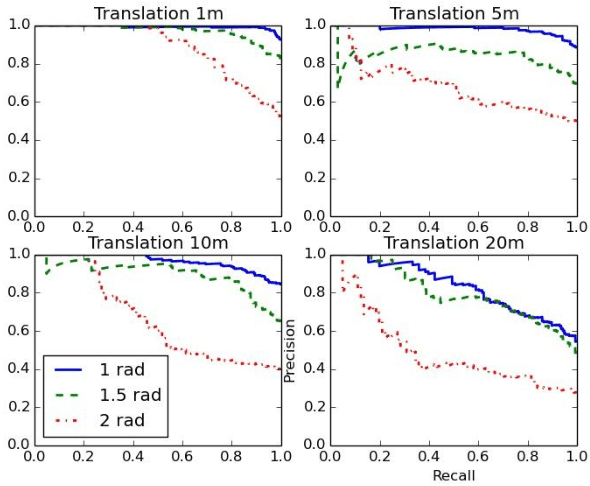

Abstract: Loop Closure Detection (LCD) is an essential module in the simultaneous localization and mapping (SLAM) task. In current appearance-based SLAM methods, the visual inputs are usually affected by illumination, appearance, and viewpoint changes. Compared with visual inputs, light detection and ranging (LiDAR) based point-cloud inputs are invariant to illumination and appearance changes because LiDAR is an active sensor. In this paper, we extract 3D voxel maps and 2D top-view maps from LiDAR inputs: the former capture the local geometry in a simplified 3D voxel format, and the latter capture the local road structure in a 2D image format. However, the main challenge is to obtain efficient features from the 3D and 2D maps that are robust to viewpoint differences. In this paper, we propose a synchronous adversarial feature learning method for the LCD task, which can learn higher-level abstract features from different domains without any labeled data. To the best of our knowledge, this work is the first to extract multi-domain adversarial features for the LCD task in real time. To investigate the performance, we test the proposed method on the KITTI odometry dataset. Extensive experimental results show that the proposed method can largely improve LCD accuracy even under large viewpoint differences.
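To make the LCD step concrete, the sketch below shows one common way to turn per-frame feature vectors into loop-closure candidates: compare each frame against sufficiently older frames by cosine similarity and keep the best match above a threshold. This is an illustrative assumption rather than the paper's matching procedure. The random descriptors stand in for learned features, and the function name, `threshold`, and `min_gap` are hypothetical.

```python
import numpy as np


def detect_loop_closures(descriptors, threshold=0.9, min_gap=10):
    """Return (query_idx, match_idx, similarity) for frames that revisit a place.

    descriptors: array of shape (num_frames, dim), one feature vector per frame.
    min_gap: frames closer than this in time are ignored, so that a frame is
             not matched against its immediate predecessors.
    """
    d = np.asarray(descriptors, dtype=float)
    norms = np.maximum(np.linalg.norm(d, axis=1, keepdims=True), 1e-12)
    d = d / norms                       # unit-length descriptors
    sim = d @ d.T                       # pairwise cosine similarity
    matches = []
    for i in range(len(d)):
        last = i - min_gap              # newest frame still allowed as a match
        if last < 0:
            continue
        j = int(np.argmax(sim[i, :last + 1]))
        if sim[i, j] >= threshold:
            matches.append((i, j, float(sim[i, j])))
    return matches


# Synthetic stand-in for learned features: frame 25 revisits the place seen at frame 3.
rng = np.random.default_rng(0)
descs = rng.normal(size=(30, 64))
descs[25] = descs[3] + 0.01 * rng.normal(size=64)
loops = detect_loop_closures(descs)
print([(q, m) for q, m, _ in loops])
```

In practice the threshold trades precision against recall, and a learned descriptor that is robust to viewpoint changes is what keeps the similarity high when the same place is revisited from a different pose.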

Towards Stable Adversarial Feature Learning for LiDAR based Loop Closure Detection

Nov 21, 2017

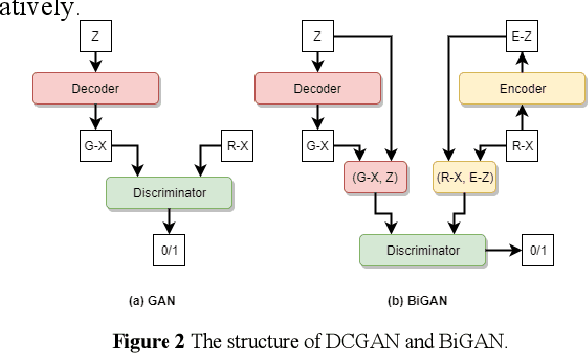

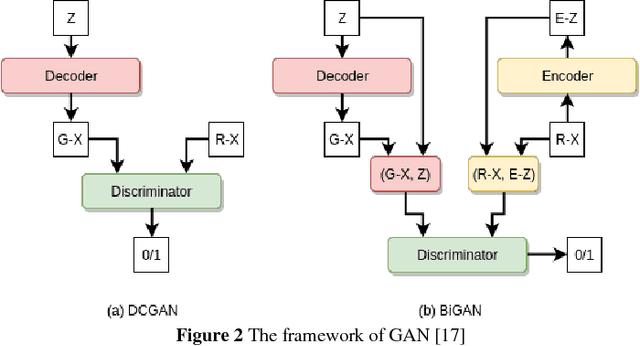

Abstract: Stable feature extraction is the key to the loop closure detection (LCD) task in the simultaneous localization and mapping (SLAM) framework. In our paper, feature extraction is performed using generative adversarial network (GAN) based unsupervised learning. GANs are powerful generative models; however, GAN-based adversarial learning suffers from training instability. We find that the data-code joint distribution in adversarial learning is a more complex manifold than in the original GANs, and that the loss function that drives the attractive force between the synthesis and target distributions cannot support efficient latent code learning for the LCD task. To relieve this problem, we combine the original adversarial learning with an inner cycle restriction module and a side updating module. To the best of our knowledge, we are the first to extract adversarial features from light detection and ranging (LiDAR) based inputs, which are invariant to the illumination and appearance changes that affect visual inputs. We use the KITTI odometry dataset to investigate the performance of our method. Extensive experimental results show that, with the same LiDAR projection maps, the proposed features are more stable in training and significantly more robust to viewpoint differences than other state-of-the-art methods.

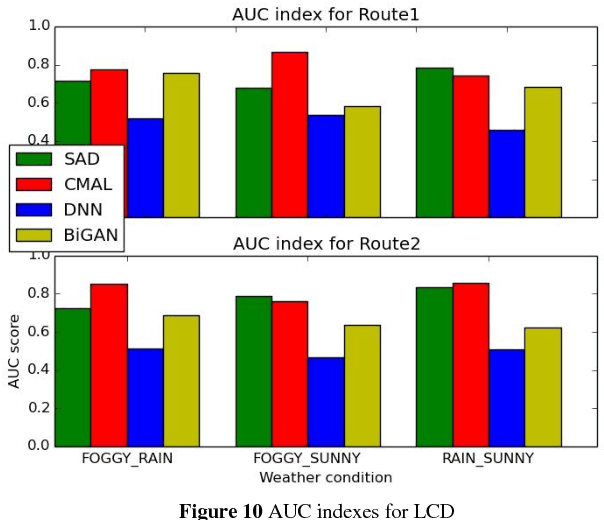

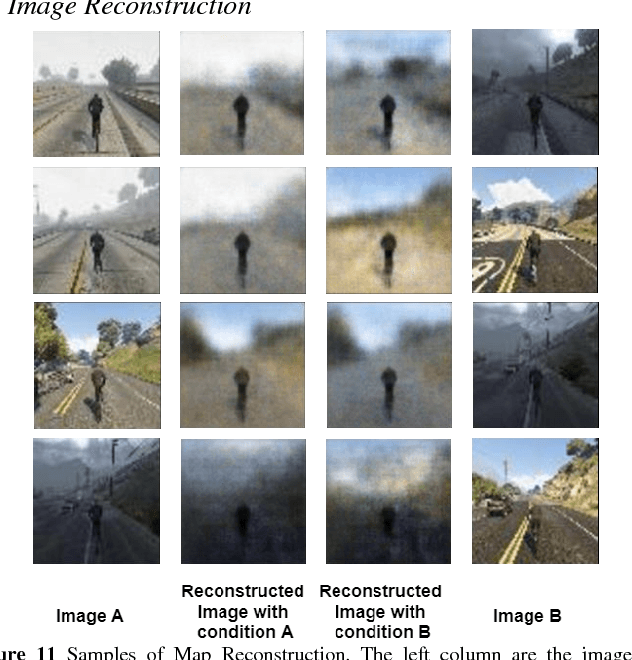

Condition directed Multi-domain Adversarial Learning for Loop Closure Detection

Nov 21, 2017

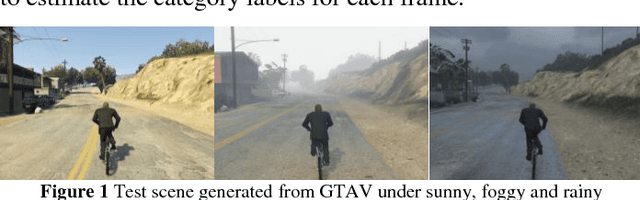

Abstract: Loop closure detection (LCD) is the key module in appearance-based simultaneous localization and mapping (SLAM). However, in real life, the appearance of visual inputs is usually affected by illumination and texture changes under different weather conditions. Traditional LCD methods usually rely on handcrafted features; however, such methods are unable to capture descriptions that are common across different weather conditions, such as rainy, foggy, and sunny. Furthermore, traditional handcrafted features cannot capture a high-level understanding of local scenes. In this paper, we propose a novel condition-directed multi-domain adversarial learning method, in which the weather condition serves as the direction for feature inference. Based on generative adversarial networks (GANs) and a classification network, the proposed method can extract high-level weather-invariant features directly from the raw data. The only labels required are the weather conditions of each visual input. Experiments are conducted in the GTAV game simulator, which can generate lifelike outdoor scenes under different weather conditions. The LCD results show that our method significantly outperforms state-of-the-art methods.
