Jian Pu

Decoupling Scene Perception and Ego Status: A Multi-Context Fusion Approach for Enhanced Generalization in End-to-End Autonomous Driving

Nov 18, 2025

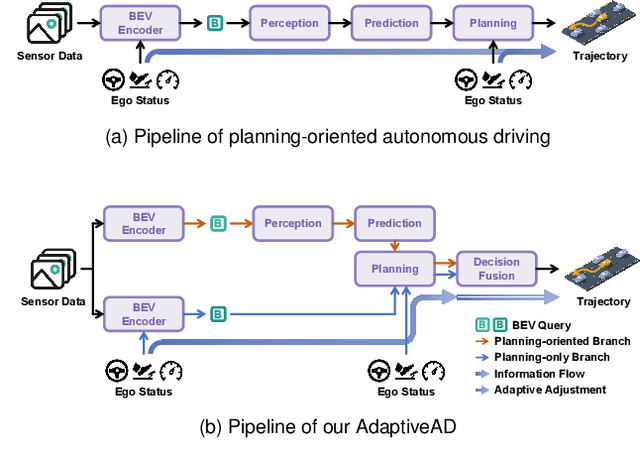

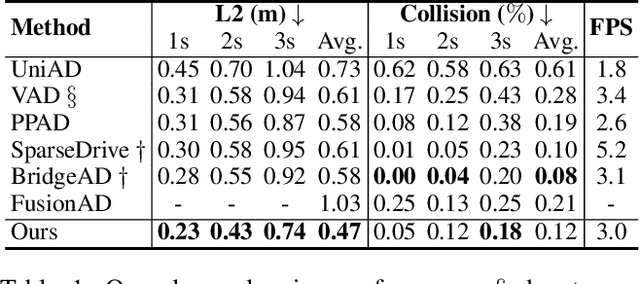

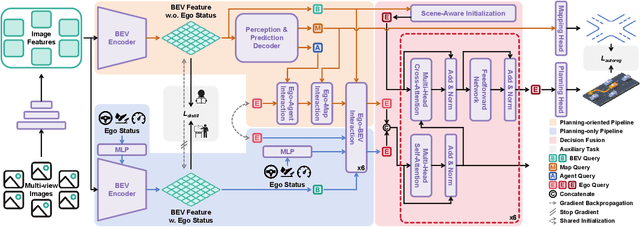

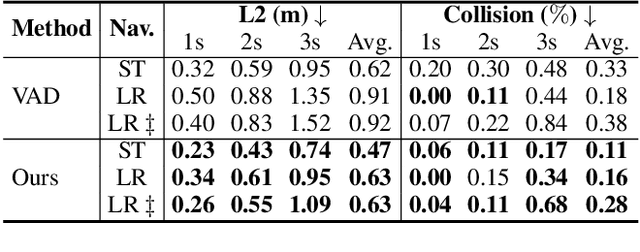

Abstract: Modular design of planning-oriented autonomous driving has markedly advanced end-to-end systems. However, existing architectures remain constrained by an over-reliance on ego status, hindering generalization and robust scene understanding. We identify the root cause as an inherent design within these architectures that allows ego status to be easily leveraged as a shortcut. Specifically, the premature fusion of ego status in the upstream BEV encoder allows an information flow from this strong prior to dominate the downstream planning module. To address this challenge, we propose AdaptiveAD, an architectural-level solution based on a multi-context fusion strategy. Its core is a dual-branch structure that explicitly decouples scene perception and ego status. One branch performs scene-driven reasoning based on multi-task learning, but with ego status deliberately omitted from the BEV encoder, while the other conducts ego-driven reasoning based solely on the planning task. A scene-aware fusion module then adaptively integrates the complementary decisions from the two branches to form the final planning trajectory. To ensure this decoupling does not compromise multi-task learning, we introduce a path attention mechanism for ego-BEV interaction and add two targeted auxiliary tasks: BEV unidirectional distillation and autoregressive online mapping. Extensive evaluations on the nuScenes dataset demonstrate that AdaptiveAD achieves state-of-the-art open-loop planning performance. Crucially, it significantly mitigates the over-reliance on ego status and exhibits impressive generalization capabilities across diverse scenarios.

Learning Global Representation from Queries for Vectorized HD Map Construction

Oct 08, 2025

Abstract: The online construction of vectorized high-definition (HD) maps is a cornerstone of modern autonomous driving systems. State-of-the-art approaches, particularly those based on the DETR framework, formulate this as an instance detection problem. However, their reliance on independent, learnable object queries results in a predominantly local query perspective, neglecting the inherent global representation within HD maps. In this work, we propose MapGR (Global Representation learning for HD Map construction), an architecture designed to learn and utilize a global representation from queries. Our method introduces two synergistic modules: a Global Representation Learning (GRL) module, which encourages the distribution of all queries to better align with the global map through a carefully designed holistic segmentation task, and a Global Representation Guidance (GRG) module, which endows each individual query with explicit, global-level contextual information to facilitate its optimization. Evaluations on the nuScenes and Argoverse2 datasets validate the efficacy of our approach, demonstrating substantial improvements in mean Average Precision (mAP) compared to leading baselines.

Dark-ISP: Enhancing RAW Image Processing for Low-Light Object Detection

Sep 11, 2025

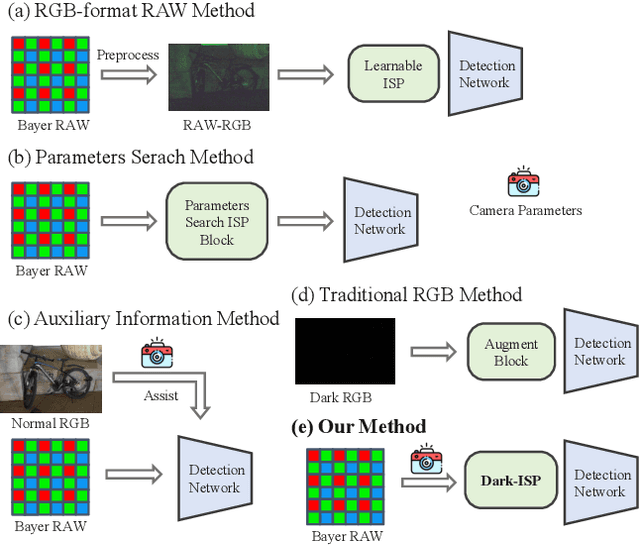

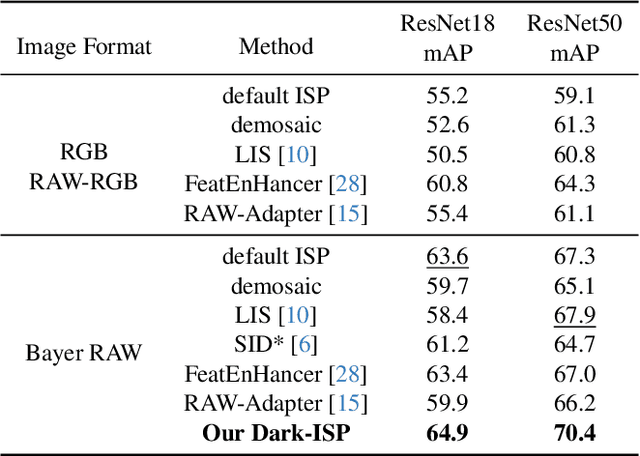

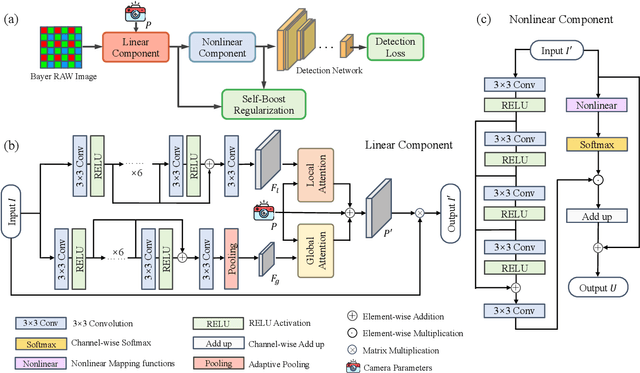

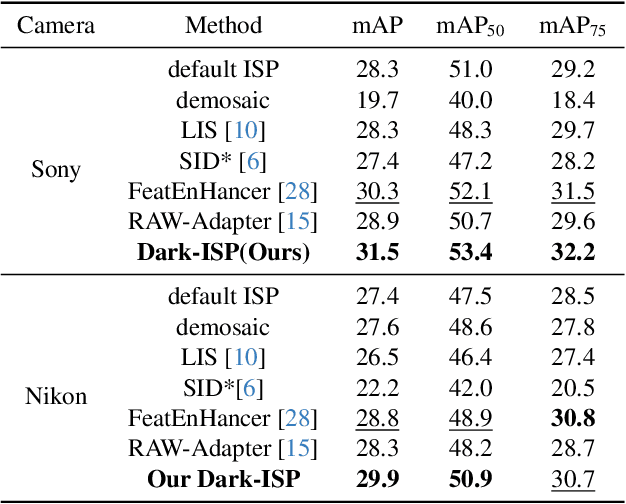

Abstract: Low-light object detection is crucial for many real-world applications but remains challenging due to degraded image quality. While recent studies have shown that RAW images offer superior potential over RGB images, existing approaches either use RAW-RGB images with information loss or employ complex frameworks. To address these limitations, we propose a lightweight and self-adaptive Image Signal Processing (ISP) plugin, Dark-ISP, which directly processes Bayer RAW images in dark environments, enabling seamless end-to-end training for object detection. Our key innovations are: (1) We deconstruct conventional ISP pipelines into sequential linear (sensor calibration) and nonlinear (tone mapping) sub-modules, recasting them as differentiable components optimized through task-driven losses. Each module is equipped with content-aware adaptability and physics-informed priors, enabling automatic RAW-to-RGB conversion aligned with detection objectives. (2) By exploiting the ISP pipeline's intrinsic cascade structure, we devise a Self-Boost mechanism that facilitates cooperation between sub-modules. Through extensive experiments on three RAW image datasets, we demonstrate that our method outperforms state-of-the-art RGB- and RAW-based detection approaches, achieving superior results with minimal parameters in challenging low-light environments.

* 11 pages, 6 figures, conference

FA-BARF: Frequency Adapted Bundle-Adjusting Neural Radiance Fields

Mar 15, 2025

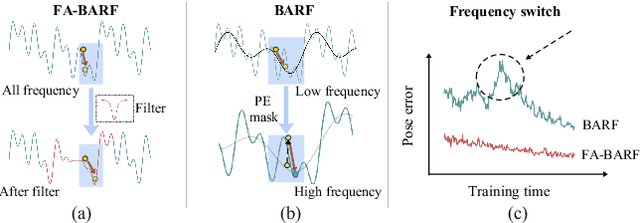

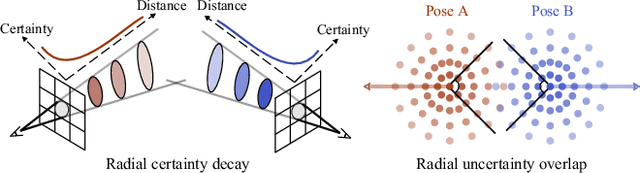

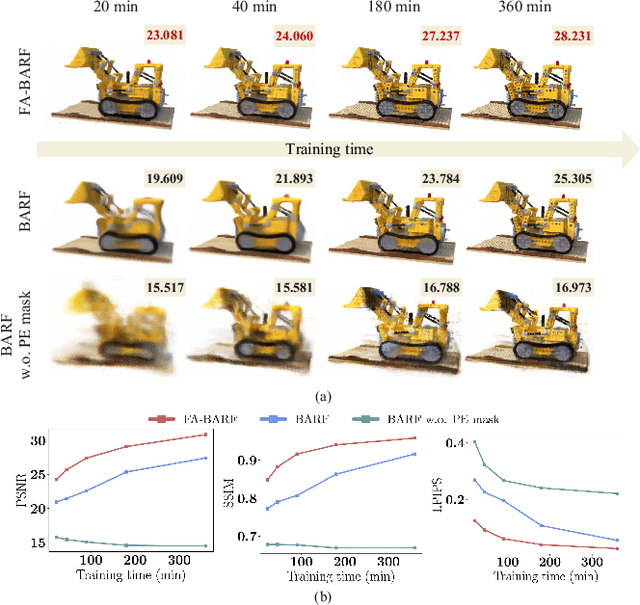

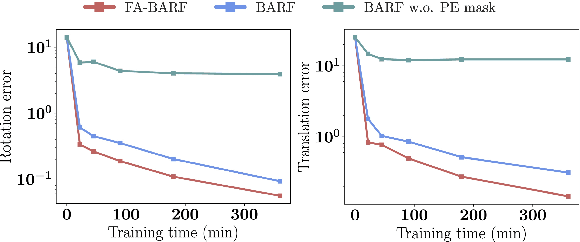

Abstract: Neural Radiance Fields (NeRF) have recently proven highly effective for photorealistic novel view synthesis. However, a key limitation is the reliance on a hand-crafted frequency annealing strategy to recover 3D scenes from imperfect camera poses. The strategy exploits a temporal low-pass filter to guarantee convergence while decelerating the joint optimization of implicit scene reconstruction and camera registration. In this work, we introduce the Frequency Adapted Bundle Adjusting Radiance Field (FA-BARF), replacing the temporal low-pass filter with a frequency-adapted spatial low-pass filter to address the deceleration problem. We establish a theoretical framework to interpret the relationship between the position encoding of NeRF and camera registration and show that our frequency-adapted filter can mitigate the frequency fluctuation caused by the temporal filter. Furthermore, we show that applying a spatial low-pass filter in NeRF can optimize camera poses productively through radial uncertainty overlaps among various views. Extensive experiments show that FA-BARF can accelerate the joint optimization process under small perturbations in object-centric scenes and recover real-world scenes with unknown camera poses. This implies wider possibilities for NeRF applied in dense 3D mapping and reconstruction under real-time requirements. The code will be released upon paper acceptance.
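For context, the hand-crafted annealing that this abstract refers to can be sketched as below. This is the coarse-to-fine weighting of positional-encoding bands used in the original BARF method, not FA-BARF's frequency-adapted spatial filter, which the abstract does not spell out. The function name and the `progress` parameter are illustrative assumptions.

```python
import numpy as np

def barf_frequency_weights(progress, num_freqs):
    """Per-band weights for BARF-style coarse-to-fine positional encoding.

    progress  : training progress in [0, 1] (hypothetical parameter; the
                real schedule maps an iteration range onto this interval).
    num_freqs : number of frequency bands L in the positional encoding.
    Band k is fully masked while alpha < k, ramps up smoothly with a
    raised cosine while k <= alpha < k + 1, and is fully on afterwards.
    """
    alpha = progress * num_freqs
    k = np.arange(num_freqs)
    t = np.clip(alpha - k, 0.0, 1.0)
    return (1.0 - np.cos(np.pi * t)) / 2.0

# Halfway through the schedule, only the two lowest of four bands pass.
weights = barf_frequency_weights(0.5, 4)
```

Because high-frequency bands are switched on only as training proceeds, the schedule acts as a low-pass filter over time, which is the behaviour the abstract describes as stabilizing but slow.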

A2DO: Adaptive Anti-Degradation Odometry with Deep Multi-Sensor Fusion for Autonomous Navigation

Feb 28, 2025

Abstract: Accurate localization is essential for the safe and effective navigation of autonomous vehicles, and Simultaneous Localization and Mapping (SLAM) is a cornerstone technology in this context. However, the performance of a SLAM system can deteriorate under challenging conditions such as low light, adverse weather, or obstructions due to sensor degradation. We present A2DO, a novel end-to-end multi-sensor fusion odometry system that enhances robustness in these scenarios through deep neural networks. A2DO integrates LiDAR and visual data, employing a multi-layer, multi-scale feature encoding module augmented by an attention mechanism to mitigate sensor degradation dynamically. The system is pre-trained extensively on simulated datasets covering a broad range of degradation scenarios and fine-tuned on a curated set of real-world data, ensuring robust adaptation to complex scenarios. Our experiments demonstrate that A2DO maintains superior localization accuracy and robustness across various degradation conditions, showcasing its potential for practical implementation in autonomous vehicle systems.

Detecting OOD Samples via Optimal Transport Scoring Function

Feb 22, 2025

Abstract: To deploy machine learning models in the real world, researchers have proposed many OOD detection algorithms to help models identify unknown samples during the inference phase and prevent them from making untrustworthy predictions. Unlike methods that rely on extra data for outlier exposure training, post hoc methods detect Out-of-Distribution (OOD) samples by developing scoring functions, which are model agnostic and do not require additional training. However, previous post hoc methods may fail to capture the geometric cues embedded in network representations. Thus, in this study, we propose a novel score function based on optimal transport theory, named OTOD, for OOD detection. We utilize information from features, logits, and the softmax probability space to calculate the OOD score for each test sample. Our experiments show that combining this information can boost the performance of OTOD by a certain margin. Experiments on the CIFAR-10 and CIFAR-100 benchmarks demonstrate the superior performance of our method. Notably, OTOD outperforms the state-of-the-art method GEN by 7.19% in the mean FPR@95 on the CIFAR-10 benchmark using ResNet-18 as the backbone, and by 12.51% in the mean FPR@95 using WideResNet-28 as the backbone. In addition, we provide theoretical guarantees for OTOD. The code is available at https://github.com/HengGao12/OTOD.
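The FPR@95 figures quoted above are the false positive rate on OOD samples at the threshold where 95% of in-distribution samples are still accepted. A minimal sketch of that metric follows. It assumes that a higher score means "more in-distribution" and that in-distribution is the positive class; the function name is illustrative, and the OTOD scoring function itself is not reproduced here.

```python
import numpy as np

def fpr_at_95_tpr(id_scores, ood_scores):
    """Fraction of OOD samples accepted when 95% of ID samples are accepted.

    Assumes higher scores indicate in-distribution inputs (an assumption;
    some papers use the opposite sign convention).
    """
    id_scores = np.asarray(id_scores, dtype=float)
    ood_scores = np.asarray(ood_scores, dtype=float)
    # Threshold below which only 5% of in-distribution scores fall.
    threshold = np.percentile(id_scores, 5)
    # OOD samples at or above the threshold are wrongly accepted as ID.
    return float(np.mean(ood_scores >= threshold))

# Toy scores: two of the five OOD samples overlap the ID score range.
fpr = fpr_at_95_tpr(np.arange(1, 101), [0, 1, 2, 50, 99])
```

Lower is better: a detector that separates the two score distributions perfectly has an FPR@95 of zero.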

PC-BEV: An Efficient Polar-Cartesian BEV Fusion Framework for LiDAR Semantic Segmentation

Dec 19, 2024

Abstract: Although multiview fusion has demonstrated potential in LiDAR segmentation, its dependence on computationally intensive point-based interactions, arising from the lack of fixed correspondences between views such as range view and Bird's-Eye View (BEV), hinders its practical deployment. This paper challenges the prevailing notion that multiview fusion is essential for achieving high performance. We demonstrate that significant gains can be realized by directly fusing Polar and Cartesian partitioning strategies within the BEV space. Our proposed BEV-only segmentation model leverages the inherent fixed grid correspondences between these partitioning schemes, enabling a fusion process that is orders of magnitude faster (170× speedup) than conventional point-based methods. Furthermore, our approach facilitates dense feature fusion, preserving richer contextual information compared to sparse point-based alternatives. To enhance scene understanding while maintaining inference efficiency, we also introduce a hybrid Transformer-CNN architecture. Extensive evaluation on the SemanticKITTI and nuScenes datasets provides compelling evidence that our method outperforms previous multiview fusion approaches in terms of both performance and inference speed, highlighting the potential of BEV-based fusion for LiDAR segmentation. Code is available at https://github.com/skyshoumeng/PC-BEV.

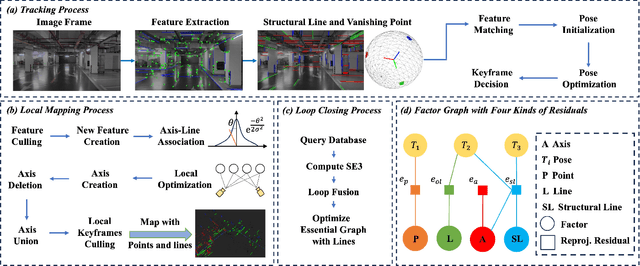

PAPL-SLAM: Principal Axis-Anchored Monocular Point-Line SLAM

Oct 16, 2024

Abstract: In point-line SLAM systems, the utilization of line structural information and the optimization of lines are two significant problems. The former is usually addressed through structural regularities, while the latter typically involves using minimal parameter representations of lines in optimization. However, treating these two steps separately loses the constraints that each could provide to the other. We anchor lines with similar directions to a principal axis and optimize them with $n+2$ parameters for $n$ lines, solving both problems together. Our method considers scene structural information, which can be easily extended to different world hypotheses while significantly reducing the number of line parameters to be optimized, enabling rapid and accurate mapping and tracking. To further enhance the system's robustness and avoid mismatches, we model the line-axis probabilistic data association and provide an algorithm for axis creation, updating, and optimization. Additionally, considering that most real-world scenes conform to the Atlanta World hypothesis, we provide a structural line detection strategy based on vertical priors and vanishing points. Experimental results and ablation studies on various indoor and outdoor datasets demonstrate the effectiveness of our system.

GlobalMapNet: An Online Framework for Vectorized Global HD Map Construction

Sep 17, 2024

Abstract: High-definition (HD) maps are essential for autonomous driving systems. Traditionally, an expensive and labor-intensive pipeline is used to construct HD maps, which limits scalability. In recent years, crowdsourcing and online mapping have emerged as two alternative methods, but each has its own limitations. In this paper, we provide a novel methodology, namely global map construction, to perform direct generation of vectorized global maps, combining the benefits of crowdsourcing and online mapping. We introduce GlobalMapNet, the first online framework for vectorized global HD map construction, which updates and utilizes a global map on the ego vehicle. To generate the global map from scratch, we propose GlobalMapBuilder to match and merge local maps continuously. We design a new algorithm, Map NMS, to remove duplicate map elements and produce a clean map. We also propose GlobalMapFusion to aggregate historical map information, improving the consistency of predictions. We examine GlobalMapNet on two widely recognized datasets, Argoverse2 and nuScenes, showing that our framework is capable of generating globally consistent results.
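The abstract does not describe how Map NMS decides that two map elements are duplicates, so the following is only a generic sketch of greedy non-maximum suppression over polylines. The symmetric Chamfer distance, the 1 m threshold, and both function names are assumptions chosen for illustration and should not be read as the paper's algorithm.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric mean nearest-point distance between two (N, 2) polylines."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return 0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean())

def polyline_nms(polylines, scores, dist_threshold=1.0):
    """Keep the highest-scoring polyline among near-duplicates.

    polylines      : list of (N_i, 2) arrays in a common map frame.
    scores         : confidence per polyline.
    dist_threshold : hypothetical duplicate radius in metres.
    Returns indices of kept polylines, highest score first.
    """
    order = np.argsort(scores)[::-1]
    keep = []
    for i in order:
        # Suppress i if it lies close to any already-kept element.
        if all(chamfer_distance(polylines[i], polylines[j]) > dist_threshold
               for j in keep):
            keep.append(int(i))
    return keep

lane_a = np.array([[0.0, 0.0], [10.0, 0.0]])
lane_b = np.array([[0.0, 0.2], [10.0, 0.2]])  # near-duplicate of lane_a
lane_c = np.array([[0.0, 5.0], [10.0, 5.0]])  # a distinct lane
kept = polyline_nms([lane_a, lane_b, lane_c], [0.9, 0.8, 0.7])
```

In this toy case the second lane is dropped as a duplicate of the first, while the third survives because it is five metres away.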

Make a Strong Teacher with Label Assistance: A Novel Knowledge Distillation Approach for Semantic Segmentation

Jul 18, 2024

Abstract: In this paper, we introduce a novel knowledge distillation approach for the semantic segmentation task. Unlike previous methods that rely on power-trained teachers or other modalities to provide additional knowledge, our approach does not require complex teacher models or information from extra sensors. Specifically, for the teacher model training, we propose adding noise to the label and then incorporating it into the input to effectively boost the performance of a lightweight teacher. To ensure the robustness of the teacher model against the introduced noise, we propose a dual-path consistency training strategy featuring a distance loss between the outputs of the two paths. For the student model training, we keep it consistent with standard distillation for simplicity. Our approach not only boosts the efficacy of knowledge distillation but also increases the flexibility in selecting teacher and student models. To demonstrate the advantages of our Label Assisted Distillation (LAD) method, we conduct extensive experiments on five challenging datasets (Cityscapes, ADE20K, PASCAL-VOC, COCO-Stuff 10K, and COCO-Stuff 164K) with five popular models (FCN, PSPNet, DeepLabV3, STDC, and OCRNet); the results show the effectiveness and generalization of our approach. We posit that incorporating labels into the input, as demonstrated in our work, will provide valuable insights into related fields. Code is available at https://github.com/skyshoumeng/Label_Assisted_Distillation.
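The abstract says only that student training follows "standard distillation". One common reading is the temperature-scaled KL divergence between teacher and student class distributions, applied per pixel for segmentation. The sketch below assumes that formulation; the temperature value and function names are illustrative and may differ from the loss used in the LAD code.

```python
import numpy as np

def softmax(logits, axis=-1):
    """Numerically stable softmax along the class axis."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Mean KL(teacher || student) over pixels, scaled by temperature^2.

    Both inputs have shape (..., num_classes); the class axis is last.
    """
    p_t = softmax(teacher_logits / temperature)
    p_s = softmax(student_logits / temperature)
    eps = 1e-12  # guards against log(0)
    kl = np.sum(p_t * (np.log(p_t + eps) - np.log(p_s + eps)), axis=-1)
    return float(kl.mean() * temperature ** 2)

# Four "pixels" with three classes each; the student disagrees on one pixel.
teacher = np.array([[4.0, 0.0, 0.0]] * 4)
student = teacher.copy()
student[0] = [0.0, 4.0, 0.0]
loss = distillation_loss(student, teacher)
```

The loss is zero when the two sets of logits agree and grows as the student's softened predictions drift from the teacher's.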
