Zeyu Fu

Training-Free and Interpretable Hateful Video Detection via Multi-stage Adversarial Reasoning

Jan 21, 2026

Abstract:Hateful videos pose serious risks by amplifying discrimination, inciting violence, and undermining online safety. Existing training-based hateful video detection methods are constrained by limited training data and a lack of interpretability, while directly prompting large vision-language models often fails to deliver reliable hate detection. To address these challenges, this paper introduces MARS, a training-free Multi-stage Adversarial ReaSoning framework that enables reliable and interpretable hateful content detection. MARS begins with an objective description of the video content, establishing a neutral foundation for subsequent analysis. Building on this, it develops evidence-based reasoning that supports potential hateful interpretations, while in parallel incorporating counter-evidence reasoning to capture plausible non-hateful perspectives. Finally, these perspectives are synthesized into a conclusive and explainable decision. Extensive evaluation on two real-world datasets shows that MARS achieves up to 10% improvement under certain backbones and settings compared to other training-free approaches and outperforms state-of-the-art training-based methods on one dataset. In addition, MARS produces human-understandable justifications, thereby supporting compliance oversight and enhancing the transparency of content moderation workflows. The code is available at https://github.com/Multimodal-Intelligence-Lab-MIL/MARS.
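The four stages named in the MARS abstract (objective description, hateful-evidence reasoning, counter-evidence reasoning, synthesis) can be sketched as a chain of prompts. This is a minimal illustration only, not the released MARS code: the function name `mars_style_pipeline`, the `ask` callable standing in for a vision-language model, and all prompt wording are assumptions made for this example.

```python
from typing import Callable, Dict


def mars_style_pipeline(video_context: str, ask: Callable[[str], str]) -> Dict[str, str]:
    """Run four prompting stages and return every intermediate output.

    `ask` is a hypothetical stand-in for a call to a vision-language model;
    `video_context` is whatever textual or serialized input that model accepts.
    """
    # Stage 1: neutral, objective description of the content.
    description = ask(
        "Describe the following video content objectively, without judging it:\n"
        + video_context
    )
    # Stage 2: reasoning that supports a hateful interpretation.
    hate_evidence = ask(
        "Using only this description, list evidence that supports a hateful reading:\n"
        + description
    )
    # Stage 3: counter-evidence for a plausible non-hateful interpretation.
    counter_evidence = ask(
        "Using only this description, list evidence that supports a non-hateful reading:\n"
        + description
    )
    # Stage 4: synthesize both sides into one explained decision.
    decision = ask(
        "Weigh both arguments and answer 'hateful' or 'non-hateful' with a short justification.\n"
        + "Description:\n" + description
        + "\nHateful evidence:\n" + hate_evidence
        + "\nCounter-evidence:\n" + counter_evidence
    )
    return {
        "description": description,
        "hate_evidence": hate_evidence,
        "counter_evidence": counter_evidence,
        "decision": decision,
    }
```

Returning every intermediate output, rather than only the final label, is what makes the decision inspectable: a reviewer can read the description and both arguments that led to it.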

Beyond Segmentation: An Oil Spill Change Detection Framework Using Synthetic SAR Imagery

Jan 05, 2026

Abstract:Marine oil spills are urgent environmental hazards that demand rapid and reliable detection to minimise ecological and economic damage. While Synthetic Aperture Radar (SAR) imagery has become a key tool for large-scale oil spill monitoring, most existing detection methods rely on deep learning-based segmentation applied to single SAR images. These static approaches struggle to distinguish true oil spills from visually similar oceanic features (e.g., biogenic slicks or low-wind zones), leading to high false positive rates and limited generalizability, especially under data-scarce conditions. To overcome these limitations, we introduce Oil Spill Change Detection (OSCD), a new bi-temporal task that focuses on identifying changes between pre- and post-spill SAR images. As real co-registered pre-spill imagery is not always available, we propose the Temporal-Aware Hybrid Inpainting (TAHI) framework, which generates synthetic pre-spill images from post-spill SAR data. TAHI integrates two key components: High-Fidelity Hybrid Inpainting for oil-free reconstruction, and Temporal Realism Enhancement for radiometric and sea-state consistency. Using TAHI, we construct the first OSCD dataset and benchmark several state-of-the-art change detection models. Results show that OSCD significantly reduces false positives and improves detection accuracy compared to conventional segmentation, demonstrating the value of temporally-aware methods for reliable, scalable oil spill monitoring in real-world scenarios.

MultiHateLoc: Towards Temporal Localisation of Multimodal Hate Content in Online Videos

Dec 11, 2025

Abstract:The rapid growth of video content on platforms such as TikTok and YouTube has intensified the spread of multimodal hate speech, where harmful cues emerge subtly and asynchronously across visual, acoustic, and textual streams. Existing research primarily focuses on video-level classification, leaving the practically crucial task of temporal localisation, identifying when hateful segments occur, largely unaddressed. This challenge is even more noticeable under weak supervision, where only video-level labels are available, and static fusion or classification-based architectures struggle to capture cross-modal and temporal dynamics. To address these challenges, we propose MultiHateLoc, the first framework designed for weakly-supervised multimodal hate localisation. MultiHateLoc incorporates (1) modality-aware temporal encoders to model heterogeneous sequential patterns, including a tailored text-based preprocessing module for feature enhancement; (2) dynamic cross-modal fusion to adaptively emphasise the most informative modality at each moment and a cross-modal contrastive alignment strategy to enhance multimodal feature consistency; (3) a modality-aware MIL objective to identify discriminative segments under video-level supervision. Despite relying solely on coarse labels, MultiHateLoc produces fine-grained, interpretable frame-level predictions. Experiments on HateMM and MultiHateClip show that our method achieves state-of-the-art performance in the localisation task.
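The abstract does not spell out the modality-aware MIL objective, so the sketch below shows only the generic idea behind multiple-instance learning with video-level labels: segment scores are pooled into one video score (here by averaging the top-k segments), and the loss is computed on that pooled score. The function names, the top-k pooling rule, and the default `k` are assumptions for illustration and are not taken from MultiHateLoc.

```python
import math
from typing import Sequence


def topk_mil_score(segment_scores: Sequence[float], k: int = 3) -> float:
    """Pool per-segment hate probabilities into one video-level probability."""
    if not segment_scores:
        raise ValueError("segment_scores must not be empty")
    k = max(1, min(k, len(segment_scores)))
    # Average the k most confident segments (assumed pooling rule).
    top = sorted(segment_scores, reverse=True)[:k]
    return sum(top) / k


def video_level_bce(segment_scores: Sequence[float], video_label: int,
                    k: int = 3, eps: float = 1e-7) -> float:
    """Binary cross-entropy between the pooled score and the video-level label."""
    p = min(max(topk_mil_score(segment_scores, k), eps), 1.0 - eps)
    return -(video_label * math.log(p) + (1 - video_label) * math.log(1.0 - p))
```

Because only the highest-scoring segments drive the loss, the per-segment scores can still be read out at inference time as a rough localisation signal, even though training never saw segment-level labels.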

Revealing Temporal Label Noise in Multimodal Hateful Video Classification

Aug 06, 2025

Abstract:The rapid proliferation of online multimedia content has intensified the spread of hate speech, presenting critical societal and regulatory challenges. While recent work has advanced multimodal hateful video detection, most approaches rely on coarse, video-level annotations that overlook the temporal granularity of hateful content. This introduces substantial label noise, as videos annotated as hateful often contain long non-hateful segments. In this paper, we investigate the impact of such label ambiguity through a fine-grained approach. Specifically, we trim hateful videos from the HateMM and MultiHateClip English datasets using annotated timestamps to isolate explicitly hateful segments. We then conduct an exploratory analysis of these trimmed segments to examine the distribution and characteristics of both hateful and non-hateful content. This analysis highlights the degree of semantic overlap and the confusion introduced by coarse, video-level annotations. Finally, controlled experiments demonstrate that timestamp noise fundamentally alters model decision boundaries and weakens classification confidence, highlighting the inherent context dependency and temporal continuity of hate speech expression. Our findings provide new insights into the temporal dynamics of multimodal hateful videos and highlight the need for temporally aware models and benchmarks for improved robustness and interpretability. Code and data are available at https://github.com/Multimodal-Intelligence-Lab-MIL/HatefulVideoLabelNoise.

Enhanced Multimodal Hate Video Detection via Channel-wise and Modality-wise Fusion

May 17, 2025

Abstract:The rapid rise of video content on platforms such as TikTok and YouTube has transformed information dissemination, but it has also facilitated the spread of harmful content, particularly hate videos. Despite significant efforts to combat hate speech, detecting these videos remains challenging due to their often implicit nature. Current detection methods primarily rely on unimodal approaches, which inadequately capture the complementary features across different modalities. While multimodal techniques offer a broader perspective, many fail to effectively integrate the temporal dynamics and modality-wise interactions essential for identifying nuanced hate content. In this paper, we present CMFusion, an enhanced multimodal hate video detection model utilizing a novel Channel-wise and Modality-wise Fusion Mechanism. CMFusion first extracts features from text, audio, and video modalities using pre-trained models and then incorporates a temporal cross-attention mechanism to capture dependencies between video and audio streams. The learned features are then processed by channel-wise and modality-wise fusion modules to obtain informative representations of videos. Our extensive experiments on a real-world dataset demonstrate that CMFusion significantly outperforms five widely used baselines in terms of accuracy, precision, recall, and F1 score. Comprehensive ablation studies and parameter analyses further validate our design choices, highlighting the model's effectiveness in detecting hate videos. The source code will be made publicly available at https://github.com/EvelynZ10/cmfusion.

* ICDMW 2024, Github: https://github.com/EvelynZ10/cmfusion

A Black-Box Evaluation Framework for Semantic Robustness in Bird's Eye View Detection

Dec 19, 2024

Abstract:Camera-based Bird's Eye View (BEV) perception models receive increasing attention for their crucial role in autonomous driving, a domain where concerns about the robustness and reliability of deep learning have been raised. While only a few works have investigated the effects of randomly generated semantic perturbations, aka natural corruptions, on the multi-view BEV detection task, we develop a black-box robustness evaluation framework that adversarially optimises three common semantic perturbations: geometric transformation, colour shifting, and motion blur, to deceive BEV models, serving as the first approach in this emerging field. To address the challenge posed by optimising the semantic perturbation, we design a smoothed, distance-based surrogate function to replace the mAP metric and introduce SimpleDIRECT, a deterministic optimisation algorithm that utilises observed slopes to guide the optimisation process. By comparing with randomised perturbation and two optimisation baselines, we demonstrate the effectiveness of the proposed framework. Additionally, we provide a benchmark on the semantic robustness of ten recent BEV models. The results reveal that PolarFormer, which emphasises geometric information from multi-view images, exhibits the highest robustness, whereas BEVDet is fully compromised, with its precision reduced to zero.

GlobalMapNet: An Online Framework for Vectorized Global HD Map Construction

Sep 17, 2024

Abstract:High-definition (HD) maps are essential for autonomous driving systems. Traditionally, HD maps are constructed with an expensive and labor-intensive pipeline, which limits scalability. In recent years, crowdsourcing and online mapping have emerged as two alternative methods, but each has its own limitations. In this paper, we provide a novel methodology, namely global map construction, to perform direct generation of vectorized global maps, combining the benefits of crowdsourcing and online mapping. We introduce GlobalMapNet, the first online framework for vectorized global HD map construction, which updates and utilizes a global map on the ego vehicle. To generate the global map from scratch, we propose GlobalMapBuilder to match and merge local maps continuously. We design a new algorithm, Map NMS, to remove duplicate map elements and produce a clean map. We also propose GlobalMapFusion to aggregate historical map information, improving the consistency of predictions. We examine GlobalMapNet on two widely recognized datasets, Argoverse2 and nuScenes, showing that our framework is capable of generating globally consistent results.

Position and Orientation-Aware One-Shot Learning for Medical Action Recognition from Signal Data

Sep 27, 2023

Abstract:In this work, we propose a position and orientation-aware one-shot learning framework for medical action recognition from signal data. The proposed framework comprises two stages, each of which includes signal-level image generation (SIG), cross-attention (CsA), and dynamic time warping (DTW) modules, together with information fusion between the proposed privacy-preserved position and orientation features. The proposed SIG method transforms the raw skeleton data into privacy-preserved features for training. The CsA module guides the network to reduce medical action recognition bias and to focus more on the human body parts that matter for each specific action, which helps to separate similar medical actions. Moreover, the DTW module is employed to minimize temporal mismatching between instances and further improve model performance. Furthermore, the proposed privacy-preserved orientation-level features are used to assist the position-level features in both stages to enhance medical action recognition performance. Extensive experimental results on the widely used NTU RGB+D 60, NTU RGB+D 120, and PKU-MMD datasets demonstrate the effectiveness of the proposed method, which outperforms other state-of-the-art methods under general dataset partitioning by 2.7%, 6.2% and 4.1%, respectively.
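For readers unfamiliar with dynamic time warping, the following is a minimal sketch of the classic DTW distance between two one-dimensional sequences. It illustrates how DTW tolerates temporal mismatch between instances; it is the textbook formulation, not the paper's DTW module, and the function name `dtw_distance` and the absolute-difference local cost are choices made for this example.

```python
from typing import Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic dynamic time warping distance with |x - y| as the local cost."""
    n, m = len(a), len(b)
    if n == 0 or m == 0:
        raise ValueError("both sequences must be non-empty")
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a only, step in b only, step in both.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]
```

Two sequences that trace the same shape at different speeds, such as `[1, 2, 3]` and `[1, 2, 2, 3]`, align with zero cost, which is the property that makes DTW useful when the same action is performed at different tempos.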

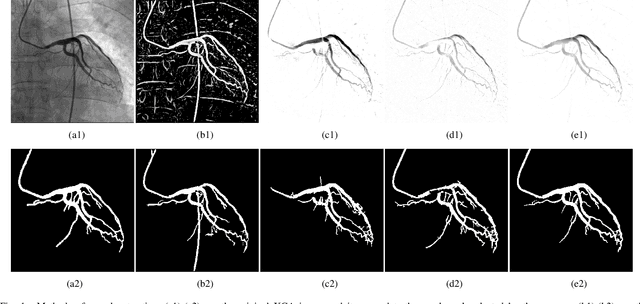

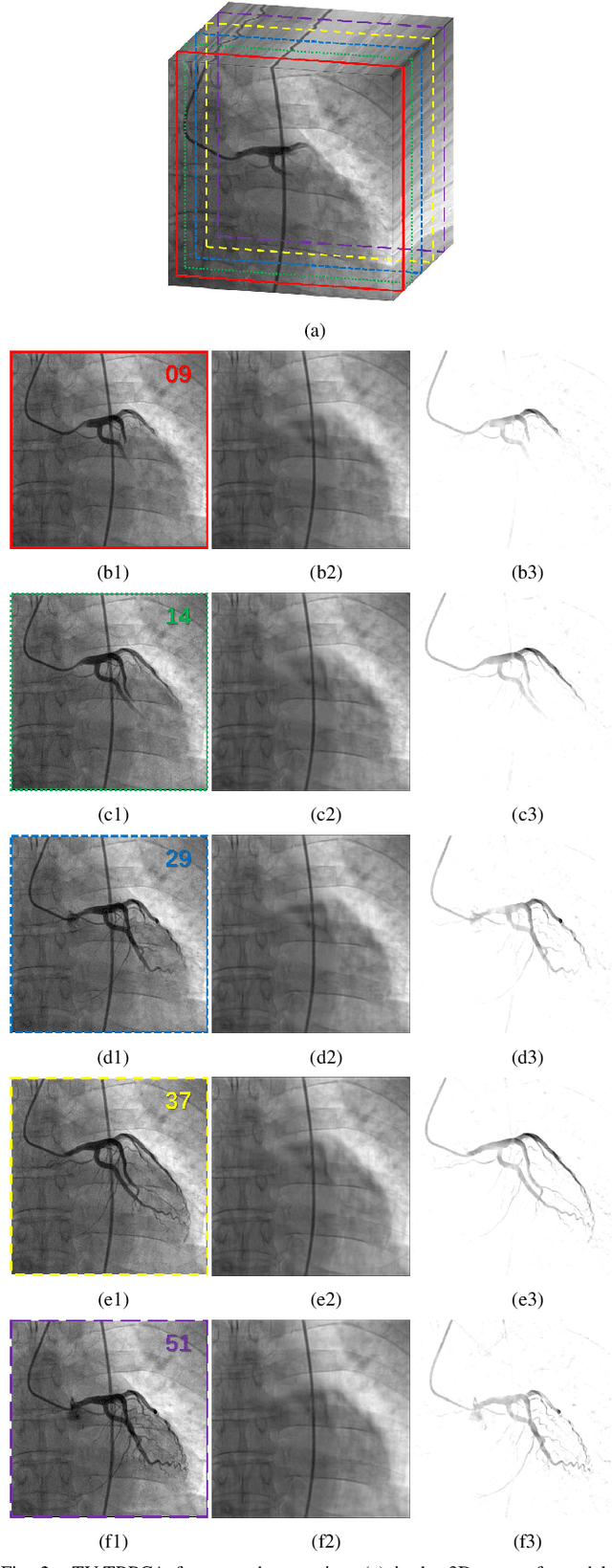

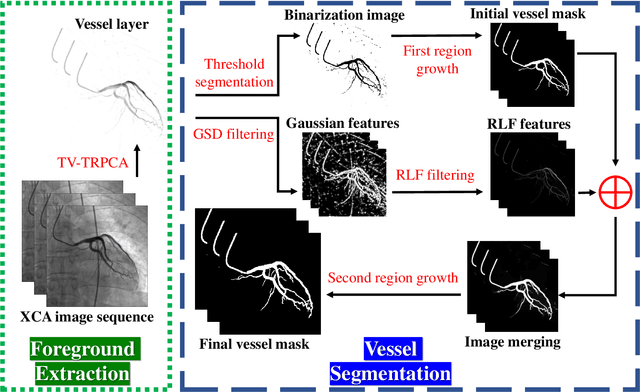

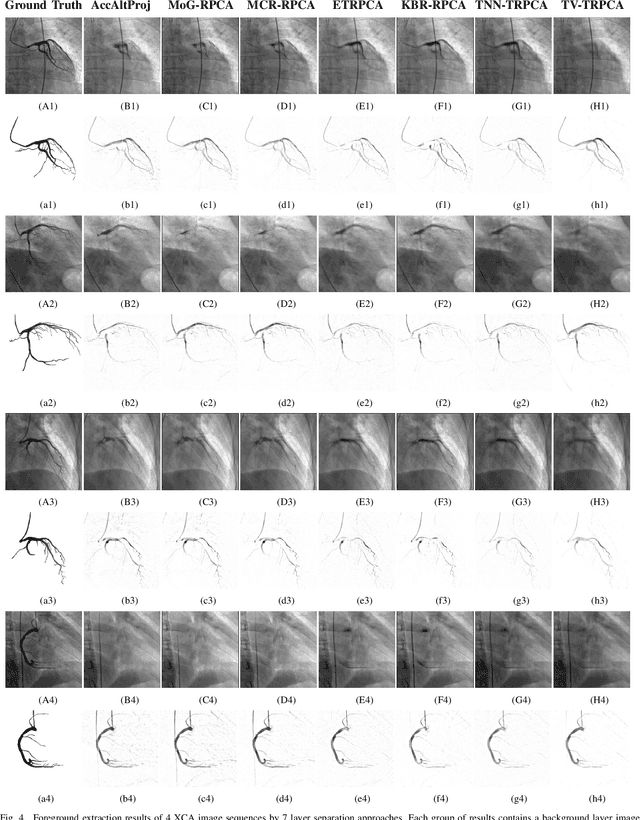

Robust Implementation of Foreground Extraction and Vessel Segmentation for X-ray Coronary Angiography Image Sequence

Sep 15, 2022

Abstract:The extraction of contrast-filled vessels from X-ray coronary angiography (XCA) image sequences has important clinical significance for intuitive diagnosis and therapy. In this study, the XCA image sequence O is regarded as a three-dimensional tensor input, the vessel layer H as a sparse tensor, and the background layer B as a low-rank tensor. Using tensor nuclear norm (TNN) minimization, a novel method for vessel layer extraction based on tensor robust principal component analysis (TRPCA) is proposed. Furthermore, considering the irregular movement of vessels and the dynamic interference of surrounding irrelevant tissues, a total variation (TV) regularized spatial-temporal constraint is introduced to separate the dynamic background E. Subsequently, for vessel images with uneven contrast distribution, a two-stage region growth (TSRG) method is utilized for vessel enhancement and segmentation. A global threshold segmentation is used as pre-processing to obtain the main branch, and the Radon-Like features (RLF) filter is used to enhance and connect broken minor segments. The final vessel mask is constructed by combining the two intermediate results. We evaluated the visibility of the TV-TRPCA algorithm for foreground extraction and the accuracy of the TSRG algorithm for vessel segmentation on real clinical XCA image sequences and a third-party database. Both qualitative and quantitative results verify the superiority of the proposed methods over the existing state-of-the-art approaches.
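The low-rank plus sparse split described in this abstract corresponds to the standard TRPCA problem, written here with the abstract's symbols O, B and H. The trade-off weight λ is an assumption of this sketch (the abstract does not name it), and the TV-regularized term that separates the dynamic background E is left out because the abstract does not give its exact form.

```latex
\min_{\mathcal{B},\,\mathcal{H}} \;
  \|\mathcal{B}\|_{\mathrm{TNN}} \;+\; \lambda \,\|\mathcal{H}\|_{1}
\quad \text{s.t.} \quad
  \mathcal{O} \;=\; \mathcal{B} + \mathcal{H}
```

Here the tensor nuclear norm encourages a low-rank background B, while the elementwise l1 norm encourages a sparse vessel layer H.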

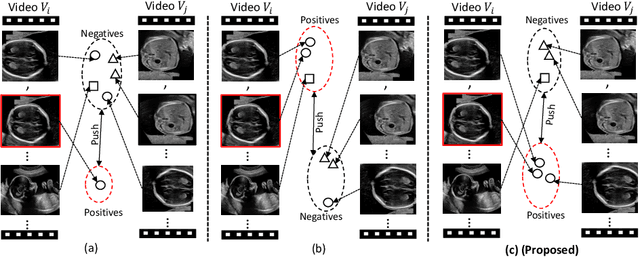

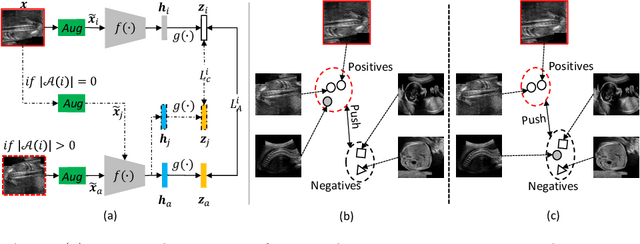

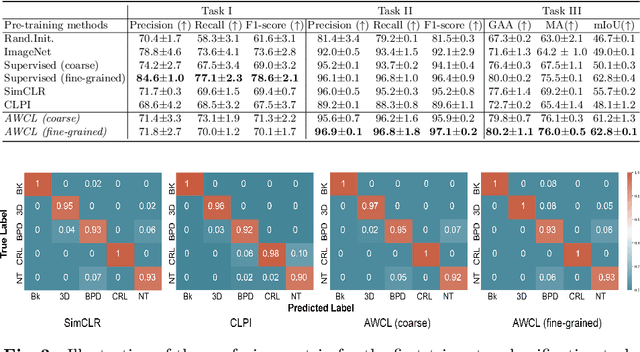

Anatomy-Aware Contrastive Representation Learning for Fetal Ultrasound

Aug 22, 2022

Abstract:Self-supervised contrastive representation learning offers the advantage of learning meaningful visual representations from unlabeled medical datasets for transfer learning. However, applying current contrastive learning approaches to medical data without considering its domain-specific anatomical characteristics may lead to visual representations that are inconsistent in appearance and semantics. In this paper, we propose to improve visual representations of medical images via anatomy-aware contrastive learning (AWCL), which incorporates anatomy information to augment the positive/negative pair sampling in a contrastive learning manner. The proposed approach is demonstrated for automated fetal ultrasound imaging tasks, enabling anatomically similar positive pairs from the same or different ultrasound scans to be pulled together, thus improving the representation learning. We empirically investigate the effect of including anatomy information at coarse and fine granularity for contrastive learning, and find that learning with fine-grained anatomy information, which preserves intra-class difference, is more effective than its counterpart. We also analyze the impact of anatomy ratio on our AWCL framework and find that using more distinct but anatomically similar samples to compose positive pairs results in better quality representations. Experiments on a large-scale fetal ultrasound dataset demonstrate that our approach is effective for learning representations that transfer well to three clinical downstream tasks, and achieves superior performance compared to ImageNet supervised and the current state-of-the-art contrastive learning methods. In particular, AWCL outperforms the ImageNet supervised method by 13.8% and the state-of-the-art contrastive-based method by 7.1% on a cross-domain segmentation task.
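One way to picture anatomy-aware pair sampling is a contrastive loss in which every other sample sharing the anchor's anatomy category counts as a positive, regardless of which scan it came from. The sketch below uses the supervised-contrastive form of that idea. It is an assumption made for illustration: the abstract does not state AWCL's loss, and the function name, the `anatomy` label list, and the temperature default are all hypothetical.

```python
import math
from typing import Sequence


def anatomy_aware_contrastive_loss(sim: Sequence[Sequence[float]],
                                   anatomy: Sequence[int],
                                   temperature: float = 0.1) -> float:
    """Mean contrastive loss where positives share the anchor's anatomy label.

    `sim[i][j]` is the similarity between embeddings i and j (e.g. cosine).
    """
    n = len(anatomy)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and anatomy[j] == anatomy[i]]
        if not positives:
            continue  # an anchor with no positive contributes nothing
        logits = [sim[i][j] / temperature for j in range(n) if j != i]
        # Numerically stable log-sum-exp over all non-anchor samples.
        peak = max(logits)
        log_denom = peak + math.log(sum(math.exp(v - peak) for v in logits))
        # Average negative log-probability of picking each positive.
        total += -sum(sim[i][p] / temperature - log_denom for p in positives) / len(positives)
        anchors += 1
    if anchors == 0:
        raise ValueError("no anchor has a positive pair")
    return total / anchors
```

The loss is small when samples with the same anatomy label are already the most similar to each other and large when the labels cut across the similarity structure, which is the signal that pulls anatomically similar samples together during training.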
