Xiangyu Yin

Fragile by Design: On the Limits of Adversarial Defenses in Personalized Generation

Nov 13, 2025

Abstract:Personalized AI applications such as DreamBooth enable the generation of customized content from user images, but also raise significant privacy concerns, particularly the risk of facial identity leakage. Recent defense mechanisms like Anti-DreamBooth attempt to mitigate this risk by injecting adversarial perturbations into user photos to prevent successful personalization. However, we identify two critical yet overlooked limitations of these methods. First, the adversarial examples often exhibit perceptible artifacts such as conspicuous patterns or stripes, making them easily detectable as manipulated content. Second, the perturbations are highly fragile, as even a simple, non-learned filter can effectively remove them, thereby restoring the model's ability to memorize and reproduce user identity. To investigate this vulnerability, we propose a novel evaluation framework, AntiDB_Purify, to systematically evaluate existing defenses under realistic purification threats, including both traditional image filters and adversarial purification. Results reveal that none of the current methods maintains its protective effectiveness under such threats. These findings highlight that current defenses offer a false sense of security and underscore the urgent need for more imperceptible and robust protections to safeguard user identity in personalized generation.

Cumulative Consensus Score: Label-Free and Model-Agnostic Evaluation of Object Detectors in Deployment

Sep 16, 2025

Abstract:Evaluating object detection models in deployment is challenging because ground-truth annotations are rarely available. We introduce the Cumulative Consensus Score (CCS), a label-free metric that enables continuous monitoring and comparison of detectors in real-world settings. CCS applies test-time data augmentation to each image, collects predicted bounding boxes across augmented views, and computes overlaps using Intersection over Union. Maximum overlaps are normalized and averaged across augmentation pairs, yielding a measure of spatial consistency that serves as a proxy for reliability without annotations. In controlled experiments on Open Images and KITTI, CCS achieved over 90% congruence with F1-score, Probabilistic Detection Quality, and Optimal Correction Cost. The method is model-agnostic, working across single-stage and two-stage detectors, and operates at the case level to highlight under-performing scenarios. Altogether, CCS provides a robust foundation for DevOps-style monitoring of object detectors.
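The consistency idea in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the `(x1, y1, x2, y2)` box format, the normalization by the larger box count, and the handling of empty views are all assumptions made for illustration. Boxes are assumed to have already been mapped back to the original image coordinates.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pair_consistency(boxes_a, boxes_b):
    """Sum of best IoU matches for boxes in A, normalized by the larger count.

    Assumption: two empty views agree perfectly; one empty view disagrees fully.
    """
    if not boxes_a and not boxes_b:
        return 1.0
    if not boxes_a or not boxes_b:
        return 0.0
    best = [max(iou(a, b) for b in boxes_b) for a in boxes_a]
    return sum(best) / max(len(boxes_a), len(boxes_b))


def consensus_score(views):
    """Average pairwise consistency over all pairs of augmented views."""
    pairs = list(combinations(views, 2))
    if not pairs:
        raise ValueError("need at least two augmented views")
    return sum(pair_consistency(a, b) for a, b in pairs) / len(pairs)


# Hypothetical predictions for one image under three augmentations.
views = [
    [(0, 0, 10, 10)],
    [(0, 0, 10, 10)],
    [(0, 0, 10, 5)],
]
score = consensus_score(views)
```

A score near 1 means the detector places boxes consistently across augmentations; a low score flags an image worth inspecting, without needing labels.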

Mic-hackathon 2024: Hackathon on Machine Learning for Electron and Scanning Probe Microscopy

Jun 10, 2025

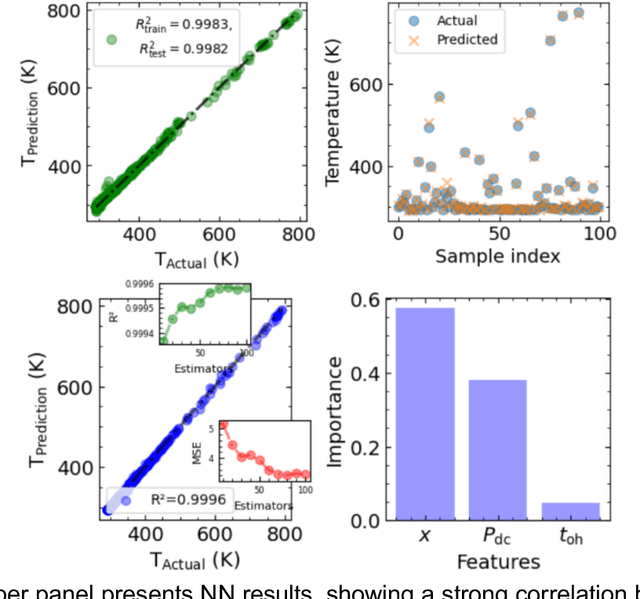

Abstract:Microscopy is a primary source of information on materials structure and functionality at nanometer and atomic scales. The data generated is often well-structured, enriched with metadata and sample histories, though not always consistent in detail or format. The adoption of Data Management Plans (DMPs) by major funding agencies promotes preservation and access. However, deriving insights remains difficult due to the lack of standardized code ecosystems, benchmarks, and integration strategies. As a result, data usage is inefficient and analysis time is extensive. In addition to post-acquisition analysis, new APIs from major microscope manufacturers enable real-time, ML-based analytics for automated decision-making and ML-agent-controlled microscope operation. Yet, a gap remains between the ML and microscopy communities, limiting the impact of these methods on physics, materials discovery, and optimization. Hackathons help bridge this divide by fostering collaboration between ML researchers and microscopy experts. They encourage the development of novel solutions that apply ML to microscopy, while preparing a future workforce for instrumentation, materials science, and applied ML. This hackathon produced benchmark datasets and digital twins of microscopes to support community growth and standardized workflows. All related code is available at GitHub: https://github.com/KalininGroup/Mic-hackathon-2024-codes-publication/tree/1.0.0.1

TAIJI: Textual Anchoring for Immunizing Jailbreak Images in Vision Language Models

Mar 13, 2025

Abstract:Vision Language Models (VLMs) have demonstrated impressive inference capabilities, but remain vulnerable to jailbreak attacks that can induce harmful or unethical responses. Existing defence methods are predominantly white-box approaches that require access to model parameters and extensive modifications, making them costly and impractical for many real-world scenarios. Although some black-box defences have been proposed, they often impose input constraints or require multiple queries, limiting their effectiveness in safety-critical tasks such as autonomous driving. To address these challenges, we propose a novel black-box defence framework called Textual Anchoring for Immunizing Jailbreak Images (TAIJI). TAIJI leverages key phrase-based textual anchoring to enhance the model's ability to assess and mitigate the harmful content embedded within both visual and textual prompts. Unlike existing methods, TAIJI operates effectively with a single query during inference, while preserving the VLM's performance on benign tasks. Extensive experiments demonstrate that TAIJI significantly enhances the safety and reliability of VLMs, providing a practical and efficient solution for real-world deployment.

FALCON: Fine-grained Activation Manipulation by Contrastive Orthogonal Unalignment for Large Language Model

Feb 03, 2025

Abstract:Large language models have been widely applied, but can inadvertently encode sensitive or harmful information, raising significant safety concerns. Machine unlearning has emerged to alleviate this concern; however, existing training-time unlearning approaches, which rely on coarse-grained loss combinations, have limitations in precisely separating knowledge and balancing removal effectiveness with model utility. In contrast, we propose Fine-grained Activation manipuLation by Contrastive Orthogonal uNalignment (FALCON), a novel representation-guided unlearning approach that leverages information-theoretic guidance for efficient parameter selection, employs contrastive mechanisms to enhance representation separation, and projects conflicting gradients onto orthogonal subspaces to resolve conflicts between forgetting and retention objectives. Extensive experiments demonstrate that FALCON achieves superior unlearning effectiveness while maintaining model utility, exhibiting robust resistance against knowledge recovery attempts.
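The idea of projecting a gradient onto an orthogonal subspace when two objectives conflict can be sketched generically as follows. This is a plain single-vector projection in the style of gradient-surgery methods, not FALCON's actual procedure, which the abstract does not specify; the function names and the toy gradients are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def project_if_conflicting(g_forget, g_retain):
    """Remove from g_forget its component along g_retain when the two conflict.

    The gradients conflict when their inner product is negative. In that case
    the forgetting gradient is projected onto the subspace orthogonal to the
    retention gradient; otherwise it is returned unchanged.
    """
    overlap = dot(g_forget, g_retain)
    norm_sq = dot(g_retain, g_retain)
    if overlap >= 0 or norm_sq == 0:
        return list(g_forget)
    scale = overlap / norm_sq
    return [gf - scale * gr for gf, gr in zip(g_forget, g_retain)]


# Toy gradients: the forgetting step would undo progress on retention.
g_forget = [1.0, -1.0]
g_retain = [0.0, 1.0]
g_projected = project_if_conflicting(g_forget, g_retain)
```

After projection the update no longer has a component opposing the retention gradient, so to first order a step along it leaves the retention loss unchanged.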

A Black-Box Evaluation Framework for Semantic Robustness in Bird's Eye View Detection

Dec 19, 2024

Abstract:Camera-based Bird's Eye View (BEV) perception models receive increasing attention for their crucial role in autonomous driving, a domain where concerns about the robustness and reliability of deep learning have been raised. While only a few works have investigated the effects of randomly generated semantic perturbations, aka natural corruptions, on the multi-view BEV detection task, we develop a black-box robustness evaluation framework that adversarially optimises three common semantic perturbations: geometric transformation, colour shifting, and motion blur, to deceive BEV models, serving as the first approach in this emerging field. To address the challenge posed by optimising the semantic perturbation, we design a smoothed, distance-based surrogate function to replace the mAP metric and introduce SimpleDIRECT, a deterministic optimisation algorithm that utilises observed slopes to guide the optimisation process. By comparing with randomised perturbation and two optimisation baselines, we demonstrate the effectiveness of the proposed framework. Additionally, we provide a benchmark on the semantic robustness of ten recent BEV models. The results reveal that PolarFormer, which emphasises geometric information from multi-view images, exhibits the highest robustness, whereas BEVDet is fully compromised, with its precision reduced to zero.

PhyT2V: LLM-Guided Iterative Self-Refinement for Physics-Grounded Text-to-Video Generation

Nov 30, 2024

Abstract:Text-to-video (T2V) generation has recently been enabled by transformer-based diffusion models, but current T2V models struggle to adhere to real-world common knowledge and physical rules because of their limited understanding of physical realism and deficient temporal modeling. Existing solutions are either data-driven or require extra model inputs, and do not generalize to out-of-distribution domains. In this paper, we present PhyT2V, a new data-independent T2V technique that extends the video generation capability of current T2V models to out-of-distribution domains by enabling chain-of-thought and step-back reasoning in T2V prompting. Our experiments show that PhyT2V improves existing T2V models' adherence to real-world physical rules by 2.3x and achieves a 35% improvement compared to T2V prompt enhancers. The source code is available at: https://github.com/pittisl/PhyT2V.

Robust RL with LLM-Driven Data Synthesis and Policy Adaptation for Autonomous Driving

Oct 16, 2024

Abstract:The integration of Large Language Models (LLMs) into autonomous driving systems demonstrates strong common sense and reasoning abilities, effectively addressing the pitfalls of purely data-driven methods. Current LLM-based agents require lengthy inference times and face challenges in interacting with real-time autonomous driving environments. A key open question is whether we can effectively leverage the knowledge from LLMs to train an efficient and robust Reinforcement Learning (RL) agent. This paper introduces RAPID, a novel Robust Adaptive Policy Infusion and Distillation framework, which trains specialized mix-of-policy RL agents using data synthesized by an LLM-based driving agent and online adaptation. RAPID features three key designs: 1) utilization of offline data collected from an LLM agent to distil expert knowledge into RL policies for faster real-time inference; 2) introduction of robust distillation in RL to inherit both performance and robustness from the LLM-based teacher; and 3) employment of a mix-of-policy approach for joint decision decoding with a policy adapter. Through fine-tuning via online environment interaction, RAPID reduces the forgetting of LLM knowledge while maintaining adaptability to different tasks. Extensive experiments demonstrate RAPID's capability to effectively integrate LLM knowledge into scaled-down RL policies in an efficient, adaptable, and robust way. Code and checkpoints will be made publicly available upon acceptance.

PEAR: A Robust and Flexible Automation Framework for Ptychography Enabled by Multiple Large Language Model Agents

Oct 11, 2024

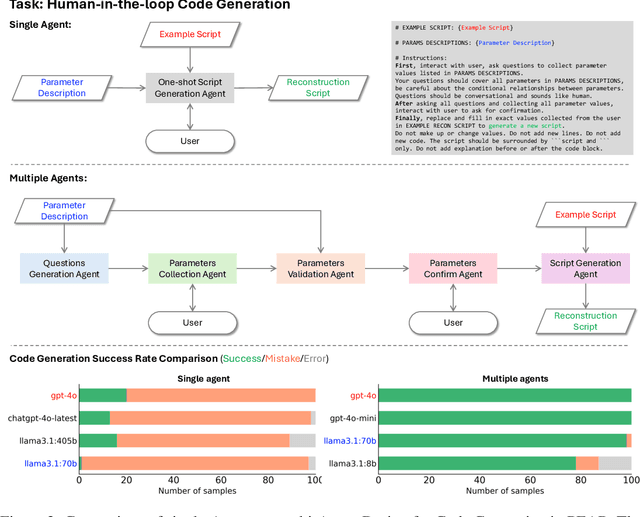

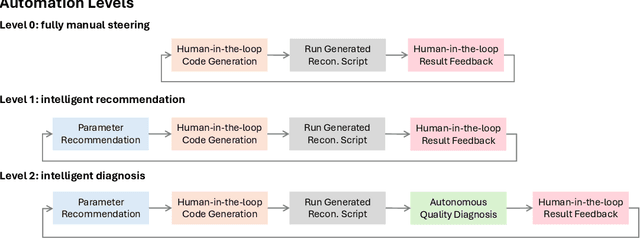

Abstract:Ptychography is an advanced computational imaging technique in X-ray and electron microscopy. It has been widely adopted across scientific research fields, including physics, chemistry, biology, and materials science, as well as in industrial applications such as semiconductor characterization. In practice, obtaining high-quality ptychographic images requires simultaneous optimization of numerous experimental and algorithmic parameters. Traditionally, parameter selection often relies on trial and error, leading to low-throughput workflows and potential human bias. In this work, we develop the "Ptychographic Experiment and Analysis Robot" (PEAR), a framework that leverages large language models (LLMs) to automate data analysis in ptychography. To ensure high robustness and accuracy, PEAR employs multiple LLM agents for tasks including knowledge retrieval, code generation, parameter recommendation, and image reasoning. Our study demonstrates that PEAR's multi-agent design significantly improves the workflow success rate, even with smaller open-weight models such as LLaMA 3.1 8B. PEAR also supports various automation levels and is designed to work with customized local knowledge bases, ensuring flexibility and adaptability across different research environments.

Achieving Sparse Activation in Small Language Models

Jun 03, 2024

Abstract:Sparse activation, which selectively activates only an input-dependent set of neurons in inference, is a useful technique to reduce the computing cost of Large Language Models (LLMs) without retraining or adaptation efforts. However, whether it can be applied to the recently emerging Small Language Models (SLMs) remains questionable, because SLMs are generally less over-parameterized than LLMs. In this paper, we aim to achieve sparse activation in SLMs. We first show that existing sparse activation schemes for LLMs that build on neurons' output magnitudes cannot be applied to SLMs, and that activating neurons based on their attribution scores is a better alternative. Further, we demonstrate and quantify the large errors of existing attribution metrics when they are used for sparse activation, due to the interdependency among attribution scores of neurons across different layers. Based on these observations, we propose a new attribution metric that can provably correct such errors and achieve precise sparse activation. Experiments over multiple popular SLMs and datasets show that our approach can achieve an 80% sparsification ratio with <5% model accuracy loss, comparable to the sparse activation achieved in LLMs. The source code is available at: https://github.com/pittisl/Sparse-Activation.
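To make the contrast between magnitude-based and attribution-based selection concrete, here is a minimal sketch of score-based neuron masking at a given sparsification ratio. It does not reproduce the paper's attribution metric; the function names and all numeric values are hypothetical, and the attribution scores are simply assumed to come from some external scoring method.

```python
def keep_mask(scores, sparsity):
    """Return a boolean mask keeping the highest-|score| neurons.

    `sparsity` is the fraction of neurons to deactivate (0.8 keeps about 20%).
    At least one neuron is always kept.
    """
    n_keep = max(1, round(len(scores) * (1.0 - sparsity)))
    ranked = sorted(range(len(scores)), key=lambda i: abs(scores[i]), reverse=True)
    kept = set(ranked[:n_keep])
    return [i in kept for i in range(len(scores))]


def apply_mask(activations, mask):
    """Zero out the activations of neurons that are not kept."""
    return [a if m else 0.0 for a, m in zip(activations, mask)]


# Hypothetical layer outputs and hypothetical attribution scores.
activations = [0.1, -2.0, 0.5, 3.0, 0.05]
attributions = [0.9, 0.1, 0.2, 0.3, 0.0]

by_magnitude = apply_mask(activations, keep_mask(activations, 0.8))
by_attribution = apply_mask(activations, keep_mask(attributions, 0.8))
```

With these toy numbers the two criteria keep different neurons: magnitude keeps the neuron with the largest output, while attribution keeps a small-output neuron that the scoring method considers more important.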
