Hongyang Jiang

Generalist versus Specialist Vision Foundation Models for Ocular Disease and Oculomics

Sep 03, 2025

Abstract: Medical foundation models, pre-trained with large-scale clinical data, demonstrate strong performance in diverse clinically relevant applications. RETFound, trained on nearly one million retinal images, exemplifies this approach in applications with retinal images. However, the emergence of increasingly powerful and multifold larger generalist foundation models such as DINOv2 and DINOv3 raises the question of whether domain-specific pre-training remains essential, and if so, what gap persists. To investigate this, we systematically evaluated the adaptability of DINOv2 and DINOv3 in retinal image applications, compared to two specialist RETFound models, RETFound-MAE and RETFound-DINOv2. We assessed performance on ocular disease detection and systemic disease prediction using two adaptation strategies: fine-tuning and linear probing. Data efficiency and adaptation efficiency were further analysed to characterise trade-offs between predictive performance and computational cost. Our results show that although scaling generalist models yields strong adaptability across diverse tasks, RETFound-DINOv2 consistently outperforms these generalist foundation models in ocular-disease detection and oculomics tasks, demonstrating stronger generalisability and data efficiency. These findings suggest that specialist retinal foundation models remain the most effective choice for clinical applications, while the narrowing gap with generalist foundation models suggests that continued data and model scaling can deliver domain-relevant gains and position them as strong foundations for future medical foundation models.

A graph neural network-based multispectral-view learning model for diabetic macular ischemia detection from color fundus photographs

Feb 25, 2025

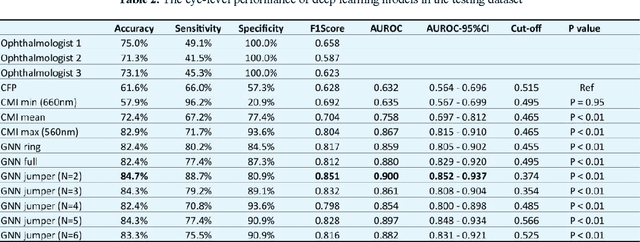

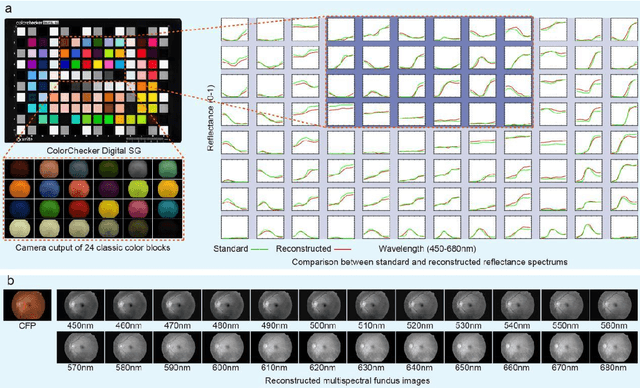

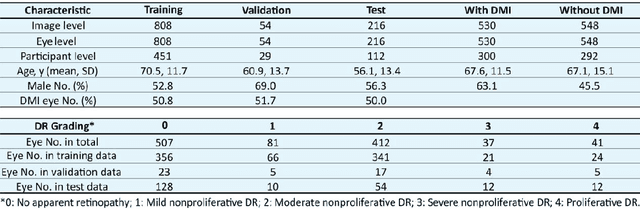

Abstract: Diabetic macular ischemia (DMI), marked by the loss of retinal capillaries in the macular area, contributes to vision impairment in patients with diabetes. Although color fundus photographs (CFPs), combined with artificial intelligence (AI), have been extensively applied in detecting various eye diseases, including diabetic retinopathy (DR), their application to detecting DMI remains unexplored, partly due to skepticism among ophthalmologists regarding its feasibility. In this study, we propose a graph neural network-based multispectral view learning (GNN-MSVL) model designed to detect DMI from CFPs. The model leverages higher spectral resolution to capture subtle changes in fundus reflectance caused by ischemic tissue, enhancing sensitivity to DMI-related features. The proposed approach begins with computational multispectral imaging (CMI) to reconstruct 24-wavelength multispectral fundus images from CFPs. ResNeXt101 is employed as the backbone for multi-view learning to extract features from the reconstructed images. Additionally, a GNN with a customized jumper connection strategy is designed to enhance cross-spectral relationships, facilitating comprehensive and efficient multispectral view learning. The study included a total of 1,078 macula-centered CFPs from 1,078 eyes of 592 patients with diabetes, of which 530 CFPs from 530 eyes of 300 patients were diagnosed with DMI. The model achieved an accuracy of 84.7 percent and an area under the receiver operating characteristic curve (AUROC) of 0.900 (95 percent CI: 0.852-0.937) at the eye level, outperforming both the baseline model trained on CFPs and human experts (p-values less than 0.01). These findings suggest that AI-based CFP analysis holds promise for detecting DMI, contributing to its early and low-cost screening.
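As an illustration of how an AUROC with a 95 percent confidence interval can be computed, here is a minimal sketch in plain Python. The abstract does not say which interval method the authors used; the percentile bootstrap below is an assumption, and the function names `auroc` and `bootstrap_ci` are hypothetical. It resamples individual eyes, so it ignores the correlation between two eyes of the same patient.

```python
import random


def auroc(labels, scores):
    """AUROC via the Mann-Whitney statistic; tied scores count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for AUROC (assumed method, not the paper's)."""
    auroc(labels, scores)  # fails early if only one class is present
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # skip resamples that contain a single class
            stats.append(auroc(ys, [scores[i] for i in idx]))
    stats.sort()
    lo = stats[int((alpha / 2) * n_boot)]
    hi = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lo, hi


# Toy data, not taken from the study.
y = [0, 0, 1, 1, 0, 1, 1, 0]
s = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9, 0.7, 0.3]
point = auroc(y, s)
low, high = bootstrap_ci(y, s, n_boot=500)
```

For clustered data such as two eyes per patient, resampling patients rather than eyes would usually be the more defensible choice.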

Is an Ultra Large Natural Image-Based Foundation Model Superior to a Retina-Specific Model for Detecting Ocular and Systemic Diseases?

Feb 10, 2025

Abstract: The advent of foundation models (FMs) is transforming the medical domain. In ophthalmology, RETFound, a retina-specific FM pre-trained sequentially on 1.4 million natural images and 1.6 million retinal images, has demonstrated high adaptability across clinical applications. Conversely, DINOv2, a general-purpose vision FM pre-trained on 142 million natural images, has shown promise in non-medical domains. However, its applicability to clinical tasks remains underexplored. To address this, we conducted head-to-head evaluations by fine-tuning RETFound and three DINOv2 models (large, base, small) for ocular disease detection and systemic disease prediction tasks, across eight standardized open-source ocular datasets, as well as the Moorfields AlzEye and the UK Biobank datasets. The DINOv2-large model outperformed RETFound in detecting diabetic retinopathy (AUROC=0.850-0.952 vs 0.823-0.944, across three datasets, all P<=0.007) and multi-class eye diseases (AUROC=0.892 vs. 0.846, P<0.001). In glaucoma, the DINOv2-base model outperformed RETFound (AUROC=0.958 vs 0.940, P<0.001). Conversely, RETFound achieved superior performance over all DINOv2 models in predicting heart failure, myocardial infarction, and ischaemic stroke (AUROC=0.732-0.796 vs 0.663-0.771, all P<0.001). These trends persisted even with 10% of the fine-tuning data. These findings showcase the distinct scenarios where general-purpose and domain-specific FMs excel, highlighting the importance of aligning FM selection with task-specific requirements to optimise clinical performance.

UWAFA-GAN: Ultra-Wide-Angle Fluorescein Angiography Transformation via Multi-scale Generation and Registration Enhancement

May 01, 2024

Abstract: Fundus photography, in combination with ultra-wide-angle fundus (UWF) techniques, has become an indispensable diagnostic tool in clinical settings by offering a more comprehensive view of the retina. Nonetheless, unlike UWF scanning laser ophthalmoscopy (UWF-SLO), UWF fluorescein angiography (UWF-FA) requires a fluorescent dye to be injected into the patient's hand or elbow. To mitigate potential adverse effects associated with injections, researchers have proposed cross-modality medical image generation algorithms capable of converting UWF-SLO images into their UWF-FA counterparts. Current image generation techniques applied to fundus photography have difficulty producing high-resolution retinal images, particularly in capturing minute vascular lesions. To address these issues, we introduce a novel conditional generative adversarial network (UWAFA-GAN) to synthesize UWF-FA from UWF-SLO. This approach employs multi-scale generators and an attention transmit module to efficiently extract both global structures and local lesions. Additionally, to counteract the image blurriness that arises from training with misaligned data, a registration module is integrated within this framework. Our method performs non-trivially on inception scores and details generation. Clinical user studies further indicate that the UWF-FA images generated by UWAFA-GAN are clinically comparable to authentic images in terms of diagnostic reliability. Empirical evaluations on our proprietary UWF image datasets show that UWAFA-GAN outperforms existing methods. The code is accessible at https://github.com/Tinysqua/UWAFA-GAN.

GlanceSeg: Real-time microaneurysm lesion segmentation with gaze-map-guided foundation model for early detection of diabetic retinopathy

Nov 14, 2023

Abstract: Early-stage diabetic retinopathy (DR) presents challenges in clinical diagnosis due to inconspicuous and minute microaneurysm lesions, resulting in limited research in this area. Additionally, the potential of emerging foundation models, such as the segment anything model (SAM), in medical scenarios remains rarely explored. In this work, we propose a human-in-the-loop, label-free early DR diagnosis framework called GlanceSeg, based on SAM. GlanceSeg enables real-time segmentation of microaneurysm lesions as ophthalmologists review fundus images. Our human-in-the-loop framework integrates the ophthalmologist's gaze map, allowing for rough localization of minute lesions in fundus images. Subsequently, a saliency map is generated based on the located region of interest, which provides prompt points to assist the foundation model in efficiently segmenting microaneurysm lesions. Finally, a domain knowledge filter refines the segmentation of minute lesions. We conducted experiments on two newly built public datasets, i.e., IDRiD and Retinal-Lesions, and validated the feasibility and superiority of GlanceSeg through visualized illustrations and quantitative measures. Additionally, we demonstrated that GlanceSeg improves annotation efficiency for clinicians and enhances segmentation performance through fine-tuning using annotations. This study highlights the potential of GlanceSeg-based annotations for self-model optimization, leading to enduring performance advancements through continual learning.
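The step from saliency map to prompt points can be pictured with a small sketch. The abstract does not describe how GlanceSeg selects its points, so the greedy peak picking below is only one plausible reading; the function `prompt_points` and its parameters `k` and `min_dist` are hypothetical names introduced for illustration.

```python
def prompt_points(saliency, k=3, min_dist=2):
    """Greedily pick up to k high-saliency (row, col) cells.

    Points must be at least min_dist apart (Chebyshev distance) so that
    one bright blob does not consume every prompt. This is an assumed
    selection rule, not the one used by GlanceSeg.
    """
    cells = sorted(
        ((v, r, c) for r, row in enumerate(saliency) for c, v in enumerate(row)),
        key=lambda t: -t[0],  # highest saliency first; ties stay in row-major order
    )
    points = []
    for _, r, c in cells:
        if all(max(abs(r - pr), abs(c - pc)) >= min_dist for pr, pc in points):
            points.append((r, c))
            if len(points) == k:
                break
    return points


# Toy 5x5 saliency map with two separate bright regions.
grid = [[0.0] * 5 for _ in range(5)]
grid[0][0], grid[0][1], grid[4][4] = 0.9, 0.8, 0.7
picked = prompt_points(grid, k=2, min_dist=2)
```

In this toy map the second-brightest cell sits next to the brightest one and is skipped, so the two prompts land on different regions. The returned (row, col) pairs would still need converting to whatever coordinate order the segmentation model expects.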

Label-noise-tolerant medical image classification via self-attention and self-supervised learning

Jun 16, 2023

Abstract: Deep neural networks (DNNs) have been widely applied in medical image classification and achieve remarkable classification performance. These achievements depend heavily on large-scale, accurately annotated training data. However, label noise is inevitably introduced during medical image annotation, as the labeling process relies heavily on the expertise and experience of annotators. Meanwhile, DNNs tend to overfit noisy labels, which degrades model performance. Therefore, in this work, we devise a noise-robust training approach to mitigate the adverse effects of noisy labels in medical image classification. Specifically, we incorporate contrastive learning and intra-group attention mixup strategies into vanilla supervised learning. Contrastive learning for the feature extractor helps to enhance the visual representations of DNNs. The intra-group attention mixup module constructs groups, assigns self-attention weights to the samples in each group, and then interpolates a large number of noise-suppressed samples through a weighted mixup operation. We conduct comparative experiments on both synthetic and real-world noisy medical datasets under various noise levels. The experiments show that our noise-robust method with contrastive learning and attention mixup can effectively handle label noise and is superior to state-of-the-art methods. An ablation study also shows that both components contribute to improved model performance. The proposed method demonstrates its capability to curb label noise and has potential for real-world clinical applications.
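The weighted mixup idea can be sketched in a few lines. This is a simplified illustration, not the authors' module: it assumes the attention scores for a group are already available as plain numbers, turns them into weights with a softmax, and forms one mixed sample as the weighted average of the group's features and one-hot labels. The names `softmax` and `group_mixup` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def group_mixup(features, labels, scores):
    """Blend one group of samples into a single mixed sample.

    features: list of equal-length feature vectors
    labels:   list of one-hot (or soft) label vectors
    scores:   one attention score per sample (assumed given)
    """
    w = softmax(scores)
    dim, n_cls = len(features[0]), len(labels[0])
    mixed_x = [sum(wi * x[d] for wi, x in zip(w, features)) for d in range(dim)]
    mixed_y = [sum(wi * y[c] for wi, y in zip(w, labels)) for c in range(n_cls)]
    return mixed_x, mixed_y


# Toy group of two samples with equal attention scores.
mx, my = group_mixup([[0.0, 2.0], [2.0, 0.0]], [[1, 0], [0, 1]], [0.0, 0.0])
```

A sample that receives a low attention score contributes little to the mixed feature and label, which is one way a suspected noisy label could be down-weighted.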

Eye tracking guided deep multiple instance learning with dual cross-attention for fundus disease detection

Apr 25, 2023

Abstract: Deep neural networks (DNNs) have promoted the development of computer-aided diagnosis (CAD) systems for fundus diseases, helping ophthalmologists reduce missed diagnosis and misdiagnosis rates. However, the majority of CAD systems are data-driven and lack medical prior knowledge that could improve performance. In this regard, we propose a human-in-the-loop (HITL) CAD system that leverages ophthalmologists' eye-tracking information and is more efficient and accurate. Concretely, the HITL CAD system was implemented with multiple instance learning (MIL), where eye-tracking gaze maps helped to cherry-pick diagnosis-related instances. Furthermore, the dual-cross-attention MIL (DCAMIL) network was used to curb the adverse effects of noisy instances. Meanwhile, a sequence augmentation module and a domain adversarial module were introduced to enrich and standardize instances in the training bag, respectively, thereby enhancing the robustness of our method. We conduct comparative experiments on our newly constructed datasets, AMD-Gaze and DR-Gaze, for AMD and early DR detection, respectively. The experiments demonstrate the feasibility of our HITL CAD system and the superiority of the proposed DCAMIL, which fully exploits the ophthalmologists' eye-tracking information. These investigations indicate that physicians' gaze maps, as medical prior knowledge, have the potential to contribute to CAD systems for clinical diseases.
