Hanyang Wang

SkillRL: Evolving Agents via Recursive Skill-Augmented Reinforcement Learning

Feb 09, 2026

Abstract:Large Language Model (LLM) agents have shown stunning results in complex tasks, yet they often operate in isolation, failing to learn from past experiences. Existing memory-based methods primarily store raw trajectories, which are often redundant and noise-heavy. This prevents agents from extracting high-level, reusable behavioral patterns that are essential for generalization. In this paper, we propose SkillRL, a framework that bridges the gap between raw experience and policy improvement through automatic skill discovery and recursive evolution. Our approach introduces an experience-based distillation mechanism to build a hierarchical skill library SkillBank, an adaptive retrieval strategy for general and task-specific heuristics, and a recursive evolution mechanism that allows the skill library to co-evolve with the agent's policy during reinforcement learning. These innovations significantly reduce the token footprint while enhancing reasoning utility. Experimental results on ALFWorld, WebShop and seven search-augmented tasks demonstrate that SkillRL achieves state-of-the-art performance, outperforming strong baselines by over 15.3% and maintaining robustness as task complexity increases. Code is available at https://github.com/aiming-lab/SkillRL.

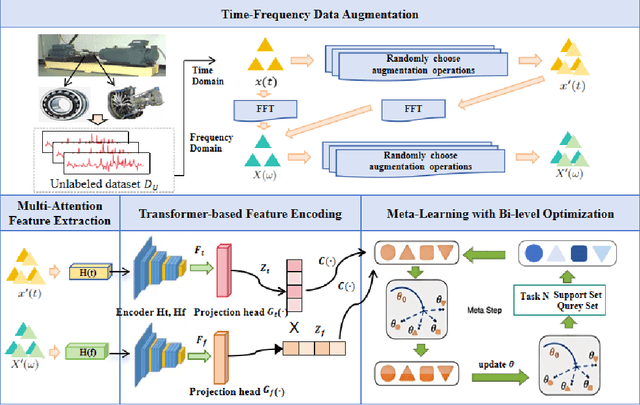

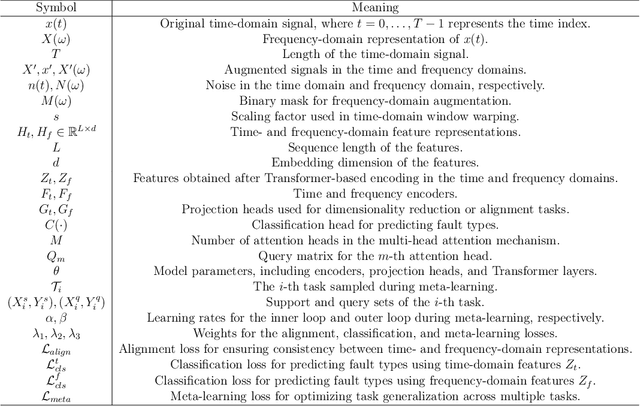

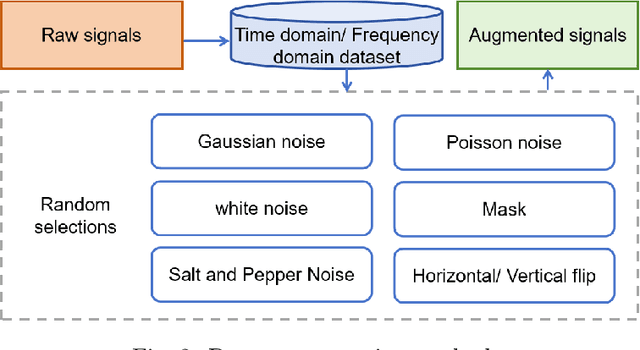

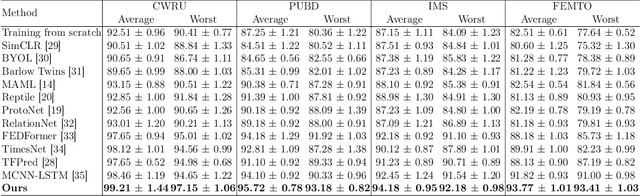

Unsupervised Multi-Attention Meta Transformer for Rotating Machinery Fault Diagnosis

Sep 11, 2025

Abstract:The intelligent fault diagnosis of rotating mechanical equipment usually requires a large amount of labeled sample data. However, in practical industrial applications, acquiring enough data is both challenging and expensive in terms of time and cost. Moreover, different types of rotating mechanical equipment have different mechanical properties and therefore require separate training of diagnostic models for each case. To address the challenges of limited fault samples and the lack of generalizability in prediction models for practical engineering applications, we propose a Multi-Attention Meta Transformer method for few-shot unsupervised rotating machinery fault diagnosis (MMT-FD). This framework extracts potential fault representations from unlabeled data and demonstrates strong generalization capabilities, making it suitable for diagnosing faults across various types of mechanical equipment. The MMT-FD framework integrates a time-frequency domain encoder and a meta-learning generalization model. The time-frequency domain encoder predicts status representations generated through random augmentations in the time-frequency domain. These enhanced data are then fed into a meta-learning network for classification and generalization training, followed by fine-tuning using a limited amount of labeled data. The model is iteratively optimized using a small number of contrastive learning iterations, resulting in high efficiency. To validate the framework, we conducted experiments on a bearing fault dataset and rotor test bench data. The results demonstrate that the MMT-FD model achieves 99% fault diagnosis accuracy with only 1% of labeled sample data, exhibiting robust generalization capabilities.

LangScene-X: Reconstruct Generalizable 3D Language-Embedded Scenes with TriMap Video Diffusion

Jul 03, 2025

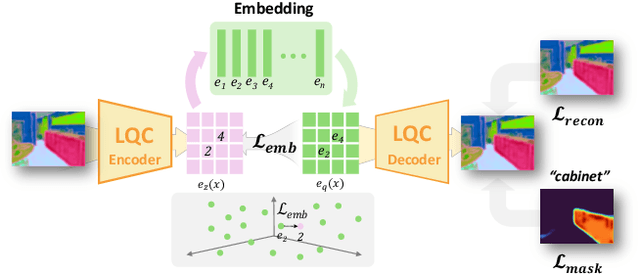

Abstract:Recovering 3D structures with open-vocabulary scene understanding from 2D images is a fundamental but daunting task. Recent developments have achieved this by performing per-scene optimization with embedded language information. However, they heavily rely on the calibrated dense-view reconstruction paradigm, thereby suffering from severe rendering artifacts and implausible semantic synthesis when limited views are available. In this paper, we introduce a novel generative framework, coined LangScene-X, to unify and generate 3D consistent multi-modality information for reconstruction and understanding. Powered by the generative capability of creating more consistent novel observations, we can build generalizable 3D language-embedded scenes from only sparse views. Specifically, we first train a TriMap video diffusion model that can generate appearance (RGBs), geometry (normals), and semantics (segmentation maps) from sparse inputs through progressive knowledge integration. Furthermore, we propose a Language Quantized Compressor (LQC), trained on large-scale image datasets, to efficiently encode language embeddings, enabling cross-scene generalization without per-scene retraining. Finally, we reconstruct the language surface fields by aligning language information onto the surface of 3D scenes, enabling open-ended language queries. Extensive experiments on real-world data demonstrate the superiority of our LangScene-X over state-of-the-art methods in terms of quality and generalizability. Project Page: https://liuff19.github.io/LangScene-X.

Text2Grad: Reinforcement Learning from Natural Language Feedback

May 28, 2025

Abstract:Traditional RLHF optimizes language models with coarse, scalar rewards that mask the fine-grained reasons behind success or failure, leading to slow and opaque learning. Recent work augments RL with textual critiques through prompting or reflection, improving interpretability but leaving model parameters untouched. We introduce Text2Grad, a reinforcement-learning paradigm that turns free-form textual feedback into span-level gradients. Given human (or programmatic) critiques, Text2Grad aligns each feedback phrase with the relevant token spans, converts these alignments into differentiable reward signals, and performs gradient updates that directly refine the offending portions of the model's policy. This yields precise, feedback-conditioned adjustments instead of global nudges. Text2Grad is realized through three components: (1) a high-quality feedback-annotation pipeline that pairs critiques with token spans; (2) a fine-grained reward model that predicts span-level rewards on answers while generating explanatory critiques; and (3) a span-level policy optimizer that back-propagates natural-language gradients. Across summarization, code generation, and question answering, Text2Grad consistently surpasses scalar-reward RL and prompt-only baselines, providing both higher task metrics and richer interpretability. Our results demonstrate that natural-language feedback, when converted to gradients, is a powerful signal for fine-grained policy optimization. The code for our method is available at https://github.com/microsoft/Text2Grad
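
To make the contrast between a single scalar reward and span-level rewards concrete, the following is a minimal sketch, not the Text2Grad implementation. It assumes that critiques have already been aligned to token spans, and the helper names `span_rewards_to_token_rewards` and `token_weighted_loss` are hypothetical. Each span reward is spread over the tokens it covers, and a REINFORCE-style surrogate loss then weights each token's log-probability by its own reward.

```python
from typing import List, Tuple

# Hypothetical span annotation: (start_token, end_token_exclusive, reward).
Span = Tuple[int, int, float]


def span_rewards_to_token_rewards(num_tokens: int, spans: List[Span]) -> List[float]:
    """Spread each span-level reward over the tokens that the span covers."""
    rewards = [0.0] * num_tokens
    for start, end, reward in spans:
        if not (0 <= start < end <= num_tokens):
            raise ValueError(f"span ({start}, {end}) is outside the sequence")
        for i in range(start, end):
            rewards[i] += reward
    return rewards


def token_weighted_loss(log_probs: List[float], token_rewards: List[float]) -> float:
    """REINFORCE-style surrogate: minimise -sum_t r_t * log pi(y_t)."""
    return -sum(r * lp for r, lp in zip(token_rewards, log_probs))


# Tokens 1-2 were criticised, tokens 3-4 were praised, token 0 got no feedback.
token_rewards = span_rewards_to_token_rewards(5, [(1, 3, -1.0), (3, 5, 0.5)])
```

Tokens without feedback receive zero reward in this sketch, so they contribute nothing to the surrogate loss, whereas a scalar reward would push on every token equally.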

VideoScene: Distilling Video Diffusion Model to Generate 3D Scenes in One Step

Apr 03, 2025

Abstract:Recovering 3D scenes from sparse views is a challenging task because the problem is inherently ill-posed. Conventional methods have developed specialized solutions (e.g., geometry regularization or feed-forward deterministic models) to mitigate the issue. However, their performance still degrades when the input views overlap minimally and carry insufficient visual information. Fortunately, recent video generative models show promise in addressing this challenge, as they are capable of generating video clips with plausible 3D structures. Powered by large pretrained video diffusion models, some pioneering studies have started to explore the potential of video generative priors and create 3D scenes from sparse views. Despite impressive improvements, they are limited by slow inference time and the lack of 3D constraints, leading to inefficiencies and reconstruction artifacts that do not align with real-world geometry. In this paper, we propose VideoScene to distill the video diffusion model to generate 3D scenes in one step, aiming to build an efficient and effective tool to bridge the gap from video to 3D. Specifically, we design a 3D-aware leap flow distillation strategy to leap over time-consuming redundant information and train a dynamic denoising policy network to adaptively determine the optimal leap timestep during inference. Extensive experiments demonstrate that our VideoScene achieves faster and superior 3D scene generation results than previous video diffusion models, highlighting its potential as an efficient tool for future video to 3D applications. Project Page: https://hanyang-21.github.io/VideoScene

Video-T1: Test-Time Scaling for Video Generation

Mar 24, 2025

Abstract:By scaling up training data, model size, and computational cost, video generation has achieved impressive results in digital creation, enabling users to express creativity across various domains. Recently, researchers in Large Language Models (LLMs) have expanded the scaling to test-time, which can significantly improve LLM performance by using more inference-time computation. Instead of scaling up video foundation models through expensive training costs, we explore the power of Test-Time Scaling (TTS) in video generation, aiming to answer the question: if a video generation model is allowed to use a non-trivial amount of inference-time compute, how much can it improve generation quality given a challenging text prompt? In this work, we reinterpret the test-time scaling of video generation as a search problem: sampling better trajectories from Gaussian noise space to the target video distribution. Specifically, we build the search space with test-time verifiers to provide feedback and heuristic algorithms to guide the search process. Given a text prompt, we first explore an intuitive linear search strategy by increasing noise candidates at inference time. As full-step denoising all frames simultaneously requires heavy test-time computation costs, we further design a more efficient TTS method for video generation called Tree-of-Frames (ToF) that adaptively expands and prunes video branches in an autoregressive manner. Extensive experiments on text-conditioned video generation benchmarks demonstrate that increasing test-time compute consistently leads to significant improvements in the quality of videos. Project page: https://liuff19.github.io/Video-T1
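
The linear search strategy mentioned in the abstract can be read as best-of-N selection: draw several Gaussian noise candidates, generate from each, and keep the sample the verifier scores highest. The sketch below illustrates only that idea under toy assumptions. `toy_generate` and `toy_verify` are hypothetical stand-ins for a video diffusion model and a test-time verifier, and nothing here reflects the Video-T1 code or the Tree-of-Frames method.

```python
import math
import random
from typing import Callable, List, Tuple

Sample = List[float]


def linear_search(
    generate: Callable[[List[float]], Sample],
    verify: Callable[[Sample], float],
    num_candidates: int,
    noise_dim: int = 4,
    seed: int = 0,
) -> Tuple[Sample, float]:
    """Best-of-N: generate from N noise draws and keep the top-scoring sample."""
    rng = random.Random(seed)
    best_sample: Sample = []
    best_score = float("-inf")
    for _ in range(num_candidates):
        noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
        sample = generate(noise)   # stand-in for full-step denoising
        score = verify(sample)     # stand-in for a test-time verifier
        if score > best_score:
            best_sample, best_score = sample, score
    return best_sample, best_score


def toy_generate(noise: List[float]) -> Sample:
    """Hypothetical generator: squashes noise into (-1, 1)."""
    return [math.tanh(x) for x in noise]


def toy_verify(sample: Sample) -> float:
    """Hypothetical verifier: prefers samples close to 0.5 in every dimension."""
    return -sum((x - 0.5) ** 2 for x in sample)


_, score_small = linear_search(toy_generate, toy_verify, num_candidates=4)
_, score_large = linear_search(toy_generate, toy_verify, num_candidates=16)
```

Because both runs share a seed, the 16-candidate run evaluates the same first four candidates as the 4-candidate run, so its best score can only match or exceed it. The cost grows linearly with the number of candidates, which is the overhead the abstract says Tree-of-Frames is designed to reduce.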

Robust Dynamic Facial Expression Recognition

Feb 22, 2025

Abstract:The study of Dynamic Facial Expression Recognition (DFER) is a nascent field of research that involves the automated recognition of facial expressions in video data. Although existing research has primarily focused on learning representations under noisy and hard samples, the issue of the coexistence of both types of samples remains unresolved. In order to overcome this challenge, this paper proposes a robust method of distinguishing between hard and noisy samples. This is achieved by evaluating the prediction agreement of the model on different sampled clips of the video. Subsequently, methodologies that reinforce the learning of hard samples and mitigate the impact of noisy samples can be employed. Moreover, to identify the principal expression in a video and enhance the model's capacity for representation learning, a method comprising a key expression re-sampling framework and a dual-stream hierarchical network is proposed, namely Robust Dynamic Facial Expression Recognition (RDFER). The key expression re-sampling framework is designed to identify the key expression, thereby mitigating the potential confusion caused by non-target expressions. RDFER employs two sequence models with the objective of disentangling short-term facial movements and long-term emotional changes. The proposed method has been shown to outperform current state-of-the-art approaches in DFER through extensive experimentation on benchmark datasets such as DFEW and FERV39K. A comprehensive analysis provides valuable insights and observations regarding the proposed agreement. This work has significant implications for the field of dynamic facial expression recognition and promotes the further development of the field of noise-consistent robust learning in dynamic facial expression recognition. The code is available from https://github.com/Cross-Innovation-Lab/RDFER.

Bayesian Optimization with Preference Exploration by Monotonic Neural Network Ensemble

Jan 30, 2025

Abstract:Many real-world black-box optimization problems have multiple conflicting objectives. Rather than attempting to approximate the entire set of Pareto-optimal solutions, interactive preference learning allows the search to focus on the most relevant subset. However, few previous studies have exploited the fact that utility functions are usually monotonic. In this paper, we address the Bayesian Optimization with Preference Exploration (BOPE) problem and propose using a neural network ensemble as a utility surrogate model. This approach naturally integrates monotonicity and supports pairwise comparison data. Our experiments demonstrate that the proposed method outperforms state-of-the-art approaches and exhibits robustness to noise in utility evaluations. An ablation study highlights the critical role of monotonicity in enhancing performance.
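
The abstract does not say how monotonicity is built into the ensemble. One common construction, shown here purely as an assumption, keeps every weight positive (via a softplus reparameterisation) and uses a non-decreasing activation, so the predicted utility can never decrease when any objective increases. Pairwise comparisons can then be modelled with a logistic (Bradley-Terry style) likelihood on the utility difference. The names `MonotonicMLP`, `ensemble_utility`, and `preference_probability` are hypothetical, and the networks below are randomly initialised rather than trained.

```python
import math
import random
from typing import List, Sequence


def softplus(x: float) -> float:
    """Numerically stable softplus; always strictly positive."""
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


class MonotonicMLP:
    """One-hidden-layer network that is non-decreasing in every input."""

    def __init__(self, n_in: int, n_hidden: int, rng: random.Random) -> None:
        self.w1 = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [rng.gauss(0, 1) for _ in range(n_hidden)]
        self.w2 = [rng.gauss(0, 1) for _ in range(n_hidden)]

    def __call__(self, y: Sequence[float]) -> float:
        # softplus(w) > 0 and tanh is non-decreasing, so the output is monotone.
        hidden = [
            math.tanh(sum(softplus(w) * v for w, v in zip(row, y)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        return sum(softplus(w) * h for w, h in zip(self.w2, hidden))


def ensemble_utility(models: List[MonotonicMLP], y: Sequence[float]) -> float:
    """Mean utility over ensemble members."""
    return sum(m(y) for m in models) / len(models)


def preference_probability(u_a: float, u_b: float) -> float:
    """Logistic model of P(a preferred over b) given two utilities."""
    return 1.0 / (1.0 + math.exp(-(u_a - u_b)))


models = [MonotonicMLP(n_in=2, n_hidden=8, rng=random.Random(s)) for s in range(5)]
u_low = ensemble_utility(models, [0.2, 0.5])
u_high = ensemble_utility(models, [0.7, 0.5])  # first objective improved
```

Since each member is monotone, the ensemble mean is monotone too, so `u_high` is never below `u_low` regardless of the random weights.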

Respecting the limit: Bayesian optimization with a bound on the optimal value

Nov 07, 2024

Abstract:In many real-world optimization problems, we have prior information about what objective function values are achievable. In this paper, we study the scenario in which we have either exact knowledge of the minimum value or a, possibly inexact, lower bound on its value. We propose bound-aware Bayesian optimization (BABO), a Bayesian optimization method that uses a new surrogate model and acquisition function to utilize such prior information. We present SlogGP, a new surrogate model that incorporates bound information, and adapt the Expected Improvement (EI) acquisition function accordingly. Empirical results on a variety of benchmarks demonstrate the benefit of taking prior information about the optimal value into account, and that the proposed approach significantly outperforms existing techniques. Furthermore, we notice that even in the absence of prior information on the bound, the proposed SlogGP surrogate model still performs better than the standard GP model in most cases, which we explain by its greater expressiveness.
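
For reference, the standard Expected Improvement under a Gaussian posterior has a closed form, sketched below for minimization. This is the textbook GP version that the abstract says is adapted for SlogGP; the adapted acquisition function is not given in the abstract and is not reproduced here. The function and argument names are illustrative.

```python
import math


def normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, best: float) -> float:
    """Standard EI for minimization with posterior N(mu, sigma^2).

    EI = (best - mu) * Phi(z) + sigma * phi(z), where z = (best - mu) / sigma.
    """
    if sigma <= 0.0:
        # Degenerate posterior: the improvement is deterministic.
        return max(best - mu, 0.0)
    z = (best - mu) / sigma
    return (best - mu) * normal_cdf(z) + sigma * normal_pdf(z)


# At a point whose posterior mean equals the incumbent, EI reduces to sigma * phi(0).
ei_at_incumbent = expected_improvement(mu=0.0, sigma=1.0, best=0.0)
```

The first term rewards points whose posterior mean is already below the incumbent, while the second rewards posterior uncertainty, which is how EI trades off exploitation against exploration.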

Dynamical system prediction from sparse observations using deep neural networks with Voronoi tessellation and physics constraint

Aug 31, 2024

Abstract:Despite the success of various methods in addressing the issue of spatial reconstruction of dynamical systems with sparse observations, spatio-temporal prediction for sparse fields remains a challenge. Existing Kriging-based frameworks for spatio-temporal sparse field prediction fail to meet the accuracy and inference time required for nonlinear dynamic prediction problems. In this paper, we introduce the Dynamical System Prediction from Sparse Observations using Voronoi Tessellation (DSOVT) framework, an innovative methodology based on Voronoi tessellation that comes in two variants: one combining a convolutional encoder-decoder (CED) with long short-term memory (LSTM), and one using Convolutional Long Short-Term Memory (ConvLSTM). By integrating Voronoi tessellations with spatio-temporal deep learning models, DSOVT is adept at predicting dynamical systems with unstructured, sparse, and time-varying observations. CED-LSTM maps Voronoi tessellations into a low-dimensional representation for time series prediction, while ConvLSTM directly uses these tessellations in an end-to-end predictive model. Furthermore, we incorporate physics constraints during the training process for dynamical systems with explicit formulas. Compared to purely data-driven models, our physics-based approach enables the model to learn physical laws within explicitly formulated dynamics, thereby enhancing the robustness and accuracy of rolling forecasts. Numerical experiments on real sea surface data and shallow water systems clearly demonstrate our framework's accuracy and computational efficiency with sparse and time-varying observations.
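
A Voronoi tessellation turns scattered sensor readings into a dense, image-like field by giving every grid cell the value of its nearest sensor, which is what lets convolutional models consume sparse and moving observations. The sketch below shows that rasterisation step only, as a brute-force illustration under assumed inputs. The function name `voronoi_rasterize` and the `(row, col, value)` sensor format are hypothetical, not taken from the DSOVT code.

```python
from typing import List, Tuple

# Hypothetical sensor record: (row, col, observed_value).
Sensor = Tuple[float, float, float]


def voronoi_rasterize(sensors: List[Sensor], height: int, width: int) -> List[List[float]]:
    """Fill a height x width grid with the value of the nearest sensor per cell."""
    if not sensors:
        raise ValueError("at least one sensor is required")
    grid = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Squared Euclidean distance; ties go to the earlier sensor in the list.
            nearest = min(sensors, key=lambda s: (s[0] - r) ** 2 + (s[1] - c) ** 2)
            grid[r][c] = nearest[2]
    return grid


# Two sensors on a 3 x 3 grid produce two Voronoi cells.
field = voronoi_rasterize([(0, 0, 1.0), (2, 2, 5.0)], height=3, width=3)
```

The output grid has the same shape regardless of how many sensors there are or where they sit, so sensor counts and positions can change from one time step to the next without altering the model's input dimensions.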
