Sibo Cheng

Trustworthy Data-Driven Wildfire Risk Prediction and Understanding in Western Canada

Jan 04, 2026

Abstract:In recent decades, the intensification of wildfire activity in western Canada has resulted in substantial socio-economic and environmental losses. Accurate wildfire risk prediction is hindered by the intrinsic stochasticity of ignition and spread and by nonlinear interactions among fuel conditions, meteorology, climate variability, topography, and human activities, challenging the reliability and interpretability of purely data-driven models. We propose a trustworthy data-driven wildfire risk prediction framework based on long-sequence, multi-scale temporal modeling, which integrates heterogeneous drivers while explicitly quantifying predictive uncertainty and enabling process-level interpretation. Evaluated over western Canada during the record-breaking 2023 and 2024 fire seasons, the proposed model outperforms existing time-series approaches, achieving an F1 score of 0.90 and a PR-AUC of 0.98 with low computational cost. Uncertainty-aware analysis reveals structured spatial and seasonal patterns in predictive confidence, highlighting increased uncertainty associated with ambiguous predictions and spatiotemporal decision boundaries. SHAP-based interpretation provides mechanistic understanding of wildfire controls, showing that temperature-related drivers dominate wildfire risk in both years, while moisture-related constraints play a stronger role in shaping spatial and land-cover-specific contrasts in 2024 compared to the widespread hot and dry conditions of 2023. Data and code are available at https://github.com/SynUW/mmFire.

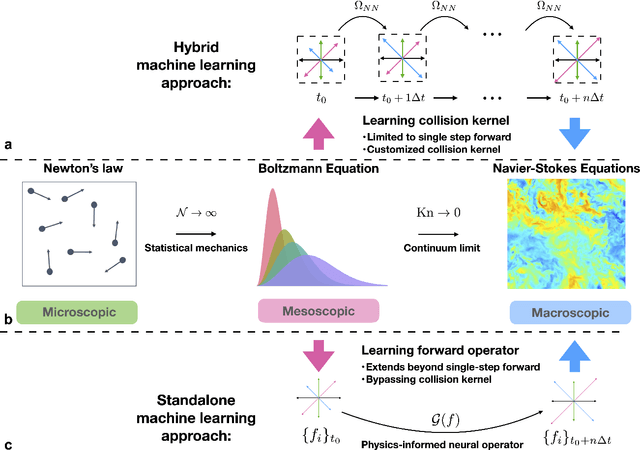

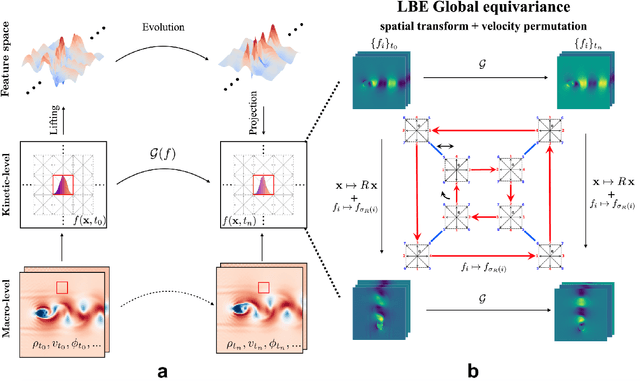

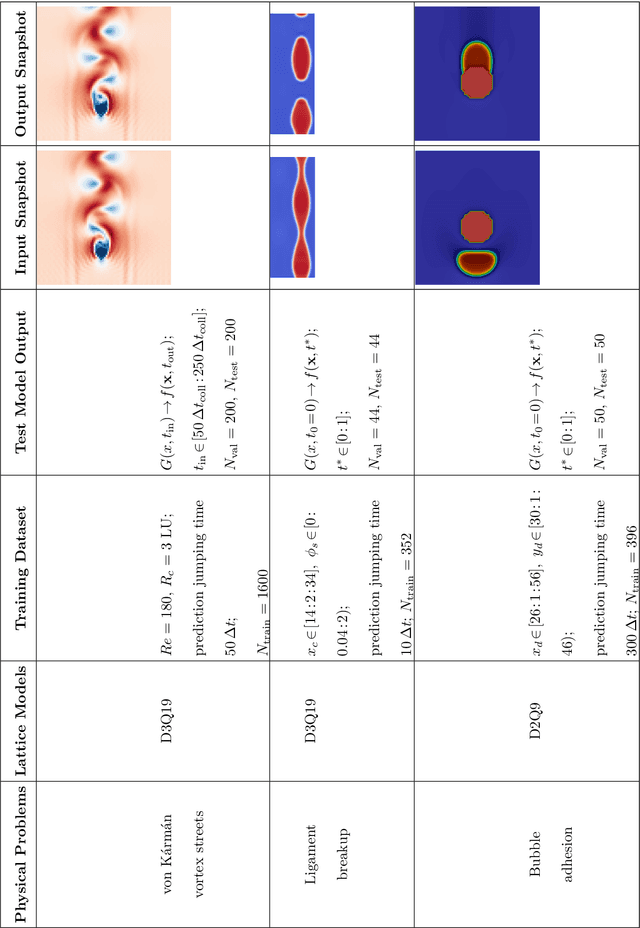

Fast-Forward Lattice Boltzmann: Learning Kinetic Behaviour with Physics-Informed Neural Operators

Sep 26, 2025

Abstract:The lattice Boltzmann equation (LBE), rooted in kinetic theory, provides a powerful framework for capturing complex flow behaviour by describing the evolution of single-particle distribution functions (PDFs). Despite its success, solving the LBE numerically remains computationally intensive due to strict time-step restrictions imposed by collision kernels. Here, we introduce a physics-informed neural operator framework for the LBE that enables prediction over large time horizons without step-by-step integration, effectively bypassing the need to explicitly solve the collision kernel. We incorporate intrinsic moment-matching constraints of the LBE, along with global equivariance of the full distribution field, enabling the model to capture the complex dynamics of the underlying kinetic system. Our framework is discretization-invariant, enabling models trained on coarse lattices to generalise to finer ones (kinetic super-resolution). In addition, it is agnostic to the specific form of the underlying collision model, which makes it naturally applicable across different kinetic datasets regardless of the governing dynamics. Our results demonstrate robustness across complex flow scenarios, including von Karman vortex shedding, ligament breakup, and bubble adhesion. This establishes a new data-driven pathway for modelling kinetic systems.

Comparative and Interpretative Analysis of CNN and Transformer Models in Predicting Wildfire Spread Using Remote Sensing Data

Mar 18, 2025

Abstract:Facing the escalating threat of global wildfires, numerous computer vision techniques using remote sensing data have been applied in this area. However, the selection of deep learning methods for wildfire prediction remains uncertain due to the lack of quantitative and explainable comparative analysis, which is crucial for improving prevention measures and refining models. This study aims to thoroughly compare the performance, efficiency, and explainability of four prevalent deep learning architectures: Autoencoder, ResNet, UNet, and Transformer-based Swin-UNet. Employing a real-world dataset that includes nearly a decade of remote sensing data from California, U.S., these models predict the spread of wildfires for the following day. Through detailed quantitative comparison analysis, we discovered that Transformer-based Swin-UNet and UNet generally outperform Autoencoder and ResNet, particularly due to the advanced attention mechanisms in Transformer-based Swin-UNet and the efficient use of skip connections in both UNet and Transformer-based Swin-UNet, which contribute to superior predictive accuracy and model interpretability. We then applied XAI techniques to all four models, which not only enhances the clarity and trustworthiness of the models but also promotes focused improvements in wildfire prediction capabilities. The XAI analysis reveals that UNet and Transformer-based Swin-UNet are able to focus on critical features such as 'Previous Fire Mask', 'Drought', and 'Vegetation' more effectively than the other two models, while also maintaining balanced attention to the remaining features, leading to their superior performance. The insights from our thorough comparative analysis offer substantial implications for future model design and also provide guidance for model selection in different scenarios.

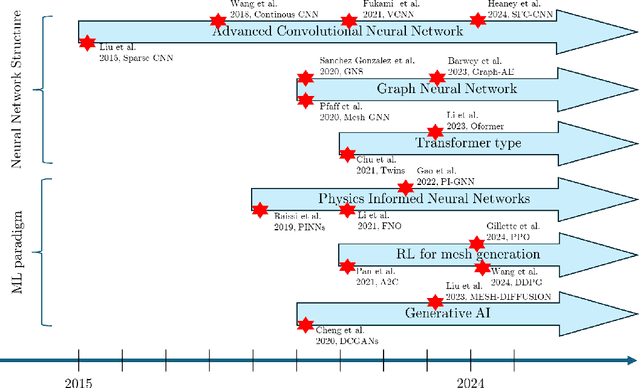

Machine learning for modelling unstructured grid data in computational physics: a review

Feb 13, 2025

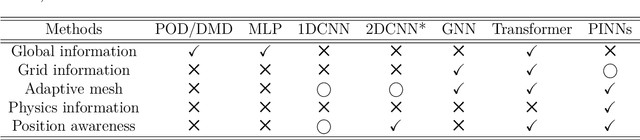

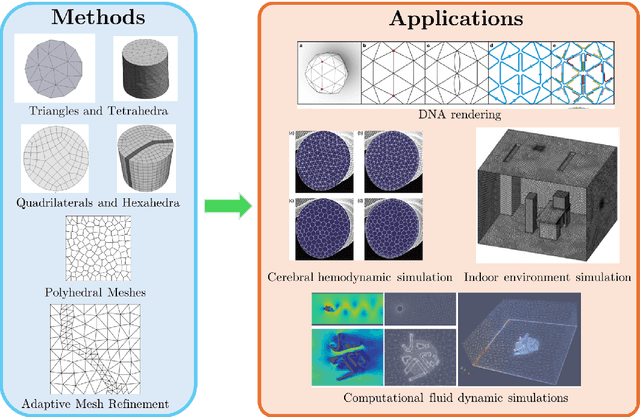

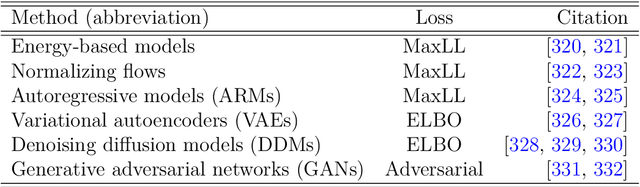

Abstract:Unstructured grid data are essential for modelling complex geometries and dynamics in computational physics. Yet, their inherent irregularity presents significant challenges for conventional machine learning (ML) techniques. This paper provides a comprehensive review of advanced ML methodologies designed to handle unstructured grid data in high-dimensional dynamical systems. Key approaches discussed include graph neural networks, transformer models with spatial attention mechanisms, interpolation-integrated ML methods, and meshless techniques such as physics-informed neural networks. These methodologies have proven effective across diverse fields, including fluid dynamics and environmental simulations. This review is intended as a guidebook for computational scientists seeking to apply ML approaches to unstructured grid data in their domains, as well as for ML researchers looking to address challenges in computational physics. It places special focus on how ML methods can overcome the inherent limitations of traditional numerical techniques and, conversely, how insights from computational physics can inform ML development. To support benchmarking, this review also provides a summary of open-access datasets of unstructured grid data in computational physics. Finally, emerging directions such as generative models with unstructured data, reinforcement learning for mesh generation, and hybrid physics-data-driven paradigms are discussed to inspire future advancements in this evolving field.

Fire-Image-DenseNet (FIDN) for predicting wildfire burnt area using remote sensing data

Dec 02, 2024

Abstract:Predicting the extent of massive wildfires once ignited is essential to reduce the subsequent socioeconomic losses and environmental damage, but challenging because of the complexity of fire behaviour. Existing physics-based models are limited in predicting large or long-duration wildfire events. Here, we develop a deep-learning-based predictive model, Fire-Image-DenseNet (FIDN), that uses spatial features derived from both near real-time and reanalysis data on the environmental and meteorological drivers of wildfire. We trained and tested this model using more than 300 individual wildfires that occurred between 2012 and 2019 in the western US. In contrast to existing models, the performance of FIDN does not degrade with fire size or duration. Furthermore, it predicts final burnt area accurately even in very heterogeneous landscapes in terms of fuel density and flammability. The FIDN model showed higher accuracy, with a mean squared error (MSE) about 82% and 67% lower than those of the predictive models based on cellular automata (CA) and the minimum travel time (MTT) approaches, respectively. Its structural similarity index measure (SSIM) averages 97%, outperforming the CA and FlamMap MTT models by 6% and 2%, respectively. Additionally, FIDN is approximately three orders of magnitude faster than both CA and MTT models. The enhanced computational efficiency and accuracy advancements offer vital insights for strategic planning and resource allocation for firefighting operations.

Dynamical system prediction from sparse observations using deep neural networks with Voronoi tessellation and physics constraint

Aug 31, 2024

Abstract:Despite the success of various methods in addressing the issue of spatial reconstruction of dynamical systems with sparse observations, spatio-temporal prediction for sparse fields remains a challenge. Existing Kriging-based frameworks for spatio-temporal sparse field prediction fail to meet the accuracy and inference time required for nonlinear dynamic prediction problems. In this paper, we introduce the Dynamical System Prediction from Sparse Observations using Voronoi Tessellation (DSOVT) framework, an innovative methodology based on Voronoi tessellation that combines a convolutional encoder-decoder (CED) with long short-term memory (LSTM) and also utilizes Convolutional Long Short-Term Memory (ConvLSTM). By integrating Voronoi tessellations with spatio-temporal deep learning models, DSOVT is adept at predicting dynamical systems with unstructured, sparse, and time-varying observations. CED-LSTM maps Voronoi tessellations into a low-dimensional representation for time series prediction, while ConvLSTM directly uses these tessellations in an end-to-end predictive model. Furthermore, we incorporate physics constraints during the training process for dynamical systems with explicit formulas. Compared to purely data-driven models, our physics-based approach enables the model to learn physical laws within explicitly formulated dynamics, thereby enhancing the robustness and accuracy of rolling forecasts. Numerical experiments on real sea surface data and shallow water systems clearly demonstrate our framework's accuracy and computational efficiency with sparse and time-varying observations.
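To illustrate the Voronoi tessellation step mentioned in this abstract, here is a minimal sketch of how sparse point observations can be spread over a regular grid so that a convolutional model can consume them. It is not the DSOVT implementation: the function name `voronoi_fill`, the `(row, col, value)` sensor format, and the nearest-sensor rule with squared Euclidean distance are all assumptions made for illustration.

```python
def voronoi_fill(sensors, height, width):
    """Fill a height x width grid with the value of the nearest sensor.

    sensors: list of (row, col, value) tuples (hypothetical format).
    Each grid cell takes the value of its closest sensor, which is the
    discrete analogue of assigning the cell to that sensor's Voronoi region.
    """
    if not sensors:
        raise ValueError("at least one sensor is required")
    field = []
    for r in range(height):
        row = []
        for c in range(width):
            # Nearest sensor by squared Euclidean distance;
            # ties go to the sensor listed first.
            _, _, value = min(
                sensors,
                key=lambda s: (s[0] - r) ** 2 + (s[1] - c) ** 2,
            )
            row.append(value)
        field.append(row)
    return field


# Two sensors in opposite corners of a 4 x 4 grid.
sensors = [(0, 0, 1.0), (3, 3, 2.0)]
field = voronoi_fill(sensors, 4, 4)
```

Because the grid is rebuilt from whatever sensors are present, the same function applies when sensor positions or counts change between time steps, which is the situation of time-varying observations described above.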

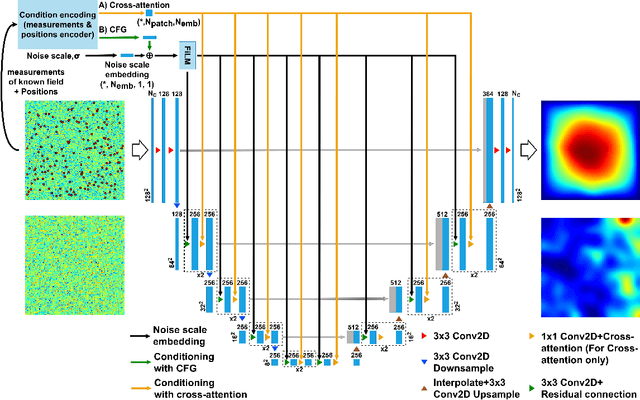

Spatially-Aware Diffusion Models with Cross-Attention for Global Field Reconstruction with Sparse Observations

Aug 30, 2024

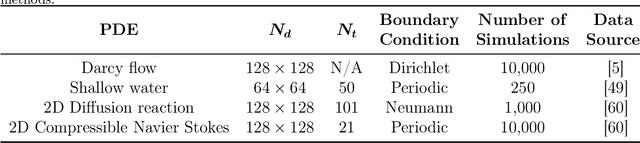

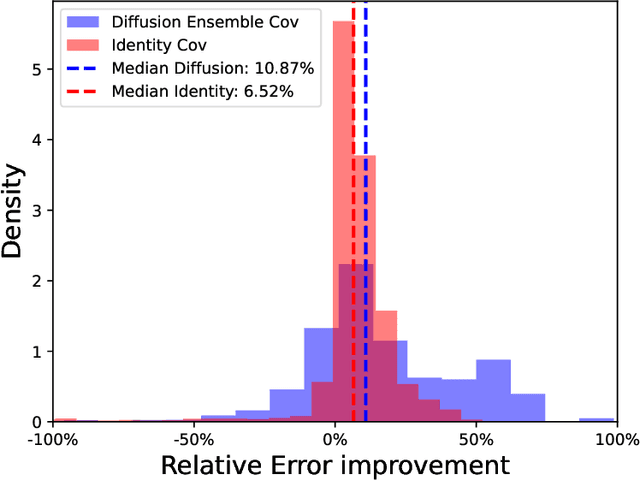

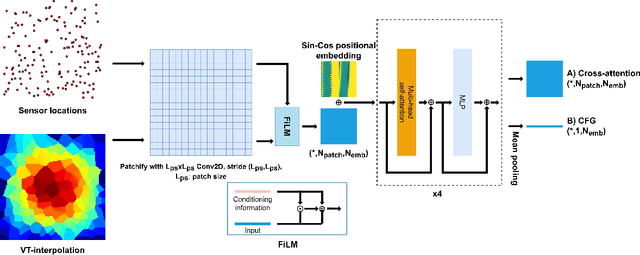

Abstract:Diffusion models have gained attention for their ability to represent complex distributions and incorporate uncertainty, making them ideal for robust predictions in the presence of noisy or incomplete data. In this study, we develop and enhance score-based diffusion models in field reconstruction tasks, where the goal is to estimate complete spatial fields from partial observations. We introduce a condition encoding approach to construct a tractable mapping between observed and unobserved regions using a learnable integration of sparse observations and interpolated fields as an inductive bias. With refined sensing representations and an unraveled temporal dimension, our method can handle arbitrary moving sensors and effectively reconstruct fields. Furthermore, we conduct a comprehensive benchmark of our approach against a deterministic interpolation-based method across various static and time-dependent PDEs. Our study attempts to address the gap in strong baselines for evaluating performance across varying sampling hyperparameters, noise levels, and conditioning methods. Our results show that diffusion models with cross-attention and the proposed conditional encoding generally outperform other methods under noisy conditions, although the deterministic method excels with noiseless data. Additionally, both the diffusion models and the deterministic method surpass the numerical approach in accuracy and computational cost for the steady problem. We also demonstrate the ability of the model to capture possible reconstructions and improve the accuracy of fused results in covariance-based correction tasks using ensemble sampling.

Deep learning surrogate models of JULES-INFERNO for wildfire prediction on a global scale

Aug 30, 2024

Abstract:Global wildfire models play a crucial role in anticipating and responding to changing wildfire regimes. JULES-INFERNO is a global vegetation and fire model simulating wildfire emissions and area burnt on a global scale. However, because of the high data dimensionality and system complexity, JULES-INFERNO's computational costs make it challenging to apply to fire risk forecasting with unseen initial conditions. Typically, running JULES-INFERNO for 30 years of prediction will take several hours on High Performance Computing (HPC) clusters. To tackle this bottleneck, two data-driven models are built in this work based on Deep Learning techniques to surrogate the JULES-INFERNO model and speed up global wildfire forecasting. More precisely, these machine learning models take global temperature, vegetation density, soil moisture and previous forecasts as inputs to predict the subsequent global area burnt on an iterative basis. Average Error per Pixel (AEP) and Structural Similarity Index Measure (SSIM) are used as metrics to evaluate the performance of the proposed surrogate models. A fine tuning strategy is also proposed in this work to improve the algorithm performance for unseen scenarios. Numerical results show a strong performance of the proposed models, in terms of both computational efficiency (less than 20 seconds for 30 years of prediction on a laptop CPU) and prediction accuracy (with AEP under 0.3% and SSIM over 98% compared to the outputs of JULES-INFERNO).

TorchDA: A Python package for performing data assimilation with deep learning forward and transformation functions

Aug 30, 2024

Abstract:Data assimilation techniques are often confronted with challenges in handling complex high dimensional physical systems, because high precision simulation of such systems is computationally expensive and the exact observation functions that can be applied in these systems are difficult to obtain. This has prompted growing interest in integrating deep learning models within data assimilation workflows, but current software packages for data assimilation cannot handle deep learning models inside. This study presents a novel Python package seamlessly combining data assimilation with deep neural networks to serve as models for state transition and observation functions. The package, named TorchDA, implements Kalman Filter, Ensemble Kalman Filter (EnKF), 3D Variational (3DVar), and 4D Variational (4DVar) algorithms, allowing flexible algorithm selection based on application requirements. Comprehensive experiments conducted on the Lorenz 63 and a two-dimensional shallow water system demonstrate significantly enhanced performance over standalone model predictions without assimilation. The shallow water analysis validates data assimilation capabilities mapping between different physical quantity spaces in either full space or reduced order space. Overall, this innovative software package enables flexible integration of deep learning representations within data assimilation, conferring a versatile tool to tackle complex high dimensional dynamical systems across scientific domains.
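For readers unfamiliar with the algorithms listed in this abstract, the following is a minimal sketch of a single Kalman filter analysis step for a scalar state with a linear observation operator. It does not use or reproduce the TorchDA API: the function `kalman_update` and all of its parameter names are hypothetical, and the scalar linear setting is a simplifying assumption (the package targets high dimensional systems with neural-network operators).

```python
def kalman_update(x_prior, p_prior, y, h, r):
    """One scalar Kalman filter analysis step (illustrative only).

    x_prior: forecast (background) state estimate
    p_prior: forecast error variance
    y:       observation
    h:       linear observation operator, so the predicted observation is h * x
    r:       observation error variance
    """
    innovation = y - h * x_prior           # observation minus its prediction
    s = h * p_prior * h + r                # innovation variance
    k = p_prior * h / s                    # Kalman gain
    x_post = x_prior + k * innovation      # analysis state
    p_post = (1.0 - k * h) * p_prior       # analysis error variance
    return x_post, p_post


# Forecast and observation are equally uncertain, so the analysis
# lands halfway between them and the variance is halved.
x, p = kalman_update(x_prior=0.0, p_prior=1.0, y=2.0, h=1.0, r=1.0)
```

The gain `k` weights the observation against the forecast according to their variances; replacing `h` with a learned, possibly nonlinear observation function is the kind of extension the abstract describes.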

Deep learning-based sequential data assimilation for chaotic dynamics identifies local instabilities from single state forecasts

Aug 08, 2024

Abstract:We investigate the ability to discover data assimilation (DA) schemes meant for chaotic dynamics with deep learning (DL). The focus is on learning the analysis step of sequential DA, from state trajectories and their observations, using a simple residual convolutional neural network, while assuming the dynamics to be known. Experiments are performed with the Lorenz 96 dynamics, which display spatiotemporal chaos and for which solid benchmarks for DA performance exist. The accuracy of the states obtained from the learned analysis approaches that of the best possibly tuned ensemble Kalman filter (EnKF), and is far better than that of variational DA alternatives. Critically, this can be achieved while propagating even just a single state in the forecast step. We investigate the reason for achieving ensemble filtering accuracy without an ensemble. We diagnose that the analysis scheme actually identifies key dynamical perturbations, mildly aligned with the unstable subspace, from the forecast state alone, without any ensemble-based covariance representation. This reveals that the analysis scheme has learned some multiplicative ergodic theorem associated with the DA process seen as a non-autonomous random dynamical system.
