Linlin Xu

OpenWildlife: Open-Vocabulary Multi-Species Wildlife Detector for Geographically-Diverse Aerial Imagery

Jun 24, 2025

Abstract:We introduce OpenWildlife (OW), an open-vocabulary wildlife detector designed for multi-species identification in diverse aerial imagery. While existing automated methods perform well in specific settings, they often struggle to generalize across different species and environments due to limited taxonomic coverage and rigid model architectures. In contrast, OW leverages language-aware embeddings and a novel adaptation of the Grounding-DINO framework, enabling it to identify species specified through natural language inputs across both terrestrial and marine environments. Trained on 15 datasets, OW outperforms most existing methods, achieving up to 0.981 mAP50 with fine-tuning and 0.597 mAP50 on seven datasets featuring novel species. Additionally, we introduce an efficient search algorithm that combines k-nearest neighbors and breadth-first search to prioritize areas where social species are likely to be found. This approach captures over 95% of species while exploring only 33% of the available images. To support reproducibility, we publicly release our source code and dataset splits, establishing OW as a flexible, cost-effective solution for global biodiversity assessments.
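The abstract does not spell out how k-nearest neighbors and breadth-first search are combined, so the following is only a minimal sketch of one plausible reading: build a k-NN graph over image locations, inspect a few seed images, and expand breadth-first only from images where animals were detected. The names `knn_graph`, `guided_search`, `has_animals`, and `seeds` are hypothetical and are not taken from the released code.

```python
from collections import deque
import math


def knn_graph(coords, k):
    """Map each image index to the indices of its k nearest neighbours."""
    graph = {}
    for i, p in enumerate(coords):
        others = sorted(
            (j for j in range(len(coords)) if j != i),
            key=lambda j: math.dist(p, coords[j]),
        )
        graph[i] = others[:k]
    return graph


def guided_search(coords, has_animals, seeds, k=2):
    """Return the order in which images are inspected.

    Seeds are inspected first; neighbours are queued (breadth-first)
    only when the current image contains a detection, so empty regions
    are never expanded.
    """
    graph = knn_graph(coords, k)
    visited, order = set(), []
    queue = deque(seeds)
    while queue:
        i = queue.popleft()
        if i in visited:
            continue
        visited.add(i)
        order.append(i)
        if has_animals(i):  # assumption: a detector callback per image
            queue.extend(j for j in graph[i] if j not in visited)
    return order


# Toy survey line: a herd around x = 0..2, empty images elsewhere.
coords = [(0, 0), (1, 0), (2, 0), (10, 0), (11, 0), (20, 0)]
herd = {0, 1, 2}
order = guided_search(coords, lambda i: i in herd, seeds=[0, 5], k=2)
print(order)
```

In this toy run the search inspects four of the six images and still reaches every image in the herd, which is the kind of saving the abstract reports at a much larger scale.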

Comparative and Interpretative Analysis of CNN and Transformer Models in Predicting Wildfire Spread Using Remote Sensing Data

Mar 18, 2025

Abstract:Facing the escalating threat of global wildfires, numerous computer vision techniques using remote sensing data have been applied in this area. However, the selection of deep learning methods for wildfire prediction remains uncertain due to the lack of quantitative and explainable comparative analysis, which is crucial for improving prevention measures and refining models. This study aims to thoroughly compare the performance, efficiency, and explainability of four prevalent deep learning architectures: Autoencoder, ResNet, UNet, and Transformer-based Swin-UNet. Employing a real-world dataset that includes nearly a decade of remote sensing data from California, U.S., these models predict the spread of wildfires for the following day. Through detailed quantitative comparison analysis, we discovered that Transformer-based Swin-UNet and UNet generally outperform Autoencoder and ResNet, particularly due to the advanced attention mechanisms in Transformer-based Swin-UNet and the efficient use of skip connections in both UNet and Transformer-based Swin-UNet, which contribute to superior predictive accuracy and model interpretability. We then applied XAI techniques to all four models, which not only enhances the clarity and trustworthiness of the models but also promotes focused improvements in wildfire prediction capabilities. The XAI analysis reveals that UNet and Transformer-based Swin-UNet are able to focus on critical features such as 'Previous Fire Mask', 'Drought', and 'Vegetation' more effectively than the other two models, while also maintaining balanced attention to the remaining features, leading to their superior performance. The insights from our thorough comparative analysis offer substantial implications for future model design and also provide guidance for model selection in different scenarios.

Language-Informed Hyperspectral Image Synthesis for Imbalanced-Small Sample Classification via Semi-Supervised Conditional Diffusion Model

Feb 28, 2025

Abstract:Data augmentation effectively addresses the imbalanced-small sample data (ISSD) problem in hyperspectral image classification (HSIC). While most methodologies extend features in the latent space, few leverage text-driven generation to create realistic and diverse samples. Recently, text-guided diffusion models have gained significant attention due to their ability to generate highly diverse and high-quality images based on text prompts in natural image synthesis. Motivated by this, this paper proposes Txt2HSI-LDM(VAE), a novel language-informed hyperspectral image synthesis method to address the ISSD problem in HSIC. The proposed approach uses a denoising diffusion model, which iteratively removes Gaussian noise to generate hyperspectral samples conditioned on textual descriptions. First, to address the high dimensionality of hyperspectral data, a universal variational autoencoder (VAE) is designed to map the data into a low-dimensional latent space, which provides stable features and reduces the inference complexity of the diffusion model. Second, a semi-supervised diffusion model is designed to take full advantage of unlabeled data. Random polygon spatial clipping (RPSC) and uncertainty estimation of latent feature (LF-UE) are used to simulate varying degrees of mixing. Third, the VAE decodes the HSI from the latent space generated by the diffusion model with the language conditions as input. In our experiments, we evaluate the effectiveness of the synthetic samples in terms of statistical characteristics and data distribution in 2D-PCA space. Additionally, visual-linguistic cross-attention is visualized at the pixel level to show that our proposed model can capture the spatial layout and geometry of the generated data. Experiments demonstrate that the performance of the proposed Txt2HSI-LDM(VAE) surpasses the classical backbone models, state-of-the-art CNNs, and semi-supervised methods.

Spatial-Spectral Diffusion Contrastive Representation Network for Hyperspectral Image Classification

Feb 27, 2025

Abstract:Although efficient extraction of discriminative spatial-spectral features is critical for hyperspectral image classification (HSIC), these features are difficult to obtain due to factors such as spatial-spectral heterogeneity and noise. This paper presents a Spatial-Spectral Diffusion Contrastive Representation Network (DiffCRN), based on the denoising diffusion probabilistic model (DDPM) combined with contrastive learning (CL) for HSIC, with the following characteristics. First, to improve spatial-spectral feature representation, instead of adopting the UNet-like structure which is widely used for DDPM, we design a novel staged architecture with a spatial self-attention denoising module (SSAD) and a spectral group self-attention denoising module (SGSAD) in DiffCRN with improved efficiency for spectral-spatial feature learning. Second, to improve unsupervised feature learning efficiency, we design a new DDPM model with logarithmic absolute error (LAE) loss and CL that improve the loss function effectiveness and increase the instance-level and inter-class discriminability. Third, to improve feature selection, we design a learnable approach based on pixel-level spectral angle mapping (SAM) for the selection of time steps in the proposed DDPM model in an adaptive and automatic manner. Last, to improve feature integration and classification, we design an Adaptive Weighted Addition Module (AWAM) and a Cross Time Step Spectral-Spatial Fusion Module (CTSSFM) to fuse time-step-wise features and perform classification. Experiments conducted on four widely used HSI datasets demonstrate the improved performance of the proposed DiffCRN over the classical backbone models, state-of-the-art GAN and transformer models, and other pretrained methods. The source code and pre-trained model will be made available publicly.
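For readers unfamiliar with spectral angle mapping, the quantity it builds on is the angle between two spectra treated as vectors. The sketch below shows only that standard angle; it does not reproduce the learnable time-step selection described in the abstract, and the function name `spectral_angle` is an illustrative choice.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra of equal length.

    0 means the spectra have the same shape (they differ only by a
    positive scale factor); larger angles mean less similar spectra.
    """
    if len(a) != len(b):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("spectral angle is undefined for an all-zero spectrum")
    # Clamp to [-1, 1] to guard against floating-point overshoot.
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cosine)


same_shape = spectral_angle([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
orthogonal = spectral_angle([1.0, 0.0], [0.0, 1.0])
```

Because the angle ignores overall brightness, two pixels of the same material under different illumination score as similar, which is why the measure is common in hyperspectral work.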

Digital Twin Buildings: 3D Modeling, GIS Integration, and Visual Descriptions Using Gaussian Splatting, ChatGPT/Deepseek, and Google Maps Platforms

Feb 09, 2025

Abstract:Urban digital twins are virtual replicas of cities that use multi-source data and data analytics to optimize urban planning, infrastructure management, and decision-making. Towards this, we propose a framework focused on the single-building scale. By connecting to cloud mapping platforms such as the Google Maps Platform APIs, by leveraging state-of-the-art multi-agent Large Language Model data analysis using ChatGPT(4o) and Deepseek-V3/R1, and by using our Gaussian Splatting-based mesh extraction pipeline, our Digital Twin Buildings framework can retrieve a building's 3D model and visual descriptions, and achieve cloud-based mapping integration with large language model-based data analytics, using only a building's address, postal code, or geographic coordinates.

Gaussian Building Mesh (GBM): Extract a Building's 3D Mesh with Google Earth and Gaussian Splatting

Jan 07, 2025

Abstract:Recently released open-source pre-trained foundational image segmentation and object detection models (SAM2+GroundingDINO) allow for geometrically consistent segmentation of objects of interest in multi-view 2D images. Users can use text-based or click-based prompts to segment objects of interest without requiring labeled training datasets. Gaussian Splatting allows for the learning of the 3D representation of a scene's geometry and radiance based on 2D images. Combining Google Earth Studio, SAM2+GroundingDINO, 2D Gaussian Splatting, and our improvements in mask refinement based on morphological operations and contour simplification, we created a pipeline to extract the 3D mesh of any building based on its name, address, or geographic coordinates.
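The abstract mentions contour simplification without naming a method. As an illustration only, the sketch below uses the Douglas-Peucker algorithm, a common choice for this step; treating it as the paper's method is an assumption, and the names `simplify` and `tol` are hypothetical.

```python
import math


def _point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:  # degenerate segment: a and b coincide
        return math.hypot(px - ax, py - ay)
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(points, tol):
    """Douglas-Peucker: drop points closer than tol to the simplified line."""
    if len(points) < 3:
        return list(points)
    dists = [
        _point_segment_distance(p, points[0], points[-1])
        for p in points[1:-1]
    ]
    idx = max(range(len(dists)), key=dists.__getitem__) + 1
    if dists[idx - 1] <= tol:
        return [points[0], points[-1]]
    left = simplify(points[: idx + 1], tol)
    right = simplify(points[idx:], tol)
    return left[:-1] + right  # avoid duplicating the split point


outline = [(0, 0), (1, 0.05), (2, 0), (3, 2), (4, 0)]
simplified = simplify(outline, tol=0.1)
print(simplified)
```

Here the small bump at (1, 0.05) is removed as mask noise while the sharp corner at (3, 2) is kept, which is the behaviour one wants when cleaning a building outline.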

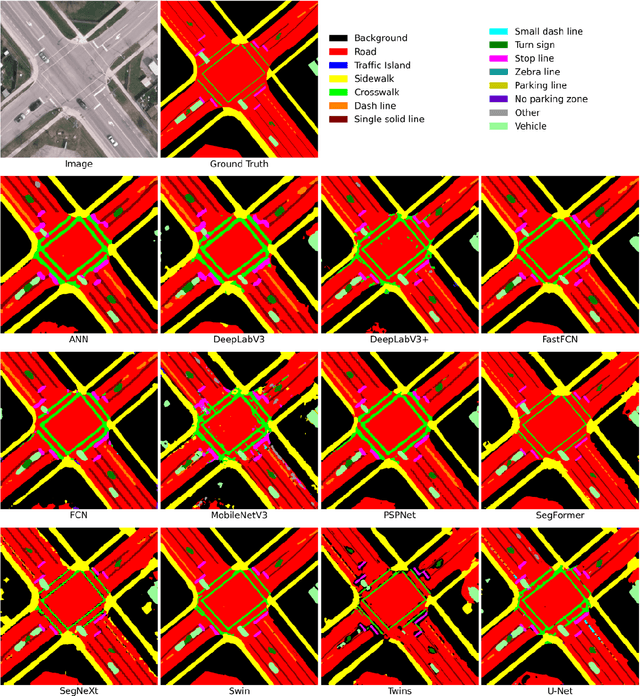

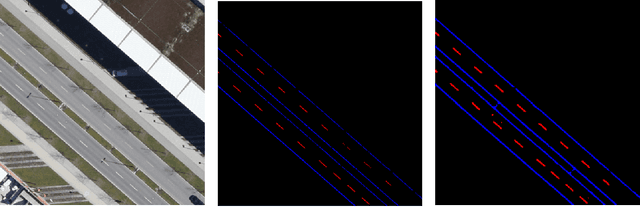

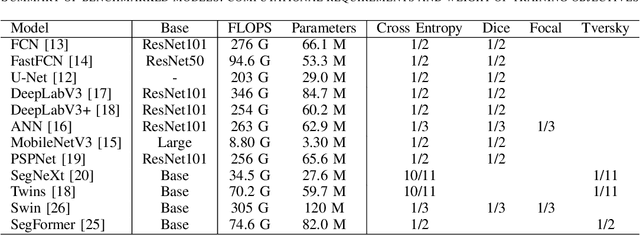

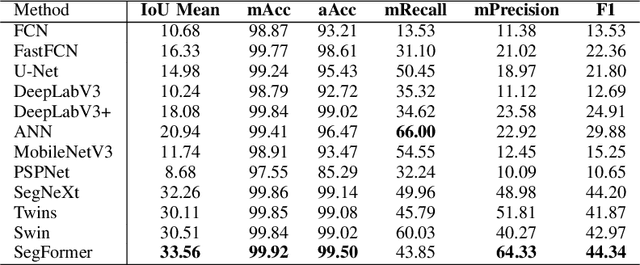

Advancements in Road Lane Mapping: Comparative Fine-Tuning Analysis of Deep Learning-based Semantic Segmentation Methods Using Aerial Imagery

Oct 08, 2024

Abstract:This research addresses the need for high-definition (HD) maps for autonomous vehicles (AVs), focusing on road lane information derived from aerial imagery. While Earth observation data offers valuable resources for map creation, specialized models for road lane extraction are still underdeveloped in remote sensing. In this study, we perform an extensive comparison of twelve foundational deep learning-based semantic segmentation models for road lane marking extraction from high-definition remote sensing images, assessing their performance under transfer learning with partially labeled datasets. These models were fine-tuned on the partially labeled Waterloo Urban Scene dataset, and pre-trained on the SkyScapes dataset, simulating a likely scenario of real-life model deployment under partial labeling. We observed and assessed the fine-tuning performance and overall performance. Models showed significant performance improvements after fine-tuning, with mean IoU scores ranging from 33.56% to 76.11%, and recall ranging from 66.0% to 98.96%. Transformer-based models outperformed convolutional neural networks, emphasizing the importance of model pre-training and fine-tuning in enhancing HD map development for AV navigation.
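The mean IoU figures quoted above average the per-class intersection over union. A minimal sketch of that metric on flattened label arrays follows; the `ignore_index` parameter for skipping unlabeled pixels is an assumption about how partial labels might be handled, not a detail taken from the paper.

```python
def mean_iou(pred, target, num_classes, ignore_index=None):
    """Mean intersection-over-union across classes present in pred or target.

    pred and target are flat sequences of integer class labels.
    Pixels whose target equals ignore_index are excluded entirely.
    """
    pairs = [(p, t) for p, t in zip(pred, target) if t != ignore_index]
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in pairs if p == c and t == c)
        union = sum(1 for p, t in pairs if p == c or t == c)
        if union:  # skip classes that are absent from both pred and target
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


pred = [0, 0, 1, 1]
target = [0, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)
# Class 0: 1 / 2, class 1: 2 / 3, so the mean is 7 / 12.
```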

3D Learnable Supertoken Transformer for LiDAR Point Cloud Scene Segmentation

May 23, 2024

Abstract:3D Transformers have achieved great success in point cloud understanding and representation. However, there is still considerable scope for further development in effective and efficient Transformers for large-scale LiDAR point cloud scene segmentation. This paper proposes a novel 3D Transformer framework, named 3D Learnable Supertoken Transformer (3DLST). The key contributions are summarized as follows. Firstly, we introduce the first Dynamic Supertoken Optimization (DSO) block for efficient token clustering and aggregating, where the learnable supertoken definition avoids the time-consuming pre-processing of traditional superpoint generation. Since the learnable supertokens can be dynamically optimized by multi-level deep features during network learning, they are tailored to semantic homogeneity-aware token clustering. Secondly, an efficient Cross-Attention-guided Upsampling (CAU) block is proposed for token reconstruction from optimized supertokens. Thirdly, the 3DLST is equipped with a novel W-net architecture instead of the common U-net design, which is more suitable for Transformer-based feature learning. The SOTA performance on three challenging LiDAR datasets (airborne MultiSpectral LiDAR (MS-LiDAR), 89.3% average F1 score; DALES, 80.2% mIoU; and Toronto-3D, 80.4% mIoU) demonstrates the superiority of 3DLST and its strong adaptability to various LiDAR point cloud data (airborne MS-LiDAR, aerial LiDAR, and vehicle-mounted LiDAR data). Furthermore, 3DLST also achieves satisfactory results in terms of algorithm efficiency, running up to 5x faster than previous best-performing methods.

Efficient Point Transformer with Dynamic Token Aggregating for Point Cloud Processing

May 23, 2024

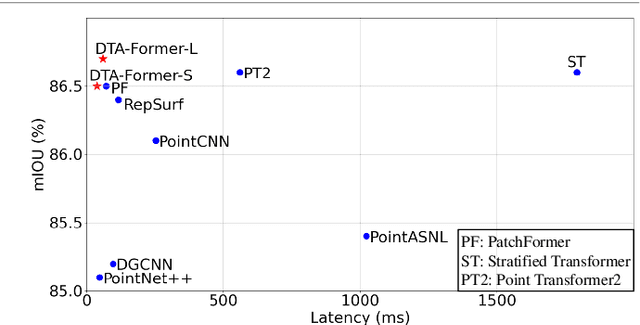

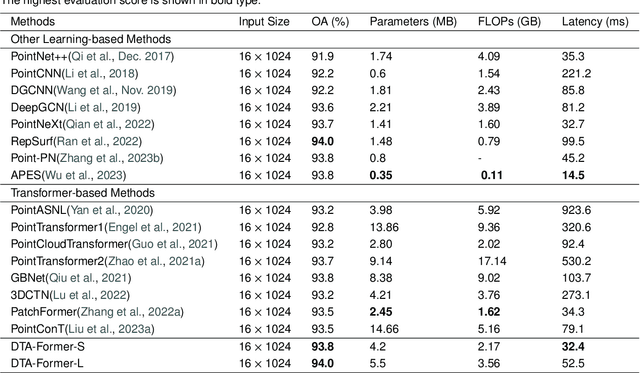

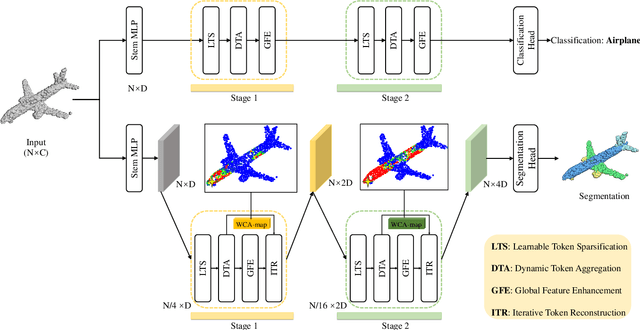

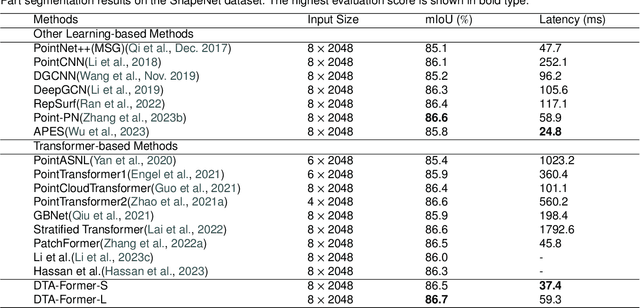

Abstract:Recently, point cloud processing and analysis have made great progress due to the development of 3D Transformers. However, existing 3D Transformer methods are usually computationally expensive and inefficient due to their huge and redundant attention maps. They also tend to be slow because they require time-consuming point cloud sampling and grouping processes. To address these issues, we propose an efficient point TransFormer with Dynamic Token Aggregating (DTA-Former) for point cloud representation and processing. Firstly, we propose an efficient Learnable Token Sparsification (LTS) block, which considers both local and global semantic information for the adaptive selection of key tokens. Secondly, to achieve feature aggregation for sparsified tokens, we present the first Dynamic Token Aggregating (DTA) block in the 3D Transformer paradigm, providing our model with strong aggregated features while preventing information loss. After that, a dual-attention Transformer-based Global Feature Enhancement (GFE) block is used to improve the representation capability of the model. Equipped with LTS, DTA, and GFE blocks, DTA-Former achieves excellent classification results via hierarchical feature learning. Lastly, a novel Iterative Token Reconstruction (ITR) block is introduced for dense prediction whereby the semantic features of tokens and their semantic relationships are gradually optimized during iterative reconstruction. Based on ITR, we propose a new W-net architecture, which is more suitable for Transformer-based feature learning than the common U-net design. Extensive experiments demonstrate the superiority of our method. It achieves SOTA performance while running up to 30× faster than prior point Transformers on ModelNet40, ShapeNet, and airborne MultiSpectral LiDAR (MS-LiDAR) datasets.

Photorealistic 3D Urban Scene Reconstruction and Point Cloud Extraction using Google Earth Imagery and Gaussian Splatting

May 17, 2024

Abstract:3D urban scene reconstruction and modelling is a crucial research area in remote sensing with numerous applications in academia, commerce, industry, and administration. Recent advancements in view synthesis models have facilitated photorealistic 3D reconstruction solely from 2D images. Leveraging Google Earth imagery, we construct a 3D Gaussian Splatting model of the Waterloo region centered on the University of Waterloo and achieve view-synthesis results far exceeding previous 3D view-synthesis results based on neural radiance fields, as we demonstrate in our benchmark. Additionally, we retrieved the 3D geometry of the scene using the 3D point cloud extracted from the 3D Gaussian Splatting model, which we benchmarked against our Multi-View-Stereo dense reconstruction of the scene, thereby reconstructing both the 3D geometry and photorealistic lighting of the large-scale urban scene through 3D Gaussian Splatting.
