Guohua Wu

Generalizable Collaborative Search-and-Capture in Cluttered Environments via Path-Guided MAPPO and Directional Frontier Allocation

Dec 10, 2025

Abstract:Collaborative pursuit-evasion in cluttered environments presents significant challenges due to sparse rewards and constrained Fields of View (FOV). Standard Multi-Agent Reinforcement Learning (MARL) often suffers from inefficient exploration and fails to scale to large scenarios. We propose PGF-MAPPO (Path-Guided Frontier MAPPO), a hierarchical framework bridging topological planning with reactive control. To resolve local minima and sparse rewards, we integrate an A*-based potential field for dense reward shaping. Furthermore, we introduce Directional Frontier Allocation, combining Farthest Point Sampling (FPS) with geometric angle suppression to enforce spatial dispersion and accelerate coverage. The architecture employs a parameter-shared decentralized critic, maintaining O(1) model complexity suitable for robotic swarms. Experiments demonstrate that PGF-MAPPO achieves superior capture efficiency against faster evaders. Policies trained on 10x10 maps exhibit robust zero-shot generalization to unseen 20x20 environments, significantly outperforming rule-based and learning-based baselines.
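The Farthest Point Sampling step named in the abstract can be illustrated with a minimal sketch. This is generic greedy FPS over 2-D points, not the authors' implementation: the function name `farthest_point_sampling`, the `frontiers` list, and the choice of starting index are all assumptions made for illustration, and the geometric angle suppression that the paper combines with FPS is not modeled here.

```python
import math

def farthest_point_sampling(points, k, start=0):
    """Greedy FPS: repeatedly pick the point farthest from all points chosen so far.

    Hypothetical helper for illustration; not taken from the PGF-MAPPO code.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    chosen = [start]
    # min_dist[i] is the distance from point i to its nearest chosen point.
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < k:
        # The next sample is the point whose nearest chosen neighbor is farthest away.
        nxt = max(range(len(points)), key=min_dist.__getitem__)
        chosen.append(nxt)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[nxt]))
    return chosen

# Assumed example: candidate frontier cells on a small grid.
frontiers = [(0, 0), (1, 0), (0, 1), (9, 9), (9, 0), (5, 5)]
picked = farthest_point_sampling(frontiers, 3)
print([frontiers[i] for i in picked])
```

Because each new sample maximizes its distance to the points already selected, the chosen frontiers spread out across the map, which is the dispersion property the abstract attributes to the allocation scheme.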

A Comprehensive Survey on Underwater Acoustic Target Positioning and Tracking: Progress, Challenges, and Perspectives

Jun 17, 2025

Abstract:Underwater target tracking technology plays a pivotal role in marine resource exploration, environmental monitoring, and national defense security. Given that acoustic waves represent an effective medium for long-distance transmission in aquatic environments, underwater acoustic target tracking has become a prominent research area of underwater communications and networking. Existing literature reviews often offer a narrow perspective or inadequately address the paradigm shifts driven by emerging technologies like deep learning and reinforcement learning. To address these gaps, this work presents a systematic survey of this field and introduces an innovative multidimensional taxonomy framework based on target scale, sensor perception modes, and sensor collaboration patterns. Within this framework, we comprehensively survey the literature (more than 180 publications) over the period 2016-2025, spanning from the theoretical foundations to diverse algorithmic approaches in underwater acoustic target tracking. Particularly, we emphasize the transformative potential and recent advancements of machine learning techniques, including deep learning and reinforcement learning, in enhancing the performance and adaptability of underwater tracking systems. Finally, this survey concludes by identifying key challenges in the field and proposing future avenues based on emerging technologies such as federated learning, blockchain, embodied intelligence, and large models.

MS-YOLO: A Multi-Scale Model for Accurate and Efficient Blood Cell Detection

Jun 04, 2025

Abstract:Complete blood cell detection holds significant value in clinical diagnostics. Conventional manual microscopy methods suffer from time inefficiency and diagnostic inaccuracies. Existing automated detection approaches remain constrained by high deployment costs and suboptimal accuracy. While deep learning has introduced powerful paradigms to this field, persistent challenges in detecting overlapping cells and multi-scale objects hinder practical deployment. This study proposes the multi-scale YOLO (MS-YOLO), a blood cell detection model based on the YOLOv11 framework, incorporating three key architectural innovations to enhance detection performance. Specifically, the multi-scale dilated residual module (MS-DRM) replaces the original C3K2 modules to improve multi-scale discriminability; the dynamic cross-path feature enhancement module (DCFEM) enables the fusion of hierarchical features from the backbone with aggregated features from the neck to enhance feature representations; and the light adaptive-weight downsampling module (LADS) improves feature downsampling through adaptive spatial weighting while reducing computational complexity. Experimental results on the CBC benchmark demonstrate that MS-YOLO achieves precise detection of overlapping cells and multi-scale objects, particularly small targets such as platelets, achieving an mAP@50 of 97.4% that outperforms existing models. Further validation on the supplementary WBCDD dataset confirms its robust generalization capability. Additionally, with a lightweight architecture and real-time inference efficiency, MS-YOLO meets clinical deployment requirements, providing reliable technical support for standardized blood pathology assessment.

REMS: a unified solution representation, problem modeling and metaheuristic algorithm design for general combinatorial optimization problems

May 21, 2025

Abstract:Combinatorial optimization problems (COPs) with discrete variables and finite search space are critical across numerous fields, and solving them with metaheuristic algorithms is popular. However, addressing a specific COP typically requires developing a tailored and handcrafted algorithm. Even minor adjustments, such as constraint changes, may necessitate algorithm redevelopment. Therefore, establishing a framework for formulating diverse COPs into a unified paradigm and designing reusable metaheuristic algorithms is valuable. A COP can typically be viewed as the process of assigning resources to perform specific tasks, subject to given constraints. Motivated by this, a resource-centered modeling and solving framework (REMS) is introduced for the first time. We first extract and define resources and tasks from a COP. Subsequently, given predetermined resources, the solution structure is unified as assigning tasks to resources, from which variables, objectives, and constraints can be derived and a problem model is constructed. To solve the modeled COPs, several fundamental operators are designed based on the unified solution structure, including the initial solution, neighborhood structure, destruction and repair, crossover, and ranking. These operators enable the development of various metaheuristic algorithms. Specifically, 4 single-point-based algorithms and 1 population-based algorithm are configured herein. Experiments on 10 COPs, covering routing, location, loading, assignment, scheduling, and graph coloring problems, show that REMS can model these COPs within the unified paradigm and effectively solve them with the designed metaheuristic algorithms. Furthermore, REMS is more competitive than GUROBI and SCIP in tackling large-scale instances and complex COPs, and outperforms OR-TOOLS on several challenging COPs.

A No-Reference Medical Image Quality Assessment Method Based on Automated Distortion Recognition Technology: Application to Preprocessing in MRI-guided Radiotherapy

Dec 10, 2024

Abstract:Objective: To develop a no-reference image quality assessment method using automated distortion recognition to boost MRI-guided radiotherapy precision. Methods: We analyzed 106,000 MR images from 10 patients with liver metastasis, captured with the Elekta Unity MR-LINAC. Our No-Reference Quality Assessment Model includes: 1) image preprocessing to enhance visibility of key diagnostic features; 2) feature extraction and directional analysis using MSCN coefficients across four directions to capture textural attributes and gradients, vital for identifying image features and potential distortions; 3) integrative Quality Index (QI) calculation, which integrates features via AGGD parameter estimation and K-means clustering. The QI, based on a weighted MAD computation of directional scores, provides a comprehensive image quality measure, robust against outliers. LOO-CV assessed model generalizability and performance. Tumor tracking algorithm performance was compared with and without preprocessing to verify tracking accuracy enhancements. Results: Preprocessing significantly improved image quality, with the QI showing substantial positive changes and surpassing other metrics. After normalization, the QI's average value was 79.6 times higher than CNR, indicating improved image definition and contrast. It also showed higher sensitivity in detail recognition with average values 6.5 times and 1.7 times higher than Tenengrad gradient and entropy. The tumor tracking algorithm confirmed significant tracking accuracy improvements with preprocessed images, validating preprocessing effectiveness. Conclusions: This study introduces a novel no-reference image quality evaluation method based on automated distortion recognition, offering a new quality control tool for MRIgRT tumor tracking. It enhances clinical application accuracy and facilitates medical image quality assessment standardization, with significant clinical and research value.
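As a rough illustration of the second step in the Methods, the sketch below computes mean-subtracted contrast-normalized (MSCN) coefficients and their pairwise products along four directions, as is commonly done in no-reference quality metrics. It is an assumption-laden sketch rather than the paper's pipeline: the function names `mscn` and `directional_products`, the uniform box window (a Gaussian window is the more common choice), and the constants `radius=1` and `c=1.0` are all chosen here for illustration, and the later AGGD fitting, K-means clustering, and weighted MAD steps are not shown.

```python
import math

def mscn(image, radius=1, c=1.0):
    """MSCN coefficients (I - mu) / (sigma + c) using a local box window.

    Assumption: a uniform window of half-width `radius` stands in for the
    Gaussian weighting typically used; `c` stabilizes flat regions.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [image[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            mu = sum(window) / len(window)
            var = sum((v - mu) ** 2 for v in window) / len(window)
            out[y][x] = (image[y][x] - mu) / (math.sqrt(var) + c)
    return out

def directional_products(m):
    """Products of each MSCN coefficient with its neighbor in four directions."""
    shifts = {
        "horizontal": (0, 1),
        "vertical": (1, 0),
        "main_diagonal": (1, 1),
        "anti_diagonal": (1, -1),
    }
    h, w = len(m), len(m[0])
    return {
        name: [m[y][x] * m[y + dy][x + dx]
               for y in range(h) for x in range(w)
               if 0 <= y + dy < h and 0 <= x + dx < w]
        for name, (dy, dx) in shifts.items()
    }

# Assumed toy input: a perfectly flat 4x4 image has zero local contrast.
flat = [[5] * 4 for _ in range(4)]
coeffs = mscn(flat)
products = directional_products(coeffs)
```

In a full pipeline, the distribution of each directional product list would then be summarized by fitted parameters (the abstract names AGGD estimation for this), which is where distortions show up as changes in shape.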

A Novel Automatic Real-time Motion Tracking Method for Magnetic Resonance Imaging-guided Radiotherapy: Leveraging the Enhanced Tracking-Learning-Detection Framework with Automatic Segmentation

Nov 12, 2024

Abstract:Objective: Ensuring the precision in motion tracking for MRI-guided Radiotherapy (MRIgRT) is crucial for the delivery of effective treatments. This study refined the motion tracking accuracy in MRIgRT through the innovation of an automatic real-time tracking method, leveraging an enhanced Tracking-Learning-Detection (ETLD) framework coupled with automatic segmentation. Methods: We developed a novel MRIgRT motion tracking method by integrating two primary methods: the ETLD framework and an improved Chan-Vese model (ICV), named ETLD+ICV. The TLD framework was upgraded to suit real-time cine MRI, including advanced image preprocessing, no-reference image quality assessment, an enhanced median-flow tracker, and a refined detector with dynamic search region adjustments. Additionally, ICV was combined for precise coverage of the target volume, which refined the segmented region frame by frame using tracking results, with key parameters optimized. Tested on 3.5D MRI scans from 10 patients with liver metastases, our method ensures precise tracking and accurate segmentation vital for MRIgRT. Results: An evaluation of 106,000 frames across 77 treatment fractions revealed sub-millimeter tracking errors of less than 0.8mm, with over 99% precision and 98% recall for all subjects, underscoring the robustness and efficacy of the ETLD. Moreover, the ETLD+ICV yielded a dice global score of more than 82% for all subjects, demonstrating the proposed method's extensibility and precise target volume coverage. Conclusions: This study successfully developed an automatic real-time motion tracking method for MRIgRT that markedly surpasses current methods. The novel method not only delivers exceptional precision in tracking and segmentation but also demonstrates enhanced adaptability to clinical demands, positioning it as an indispensable asset in the quest to augment the efficacy of radiotherapy treatments.

UAV 3-D path planning based on MOEA/D with adaptive areal weight adjustment

Aug 20, 2023

Abstract:Unmanned aerial vehicles (UAVs) are desirable platforms for time-efficient and cost-effective task execution. 3-D path planning is a key challenge for task decision-making. This paper proposes an improved multi-objective evolutionary algorithm based on decomposition (MOEA/D) with an adaptive areal weight adjustment (AAWA) strategy to make a tradeoff between the total flight path length and the terrain threat. AAWA is designed to improve the diversity of the solutions. More specifically, AAWA first removes a crowded individual and its weight vector from the current population and then adds a sparse individual from the external elite population to the current population. To enable the newly added individual to evolve towards the sparser area of the population in the objective space, its weight vector is constructed from the objective function values of its neighbors. The effectiveness of MOEA/D-AAWA is validated in twenty synthetic scenarios with different numbers of obstacles and four realistic scenarios, in comparison with three other classical methods.

Performance assessment and exhaustive listing of 500+ nature inspired metaheuristic algorithms

Dec 19, 2022

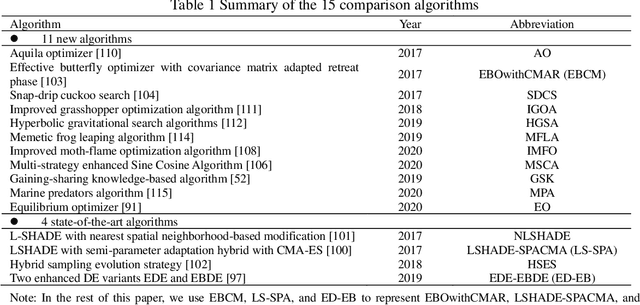

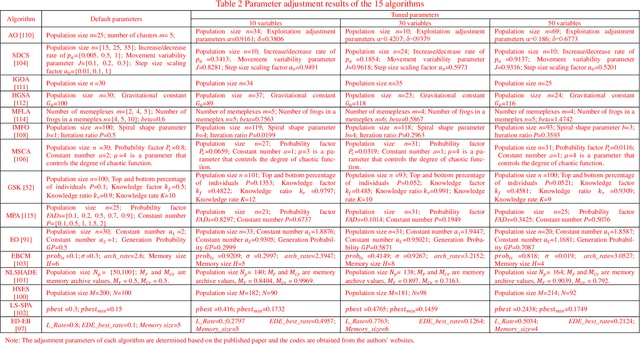

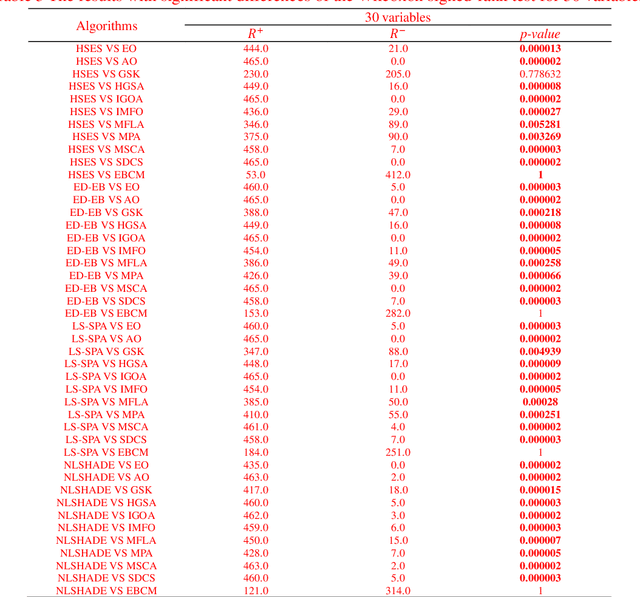

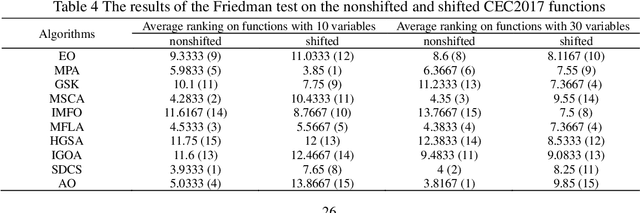

Abstract:Metaheuristics are widely used in various fields, and they have attracted much attention in the scientific and industrial communities. In recent years, the number of new metaheuristic names has been continuously growing. Generally, the inventors attribute the novelties of these new algorithms to inspirations from either biology, human behaviors, physics, or other phenomena. In addition, these new algorithms, compared against basic versions of other metaheuristics using classical benchmark problems without shift/rotation, show competitive performances. In this study, we exhaustively tabulate more than 500 metaheuristics. To comparatively evaluate the performance of the recent competitive variants and newly proposed metaheuristics, 11 newly proposed metaheuristics and 4 variants of established metaheuristics are comprehensively compared on the CEC2017 benchmark suite. In addition, whether these algorithms have a search bias to the center of the search space is investigated. The results show that the newly proposed EBCM (effective butterfly optimizer with covariance matrix adaptation) algorithm performs comparably to the 4 well performing variants of the established metaheuristics and possesses similar properties and behaviors, such as convergence, diversity, exploration and exploitation trade-offs, in many aspects. The performance of all 15 of the algorithms is likely to deteriorate due to certain transformations, while the 4 state-of-the-art metaheuristics are less affected by transformations such as the shifting of the global optimal point away from the center of the search space. It should be noted that, except EBCM, the other 10 new algorithms proposed mostly during 2019-2020 are inferior to the well performing 2017 variants of differential evolution and evolution strategy in terms of convergence speed and global search ability on CEC 2017 functions.

DL-DRL: A double-layer deep reinforcement learning approach for large-scale task scheduling of multi-UAV

Aug 04, 2022

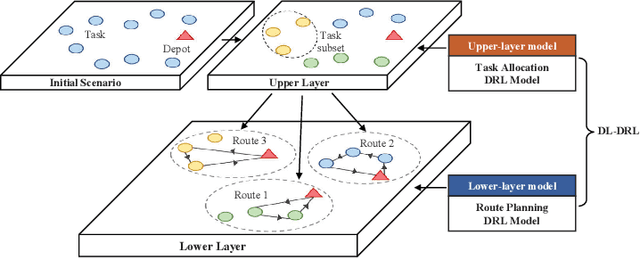

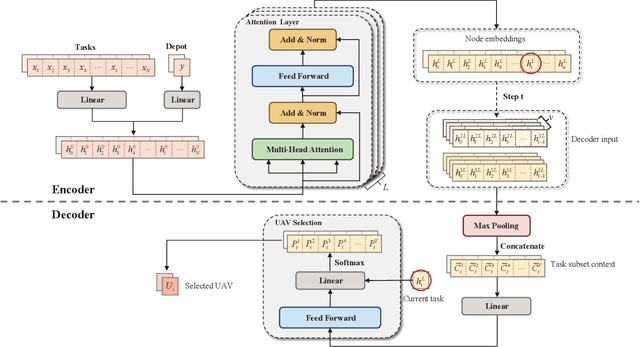

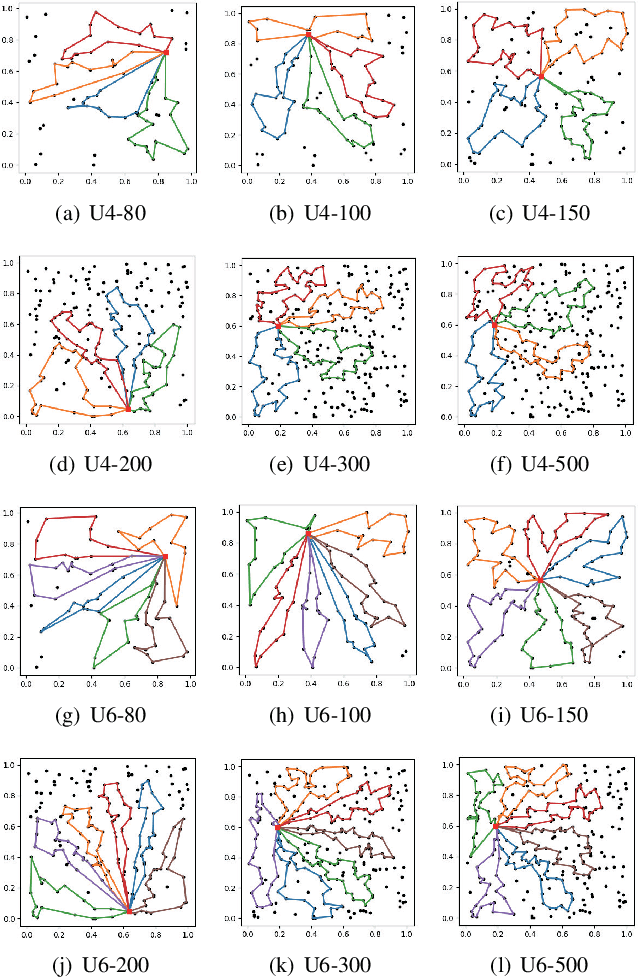

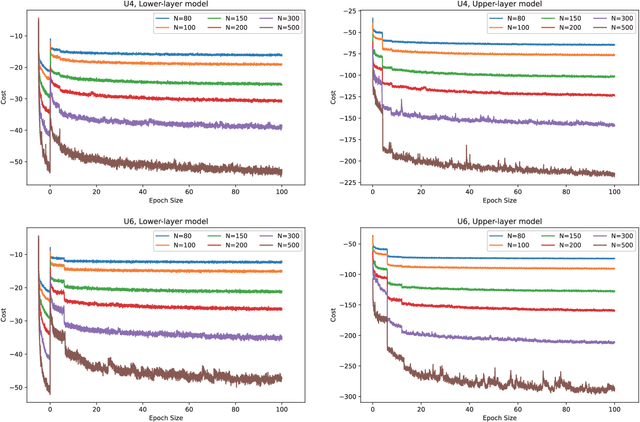

Abstract:This paper studies deep reinforcement learning (DRL) for the task scheduling problem of multiple unmanned aerial vehicles (UAVs). Current approaches generally use exact and heuristic algorithms to solve the problem, but the computation time rapidly increases as the task scale grows and heuristic rules need manual design. As a self-learning method, DRL can obtain a high-quality solution quickly without hand-engineered rules. However, the huge decision space makes the training of DRL models unstable in situations with large-scale tasks. In this work, to address the large-scale problem, we develop a divide and conquer-based framework (DCF) to decouple the original problem into a task allocation subproblem and a UAV route planning subproblem, which are solved in the upper and lower layers, respectively. Based on DCF, a double-layer deep reinforcement learning approach (DL-DRL) is proposed, where an upper-layer DRL model is designed to allocate tasks to appropriate UAVs and a lower-layer DRL model [i.e., the widely used attention model (AM)] is applied to generate viable UAV routes. Since the upper-layer model determines the input data distribution of the lower-layer model, and its reward is calculated via the lower-layer model during training, we develop an interactive training strategy (ITS), where the whole training process consists of pre-training, intensive training, and alternate training processes. Experimental results show that our DL-DRL outperforms mainstream learning-based and most traditional methods, and is competitive with the state-of-the-art heuristic method [i.e., OR-Tools], especially on large-scale problems. The great generalizability of DL-DRL is also verified by applying the model learned for one problem size to larger ones. Furthermore, an ablation study demonstrates that our ITS can reach a compromise between the model performance and training duration.

Learning by Active Forgetting for Neural Networks

Nov 21, 2021

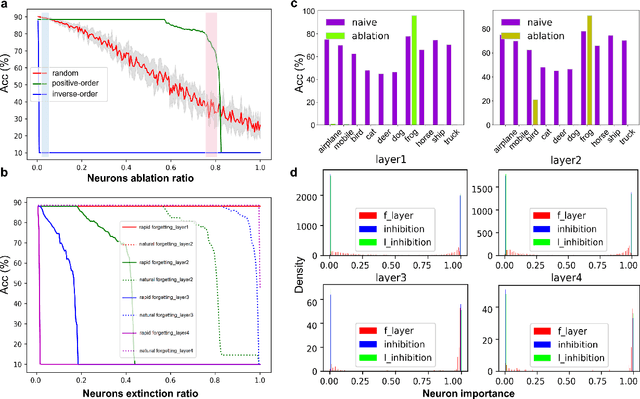

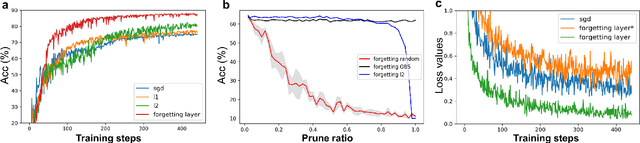

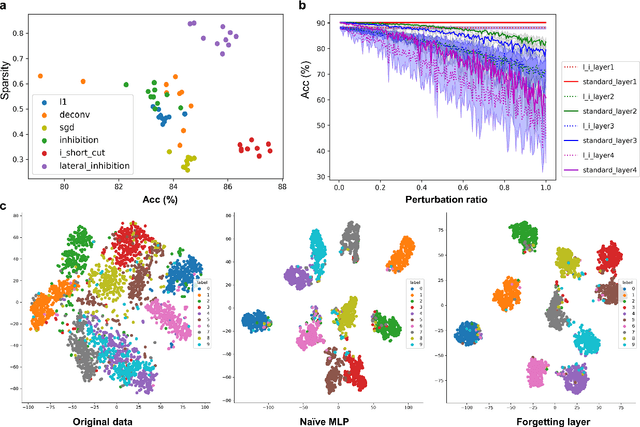

Abstract:Remembering and forgetting mechanisms are two sides of the same coin in a human learning-memory system. Inspired by human brain memory mechanisms, modern machine learning systems have been working to endow machines with lifelong learning capability through better remembering while treating forgetting as the antagonist to overcome. Nevertheless, this idea might only see half of the picture. Recently, a growing number of researchers have argued that a brain is born to forget, i.e., forgetting is a natural and active process for abstract, rich, and flexible representations. This paper presents a learning model based on an active forgetting mechanism with artificial neural networks. The active forgetting mechanism (AFM) is introduced to a neural network via a "plug-and-play" forgetting layer (P&PF), consisting of groups of inhibitory neurons with an Internal Regulation Strategy (IRS) to adjust their own extinction rate via a lateral inhibition mechanism and an External Regulation Strategy (ERS) to adjust the extinction rate of excitatory neurons via an inhibition mechanism. Experimental studies have shown that the P&PF offers surprising benefits: self-adaptive structure, strong generalization, long-term learning and memory, and robustness to data and parameter perturbation. This work sheds light on the importance of forgetting in the learning process and offers new perspectives to understand the underlying mechanisms of neural networks.
