DL-DRL: A double-layer deep reinforcement learning approach for large-scale task scheduling of multi-UAV

Aug 04, 2022

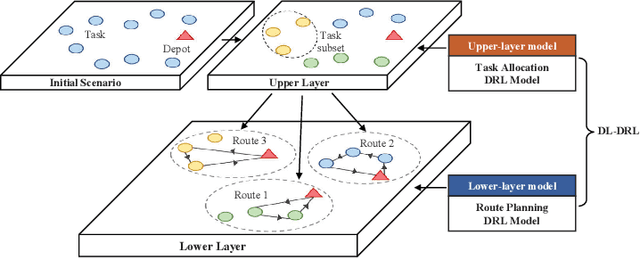

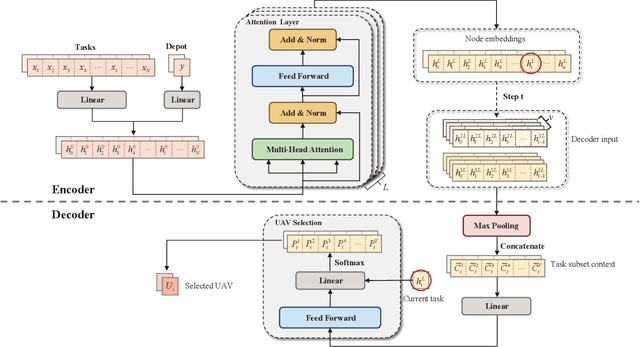

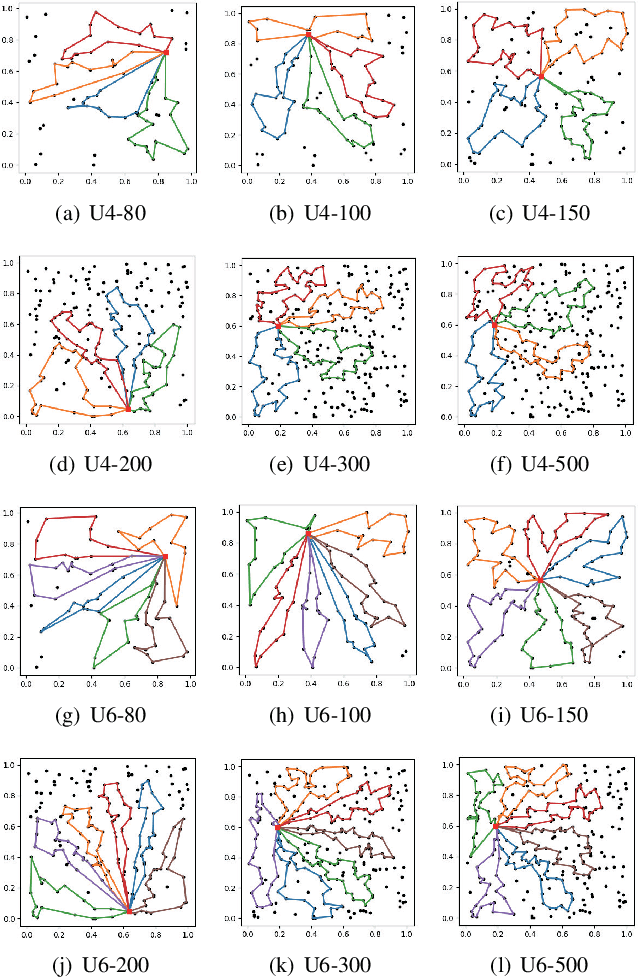

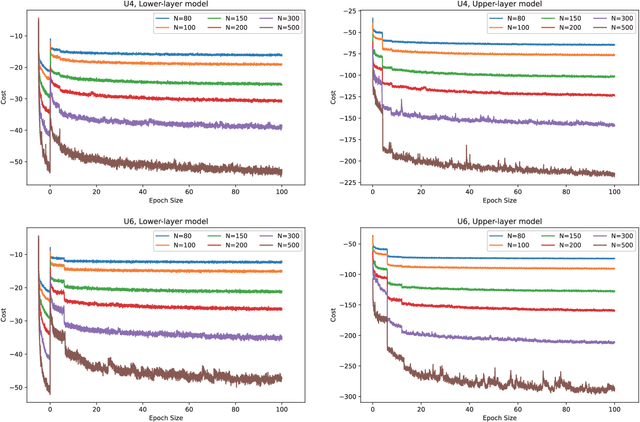

This paper studies deep reinforcement learning (DRL) for the task scheduling problem of multiple unmanned aerial vehicles (UAVs). Current approaches generally rely on exact and heuristic algorithms, but their computation time increases rapidly as the task scale grows, and heuristic rules must be designed manually. As a self-learning method, DRL can obtain a high-quality solution quickly without hand-engineered rules. However, the huge decision space makes the training of DRL models unstable on large-scale task instances. In this work, to address the large-scale problem, we develop a divide-and-conquer-based framework (DCF) that decouples the original problem into a task allocation subproblem and a UAV route planning subproblem, which are solved in the upper and lower layers, respectively. Based on DCF, a double-layer deep reinforcement learning approach (DL-DRL) is proposed, where an upper-layer DRL model is designed to allocate tasks to appropriate UAVs and a lower-layer DRL model (i.e., the widely used attention model (AM)) is applied to generate viable UAV routes. Since the upper-layer model determines the input data distribution of the lower-layer model, and its reward is calculated via the lower-layer model during training, we develop an interactive training strategy (ITS), in which the whole training process consists of pre-training, intensive training, and alternate training stages. Experimental results show that DL-DRL outperforms mainstream learning-based methods and most traditional methods, and is competitive with the state-of-the-art heuristic method (i.e., OR-Tools), especially on large-scale problems. The generalizability of DL-DRL is also verified by applying a model trained on one problem size to larger ones. Furthermore, an ablation study demonstrates that ITS strikes a compromise between model performance and training duration.
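The two-layer decomposition described in the abstract can be illustrated with a minimal Python sketch. It is not the paper's implementation: both DRL models are replaced by simple hand-written stand-ins (an angular-sector split for the upper layer and a nearest-neighbour tour for the lower layer), and all function names, the depot location, and the route-length measure are assumptions made only for illustration.

```python
import math
import random


def allocate_tasks(tasks, num_uavs, depot):
    """Upper-layer stand-in (NOT the paper's DRL model):
    assign each task to a UAV by its angular sector around the depot."""
    groups = [[] for _ in range(num_uavs)]
    for task in tasks:
        angle = math.atan2(task[1] - depot[1], task[0] - depot[0]) % (2 * math.pi)
        # Clamp guards against a floating-point angle equal to 2*pi.
        idx = min(int(angle / (2 * math.pi) * num_uavs), num_uavs - 1)
        groups[idx].append(task)
    return groups


def plan_route(tasks, depot):
    """Lower-layer stand-in (NOT the attention model):
    visit the nearest unvisited task first, starting from the depot."""
    remaining = list(tasks)
    route, current = [], depot
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route


def route_length(route, depot):
    """Hypothetical cost: length of the tour depot -> tasks -> depot."""
    stops = [depot, *route, depot]
    return sum(math.dist(a, b) for a, b in zip(stops, stops[1:]))


def schedule(tasks, num_uavs, depot=(0.5, 0.5)):
    """Divide and conquer: allocate tasks first, then route each UAV separately."""
    groups = allocate_tasks(tasks, num_uavs, depot)
    return [plan_route(group, depot) for group in groups]


if __name__ == "__main__":
    random.seed(0)
    demo_tasks = [(random.random(), random.random()) for _ in range(20)]
    for uav, route in enumerate(schedule(demo_tasks, num_uavs=4)):
        print(uav, len(route), round(route_length(route, (0.5, 0.5)), 3))
```

The point of the sketch is the structure: each lower-layer call only sees the tasks of one UAV, so its decision space stays small even when the total number of tasks is large, while the quality of the upper-layer allocation can only be judged through the routes the lower layer produces.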
