Zhong Yang

A Comprehensive Survey on Underwater Acoustic Target Positioning and Tracking: Progress, Challenges, and Perspectives

Jun 17, 2025

Abstract:Underwater target tracking technology plays a pivotal role in marine resource exploration, environmental monitoring, and national defense security. Given that acoustic waves represent an effective medium for long-distance transmission in aquatic environments, underwater acoustic target tracking has become a prominent research area of underwater communications and networking. Existing literature reviews often offer a narrow perspective or inadequately address the paradigm shifts driven by emerging technologies like deep learning and reinforcement learning. To address these gaps, this work presents a systematic survey of this field and introduces an innovative multidimensional taxonomy framework based on target scale, sensor perception modes, and sensor collaboration patterns. Within this framework, we comprehensively survey the literature (more than 180 publications) over the period 2016-2025, spanning from the theoretical foundations to diverse algorithmic approaches in underwater acoustic target tracking. Particularly, we emphasize the transformative potential and recent advancements of machine learning techniques, including deep learning and reinforcement learning, in enhancing the performance and adaptability of underwater tracking systems. Finally, this survey concludes by identifying key challenges in the field and proposing future avenues based on emerging technologies such as federated learning, blockchain, embodied intelligence, and large models.

A New Look at AI-Driven NOMA-F-RANs: Features Extraction, Cooperative Caching, and Cache-Aided Computing

Dec 02, 2021

Abstract:Non-orthogonal multiple access (NOMA) enabled fog radio access networks (NOMA-F-RANs) are regarded as a promising enabler to relieve network congestion, reduce delivery latency, and improve the quality of service (QoS) of fog user equipments (F-UEs). Nevertheless, the effectiveness of NOMA-F-RANs relies heavily on the charted feature information (preference distribution, positions, mobilities, etc.) of F-UEs as well as on effective caching, computing, and resource allocation strategies. In this article, we explore how artificial intelligence (AI) techniques can be used to address these challenges. Specifically, we first elaborate on the NOMA-F-RANs architecture, shedding light on the key modules, namely, cooperative caching and cache-aided mobile edge computing (MEC). Then, the potentially applicable AI-driven techniques for solving the principal issues of NOMA-F-RANs are reviewed. Through case studies, we show the efficacy of AI-enabled methods in terms of F-UEs' latent feature extraction and cooperative caching. Finally, future trends of AI-driven NOMA-F-RANs, including open research issues and challenges, are identified.

Artificial Intelligence Driven UAV-NOMA-MEC in Next Generation Wireless Networks

Jan 27, 2021

Abstract:Driven by the unprecedented high throughput and low latency requirements in next-generation wireless networks, this paper introduces an artificial intelligence (AI) enabled framework in which unmanned aerial vehicles (UAVs) use non-orthogonal multiple access (NOMA) and mobile edge computing (MEC) techniques to serve terrestrial mobile users (MUs). The proposed framework enables the terrestrial MUs to offload their computational tasks simultaneously, intelligently, and flexibly, thus enhancing their connectivity as well as reducing their transmission latency and their energy consumption. To this end, the fundamentals of this framework are first introduced. Then, a number of communication and AI techniques are proposed to improve the quality of experience of terrestrial MUs. In particular, federated learning and reinforcement learning are introduced for intelligent task offloading and computing resource allocation. For each learning technique, motivations, challenges, and representative results are introduced. Finally, several key technical challenges and open research issues of the proposed framework are summarized.

Deep Learning for Latent Events Forecasting in Twitter Aided Caching Networks

Jan 04, 2021

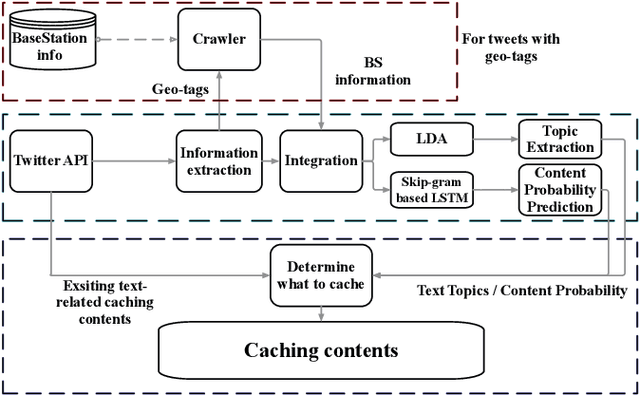

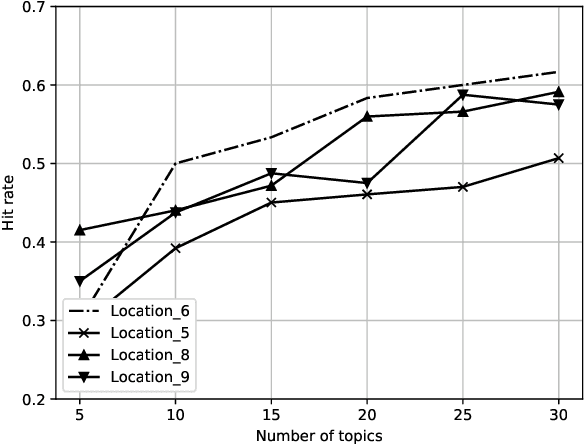

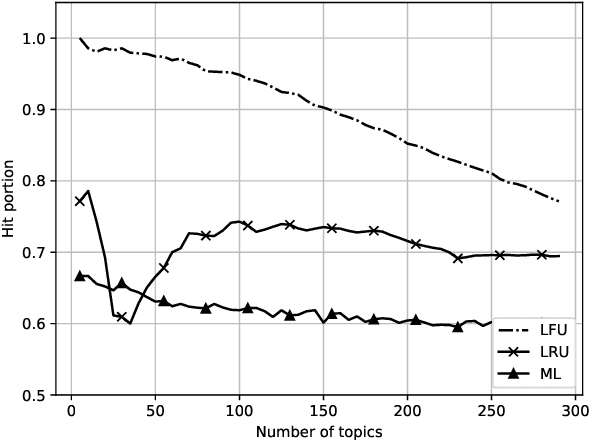

Abstract:A novel Twitter context aided content caching (TAC) framework is proposed for enhancing the caching efficiency by taking advantage of the legibility and massive volume of Twitter data. To promote the caching efficiency, three machine learning models are proposed to predict latent events and event popularity, utilizing collected Twitter data with geo-tags and geographic information of the adjacent base stations (BSs). Firstly, we propose a latent Dirichlet allocation (LDA) model for latent events forecasting, taking advantage of the superiority of the LDA model in natural language processing (NLP). Then, we conceive a long short-term memory (LSTM) with skip-gram embedding approach and an LSTM with continuous skip-gram-Geo-aware embedding approach for event popularity forecasting. Lastly, we associate the predicted latent events and the popularity of the events with the caching strategy. Extensive practical experiments demonstrate that: (1) The proposed TAC framework outperforms the conventional caching framework and is capable of being employed in practical applications thanks to its ability to associate with public interests. (2) The proposed LDA approach retains its superiority for NLP in Twitter data. (3) The perplexity of the proposed skip-gram-based LSTM is lower compared with the conventional LDA approach. (4) Evaluation of the model demonstrates that the hit rates of tweets of the model vary from 50% to 65% and the hit rate of the caching contents is up to approximately 75% with smaller caching space compared to conventional algorithms.
