Yaru Fu

Multi-Waveguide Pinching Antenna Placement Optimization for Rate Maximization

Dec 21, 2025

Abstract:Pinching antenna systems (PASS) have emerged as a technology that enables the large-scale movement of antenna elements, offering significant potential for performance gains in next-generation wireless networks. This paper investigates the problem of maximizing the average per-user data rate by optimizing the antenna placement of a multi-waveguide PASS, subject to a stringent physical minimum spacing constraint. To address this complex challenge, which involves a coupled fractional objective and a non-convex constraint, we employ the fractional programming (FP) framework to transform the non-convex rate maximization problem into a more tractable one, and devise a projected gradient ascent (PGA)-based algorithm to iteratively solve the transformed problem. Simulation results demonstrate that our proposed scheme significantly outperforms various geometric placement baselines, achieving superior per-user data rates by actively mitigating multi-user interference.
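As a rough illustration of projected gradient ascent under a minimum spacing constraint, the sketch below is a toy and not the paper's algorithm. It assumes the antennas on one waveguide keep a fixed left-to-right order, ignores the waveguide end points, and replaces the FP-transformed rate objective with a hypothetical concave stand-in (squared distance to preferred positions). The names `project_min_spacing`, `projected_gradient_ascent`, and `targets` are illustrative only. Under these assumptions the projection is exact: shifting position `i` by `i * d` turns the spacing constraint into a monotonicity constraint, which pool-adjacent-violators solves.

```python
def project_min_spacing(x, d):
    """Euclidean projection of ordered positions x onto {x[i+1] - x[i] >= d}."""
    # Shifting by i*d turns the spacing constraint into "y is nondecreasing".
    y = [xi - i * d for i, xi in enumerate(x)]
    blocks = []  # each block is [sum, count]; its mean is the pooled value
    for v in y:
        blocks.append([v, 1])
        # Pool adjacent violators until the block means are nondecreasing.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            s, n = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += n
    pooled = [s / n for s, n in blocks for _ in range(n)]
    # Undo the shift to recover positions that respect the spacing d.
    return [yi + i * d for i, yi in enumerate(pooled)]


def projected_gradient_ascent(grad, x0, d, step=0.5, iters=100):
    """Ascent step along grad, then projection back onto the feasible set."""
    x = project_min_spacing(x0, d)
    for _ in range(iters):
        g = grad(x)
        x = project_min_spacing([xi + step * gi for xi, gi in zip(x, g)], d)
    return x


# Hypothetical stand-in objective: -0.5 * sum((x - t)^2), gradient t - x.
targets = [1.0, 1.2, 3.0]


def toy_grad(x):
    return [t - xi for t, xi in zip(targets, x)]


placement = projected_gradient_ascent(toy_grad, [0.0, 0.0, 0.0], d=0.5)
print(placement)
```

The first two targets are closer than the spacing `d = 0.5`, so the projection pushes them apart symmetrically while the third antenna stays at its preferred position.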

Content-Aware RSMA-Enabled Pinching-Antenna Systems for Latency Optimization in 6G Networks

Dec 19, 2025

Abstract:The Pinching Antenna System (PAS) has emerged as a promising technology to dynamically reconfigure wireless propagation environments in 6G networks. By activating radiating elements at arbitrary positions along a dielectric waveguide, PAS can establish strong line-of-sight (LoS) links with users, significantly enhancing channel gain and deployment flexibility, particularly in high-frequency bands susceptible to severe path loss. To further improve multi-user performance, this paper introduces a novel content-aware transmission framework that integrates PAS with rate-splitting multiple access (RSMA). Unlike conventional RSMA, the proposed RSMA scheme enables users requesting the same content to share a unified private stream, thereby mitigating inter-user interference and reducing power fragmentation. We formulate a joint optimization problem aimed at minimizing the average system latency by dynamically adapting both antenna positioning and RSMA parameters according to channel conditions and user requests. A Content-Aware RSMA and Pinching-antenna Joint Optimization (CARP-JO) algorithm is developed, which decomposes the non-convex problem into tractable subproblems solved via bisection search, convex programming, and golden-section search. Simulation results demonstrate that the proposed CARP-JO scheme consistently outperforms Traditional RSMA, NOMA, and Fixed-antenna systems across diverse network scenarios in terms of latency, underscoring the effectiveness of co-designing physical-layer reconfigurability with intelligent communication strategies.
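Golden-section search, one of the subproblem solvers named above, can be sketched generically as follows. This is a textbook version for a unimodal function on an interval. The abstract does not say which variable CARP-JO searches over, so the latency curve `toy_latency` is a hypothetical placeholder.

```python
import math


def golden_section_min(f, lo, hi, tol=1e-6):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:
            # The minimum lies in [a, d]; reuse c as the new right probe.
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:
            # The minimum lies in [c, b]; reuse d as the new left probe.
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2


# Hypothetical unimodal latency curve with its minimum at position 2.0.
def toy_latency(pos):
    return (pos - 2.0) ** 2 + 1.0


best_pos = golden_section_min(toy_latency, 0.0, 5.0)
print(best_pos)
```

Each iteration reuses one of the two previous function evaluations, so only one new evaluation is needed per step.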

Enhancing Mobile Crowdsensing Efficiency: A Coverage-aware Resource Allocation Approach

Mar 27, 2025

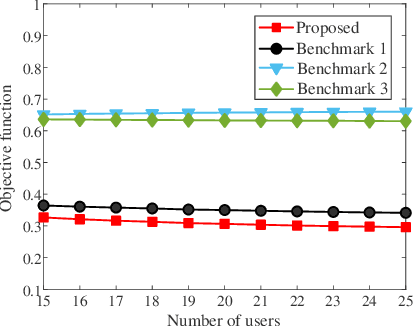

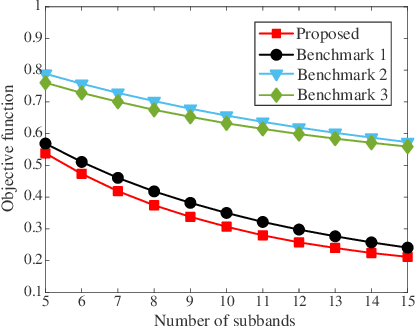

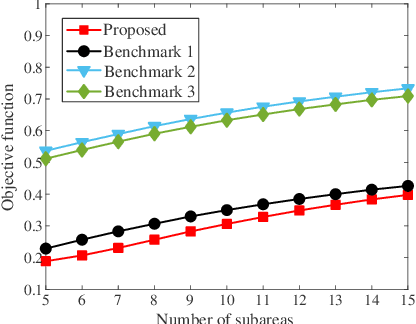

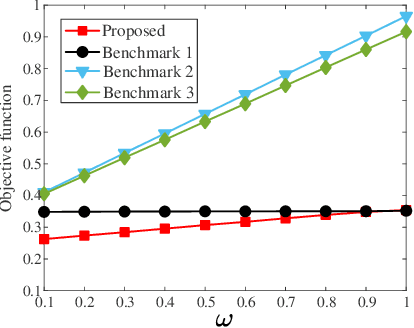

Abstract:In this study, we investigate the resource management challenges in next-generation mobile crowdsensing networks with the goal of minimizing task completion latency while ensuring coverage performance, an essential metric for comprehensive data collection across the monitored area that has been commonly overlooked in existing studies. To this end, we formulate a weighted latency and coverage gap minimization problem via jointly optimizing user selection, subchannel allocation, and sensing task allocation. The formulated minimization problem is a non-convex mixed-integer programming problem. To facilitate the analysis, we decompose the original optimization problem into two subproblems. The first optimizes sensing task and subband allocation under fixed sensing user selection and, after problem reformulation, is solved optimally by the Hungarian algorithm. Building upon this solution, we introduce a time-efficient two-sided swapping method to refine the scheduled user set and enhance system performance. Extensive numerical results demonstrate the effectiveness of our proposed approach compared to various benchmark strategies.
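To make the Hungarian step concrete, here is a compact pure-Python sketch of the classic O(n^3) potentials-based assignment algorithm. The cost matrix is a made-up example (rows as selected users, columns as subbands, entries as latencies). The paper's actual reformulated cost is not given in the abstract, so `toy_cost` and the function name `hungarian` are assumptions.

```python
def hungarian(cost):
    """Min-cost assignment of each row to a distinct column (rows <= columns)."""
    n, m = len(cost), len(cost[0])
    inf = float("inf")
    u = [0.0] * (n + 1)  # row potentials
    v = [0.0] * (m + 1)  # column potentials
    p = [0] * (m + 1)    # p[j] = row matched to column j (1-based, 0 = free)
    way = [0] * (m + 1)  # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            # Shift potentials so that one more edge becomes tight.
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        # Flip the matching along the augmenting path found above.
        while j0 != 0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j] != 0:
            assignment[p[j] - 1] = j - 1
    total = sum(cost[i][assignment[i]] for i in range(n))
    return assignment, total


# Hypothetical latency of user i on subband j.
toy_cost = [[4, 1, 3], [2, 0, 5], [3, 2, 2]]
assignment, total = hungarian(toy_cost)
print(assignment, total)
```

In practice a library routine such as SciPy's `linear_sum_assignment` solves the same assignment problem; the hand-written version is shown only to keep the example dependency-free.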

Energy-Efficient Federated Learning and Migration in Digital Twin Edge Networks

Mar 20, 2025

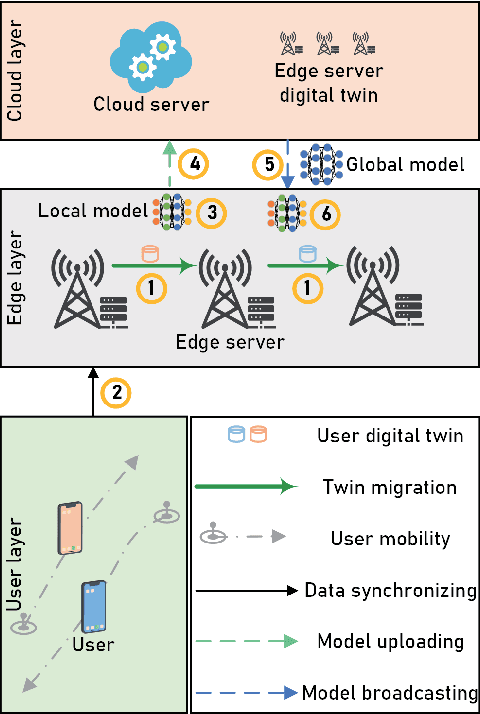

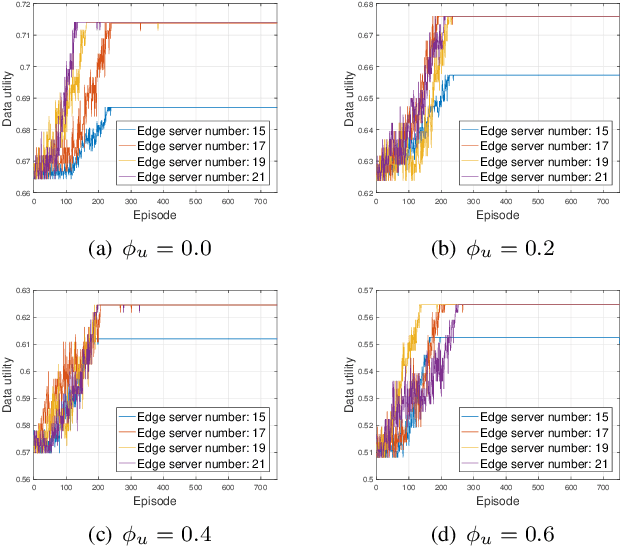

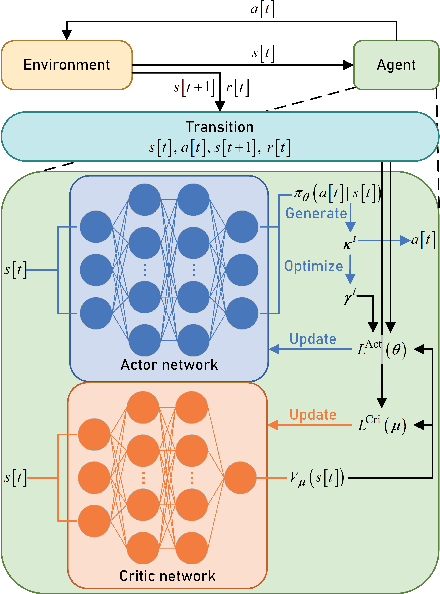

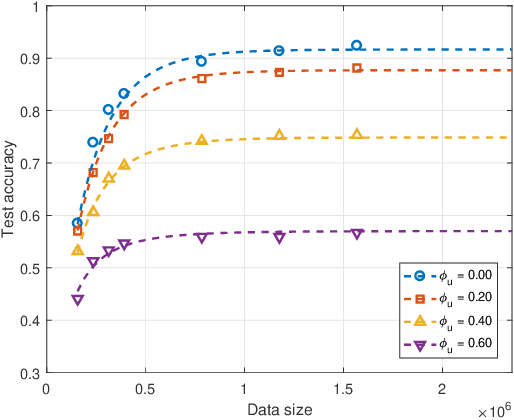

Abstract:The digital twin edge network (DITEN) is a significant paradigm in the sixth-generation wireless system (6G) that aims to organize well-developed infrastructures to meet the requirements of evolving application scenarios. However, the impact of the interaction between the long-term DITEN maintenance and detailed digital twin tasks, which often entail privacy considerations, is commonly overlooked in current research. This paper addresses this issue by introducing a problem of digital twin association and historical data allocation for a federated learning (FL) task within DITEN. To achieve this goal, we start by introducing a closed-form function to predict the training accuracy of the FL task, referring to it as the data utility. Subsequently, we carry out comprehensive convergence analyses on the proposed FL methodology. Our objective is to jointly optimize the data utility of the digital twin-empowered FL task and the energy costs incurred by the long-term DITEN maintenance, encompassing FL model training, data synchronization, and twin migration. To tackle the aforementioned challenge, we present an optimization-driven learning algorithm that effectively identifies optimized solutions for the formulated problem. Numerical results demonstrate that our proposed algorithm outperforms various baseline approaches.

Joint System Latency and Data Freshness Optimization for Cache-enabled Mobile Crowdsensing Networks

Jan 24, 2025

Abstract:Mobile crowdsensing (MCS) networks enable large-scale data collection by leveraging the ubiquity of mobile devices. However, frequent sensing and data transmission can lead to significant resource consumption. To mitigate this issue, edge caching has been proposed as a solution for storing recently collected data. Nonetheless, this approach may compromise data freshness. In this paper, we investigate the trade-off between re-using cached task results and re-sensing tasks in cache-enabled MCS networks, aiming to minimize system latency while maintaining information freshness. To this end, we formulate a weighted delay and age of information (AoI) minimization problem, jointly optimizing sensing decisions, user selection, channel selection, task allocation, and caching strategies. The problem is an intractable mixed-integer non-convex programming problem. Therefore, we decompose the long-term problem into sequential one-shot sub-problems and design a framework that optimizes the system latency, task sensing decision, and caching strategy subproblems. When a task is re-sensed, the one-shot problem simplifies to the system latency minimization problem, which can be solved optimally. The task sensing decision is then made by comparing the system latency and AoI. Additionally, a Bayesian update strategy is developed to manage the cached task results. Building upon this framework, we propose a lightweight and time-efficient algorithm that makes real-time decisions for the long-term optimization problem. Extensive simulation results validate the effectiveness of our approach.

When Digital Twin Meets 6G: Concepts, Obstacles, and Research Prospects

Sep 03, 2024

Abstract:The convergence of digital twin technology and the emerging 6G network presents both challenges and numerous research opportunities. This article explores the potential synergies between digital twin and 6G, highlighting the key challenges and proposing fundamental principles for their integration. We discuss the unique requirements and capabilities of digital twin in the context of 6G networks, such as sustainable deployment, real-time synchronization, seamless migration, predictive analytics, and closed-loop control. Furthermore, we identify research opportunities for leveraging digital twin and artificial intelligence to enhance various aspects of 6G, including network optimization, resource allocation, security, and intelligent service provisioning. This article aims to stimulate further research and innovation at the intersection of digital twin and 6G, paving the way for transformative applications and services in the future.

Two-Timescale Synchronization and Migration for Digital Twin Networks: A Multi-Agent Deep Reinforcement Learning Approach

Sep 02, 2024

Abstract:Digital twins (DTs) have emerged as a promising enabler for representing the real-time states of physical worlds and realizing self-sustaining systems. In practice, DTs of physical devices, such as mobile users (MUs), are commonly deployed in multi-access edge computing (MEC) networks for the sake of reducing latency. To ensure the accuracy and fidelity of DTs, it is essential for MUs to regularly synchronize their status with their DTs. However, MU mobility introduces significant challenges to DT synchronization. Firstly, MU mobility triggers DT migration, which could cause synchronization failures. Secondly, MUs require frequent synchronization with their DTs to ensure DT fidelity. Nonetheless, DT migration among MEC servers, caused by MU mobility, may occur infrequently. Accordingly, we propose a two-timescale DT synchronization and migration framework with reliability consideration by establishing a non-convex stochastic problem to minimize the long-term average energy consumption of MUs. We use Lyapunov theory to convert the reliability constraints and reformulate the new problem as a partially observable Markov decision process (POMDP). Furthermore, we develop a heterogeneous agent proximal policy optimization with Beta distribution (Beta-HAPPO) method to solve it. Numerical results show that our proposed Beta-HAPPO method achieves significant improvements in energy savings when compared with other benchmarks.

Secure Semantic Communications: From Perspective of Physical Layer Security

Aug 04, 2024

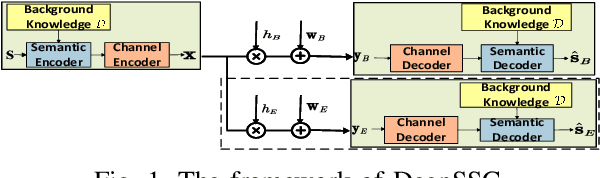

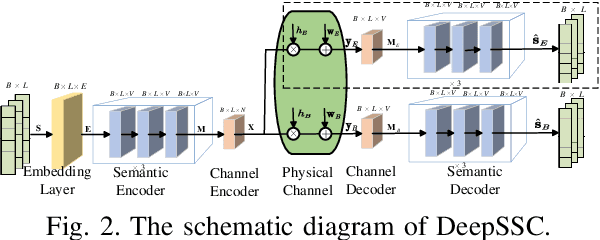

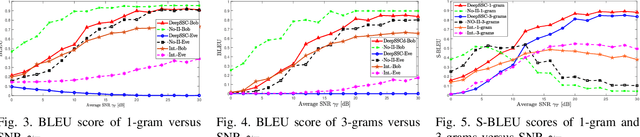

Abstract:Semantic communications have been envisioned as a potential technique that goes beyond the Shannon paradigm. Unlike modern communications that provide bit-level security, eavesdropping on semantic communications poses a significant risk of exposing the intentions of legitimate users. To address this challenge, a novel deep neural network (DNN) enabled secure semantic communication (DeepSSC) system is developed by capitalizing on physical layer security. To balance the tradeoff between security and reliability, a two-phase training method for DNNs is devised. Particularly, Phase I aims at semantic recovery for the legitimate user, while Phase II attempts to minimize the leakage of semantic information to eavesdroppers. The loss functions of DeepSSC in Phases I and II are respectively designed according to Shannon capacity and secure channel capacity, which are approximated with variational inference. Moreover, we define the metric of secure bilingual evaluation understudy (S-BLEU) to assess the security of semantic communications. Finally, simulation results demonstrate that DeepSSC achieves a significant boost to semantic security, particularly in the high signal-to-noise ratio regime, despite a minor degradation of reliability.

Spectral-Efficiency and Energy-Efficiency of Variable-Length XP-HARQ

Oct 17, 2023

Abstract:A variable-length cross-packet hybrid automatic repeat request (VL-XP-HARQ) is proposed to boost the spectral efficiency (SE) and the energy efficiency (EE) of communications. The SE is first derived in terms of the outage probabilities, with which the SE is proved to be upper bounded by the ergodic capacity (EC). Moreover, to facilitate the maximization of the SE, the asymptotic outage probability is obtained at high signal-to-noise ratio (SNR), with which the SE is maximized by properly choosing the number of new information bits while guaranteeing the outage requirement. By applying Dinkelbach's transform, the fractional objective function is transformed into a subtraction form, which can be decomposed into multiple sub-problems through alternating optimization. By noticing that the asymptotic outage probability is a convex function, each sub-problem can be easily relaxed to a convex problem by adopting successive convex approximation (SCA). Besides, the EE of VL-XP-HARQ is also investigated. An upper bound of the EE is found and proved to be attainable. Furthermore, to maximize the EE via power allocation while keeping the outage within a given constraint, the methods developed for maximizing the SE are invoked to solve the similar fractional problem. Finally, numerical results are presented for verification.
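Dinkelbach's transform replaces the maximization of a ratio f(x)/g(x) (with g > 0) by repeated maximization of the subtraction form f(x) - λ g(x), updating λ to the current ratio until the maximum of the subtraction form reaches zero. The sketch below shows only that outer loop on a made-up energy-efficiency-style ratio. The inner problem is solved by brute force over a grid rather than by the alternating optimization and SCA used in the paper, and `toy_rate`, `toy_power`, and the grid are hypothetical.

```python
import math


def dinkelbach(f, g, candidates, tol=1e-12, max_iter=100):
    """Maximize f(x) / g(x) over a finite candidate set, assuming g(x) > 0."""
    x = candidates[0]
    lam = f(x) / g(x)
    for _ in range(max_iter):
        # Inner problem in subtraction form (brute force here).
        x = max(candidates, key=lambda c: f(c) - lam * g(c))
        if f(x) - lam * g(x) <= tol:
            break  # lam already equals the best achievable ratio
        lam = f(x) / g(x)
    return x, f(x) / g(x)


# Hypothetical ratio: rate log2(1 + p) over total power p + 1 (circuit power).
def toy_rate(p):
    return math.log2(1.0 + p)


def toy_power(p):
    return p + 1.0


grid = [0.01 * k for k in range(1, 1001)]  # transmit powers in (0, 10]
best_p, best_ratio = dinkelbach(toy_rate, toy_power, grid)
print(best_p, best_ratio)
```

Because λ is nondecreasing across iterations and the candidate set is finite, the loop stops after a handful of updates.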

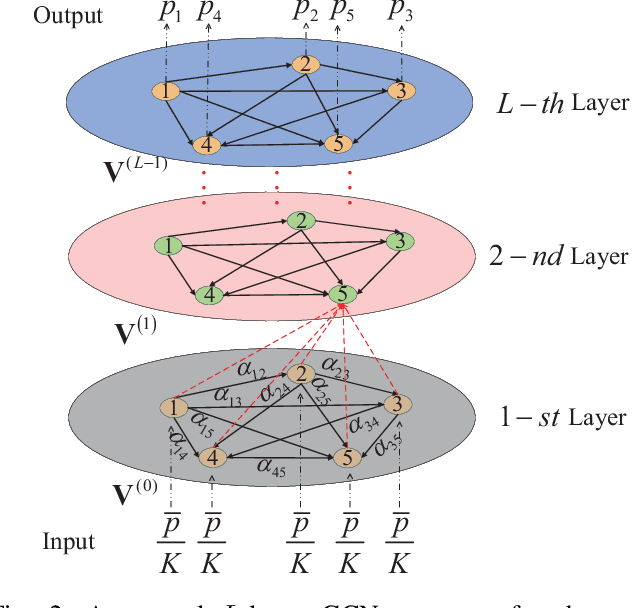

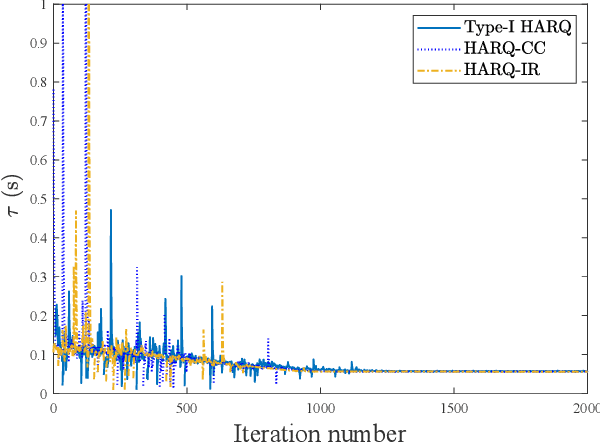

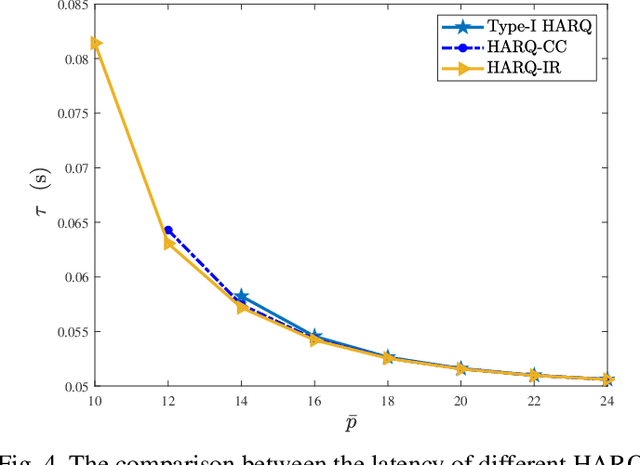

Graph Convolutional Network Enabled Power-Constrained HARQ Strategy for URLLC

Aug 04, 2023

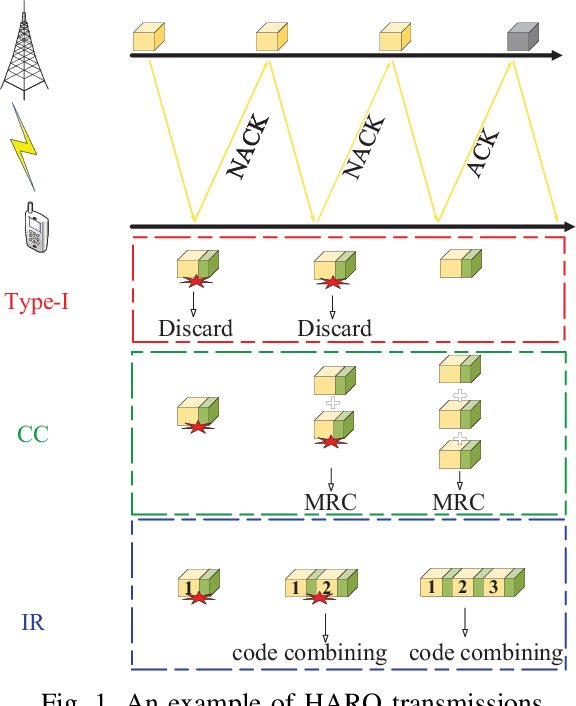

Abstract:In this paper, a power-constrained hybrid automatic repeat request (HARQ) transmission strategy is developed to support ultra-reliable low-latency communications (URLLC). In particular, we aim to minimize the delivery latency of HARQ schemes over time-correlated fading channels while ensuring high reliability and limited power consumption. To ease the optimization, simple asymptotic outage expressions of HARQ schemes are adopted. Furthermore, given the non-convexity of the latency minimization problem and the intricate connection between different HARQ rounds, a graph convolutional network (GCN) is invoked to find the optimal power solution owing to its strong ability to handle graph data. The primal-dual learning method is then leveraged to train the GCN weights. Numerical results are presented for verification, together with comparisons among three HARQ schemes in terms of latency and reliability: Type-I HARQ, HARQ with chase combining (HARQ-CC), and HARQ with incremental redundancy (HARQ-IR). To recapitulate, it is revealed that HARQ-IR offers the lowest latency while guaranteeing the demanded reliability target under a stringent power constraint, albeit at the price of high coding complexity.
