Farouk Dako

Towards Trustworthy Breast Tumor Segmentation in Ultrasound using Monte Carlo Dropout and Deep Ensembles for Epistemic Uncertainty Estimation

Aug 25, 2025

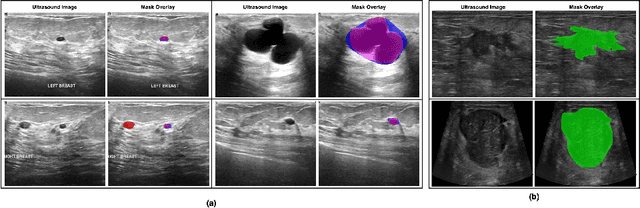

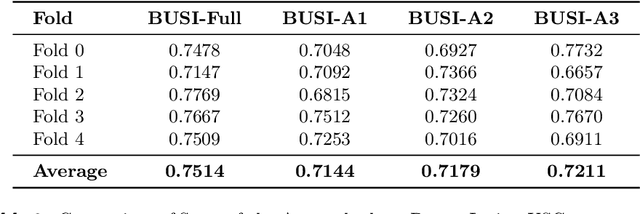

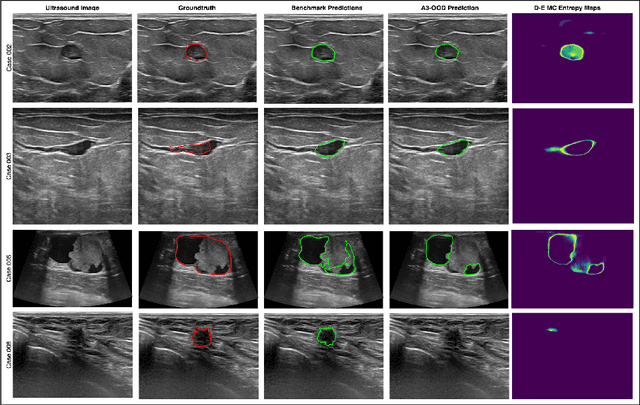

Abstract: Automated segmentation of breast ultrasound (BUS) images is important for precise lesion delineation and tumor characterization, but is challenged by inherent artifacts and dataset inconsistencies. In this work, we evaluate the use of a modified Residual Encoder U-Net for breast ultrasound segmentation, with a focus on uncertainty quantification. We identify and correct for data duplication in the BUSI dataset, and use a deduplicated subset for more reliable estimates of generalization performance. Epistemic uncertainty is quantified using Monte Carlo dropout, deep ensembles, and their combination. Models are benchmarked on both in-distribution and out-of-distribution datasets to demonstrate how they generalize to unseen cross-domain data. Our approach achieves state-of-the-art segmentation accuracy on the Breast-Lesion-USG dataset with in-distribution validation, and provides calibrated uncertainty estimates that effectively signal regions of low model confidence. The performance decline and increased uncertainty observed in out-of-distribution evaluation highlight the persistent challenge of domain shift in medical imaging, and the importance of integrated uncertainty modeling for trustworthy clinical deployment. Code available at: https://github.com/toufiqmusah/nn-uncertainty.git
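To make the uncertainty estimation described above concrete, the following is a minimal sketch of how per-pixel probabilities from several stochastic forward passes (Monte Carlo dropout) or several independently trained models (a deep ensemble) might be combined. It is not taken from the authors' repository. The function names, the flat-list representation of a probability map, and the choice of mutual information as the epistemic measure are all assumptions made for illustration; the abstract does not state which measure the paper uses.

```python
import math


def binary_entropy(p, eps=1e-12):
    """Entropy (in nats) of a Bernoulli distribution with foreground probability p."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def summarize_samples(prob_maps):
    """Combine sampled foreground-probability maps into mean and uncertainty maps.

    prob_maps: list of flat lists, one per MC-dropout pass or ensemble member
               (hypothetical layout; each inner list holds one probability per pixel).
    Returns (mean, predictive_entropy, mutual_information), each a flat list.
    """
    n_samples = len(prob_maps)
    n_pixels = len(prob_maps[0])
    mean, total, epistemic = [], [], []
    for i in range(n_pixels):
        samples = [m[i] for m in prob_maps]
        p_bar = sum(samples) / n_samples
        # Entropy of the averaged prediction: total predictive uncertainty.
        h_total = binary_entropy(p_bar)
        # Average entropy of the individual predictions: the aleatoric part.
        h_expected = sum(binary_entropy(p) for p in samples) / n_samples
        mean.append(p_bar)
        total.append(h_total)
        # Mutual information: disagreement between samples (epistemic part).
        epistemic.append(h_total - h_expected)
    return mean, total, epistemic


# Two pixels: the samples agree on the first and disagree on the second.
samples = [[0.9, 0.1], [0.9, 0.9]]
mean, total, epistemic = summarize_samples(samples)
print(mean)       # averaged foreground probability per pixel
print(epistemic)  # near zero where samples agree, larger where they disagree
```

Under this sketch, combining Monte Carlo dropout with an ensemble amounts to pooling the dropout passes of every ensemble member into a single `prob_maps` list before summarizing.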

Analysis of the MICCAI Brain Tumor Segmentation -- Metastases (BraTS-METS) 2025 Lighthouse Challenge: Brain Metastasis Segmentation on Pre- and Post-treatment MRI

Apr 16, 2025

Abstract: Despite continuous advancements in cancer treatment, brain metastatic disease remains a significant complication of primary cancer and is associated with an unfavorable prognosis. One approach for improving diagnosis, management, and outcomes is to implement algorithms based on artificial intelligence for the automated segmentation of both pre- and post-treatment MRI brain images. Such algorithms rely on volumetric criteria for lesion identification and treatment response assessment, which are still not available in clinical practice. Therefore, it is critical to establish tools for rapid volumetric segmentation that can be translated to clinical practice and that are trained on high-quality annotated data. The BraTS-METS 2025 Lighthouse Challenge aims to address this critical need by establishing inter-rater and intra-rater variability in dataset annotation. It does so by generating high-quality annotated datasets from four individual instances of segmentation by neuroradiologists, recorded on video (two instances segmenting "from scratch" and two instances after AI pre-segmentation). This high-quality annotated dataset will be used for the testing phase of the 2025 Lighthouse challenge and will be publicly released at the completion of the challenge. The 2025 Lighthouse challenge will also release the 2023 and 2024 segmented datasets, which were annotated using an established pipeline of pre-segmentation, student annotation, checking by two neuroradiologists, and finalization by one neuroradiologist. It builds upon its previous edition by including post-treatment cases in the dataset. Using these high-quality annotated datasets, the 2025 Lighthouse challenge plans to test benchmark algorithms for automated segmentation of pre- and post-treatment brain metastases (BM), trained on diverse and multi-institutional datasets of MRI images obtained from patients with brain metastases.

Analysis of the BraTS 2023 Intracranial Meningioma Segmentation Challenge

May 16, 2024

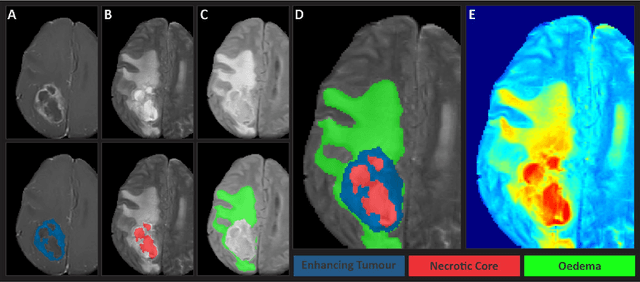

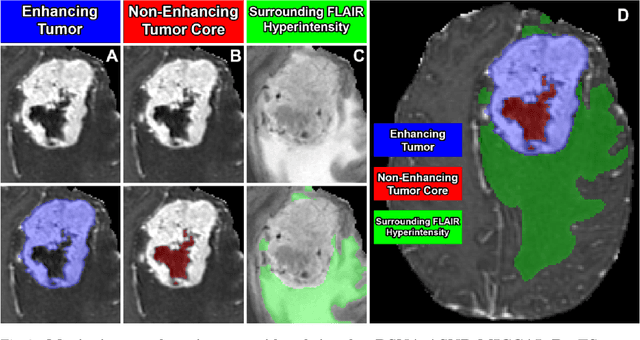

Abstract: We describe the design and results from the BraTS 2023 Intracranial Meningioma Segmentation Challenge. The BraTS Meningioma Challenge differed from prior BraTS Glioma challenges in that it focused on meningiomas, which are typically benign extra-axial tumors with diverse radiologic and anatomical presentation and a propensity for multiplicity. Nine participating teams each developed deep-learning automated segmentation models using image data from the largest multi-institutional systematically expert annotated multilabel multi-sequence meningioma MRI dataset to date, which included 1000 training set cases, 141 validation set cases, and 283 hidden test set cases. Each case included T2, T2/FLAIR, T1, and T1Gd brain MRI sequences with associated tumor compartment labels delineating enhancing tumor, non-enhancing tumor, and surrounding non-enhancing T2/FLAIR hyperintensity. Participant automated segmentation models were evaluated and ranked based on a scoring system evaluating lesion-wise metrics including Dice similarity coefficient (DSC) and 95% Hausdorff Distance. The top ranked team had a lesion-wise median DSC of 0.976, 0.976, and 0.964 for enhancing tumor, tumor core, and whole tumor, respectively, and a corresponding average DSC of 0.899, 0.904, and 0.871, respectively. These results serve as state-of-the-art benchmarks for future pre-operative meningioma automated segmentation algorithms. Additionally, we found that 1286 of 1424 cases (90.3%) had at least 1 compartment voxel abutting the edge of the skull-stripped image, which requires further investigation into optimal pre-processing face anonymization steps.
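As a small illustration of the overlap metric reported above, the sketch below computes the standard Dice similarity coefficient, 2|A∩B| / (|A| + |B|), for two binary masks. It is a simplified, whole-mask version and not the challenge's lesion-wise scoring code. The function name, the flat-list mask layout, and the convention of returning 1.0 when both masks are empty are assumptions for illustration. The last lines recompute the case counts quoted in the abstract.

```python
def dice_coefficient(pred, target):
    """Dice similarity coefficient for two flat binary masks of equal length.

    Returns 1.0 when both masks are empty (an assumed convention; evaluation
    pipelines differ on how they score this case).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Toy example: the prediction covers the one true voxel plus one false positive.
pred = [1, 1, 0, 0]
target = [1, 0, 0, 0]
print(round(dice_coefficient(pred, target), 3))  # 2*1 / (2+1) -> 0.667

# Consistency check on the figures quoted in the abstract.
total_cases = 1000 + 141 + 283               # training + validation + test
print(total_cases)                            # 1424
print(round(100 * 1286 / total_cases, 1))     # 90.3
```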

The Brain Tumor Segmentation in Pediatrics (BraTS-PEDs) Challenge: Focus on Pediatrics (CBTN-CONNECT-DIPGR-ASNR-MICCAI BraTS-PEDs)

Apr 29, 2024

Abstract: Pediatric tumors of the central nervous system are the most common cause of cancer-related death in children. The five-year survival rate for high-grade gliomas in children is less than 20%. Due to their rarity, the diagnosis of these entities is often delayed, their treatment is mainly based on historic treatment concepts, and clinical trials require multi-institutional collaborations. Here we present the CBTN-CONNECT-DIPGR-ASNR-MICCAI BraTS-PEDs challenge, focused on pediatric brain tumors with data acquired across multiple international consortia dedicated to pediatric neuro-oncology and clinical trials. The CBTN-CONNECT-DIPGR-ASNR-MICCAI BraTS-PEDs challenge brings together clinicians and AI/imaging scientists to lead to faster development of automated segmentation techniques that could benefit clinical trials, and ultimately the care of children with brain tumors.

The Brain Tumor Segmentation (BraTS-METS) Challenge 2023: Brain Metastasis Segmentation on Pre-treatment MRI

Jun 01, 2023

Abstract: Clinical monitoring of metastatic disease to the brain can be a laborious and time-consuming process, especially in cases involving multiple metastases when the assessment is performed manually. The Response Assessment in Neuro-Oncology Brain Metastases (RANO-BM) guideline, which utilizes the unidimensional longest diameter, is commonly used in clinical and research settings to evaluate response to therapy in patients with brain metastases. However, accurate volumetric assessment of the lesion and surrounding peri-lesional edema holds significant importance in clinical decision-making and can greatly enhance outcome prediction. The unique challenge in performing segmentations of brain metastases lies in their common occurrence as small lesions. Detection and segmentation of lesions that are smaller than 10 mm in size have not demonstrated high accuracy in prior publications. The brain metastases challenge sets itself apart from previously conducted MICCAI challenges on glioma segmentation due to the significant variability in lesion size. Unlike gliomas, which tend to be larger on presentation scans, brain metastases exhibit a wide range of sizes and tend to include small lesions. We hope that the BraTS-METS dataset and challenge will advance the field of automated brain metastasis detection and segmentation.

The Brain Tumor Segmentation Challenge 2023: Glioma Segmentation in Sub-Saharan Africa Patient Population

May 30, 2023

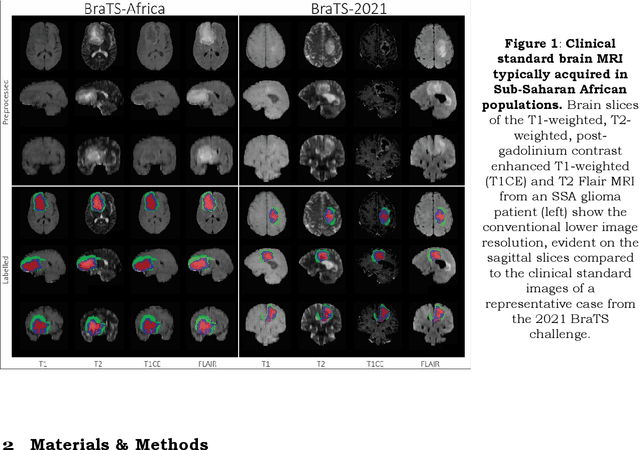

Abstract: Gliomas are the most common type of primary brain tumors. Although gliomas are relatively rare, they are among the deadliest types of cancer, with a survival time of less than 2 years after diagnosis. Gliomas are challenging to diagnose, hard to treat, and inherently resistant to conventional therapy. Years of extensive research to improve diagnosis and treatment of gliomas have decreased mortality rates across the Global North, while chances of survival among individuals in low- and middle-income countries (LMICs) remain unchanged and are significantly worse in Sub-Saharan Africa (SSA) populations. Long-term survival with glioma is associated with the identification of appropriate pathological features on brain MRI and confirmation by histopathology. Since 2012, the Brain Tumor Segmentation (BraTS) Challenge has evaluated state-of-the-art machine learning methods to detect, characterize, and classify gliomas. However, it is unclear if the state-of-the-art methods can be widely implemented in SSA given the extensive use of lower-quality MRI technology, which produces poor image contrast and resolution, and, more importantly, the propensity for late presentation of disease at advanced stages as well as the unique characteristics of gliomas in SSA (i.e., suspected higher rates of gliomatosis cerebri). Thus, the BraTS-Africa Challenge provides a unique opportunity to include brain MRI glioma cases from SSA in global efforts through the BraTS Challenge to develop and evaluate computer-aided-diagnostic (CAD) methods for the detection and characterization of glioma in resource-limited settings, where the potential for CAD tools to transform healthcare is greater.

The Brain Tumor Segmentation Challenge 2023: Focus on Pediatrics

May 26, 2023

Abstract: Pediatric tumors of the central nervous system are the most common cause of cancer-related death in children. The five-year survival rate for high-grade gliomas in children is less than 20%. Due to their rarity, the diagnosis of these entities is often delayed, their treatment is mainly based on historic treatment concepts, and clinical trials require multi-institutional collaborations. The MICCAI Brain Tumor Segmentation (BraTS) Challenge is a landmark community benchmark event with a successful history of 12 years of resource creation for the segmentation and analysis of adult glioma. Here we present the CBTN-CONNECT-DIPGR-ASNR-MICCAI BraTS-PEDs 2023 challenge, which represents the first BraTS challenge focused on pediatric brain tumors with data acquired across multiple international consortia dedicated to pediatric neuro-oncology and clinical trials. The BraTS-PEDs 2023 challenge focuses on benchmarking the development of volumetric segmentation algorithms for pediatric brain glioma through standardized quantitative performance evaluation metrics utilized across the BraTS 2023 cluster of challenges. Models gaining knowledge from the BraTS-PEDs multi-parametric structural MRI (mpMRI) training data will be evaluated on separate validation and unseen test mpMRI data of high-grade pediatric glioma. The CBTN-CONNECT-DIPGR-ASNR-MICCAI BraTS-PEDs 2023 challenge brings together clinicians and AI/imaging scientists to lead to faster development of automated segmentation techniques that could benefit clinical trials, and ultimately the care of children with brain tumors.

The Brain Tumor Segmentation Challenge 2023: Brain MR Image Synthesis for Tumor Segmentation

May 20, 2023

Abstract: Automated brain tumor segmentation methods are well established, reaching performance levels with clear clinical utility. Most algorithms require four input magnetic resonance imaging (MRI) modalities, typically T1-weighted images with and without contrast enhancement, T2-weighted images, and FLAIR images. However, some of these sequences are often missing in clinical practice, e.g., because of time constraints and/or image artifacts (such as patient motion). Therefore, substituting missing modalities to recover segmentation performance in these scenarios is highly desirable and necessary for the more widespread adoption of such algorithms in clinical routine. In this work, we report the set-up of the Brain MR Image Synthesis Benchmark (BraSyn), organized in conjunction with the Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2023. The objective of the challenge is to benchmark image synthesis methods that realistically synthesize missing MRI modalities given multiple available images to facilitate automated brain tumor segmentation pipelines. The image dataset is multi-modal and diverse, created in collaboration with various hospitals and research institutions.

The Brain Tumor Segmentation Challenge 2023: Local Synthesis of Healthy Brain Tissue via Inpainting

May 15, 2023

Abstract: A myriad of algorithms for the automatic analysis of brain MR images is available to support clinicians in their decision-making. For brain tumor patients, the image acquisition time series typically starts with a scan that is already pathological. This poses problems, as many algorithms are designed to analyze healthy brains and provide no guarantees for images featuring lesions. Examples include but are not limited to algorithms for brain anatomy parcellation, tissue segmentation, and brain extraction. To solve this dilemma, we introduce the BraTS 2023 inpainting challenge. Here, the participants' task is to explore inpainting techniques to synthesize healthy brain scans from lesioned ones. The following manuscript contains the task formulation, dataset, and submission procedure. Later it will be updated to summarize the findings of the challenge. The challenge is organized as part of the BraTS 2023 challenge hosted at the MICCAI 2023 conference in Vancouver, Canada.

The ASNR-MICCAI Brain Tumor Segmentation (BraTS) Challenge 2023: Intracranial Meningioma

May 12, 2023

Abstract: Meningiomas are the most common primary intracranial tumor in adults and can be associated with significant morbidity and mortality. Radiologists, neurosurgeons, neuro-oncologists, and radiation oncologists rely on multiparametric MRI (mpMRI) for diagnosis, treatment planning, and longitudinal treatment monitoring; yet automated, objective, and quantitative tools for non-invasive assessment of meningiomas on mpMRI are lacking. The BraTS meningioma 2023 challenge will provide a community standard and benchmark for state-of-the-art automated intracranial meningioma segmentation models based on the largest expert annotated multilabel meningioma mpMRI dataset to date. Challenge competitors will develop automated segmentation models to predict three distinct meningioma sub-regions on MRI including enhancing tumor, non-enhancing tumor core, and surrounding non-enhancing T2/FLAIR hyperintensity. Models will be evaluated on separate validation and held-out test datasets using standardized metrics utilized across the BraTS 2023 series of challenges including the Dice similarity coefficient and Hausdorff distance. The models developed during the course of this challenge will aid in the incorporation of automated meningioma MRI segmentation into clinical practice, which will ultimately improve care of patients with meningioma.
